An automatic text summarization method based on a pre-trained language model

Combining a language model with automatic text summarization technology in the field of text processing, the method addresses problems such as a large number of parameters, difficult model convergence, and poorly readable abstracts. It improves performance, achieves good semantic compression, and produces more readable summaries.


AI Technical Summary

Problems solved by technology

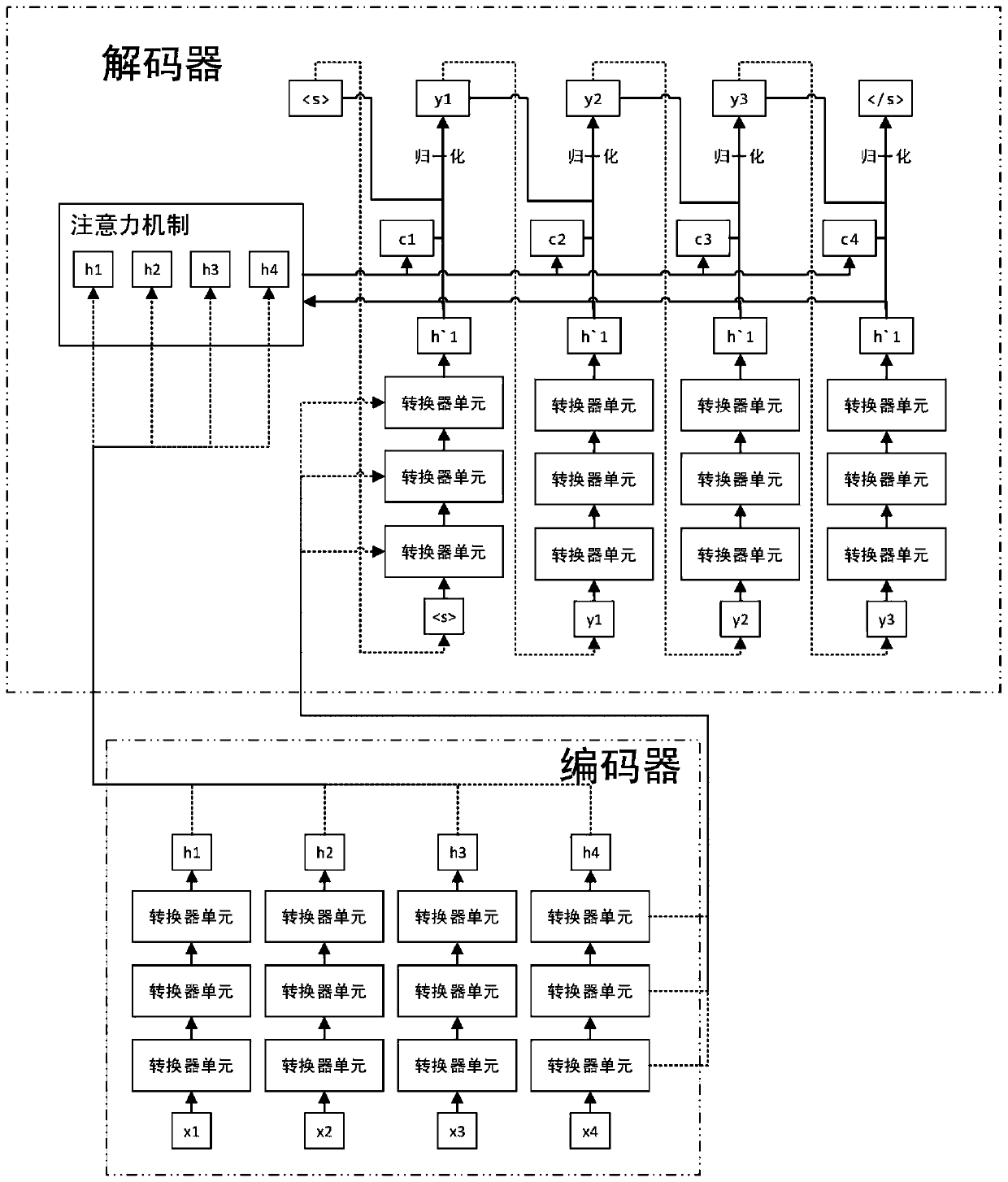

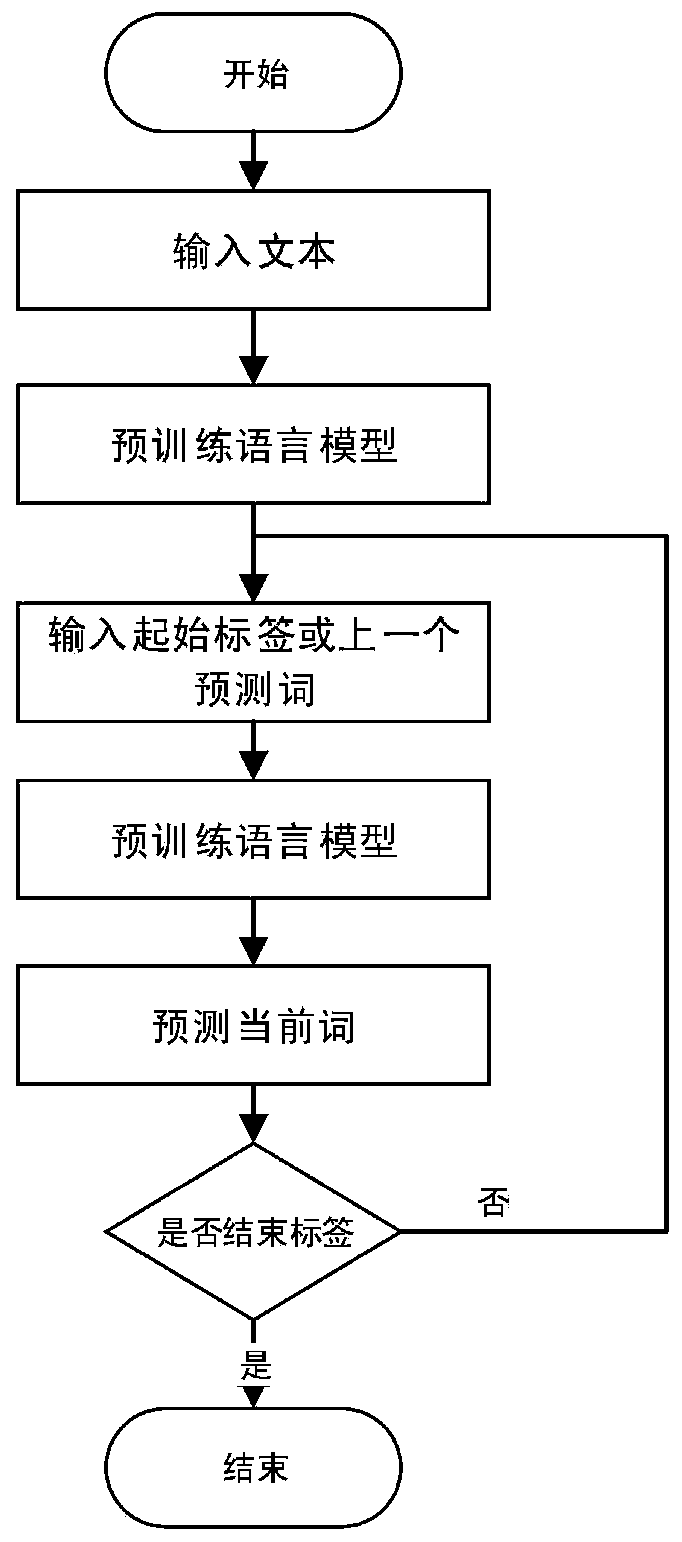

Method used


Examples

Embodiment 1

[0042] Example 1 uses the following text:

[0043] Original: In 2007, Jobs showed people the iPhone and declared that "it will change the world". Some people thought he was exaggerating. However, 8 years later, touch-screen smartphones represented by the iPhone have swept all corners of the world. In the future, smartphones will become "true personal computers" and make greater contributions to human development.

[0044] The present invention obtains the following summary:

[0045] Abstract: iPhone has been popularized all over the world and will make greater contributions to mankind
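The "semantic compression" the method claims can be made concrete with a simple compression ratio: summary length divided by source length. The sketch below is illustrative only. It assumes whitespace-separated word counts on the English rendering of Example 1 shown above, not on the original-language text, so the figure is indicative rather than a result reported by the patent. The function name `compression_ratio` is hypothetical.

```python
def compression_ratio(source: str, summary: str) -> float:
    """Return (summary word count) / (source word count).

    Assumption: words are whitespace-separated tokens, as produced by str.split().
    """
    source_words = source.split()
    summary_words = summary.split()
    if not source_words:
        raise ValueError("source text is empty")
    return len(summary_words) / len(source_words)


# Example 1, copied from the English translation of the original text and summary.
ORIGINAL_1 = (
    'In 2007, Jobs showed people the iPhone and declared that "it will change the world". '
    "Some people thought he was exaggerating. However, 8 years later, touch-screen smartphones "
    "represented by the iPhone have swept all corners of the world. In the future, smartphones "
    'will become "true personal computers" and make greater contributions to human development.'
)
SUMMARY_1 = (
    "iPhone has been popularized all over the world and will make greater contributions to mankind"
)

if __name__ == "__main__":
    ratio = compression_ratio(ORIGINAL_1, SUMMARY_1)
    # Prints: 15 / 54 words = 0.28
    print(f"{len(SUMMARY_1.split())} / {len(ORIGINAL_1.split())} words = {ratio:.2f}")
```

By this crude measure the Example 1 summary keeps roughly a quarter of the source's words. Character counts would be a better fit for a Chinese-language source.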

Embodiment 2

[0046] Example 2 uses the following text:

[0047] Original: Last night, many passengers were found smoking on a China United Airlines flight from Chengdu to Beijing. Later, due to weather, the plane made an alternate landing at Taiyuan Airport. Several passengers were found smoking by the cabin door. Some passengers requested a second security check, but the captain decided to continue the flight, which caused conflicts between the crew and non-smoking passengers. China United Airlines is currently contacting the crew for verification.

[0048] The present invention obtains the following summary:

[0049] Abstract: Passengers smoked when the plane landed, and non-smokers requested a second security check without success, causing conflicts
