Audio and video mutual retrieval method based on user click behaviors

A technology involving video and users, applied in the field of data retrieval, that addresses problems such as poor retrieval results and a narrow retrieval basis, and improves interpretability.


Embodiment

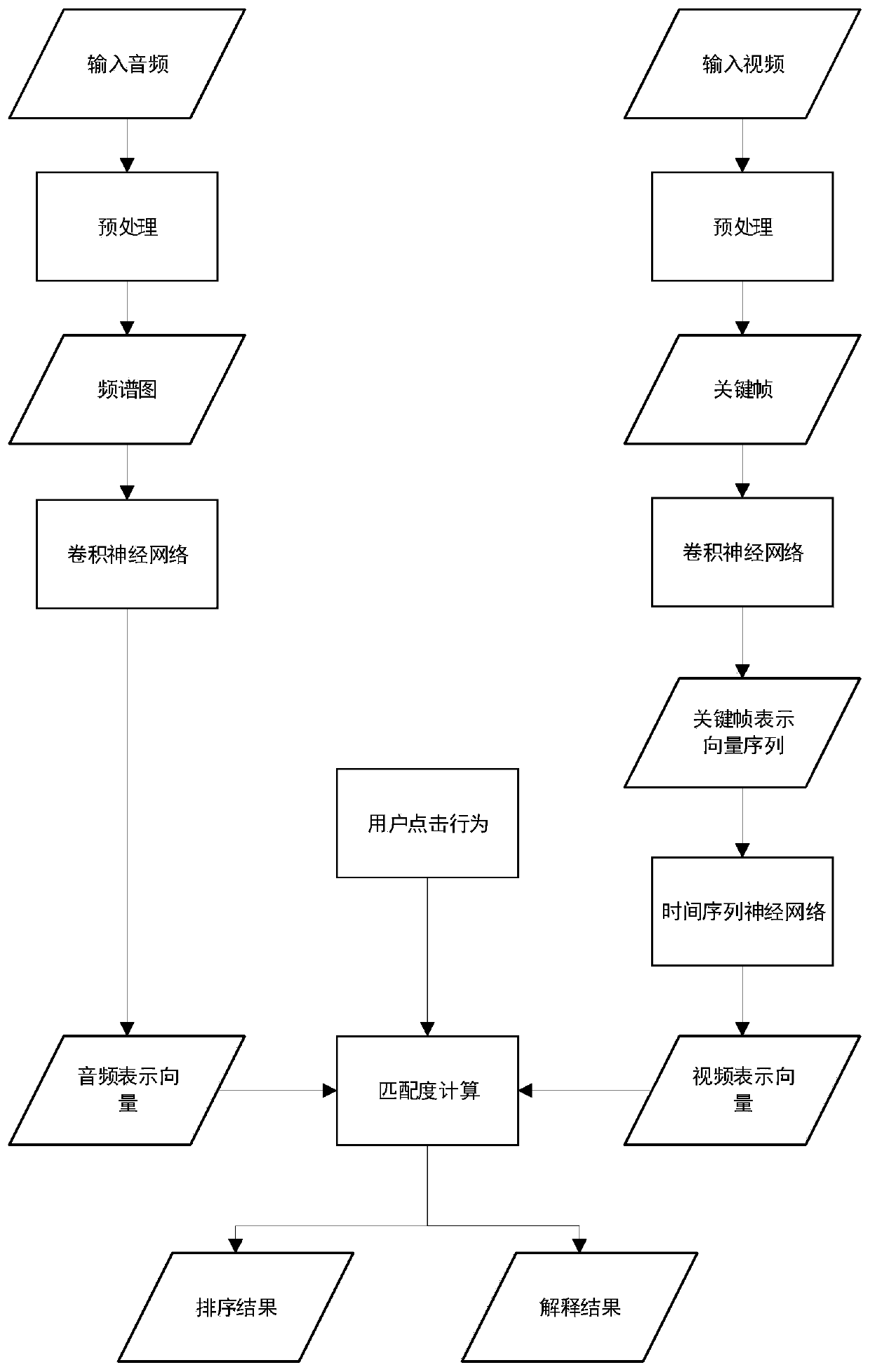

[0043] As shown in Figure 1, a method for mutual retrieval of audio and video based on user click behavior includes the following steps:

[0044] S1. Preprocess the input audio and video data to obtain a spectrogram of the audio data and key frames of the video data;

[0045] Step S1 is implemented as follows. The input audio data is first rendered as a spectrogram, which is then scaled horizontally to form a two-dimensional image I_a with a size of 128×128 pixels. For the input video data, the frame averaging method is used to extract 128 key frames as the key frame sequence S_f = [f_1, f_2, ..., f_n] of the input video. Each picture in the key frame sequence is uniformly scaled to a two-dimensional image with a size of 128×128 pixels;
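The preprocessing in step S1 can be illustrated with a minimal sketch. It is not the patent's implementation, and it rests on several assumptions: the "frame averaging method" is read here as picking evenly spaced frames, the spectrogram is a plain short-time DFT magnitude, and scaling uses nearest-neighbour sampling on both axes. All function names and parameters (`spectrogram`, `resize_nearest`, `key_frame_indices`, `frame_len`, `hop`) are hypothetical and chosen only for illustration.

```python
import cmath
import math


def spectrogram(samples, frame_len=64, hop=32):
    """Magnitude spectrogram from a naive short-time DFT.

    Returns a list of rows, one per frequency bin; each row holds one
    value per time frame. Window length and hop are assumptions.
    """
    bins = frame_len // 2 + 1
    # Hann window to reduce spectral leakage between neighbouring bins
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    columns = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_len)]
        columns.append([
            abs(sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                    for n in range(frame_len)))
            for k in range(bins)
        ])
    # Transpose so that rows are frequency bins and columns are time frames
    return [[col[k] for col in columns] for k in range(bins)]


def resize_nearest(image, height=128, width=128):
    """Scale a 2-D list to height x width by nearest-neighbour sampling."""
    src_h, src_w = len(image), len(image[0])
    return [[image[r * src_h // height][c * src_w // width]
             for c in range(width)]
            for r in range(height)]


def key_frame_indices(total_frames, count=128):
    """Indices of `count` evenly spaced frames (centre of each segment).

    Indices repeat when the video has fewer than `count` frames.
    """
    return [min(total_frames - 1, int((i + 0.5) * total_frames / count))
            for i in range(count)]


# Usage: a quarter second of a 500 Hz tone sampled at 8 kHz
tone = [math.sin(2 * math.pi * 500 * t / 8000) for t in range(2000)]
spec = spectrogram(tone)
I_a = resize_nearest(spec)             # 128 x 128 audio image
indices = key_frame_indices(1280)      # which frames of a 1280-frame video to keep
```

Each selected video frame would then be passed through the same kind of resize to reach 128×128 pixels. In practice a signal-processing or imaging library would replace the naive DFT and the nearest-neighbour resize; they are written out here only to keep the sketch self-contained.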

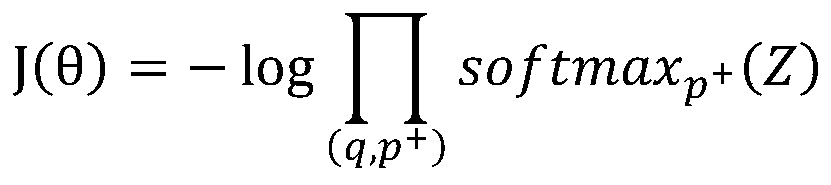

[0046] S2. Send the preprocessed audio data to an encoder composed of a deep convolutional neural network based on an attention mechanism, and obtain the representation vector and attention weight dist...

