Three-dimensional object pose parameter estimation method and visual equipment

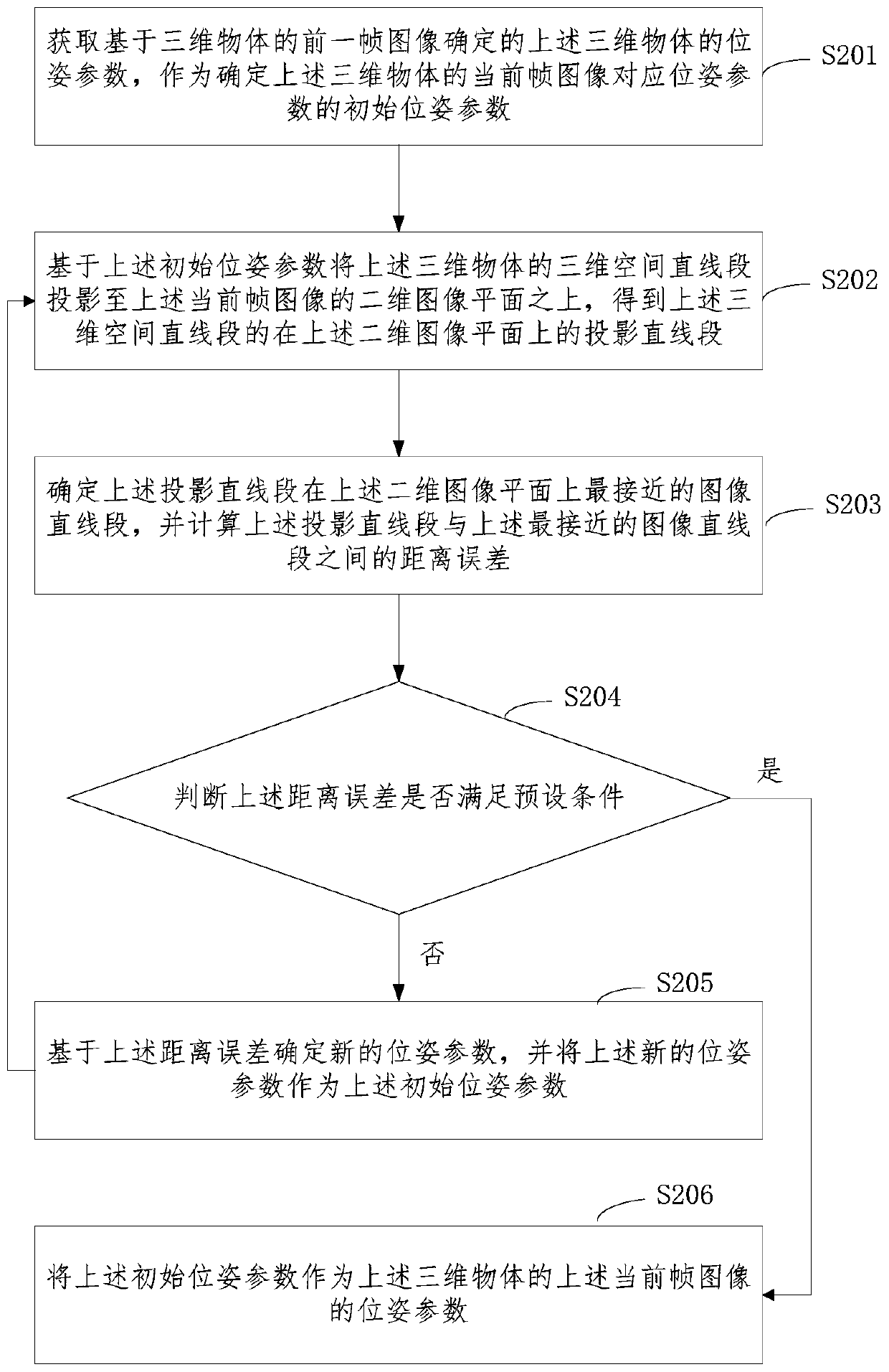

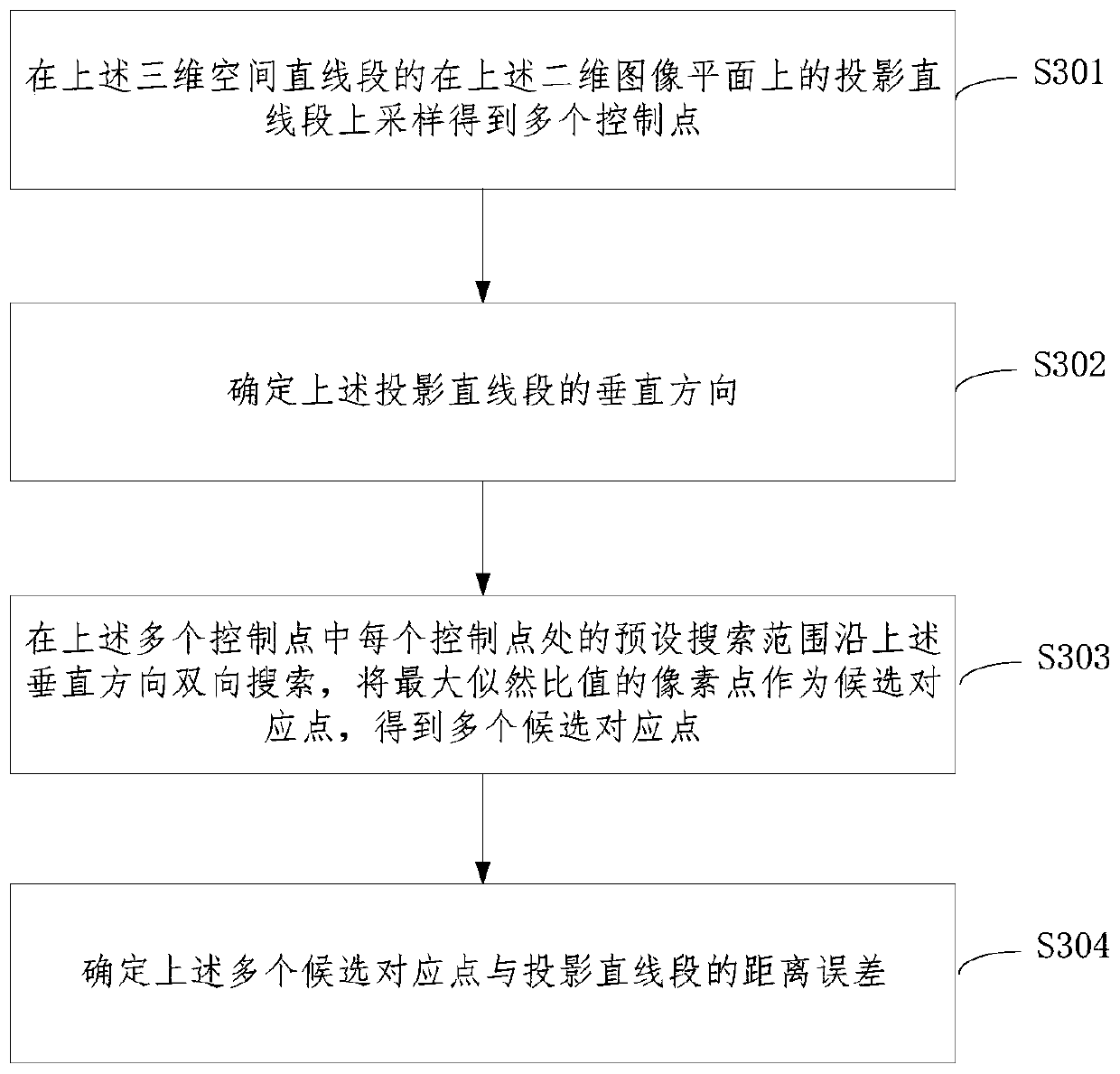

A technology in the field of three-dimensional objects and pose parameters, applied in computing, image data processing, instruments, etc. It addresses the problems that matching two-dimensional and three-dimensional straight-line features is difficult and tedious and that the integrity of straight-line features is affected, and it achieves the effect of removing the influence of external points (outliers).

Embodiment Construction

[0038] It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

[0039] In the following description, suffixes such as 'module', 'part' or 'unit' are used to denote elements only to facilitate the description of the present invention and have no specific meaning in themselves. Therefore, 'module', 'part' and 'unit' may be used interchangeably.

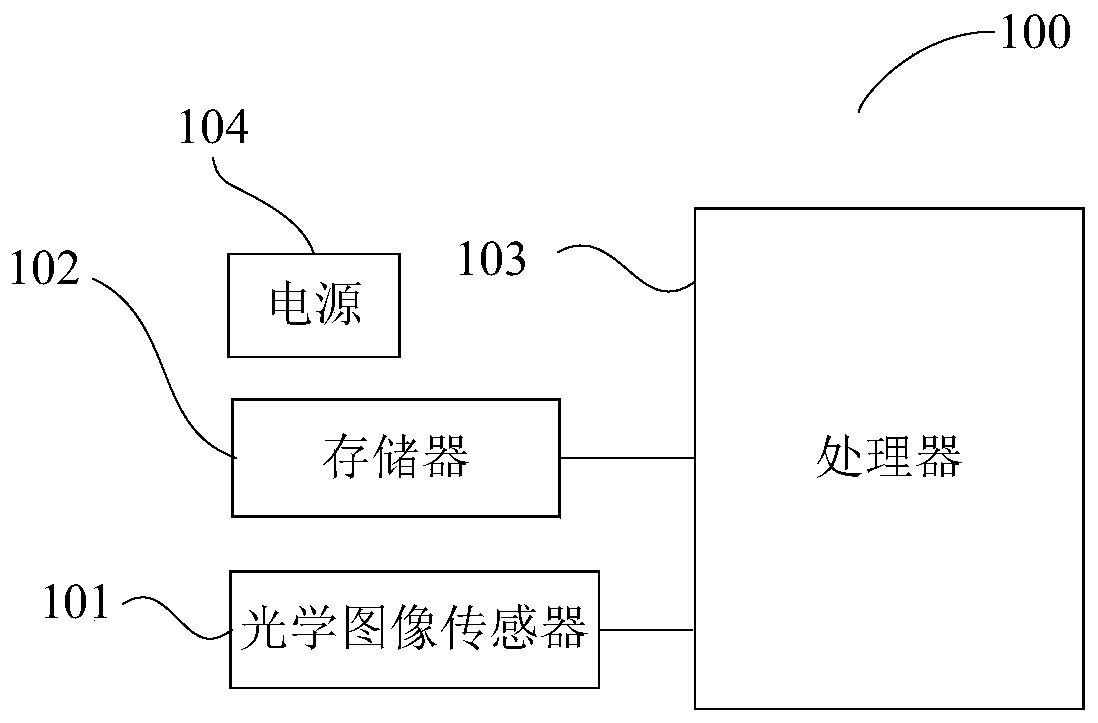

[0040] The vision devices provided in the embodiments of the present invention include, but are not limited to, industrial automation equipment, intelligent robots, and other equipment or user terminals that can identify and capture target objects and provide real-time image information and real-time spatial pose information of those objects. The vision device provided in the embodiments of the present invention may include: an RF (radio frequency) unit, a WiFi module, an audio output unit, an A/V (audio/video) input unit, a sensor...
