Robot grasping method, terminal, and computer-readable storage medium

The robot grasping technology, applied in the field of computer vision, addresses cases where inaccurate collected images cause grasping to fail and leave the robot unable to grasp objects. Its effect is to improve the grasping success rate and ensure stability.

Embodiment Construction

[0026] To make the objectives, technical solutions, and advantages of the embodiments of the present invention clearer, the embodiments are described in detail below with reference to the accompanying drawings. A person of ordinary skill in the art will understand that many technical details are given in each embodiment to help the reader better understand the present application. However, the technical solution claimed in this application can still be realized without these technical details, and with various changes and modifications based on the following embodiments.

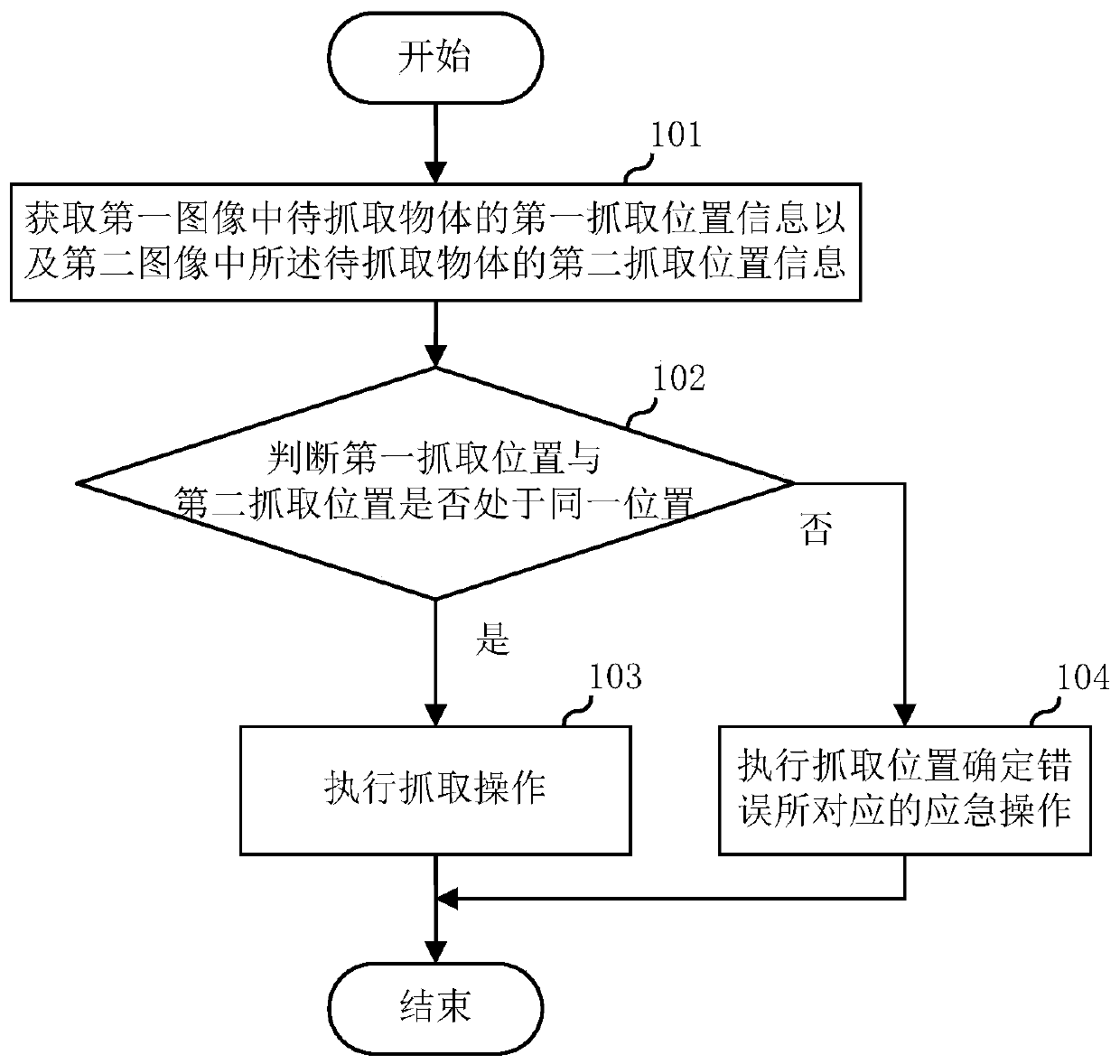

[0027] The first embodiment of the present invention relates to a robot grasping method. The method is applied to a robot, which can be a standalone robotic arm or an intelligent robot with a grasping arm. The specific process of the robot grasping method is shown in Figure 1 and includes:

[0028] Step 101: Acquir...
