Pose estimation method suitable for monocular vision camera in dynamic environment

A pose estimation technique for monocular vision in dynamic environments, applied in the field of computer vision. It addresses the problems of inconsistent motion and inaccurate camera pose, which degrade the accuracy of a SLAM system, and achieves the effect of improving accuracy and precision.

Embodiment Construction

[0037] The objects and functions of the present invention, and the methods for achieving them, will be clarified by reference to the exemplary embodiments. However, the present invention is not limited to the exemplary embodiments disclosed below; it can be implemented in various forms. The description serves only to help those skilled in the relevant art fully understand the specific details of the present invention.

[0038] Hereinafter, embodiments of the present invention will be described with reference to the accompanying drawings. In the drawings, the same reference numerals represent the same or similar components, or the same or similar steps.

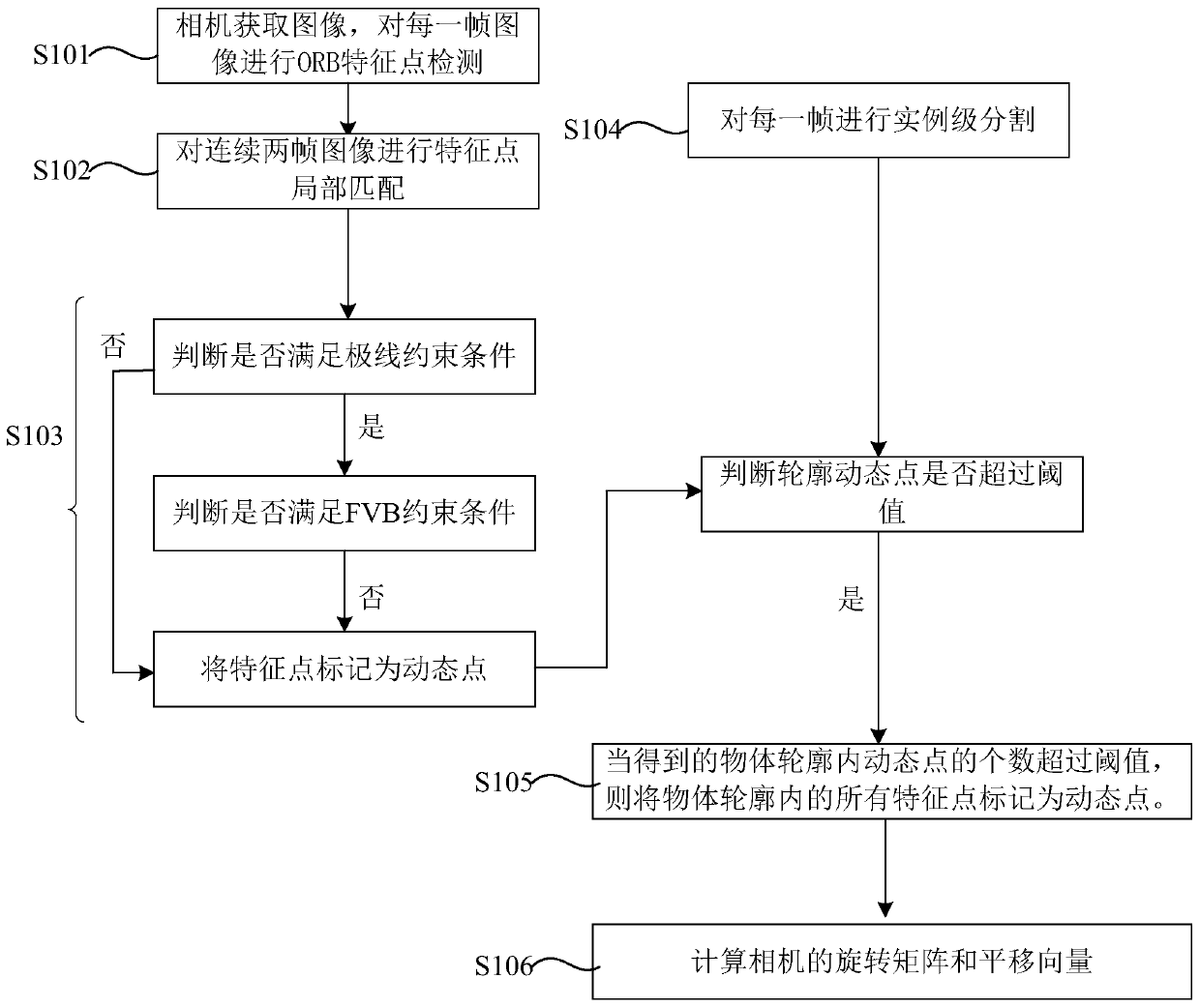

[0039] The present invention is described in detail below by way of specific embodiments. Figure 1 shows an overall flow chart of a pose estimation method suitable for a monocular vision camera in a dynamic environment according to the present invention. According to an embodimen...
