ROS-based laser radar and camera fusion calibration system and calibration method

A lidar and camera calibration technology applied in image analysis, image data processing, instruments, and related fields. It addresses problems such as missing information and insufficient perception dimensions, and achieves precise fusion while remaining convenient for embedded development and use.

Examples

Embodiment 1

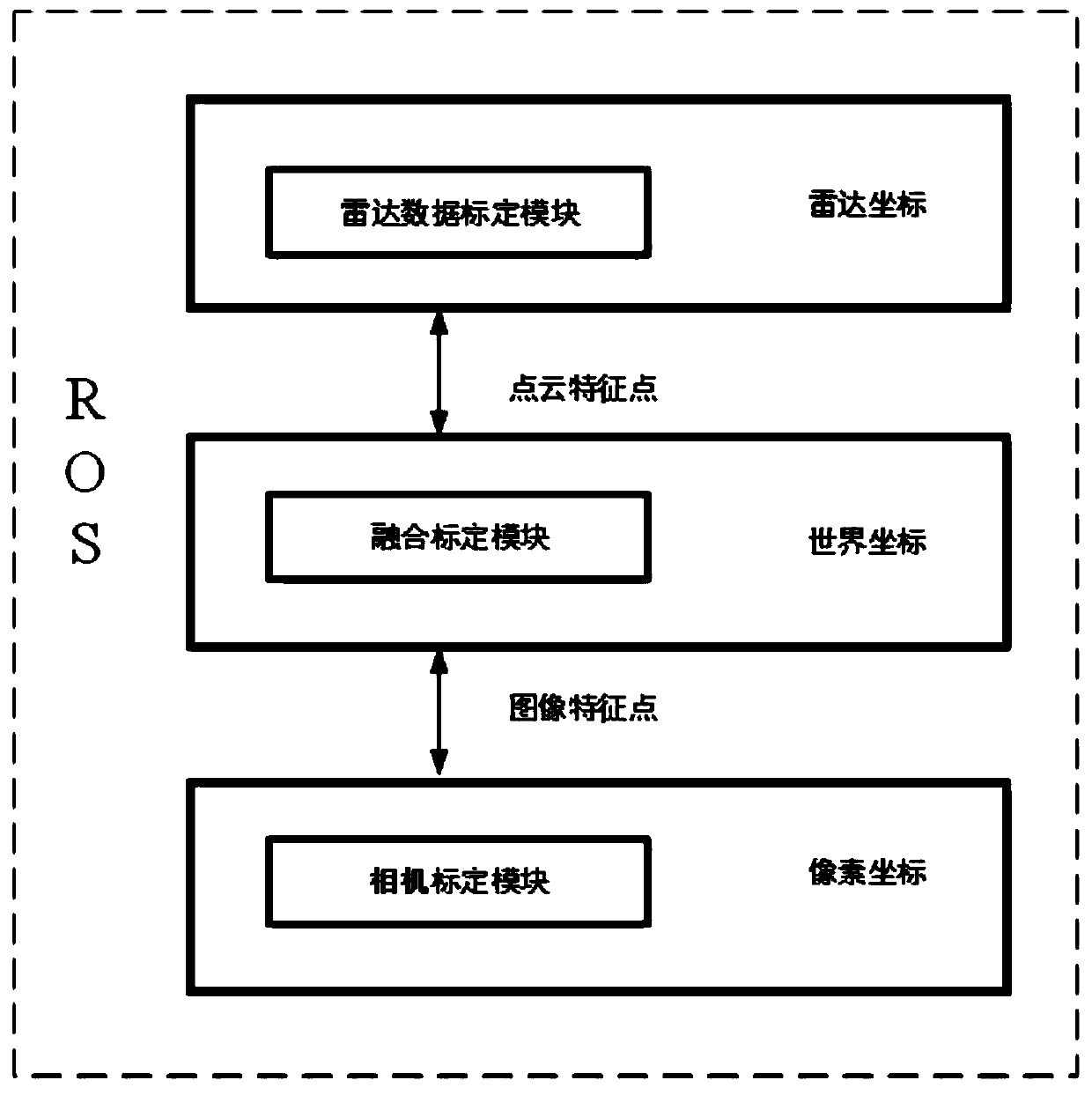

[0075] The aforementioned radar calibration module uses a pile barrel and two calibration plates placed at different positions around the lidar. It extracts one corner point of the pile barrel and 6-8 corner points of the two calibration plates, obtains the coordinates of all these corner points in both the world coordinate system and the radar coordinate system, and solves the resulting equations for the transformation matrix from the radar coordinate system to the world coordinate system;
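The patent does not state how the equations in [0075] are solved. A common choice, assumed here, is to treat the radar-to-world mapping as a rigid transform (rotation R plus translation t) and fit it to the matched corner points by least squares using the SVD-based Kabsch method. In this sketch `estimate_rigid_transform` is a hypothetical helper name, and NumPy is assumed to be available.

```python
import numpy as np


def estimate_rigid_transform(radar_pts, world_pts):
    """Least-squares fit of R, t such that world ~= R @ radar + t (Kabsch / SVD).

    radar_pts, world_pts: (N, 3) arrays of matched corner points, N >= 3,
    not all collinear. Assumes the mapping is rigid (no scale factor).
    """
    P = np.asarray(radar_pts, dtype=float)
    Q = np.asarray(world_pts, dtype=float)
    if P.shape != Q.shape or P.ndim != 2 or P.shape[1] != 3 or P.shape[0] < 3:
        raise ValueError("need >= 3 matched 3D point pairs of shape (N, 3)")

    # Center both point sets on their centroids.
    p_mean = P.mean(axis=0)
    q_mean = Q.mean(axis=0)

    # 3x3 cross-covariance between the centered sets.
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)

    # Reflection guard: flip the last axis if needed so that det(R) = +1.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Translation maps the rotated radar centroid onto the world centroid.
    t = q_mean - R @ p_mean
    return R, t
```

With the 7-9 corner pairs stacked into two (N, 3) arrays (hypothetically `radar_corners` and `world_corners`), `R, t = estimate_rigid_transform(radar_corners, world_corners)` gives world ≈ R·radar + t. The extra corners beyond the minimum of three help average out measurement noise.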

[0076] The aforementioned camera calibration module places the calibration plate at different angles and positions within the camera's field of view and obtains the camera's internal parameters based on ROS, i.e., the mapping that projects objects in the camera coordinate system onto pixel coordinates;
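The internal parameters in [0076] are usually collected in an intrinsic matrix K holding the focal lengths fx, fy and the principal point (cx, cy), so that a camera-frame point (x, y, z) maps to pixel coordinates u = fx·x/z + cx and v = fy·y/z + cy. The minimal sketch below assumes an ideal pinhole camera with no lens distortion; `project_to_pixel` is a hypothetical name.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel (u, v).

    Assumes an ideal pinhole model (no distortion); fx, fy, cx, cy are
    the entries of the intrinsic matrix K.
    """
    x, y, z = point_cam
    if z <= 0:
        # Points at or behind the optical center have no valid projection.
        raise ValueError("point is not in front of the camera")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v
```

For example, with fx = fy = 800, cx = 320 and cy = 240, the point (0.5, -0.25, 2.0) projects to (520.0, 140.0).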

[0077] The aforementioned fusion calibration module transforms the coordinates of the 7-9 corner points in the radar coordinate system, obtained by the radar calibration module, to the c...
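Moving radar-frame corner points into another coordinate system, as [0077] begins to describe, amounts to applying a 4x4 homogeneous transform, and transforms between frames compose by matrix multiplication. The sketch assumes NumPy; the helper names `make_transform` and `transform_points`, and the world-to-camera transform in the usage note, are hypothetical and not specified by the patent.

```python
import numpy as np


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, dtype=float)
    T[:3, 3] = np.asarray(t, dtype=float)
    return T


def transform_points(T, pts):
    """Apply the 4x4 transform T to an (N, 3) array of points."""
    pts = np.asarray(pts, dtype=float)
    # Append w = 1 so the translation column of T takes effect.
    homo = np.hstack([pts, np.ones((pts.shape[0], 1))])
    # Row-vector form: (T @ p)^T == p^T @ T^T; drop the w component.
    return (homo @ T.T)[:, :3]
```

If `T_world_radar` comes from the radar calibration module and `T_cam_world` is a world-to-camera transform, then `T_cam_radar = T_cam_world @ T_world_radar` maps radar points directly into the camera frame in a single step.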

Embodiment 2

[0079] A ROS-based lidar and camera fusion calibration method, comprising the following steps:

[0080] Step 1: Obtain the internal parameters of the camera through the camera calibration module, that is, the conversion relationship from the camera coordinate system to the pixel coordinate system;
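Besides K, ROS monocular calibration (the `camera_calibration` package) also reports lens distortion coefficients; with its default `plumb_bob` model these are D = (k1, k2, p1, p2, k3), i.e. radial (k) and tangential (p) terms. The patent does not say whether distortion is modeled, so the sketch below, using the hypothetical helper `project_with_plumb_bob`, only shows how such coefficients would enter the camera-to-pixel conversion of Step 1 under that assumption.

```python
def project_with_plumb_bob(point_cam, K, D):
    """Project a camera-frame point to pixels with radial-tangential distortion.

    K = (fx, fy, cx, cy); D = (k1, k2, p1, p2, k3), the 'plumb_bob' ordering.
    """
    fx, fy, cx, cy = K
    k1, k2, p1, p2, k3 = D
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is not in front of the camera")

    # Normalized (undistorted) image coordinates.
    xn, yn = x / z, y / z
    r2 = xn * xn + yn * yn

    # Radial factor 1 + k1 r^2 + k2 r^4 + k3 r^6.
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3

    # Add tangential terms to get distorted normalized coordinates.
    xd = xn * radial + 2.0 * p1 * xn * yn + p2 * (r2 + 2.0 * xn * xn)
    yd = yn * radial + p1 * (r2 + 2.0 * yn * yn) + 2.0 * p2 * xn * yn

    # Apply the intrinsic matrix.
    return fx * xd + cx, fy * yd + cy
```

With all five coefficients set to zero, the function reduces to the ideal pinhole projection.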

[0081] Step 2: Obtain the external parameters between the lidar coordinate system and the world coordinate system through the radar calibration module, i.e., the conversion relationship between the radar coordinate system and the world coordinate system;
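Step 2 yields the extrinsic transform in one direction (radar to world), while later processing may need the reverse. For a rigid transform with rotation R and translation t, the inverse has rotation Rᵀ and translation -Rᵀt, which avoids a general 4x4 matrix inversion. A minimal NumPy sketch; `invert_rigid` is a hypothetical helper name.

```python
import numpy as np


def invert_rigid(T):
    """Invert a 4x4 rigid transform [R | t] as [R^T | -R^T t].

    Assumes the upper-left 3x3 block is a proper rotation matrix.
    """
    R = T[:3, :3]
    t = T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T          # inverse of a rotation is its transpose
    T_inv[:3, 3] = -R.T @ t      # undo the translation in the rotated frame
    return T_inv
```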

[0082] Step 3: In the fusion module, take the 7-9 corner points of the pile barrel and the two calibration plates from the radar calibration module as feature points, select 9 feature points, and obtain the coordinates of these 9 feature points in the world coordinate system and in the pixel coordinate system, respectively;
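Step 3 pairs each selected feature point's world coordinates with its pixel coordinates. One simple way to keep these correspondences consistent, assumed here, is to key both measurements by a point label and keep only labels present in both sets. The `FeaturePoint` type, `build_correspondences`, and the rule of taking the first nine labels in sorted order are all hypothetical; the patent does not specify how the nine points are chosen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeaturePoint:
    name: str                           # hypothetical label, e.g. "plate1_c3"
    world: tuple[float, float, float]   # (X, Y, Z) in the world frame
    pixel: tuple[float, float]          # (u, v) in the image


def build_correspondences(world_by_name, pixel_by_name, required=9):
    """Pair world and pixel measurements that share a label.

    Keeps only labels measured in both dicts and returns the first
    `required` of them in sorted label order (an illustrative rule).
    """
    common = sorted(set(world_by_name) & set(pixel_by_name))
    if len(common) < required:
        raise ValueError(f"only {len(common)} matched feature points, need {required}")
    return [
        FeaturePoint(n, tuple(world_by_name[n]), tuple(pixel_by_name[n]))
        for n in common[:required]
    ]
```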

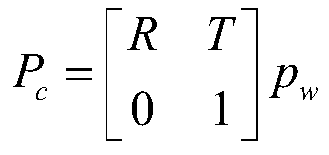

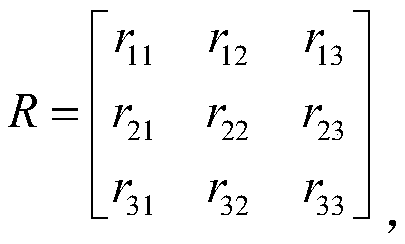

[0083] Step 4: Solve the pose estimation problem through the fusion calibration module, that is, solve the...
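The description of Step 4 is truncated, so the exact solver is unknown. One standard way to solve this pose estimation (Perspective-n-Point) problem when the intrinsic matrix K is known is the linear Direct Linear Transform (DLT): convert pixels to normalized image coordinates with the inverse of K, solve a homogeneous linear system for λ[R | t] by SVD, then remove the scale and project onto the nearest rotation. This is a swapped-in technique for illustration, not necessarily the patent's method. It needs at least 6 points that do not all lie on one plane, which the 9 feature points of Step 3 can satisfy because the calibration plates sit at different positions. NumPy is assumed; `estimate_pose_dlt` is a hypothetical name.

```python
import numpy as np


def estimate_pose_dlt(world_pts, pixel_pts, K):
    """Linear (DLT) estimate of R, t with pixel ~ K (R @ X + t).

    world_pts: (N, 3), pixel_pts: (N, 2), N >= 6 non-coplanar points.
    """
    X = np.asarray(world_pts, dtype=float)
    uv = np.asarray(pixel_pts, dtype=float)
    if X.shape[0] < 6 or X.shape[0] != uv.shape[0]:
        raise ValueError("need >= 6 matched 3D-2D pairs")

    # Known intrinsics: move pixels to normalized image coordinates (x, y, 1).
    uv1 = np.hstack([uv, np.ones((len(uv), 1))])
    xn = (np.linalg.inv(K) @ uv1.T).T

    # Each correspondence gives two linear equations in the 12 entries of M.
    rows = []
    zero = np.zeros(4)
    for Xw, (x, y, _) in zip(X, xn):
        Xh = np.append(Xw, 1.0)
        rows.append(np.concatenate([Xh, zero, -x * Xh]))
        rows.append(np.concatenate([zero, Xh, -y * Xh]))

    # Null vector of the stacked system = right singular vector of the
    # smallest singular value.
    _, _, Vt = np.linalg.svd(np.vstack(rows))
    M = Vt[-1].reshape(3, 4)  # equals lambda * [R | t] for unknown lambda

    # det(lambda * R) = lambda^3, so the real cube root fixes scale and sign.
    M = M / np.cbrt(np.linalg.det(M[:, :3]))

    # Project the 3x3 block onto the nearest rotation matrix.
    U, _, Wt = np.linalg.svd(M[:, :3])
    R = U @ Wt
    t = M[:, 3]
    return R, t
```

In practice such a linear estimate is usually refined by minimizing reprojection error (for example with OpenCV's `cv2.solvePnP`), since the DLT solution is sensitive to noise in the detected corners.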
