Mobile robot map construction method based on visual inertial navigation fusion and related equipment

A map construction technology for mobile robots, applied in the field of mobile robot map construction based on visual inertial navigation fusion. It addresses problems such as inaccurate fusion of camera and IMU data, real-time errors in back-end processing, and low information efficiency, with the effects of shortening initialization time, improving robustness, and resolving poor real-time performance.


Embodiment 1

[0047] The invention provides a mobile robot map construction method based on visual inertial navigation fusion. A system using the method includes both an analog vision system and an analog inertial system.

[0048] The analog vision system includes two visual perception standardizers, a signal conditioning circuit, and an analog-to-digital (AD) converter. The two visual perception standardizers are arranged symmetrically, and the main control chip is preferably an STM32 main control chip.

[0049] The analog inertial system includes gyroscopes, accelerometers, signal conditioning circuits, and analog-to-digital (AD) converters. The inertial measurement unit is the highly integrated MPU6050 chip, which contains a three-axis gyroscope and a three-axis accelerometer and can read an external three-axis magnetometer over its auxiliary I2C bus.
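As an illustration of what the AD conversion stage hands to the rest of the system, the following minimal sketch converts one raw MPU6050 sample into physical units. It assumes the chip's default full-scale ranges (±2 g and ±250 °/s, giving 16384 LSB/g and 131 LSB per °/s) and a 14-byte burst read starting at the `ACCEL_XOUT_H` register. The function names `to_int16` and `convert_sample` are hypothetical and do not come from the patent.

```python
import math

# Scale factors for the MPU6050 default full-scale ranges (assumed here).
ACCEL_LSB_PER_G = 16384.0    # +/-2 g range
GYRO_LSB_PER_DPS = 131.0     # +/-250 deg/s range
STANDARD_GRAVITY = 9.80665   # m/s^2


def to_int16(high, low):
    """Combine two bytes (big-endian) into a signed 16-bit integer."""
    value = (high << 8) | low
    return value - 65536 if value >= 32768 else value


def convert_sample(raw):
    """Convert 14 raw bytes (accel xyz, temperature, gyro xyz) to
    acceleration in m/s^2 and angular rate in rad/s."""
    if len(raw) != 14:
        raise ValueError("expected 14 bytes starting at ACCEL_XOUT_H")
    words = [to_int16(raw[i], raw[i + 1]) for i in range(0, 14, 2)]
    ax, ay, az, _temperature, gx, gy, gz = words
    accel = tuple(v / ACCEL_LSB_PER_G * STANDARD_GRAVITY for v in (ax, ay, az))
    gyro = tuple(math.radians(v / GYRO_LSB_PER_DPS) for v in (gx, gy, gz))
    return accel, gyro


# Example: 1 g on the x axis and -1 deg/s about the z axis.
sample = bytes([0x40, 0x00, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0xFF, 0x7D])
accel, gyro = convert_sample(sample)
```

If the full-scale ranges are reconfigured, the two scale constants must be changed to match.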

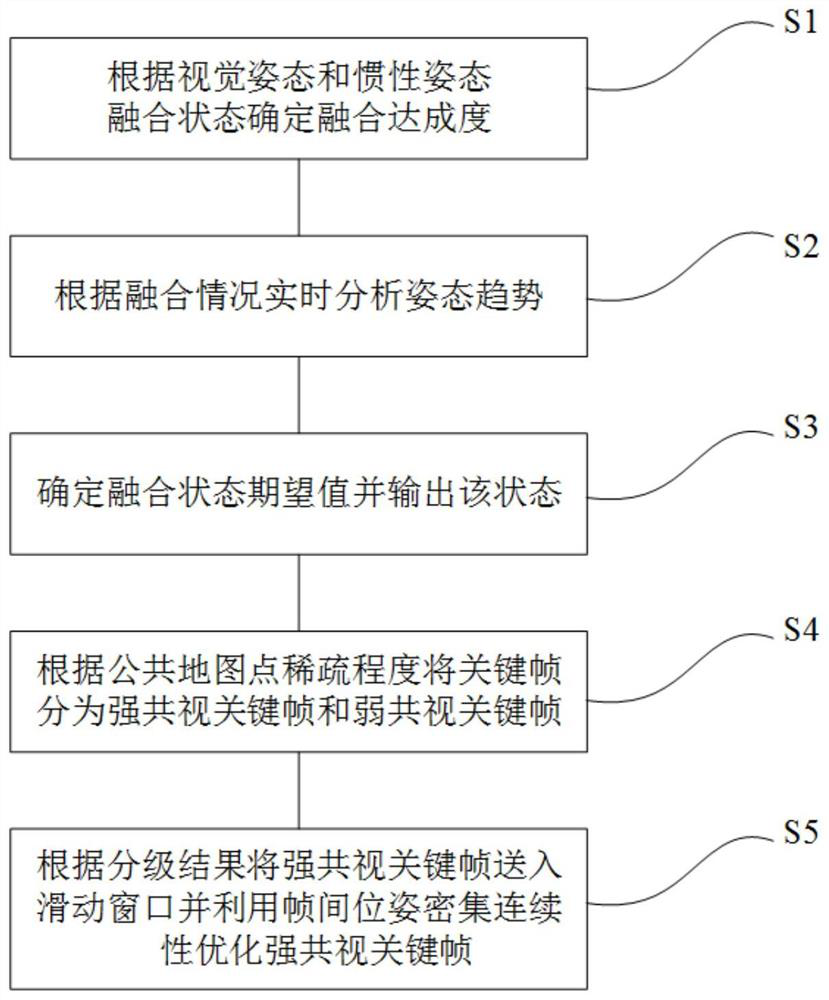

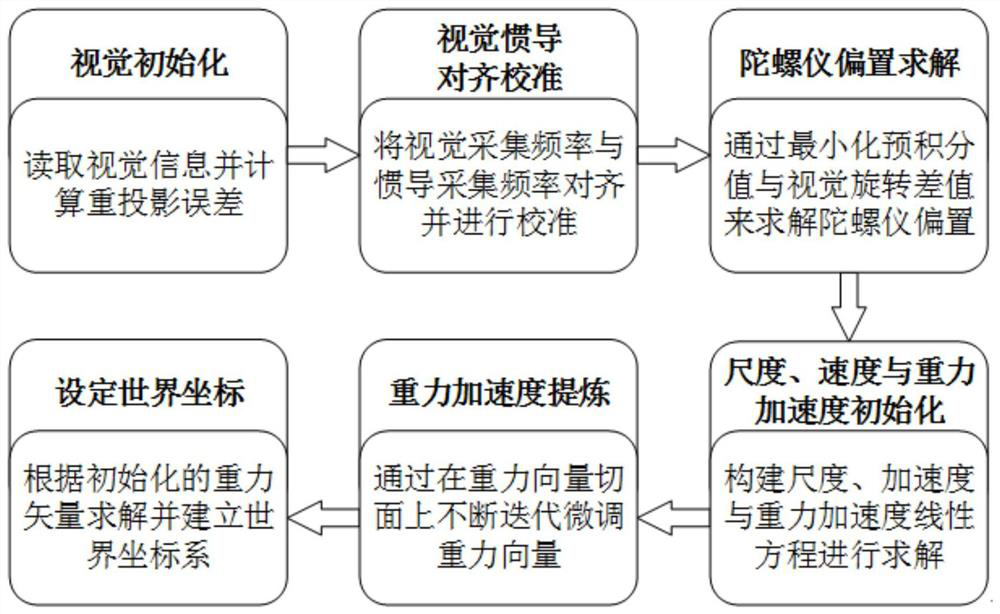

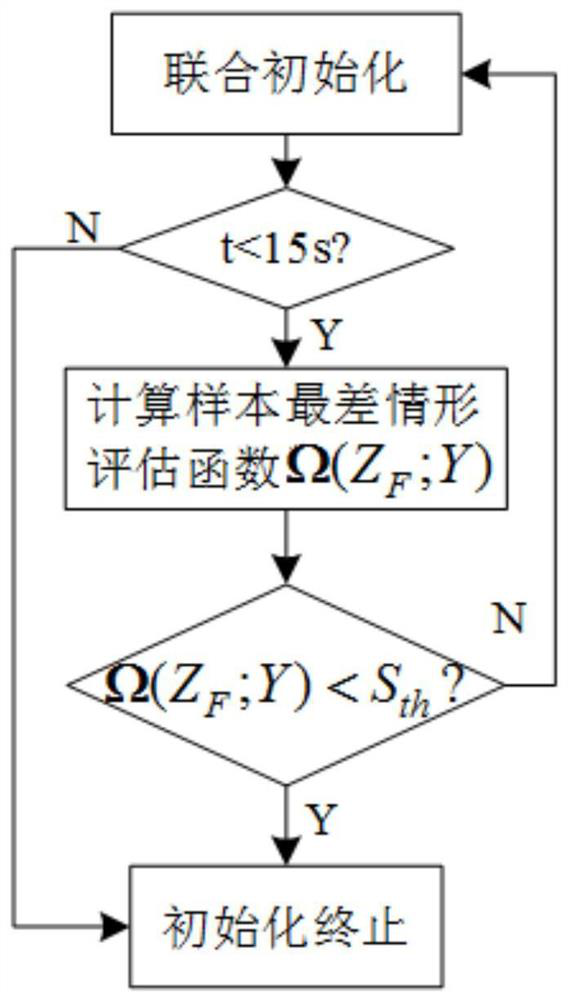

[0050] As shown in Figures 1-3, the method specifically includes:

[0051] Step S1, determine the degree of fusion achievement according to the fusion state of ...

Embodiment 2

[0110] Corresponding to Embodiment 1 of the present invention, Embodiment 2 of the present invention provides a computer-readable storage medium on which a computer program is stored. When the program is executed by a processor, the following steps are implemented according to the method of Embodiment 1:

[0111] Step S1, determine the degree of fusion achievement according to the fusion state of visual attitude and inertial attitude, and complete visual initialization, visual-inertial calibration and alignment, and inertial initialization.

[0112] Step S2, construct a situation evaluation function to analyze posture trends in real time.

[0113] Step S3, determine the local expected value of the fusion state and output the state.

[0114] Step S4, divide the keyframes into strong common-view keyframes and weak common-view keyframes according to how sparse their common map points are.

[0115] Step S5, send the strong common-view keyframes into the sliding ...
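The keyframe division in step S4 can be sketched as follows. This is only one possible reading: it assumes that "sparsity of common map points" is measured by counting the map point IDs a keyframe shares with the current frame, and that a fixed threshold separates strong from weak. The function name `split_by_common_view` and the parameter `strong_threshold` are hypothetical, and the source gives no threshold value.

```python
def split_by_common_view(current_points, keyframes, strong_threshold=30):
    """Split keyframes into strong and weak common-view groups.

    current_points: iterable of map point IDs seen in the current frame.
    keyframes: dict mapping keyframe ID -> iterable of map point IDs.
    strong_threshold: assumed minimum number of shared map points for a
        keyframe to count as strong common-view (illustrative value).
    """
    current = set(current_points)
    strong, weak = [], []
    for keyframe_id, points in keyframes.items():
        shared = len(current & set(points))
        if shared >= strong_threshold:
            strong.append(keyframe_id)   # dense overlap with current frame
        else:
            weak.append(keyframe_id)     # sparse or no overlap
    return strong, weak


# Small example with a low threshold so the split is easy to follow.
keyframes = {1: [1, 2, 3, 4], 2: [4, 9], 3: [7, 8]}
strong, weak = split_by_common_view([1, 2, 3, 4, 5], keyframes,
                                    strong_threshold=3)
```

In this example keyframe 1 shares four map points with the current frame and is classed as strong, while keyframes 2 and 3 share one and zero points and are classed as weak.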

Embodiment 3

[0119] Corresponding to Embodiment 1 of the present invention, Embodiment 3 of the present invention provides a computer device, including a memory, a processor, and a computer program stored in the memory and operable on the processor. When the processor executes the program, the following steps are implemented:

[0120] Step S1, determine the degree of fusion achievement according to the fusion state of visual attitude and inertial attitude, and complete visual initialization, visual-inertial calibration and alignment, and inertial initialization.

[0121] Step S2, construct a situation evaluation function to analyze posture trends in real time.

[0122] Step S3, determine the local expected value of the fusion state and output the state.
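The text available here does not define how the "degree of fusion achievement" in step S1 is computed. Purely as an illustration, one plausible ingredient is the angular gap between the visual and inertial attitude estimates. The sketch below assumes both attitudes are given as quaternions `(w, x, y, z)` and maps the gap to a score in [0, 1]. The names `attitude_gap` and `fusion_agreement` and the 5° tolerance are hypothetical and not taken from the patent.

```python
import math


def normalize(q):
    """Return the quaternion scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in q))
    if norm == 0.0:
        raise ValueError("zero quaternion has no orientation")
    return tuple(c / norm for c in q)


def attitude_gap(q_visual, q_inertial):
    """Smallest rotation angle (radians) between two attitudes."""
    a, b = normalize(q_visual), normalize(q_inertial)
    # abs() treats q and -q as the same rotation; min() guards acos
    # against rounding slightly above 1.
    dot = min(1.0, abs(sum(x * y for x, y in zip(a, b))))
    return 2.0 * math.acos(dot)


def fusion_agreement(q_visual, q_inertial, tolerance_rad=math.radians(5.0)):
    """Assumed score: 1.0 when attitudes coincide, 0.0 at or beyond
    the tolerance, linear in between."""
    gap = attitude_gap(q_visual, q_inertial)
    return max(0.0, 1.0 - gap / tolerance_rad)


identity = (1.0, 0.0, 0.0, 0.0)
quarter_turn_z = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
gap = attitude_gap(identity, quarter_turn_z)
```

A score of this kind could serve as a gate before declaring visual-inertial alignment complete, but the actual criterion used by the method is not stated in this excerpt.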
