A cross-database speech emotion recognition method based on deep domain adaptive convolutional neural network

The method applies convolutional neural network and speech emotion recognition technology to the field of cross-database speech emotion recognition, narrowing feature differences and achieving high recognition accuracy.

Embodiment Construction

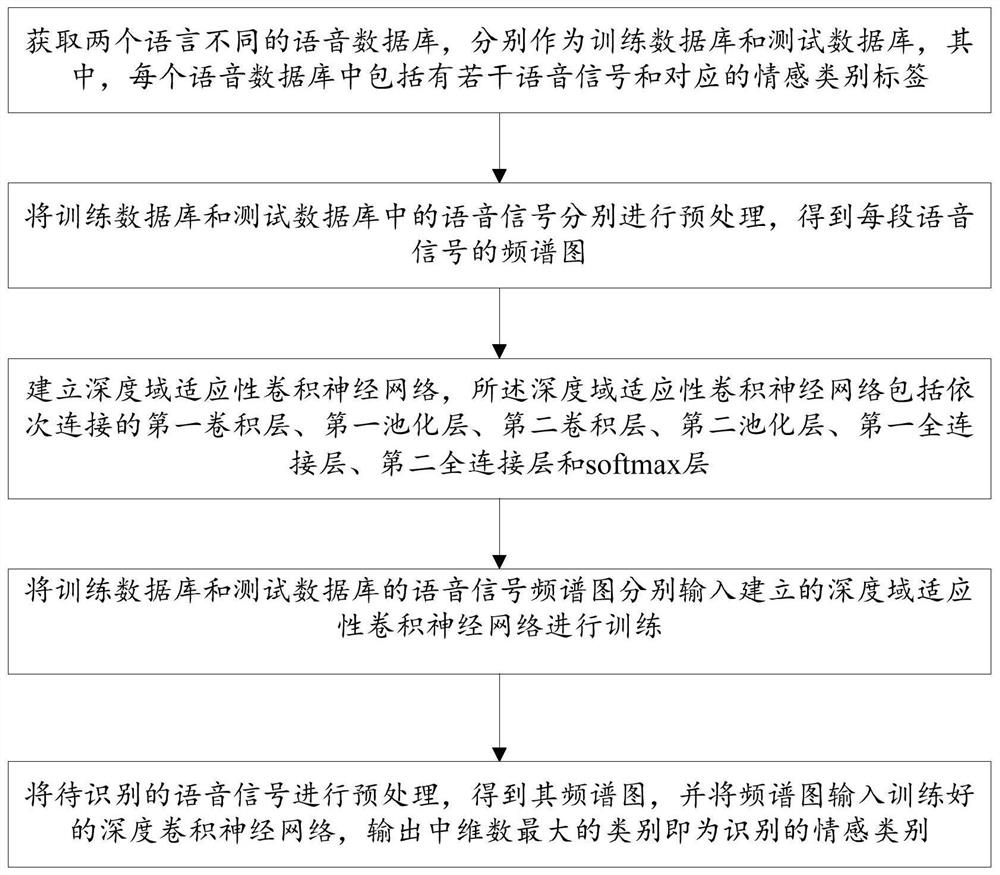

[0031] This embodiment provides a cross-database speech emotion recognition method based on a deep domain adaptive convolutional neural network. As shown in Figure 1, the method includes the following steps:

[0032] (1) Obtain two speech databases in different languages, one used as the training database and the other as the test database. Each speech database includes several speech signals and their corresponding emotion category labels.

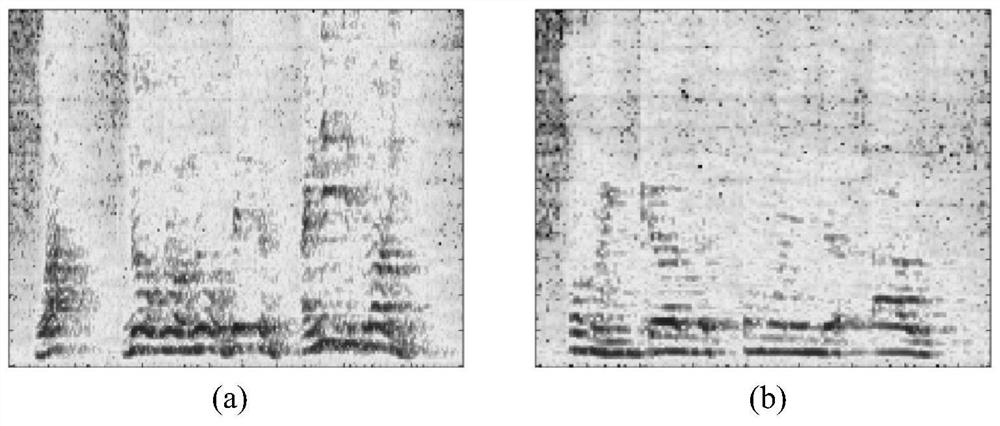

[0033] (2) Preprocess the speech signals in the training database and the test database separately to obtain the spectrogram of each speech signal. A speech signal spectrogram is shown in Figure 2.
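The preprocessing in step (2) can be illustrated with a minimal NumPy sketch of a log-magnitude spectrogram. The text above does not state the framing or transform parameters, so the function name `log_spectrogram`, the Hamming window, the 25 ms frame length, the 10 ms hop, the 512-point FFT and the 16 kHz sampling rate are all assumptions chosen for illustration, not values taken from the patent.

```python
import numpy as np

def log_spectrogram(signal, frame_len=400, hop=160, n_fft=512):
    """Return a log-magnitude spectrogram of shape (n_fft // 2 + 1, n_frames).

    All default parameters are illustrative assumptions
    (25 ms frames and 10 ms hop at an assumed 16 kHz sampling rate).
    """
    signal = np.asarray(signal, dtype=np.float64)
    # Pad very short signals so that at least one full frame exists.
    if len(signal) < frame_len:
        signal = np.pad(signal, (0, frame_len - len(signal)))
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Build an index matrix that slices the signal into overlapping frames.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    # One-sided FFT magnitude of each frame (frames are zero-padded to n_fft).
    magnitude = np.abs(np.fft.rfft(frames, n=n_fft, axis=1))
    # Log compression in dB; the small constant avoids log(0).
    return 20.0 * np.log10(magnitude + 1e-10).T

# Usage: a synthetic 1 kHz tone stands in for a real speech signal.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)
spec = log_spectrogram(tone)
print(spec.shape)  # (frequency bins, time frames)
```

Each column of the result is one time frame and each row one frequency bin, so the array can be treated as a single-channel image and fed to a convolutional network.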

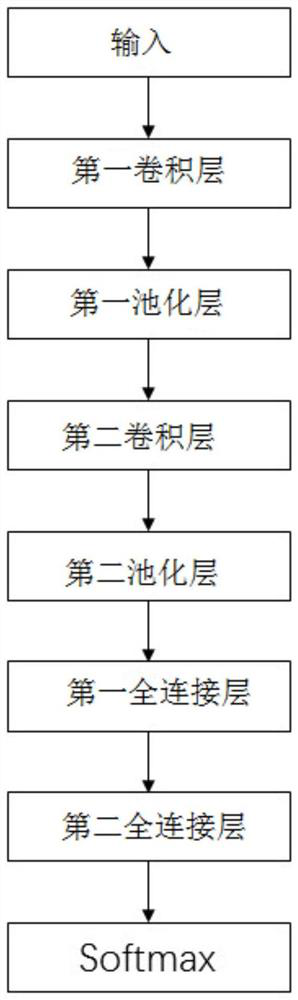

[0034] (3) Establish a deep domain adaptive convolutional neural network, which includes a first convolutional layer, a first pooling layer, a second convolutional layer, a second pooling layer, a first fully connected layer, a second fully connected layer and a softmax layer connected in sequence, specifical...
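The layer sequence named in step (3) can be sketched in PyTorch as follows. Only the order of the layers comes from the text above. The class name `EmotionCNN`, the kernel sizes, channel counts, ReLU activations, max pooling, the 64×64 input size, the hidden width of 128 and the five emotion classes are hypothetical choices for illustration. The sketch covers the forward structure only and does not include any domain adaptation term.

```python
import torch
import torch.nn as nn

class EmotionCNN(nn.Module):
    """Conv -> pool -> conv -> pool -> FC -> FC -> softmax (sizes are assumed)."""

    def __init__(self, n_classes=5):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=5, padding=2)   # first convolutional layer
        self.pool1 = nn.MaxPool2d(2)                              # first pooling layer
        self.conv2 = nn.Conv2d(16, 32, kernel_size=5, padding=2)  # second convolutional layer
        self.pool2 = nn.MaxPool2d(2)                              # second pooling layer
        # A 64x64 input is halved twice by pooling, giving 32 channels of 16x16.
        self.fc1 = nn.Linear(32 * 16 * 16, 128)                   # first fully connected layer
        self.fc2 = nn.Linear(128, n_classes)                      # second fully connected layer

    def forward(self, x):
        x = self.pool1(torch.relu(self.conv1(x)))
        x = self.pool2(torch.relu(self.conv2(x)))
        x = torch.flatten(x, start_dim=1)
        x = torch.relu(self.fc1(x))
        logits = self.fc2(x)
        # Softmax layer: converts logits into class probabilities.
        return torch.softmax(logits, dim=1)

# Usage: a batch of two single-channel 64x64 spectrogram patches (assumed size).
model = EmotionCNN(n_classes=5)
batch = torch.zeros(2, 1, 64, 64)
probs = model(batch)
print(probs.shape)  # one probability vector per input
```

When training with `nn.CrossEntropyLoss`, the usual practice is to return the logits rather than the softmax output, because that loss applies log-softmax internally.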
