Reinforcement Learning-Based Collaborative Caching Method for Ultra-dense Network Small Station Coding

A reinforcement learning technique applied to coded cooperative caching at small stations in ultra-dense networks. It addresses the problems that caching decisions cannot be applied well, that the patterns of change cannot be mined, and that file popularity cannot be tracked.

Embodiment Construction

[0065] The reinforcement-learning-based coded cooperative caching method for small stations in an ultra-dense network according to the present invention is described below, taking the LTE-A system as an example:

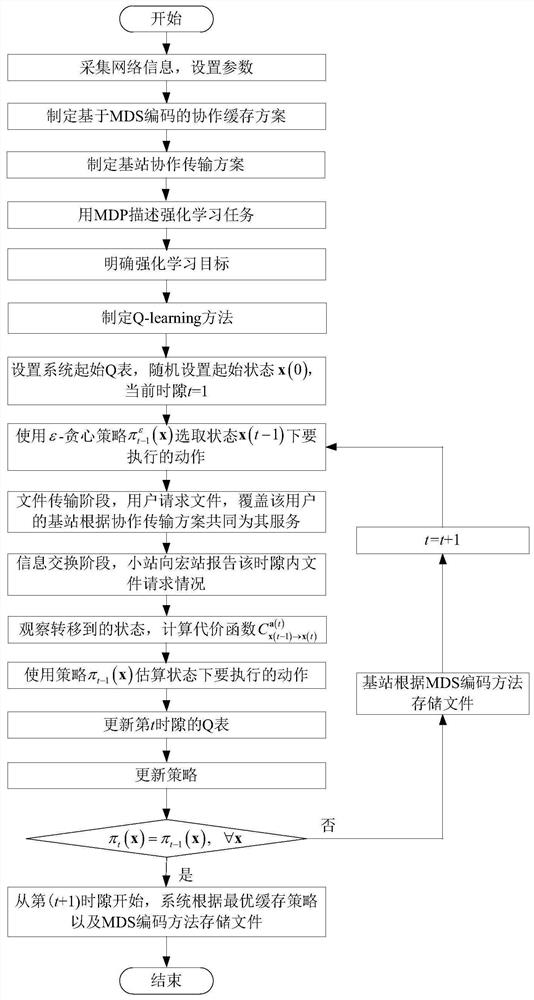

[0066] As shown in Figure 1, the method includes the following steps:

[0067] Step 1: Collect network information and set parameters. Collect the macro station set M={1,2,…,M} in the network, the small station set P={1,2,…,P}, the file request set F={1,2,…,F}, and the number of small stations p_m within the coverage of the m-th macro station, m∈M. Obtain the small station cache space M, which the operator determines according to network operation and hardware cost. The operator divides a day into T time slots according to the file requests in the ultra-dense network and sets the starting time of each time slot. Each time slot is divided into three phases: a file transfer phase, an information exchange phase, and a cache decision phase;
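The quantities collected in Step 1 can be grouped into a single parameter record. The following is a minimal sketch only, not the patent's implementation: the names `NetworkParameters`, `Phase`, and `slot_schedule` are hypothetical, and the units (cache space counted in whole units, slot start times in hours since midnight) are assumptions, since the text does not specify them.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterator, List, Tuple


class Phase(Enum):
    """The three phases of every time slot, in the order listed in Step 1."""
    FILE_TRANSFER = "file transfer"
    INFORMATION_EXCHANGE = "information exchange"
    CACHE_DECISION = "cache decision"


@dataclass(frozen=True)
class NetworkParameters:
    """Hypothetical container for the Step 1 inputs (all names are assumptions)."""
    num_macro_stations: int                    # size of the macro station set
    num_small_stations: int                    # size of the small station set P
    num_files: int                             # size of the file request set F
    small_stations_per_macro: Dict[int, int]   # p_m for each macro station m
    cache_space: int                           # small station cache space (unit assumed)
    slot_start_hours: List[float]              # start of each of the T slots (unit assumed)

    def __post_init__(self) -> None:
        # p_m must be defined for every macro station index 1..M.
        expected = set(range(1, self.num_macro_stations + 1))
        if set(self.small_stations_per_macro) != expected:
            raise ValueError("p_m must be given for every macro station 1..M")
        # Slot start times must be ordered so the slots partition the day.
        starts = self.slot_start_hours
        if not starts or any(b <= a for a, b in zip(starts, starts[1:])):
            raise ValueError("slot start times must be non-empty and strictly increasing")

    @property
    def num_slots(self) -> int:
        """T, the number of time slots in a day."""
        return len(self.slot_start_hours)


def slot_schedule(params: NetworkParameters) -> Iterator[Tuple[int, Phase]]:
    """Yield (slot index, phase) pairs: each slot runs its three phases in order."""
    for t in range(1, params.num_slots + 1):
        for phase in Phase:
            yield t, phase
```

For example, `NetworkParameters(2, 5, 10, {1: 3, 2: 2}, 4, [0.0, 8.0, 16.0])` describes two macro stations covering three and two small stations respectively, with a day split into T=3 slots; iterating `slot_schedule` over it visits nine (slot, phase) pairs.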

[0068] Step 2: Formulate a base station cooperative caching scheme based o...
