87 results about "Code cache" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

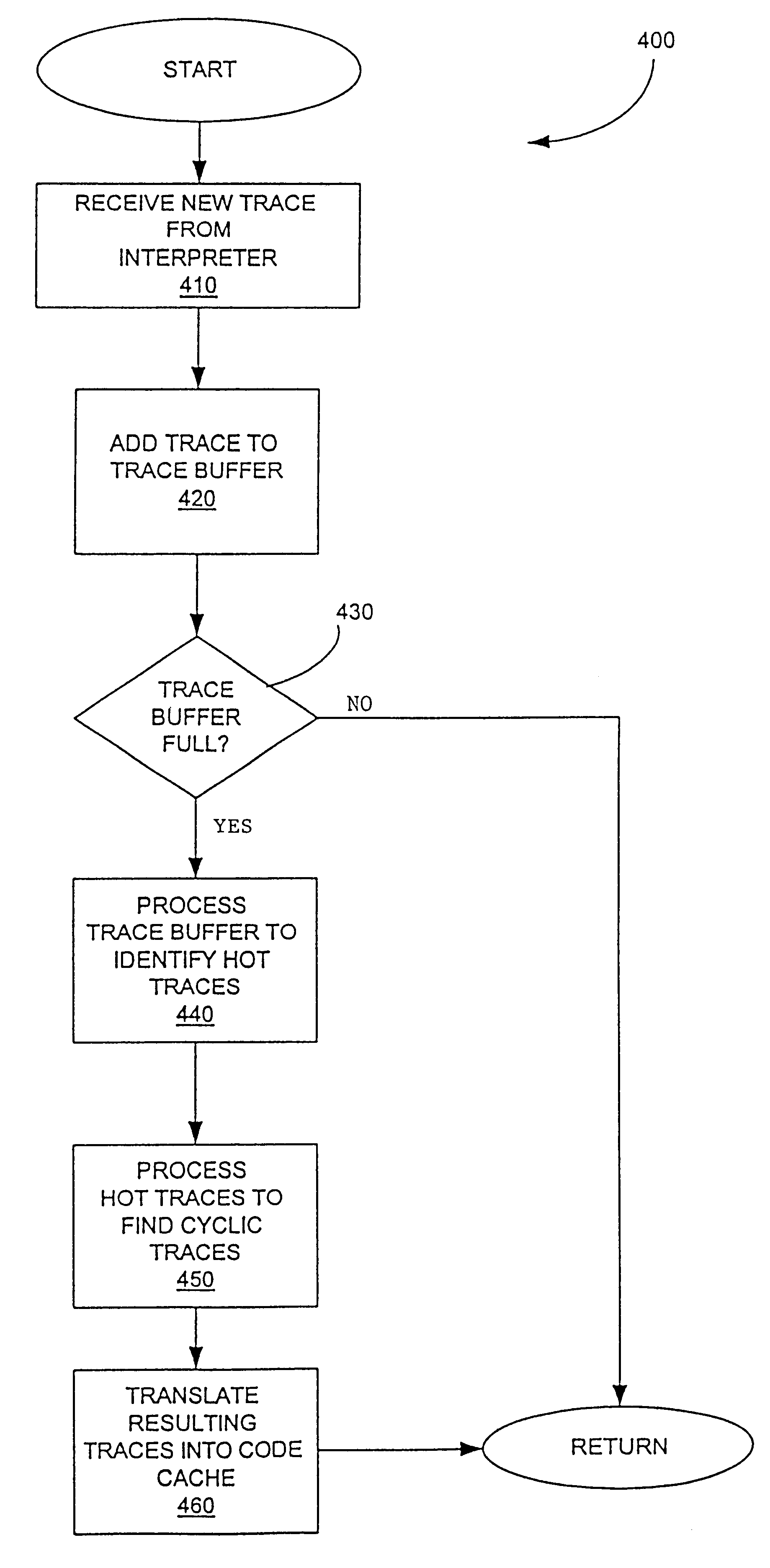

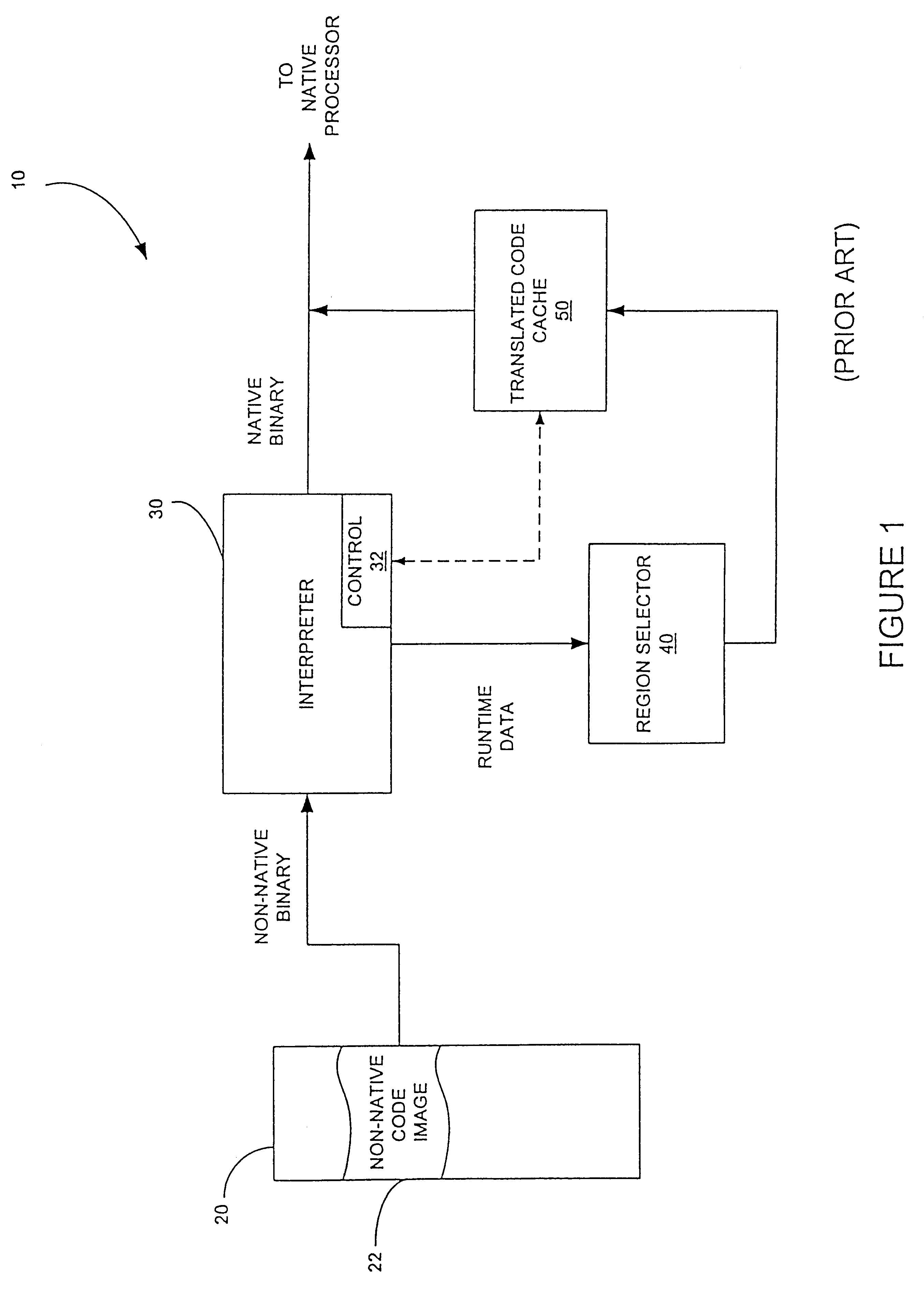

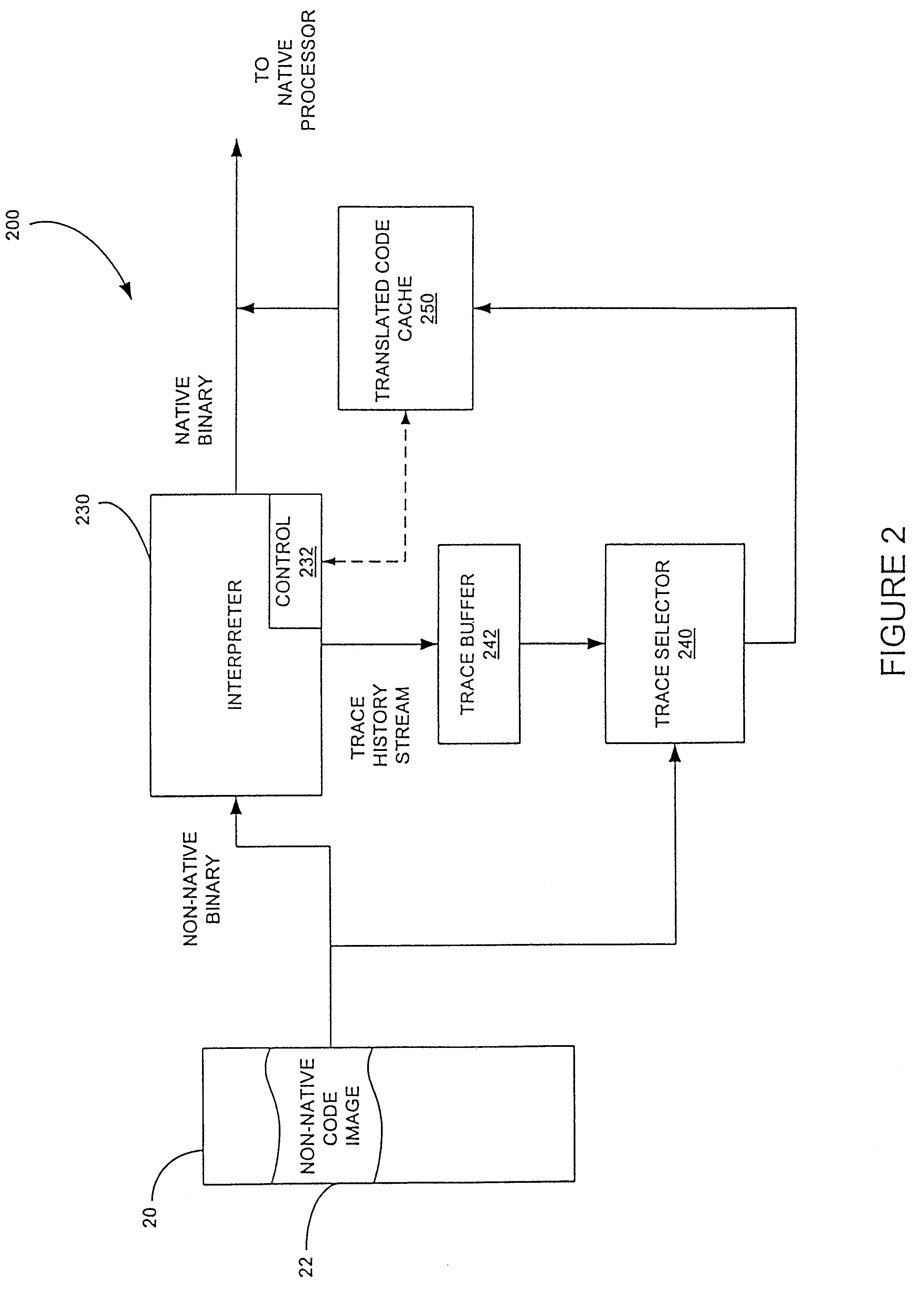

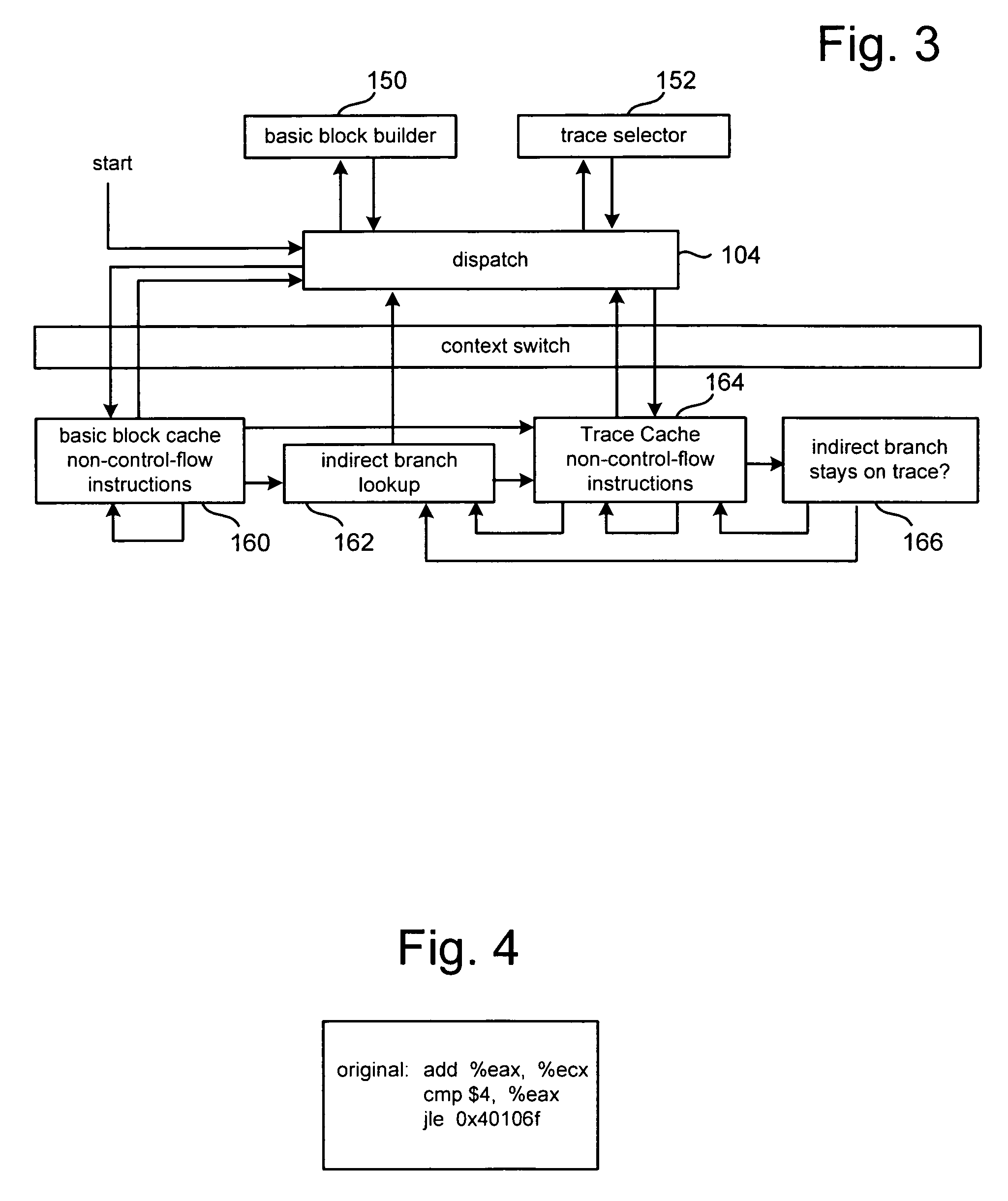

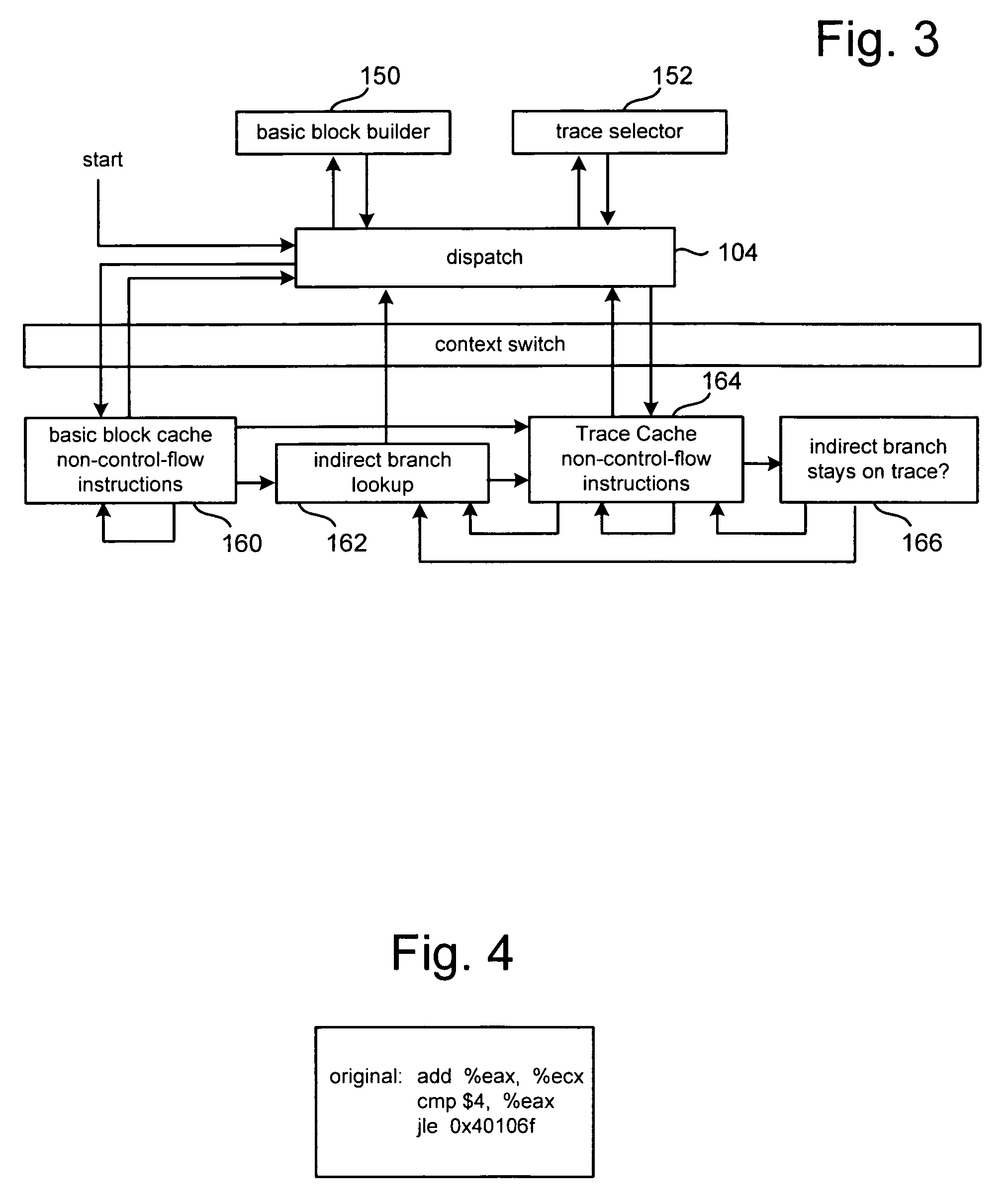

Method for selecting active code traces for translation in a caching dynamic translator

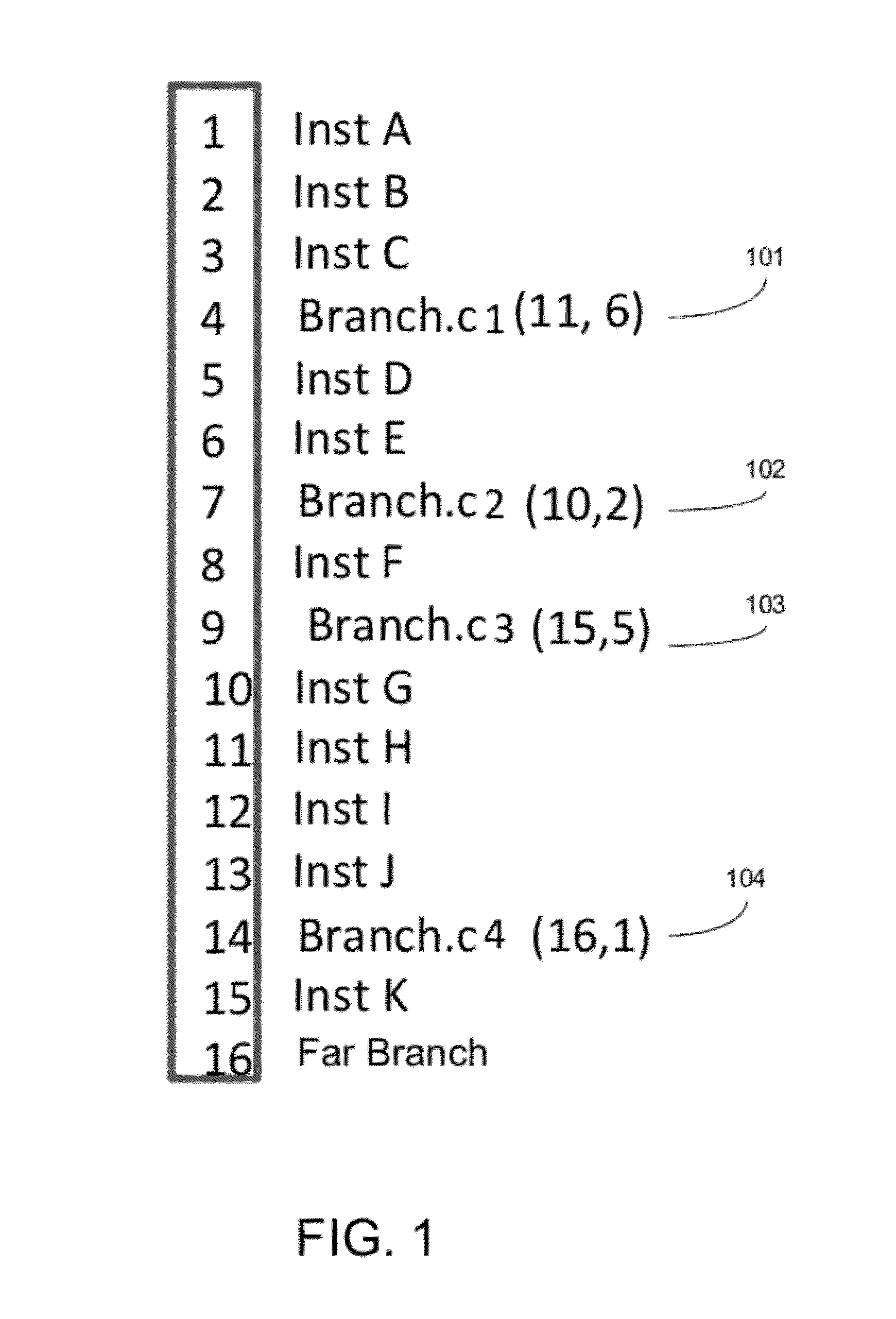

A method is shown for selecting active, or hot, code traces in an executing program for storage in a code cache. A trace is a sequence of dynamic instructions characterized by a start address and a branch history, which together allow the trace to be dynamically disassembled. Each trace ends when a trace-terminating condition executes: a backward taken branch, an indirect branch, or a branch whose execution causes the trace's branch history to reach a predetermined limit. As the executing program generates each trace, the trace is loaded into a buffer for processing. When the buffer is full, a counter corresponding to the start address of each trace is incremented. When the count for a start address exceeds a threshold, the start address is marked as hot. Each hot trace is then checked to see whether the next trace in the buffer shares its start address, in which case the hot trace is cyclic. If the next trace has a different start address, the traces in the buffer are checked to see whether they form a larger cycle of execution: if the traces following the hot trace are not hot themselves and are followed by a trace with the same start address as the hot trace, their branch histories are combined with the branch history of the hot trace to form a cyclic trace. The cyclic traces are then disassembled, and the instructions executed in each trace are stored in the code cache.

Owner:HEWLETT PACKARD DEV CO LP
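
A minimal Python sketch of the buffer-based selection loop described in this abstract. It assumes traces arrive as `(start_address, branch_history)` pairs, and the buffer size and `HOT_THRESHOLD` are hypothetical values. Branch histories are tuples of taken/not-taken bits, merging them is modeled as concatenation, and the stored history stands in for the disassembled instructions; this is an illustration, not the patented implementation.

```python
from collections import defaultdict

HOT_THRESHOLD = 2   # hypothetical: a count above this marks a start address hot
BUFFER_SIZE = 4     # hypothetical trace-buffer capacity


class TraceSelector:
    def __init__(self):
        self.counts = defaultdict(int)   # start address -> times seen
        self.hot = set()                 # start addresses marked hot
        self.buffer = []                 # pending (start_address, branch_history)
        self.code_cache = {}             # start address -> cyclic branch history

    def record(self, start, history):
        """Append a completed trace; process the buffer once it is full."""
        self.buffer.append((start, tuple(history)))
        if len(self.buffer) == BUFFER_SIZE:
            self._process()
            self.buffer.clear()

    def _process(self):
        # Step 1: bump the counter for every start address in the buffer.
        for start, _ in self.buffer:
            self.counts[start] += 1
            if self.counts[start] > HOT_THRESHOLD:
                self.hot.add(start)
        # Step 2: look for cycles that begin at a hot start address.
        for i, (start, history) in enumerate(self.buffer):
            if start not in self.hot or start in self.code_cache:
                continue
            combined = list(history)
            for nxt_start, nxt_history in self.buffer[i + 1:]:
                if nxt_start == start:        # back at the hot start: a cycle
                    self.code_cache[start] = tuple(combined)
                    break
                if nxt_start in self.hot:     # another hot trace breaks the cycle
                    break
                combined.extend(nxt_history)  # fold the cold trace into the cycle
```

Feeding the sequence A, B, A, A (with B cold) marks A hot and records the two-trace cycle A→B with the histories of A and B merged.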

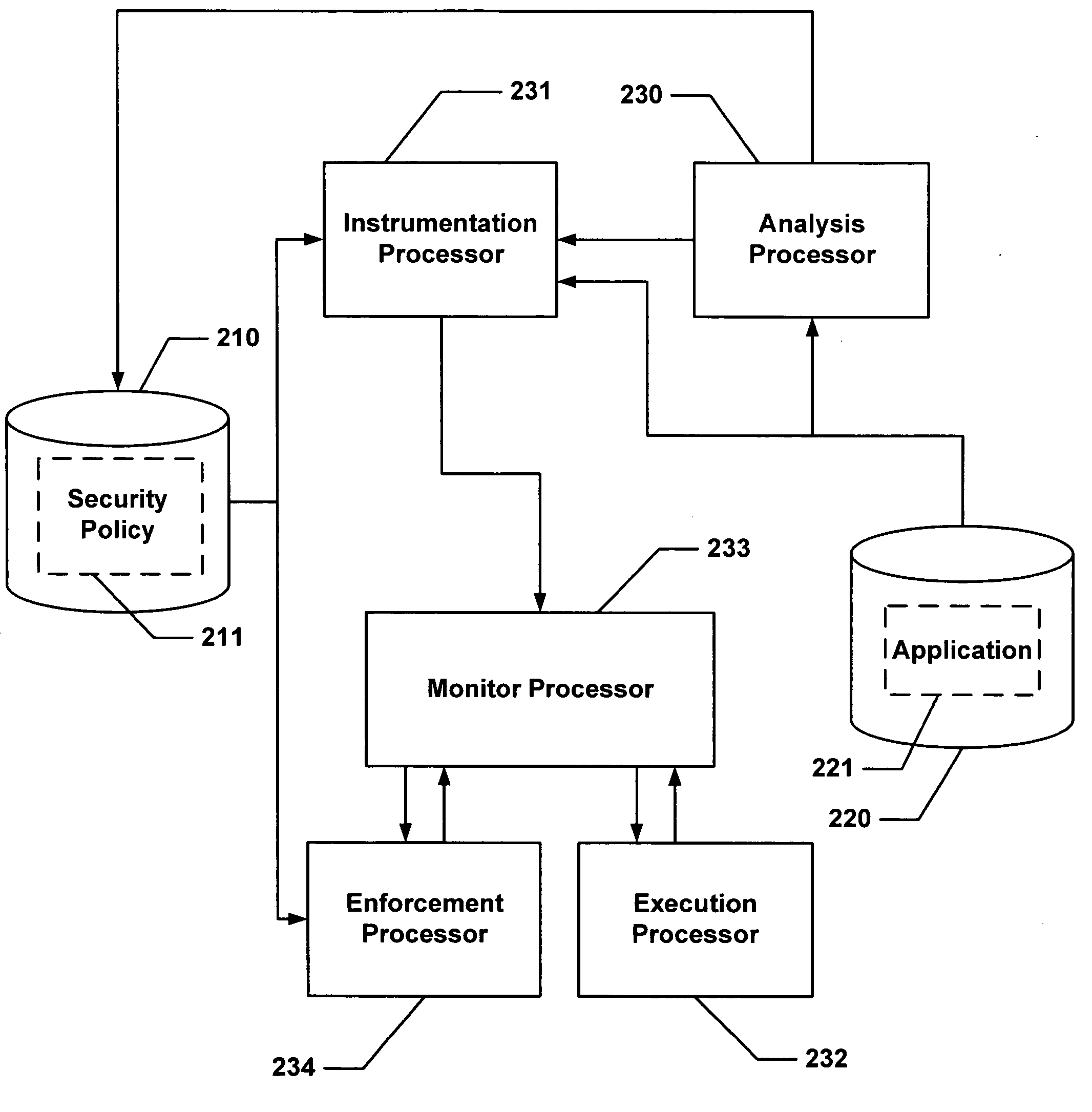

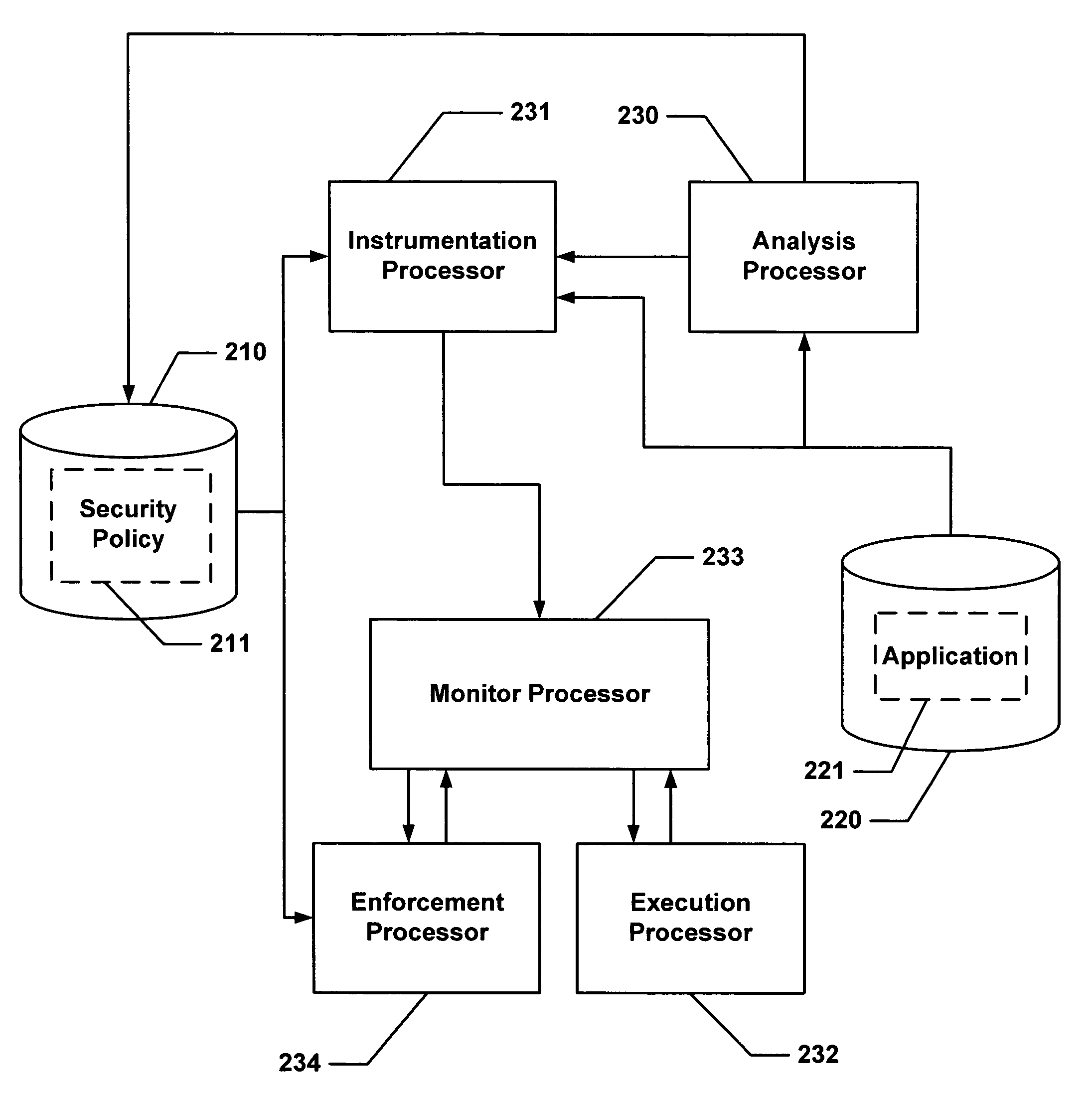

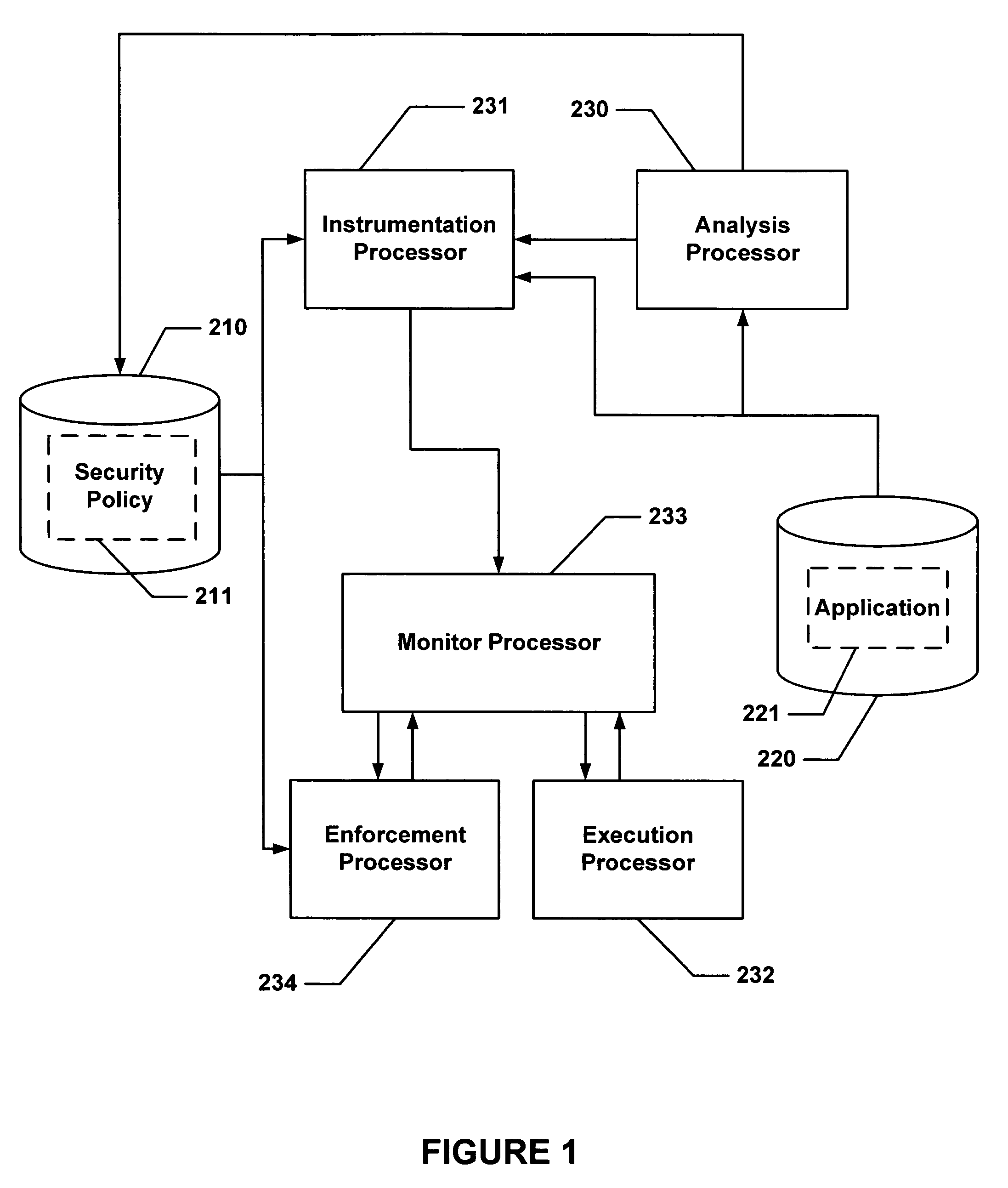

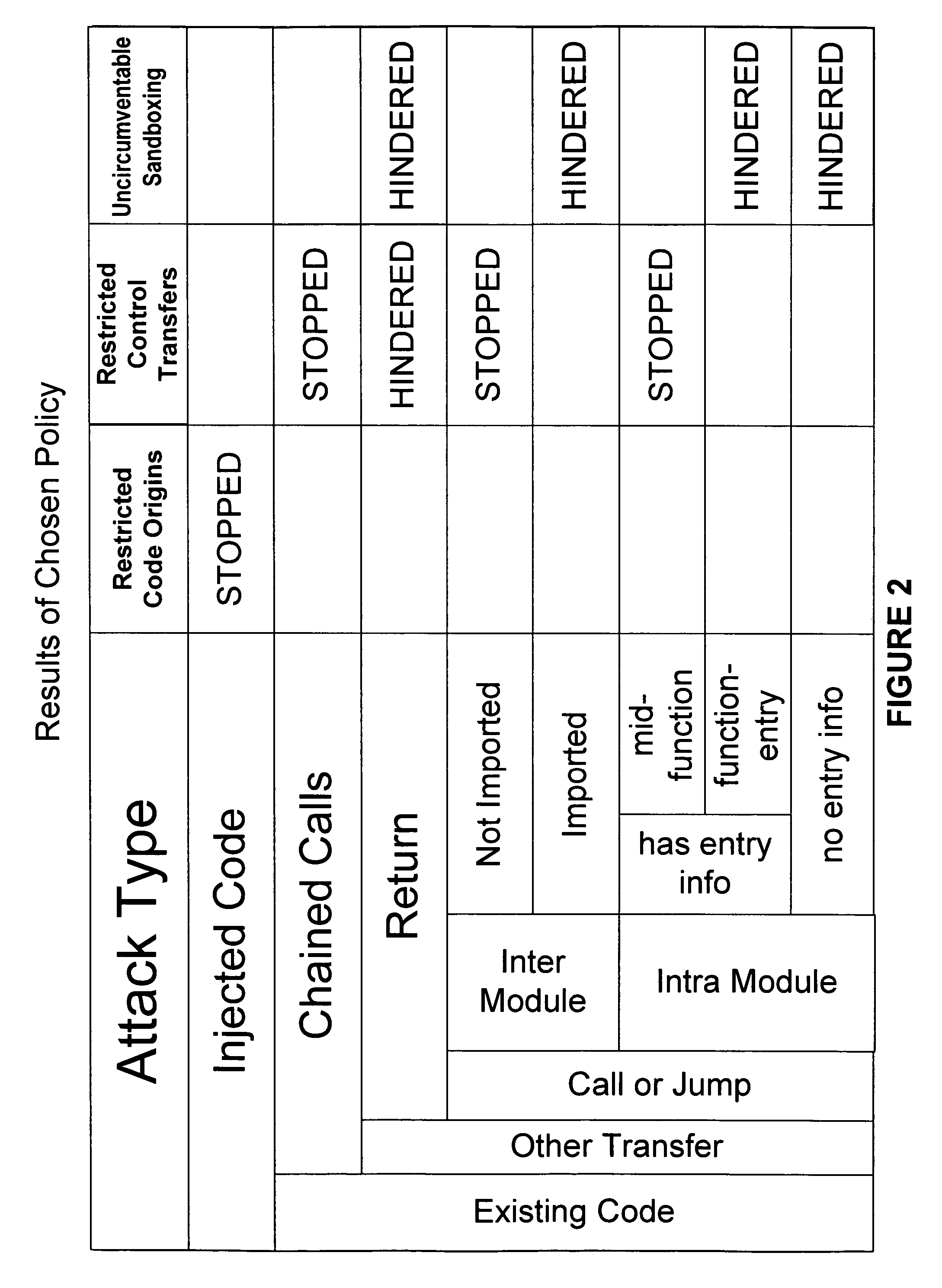

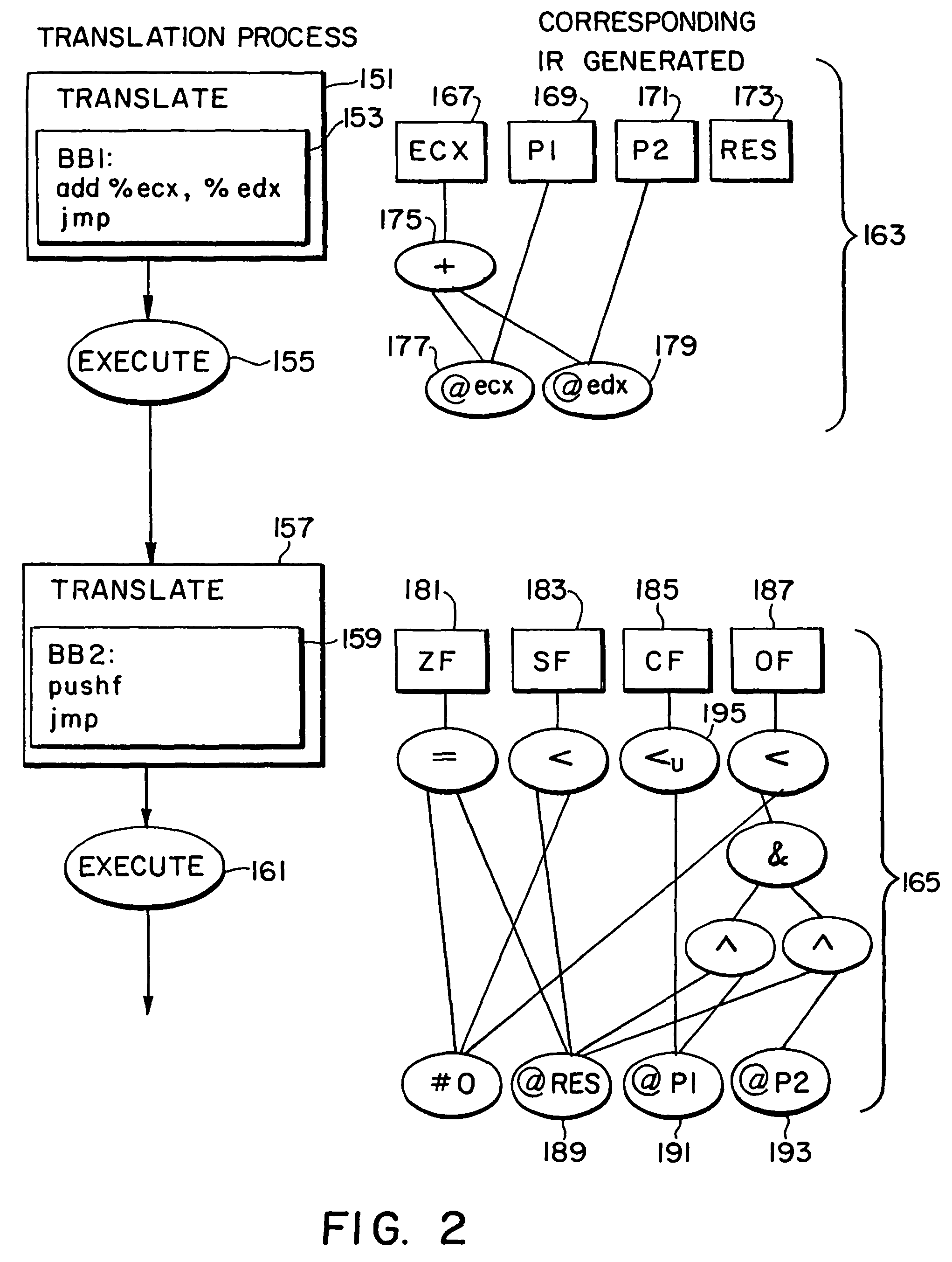

Secure execution of a computer program using a code cache

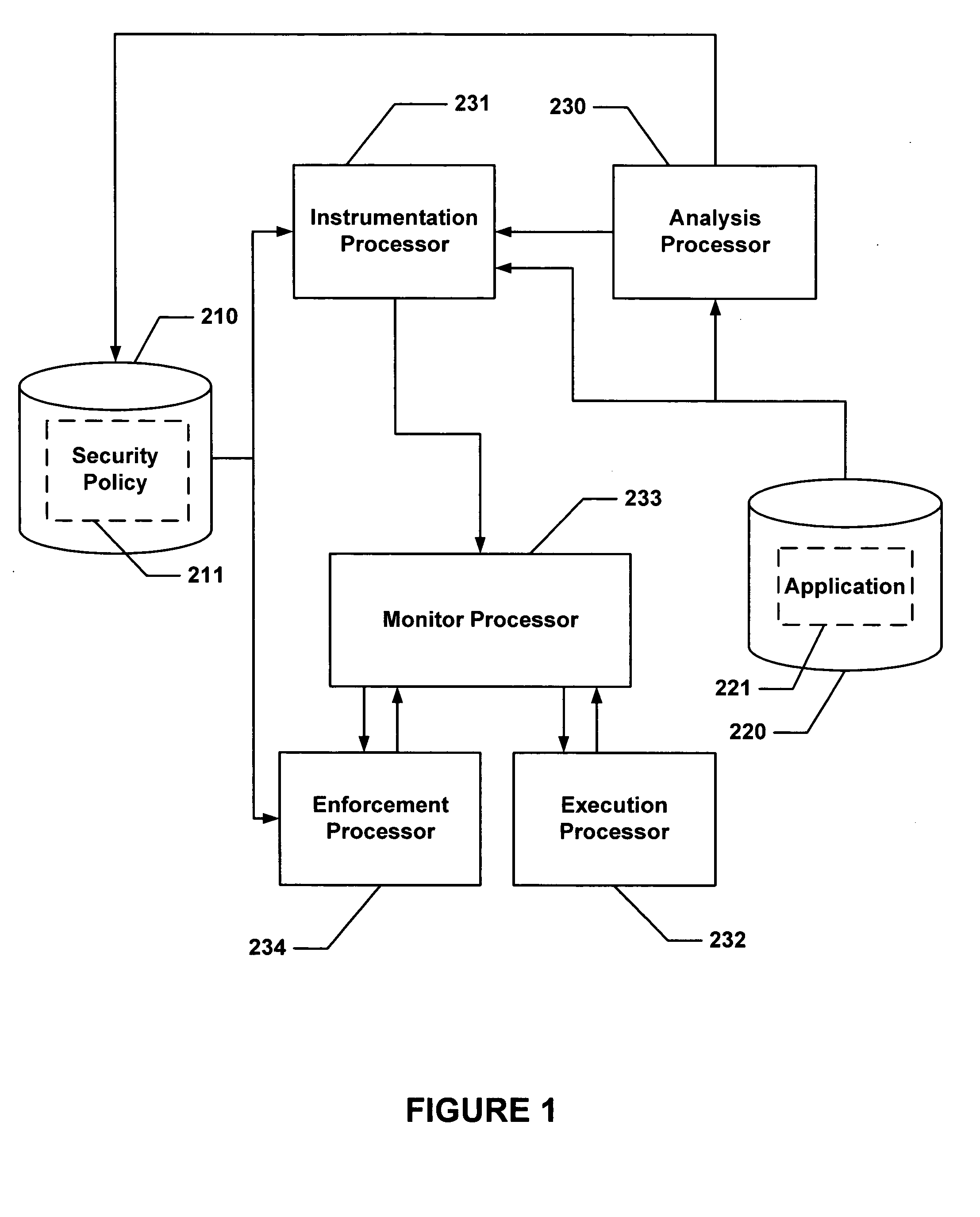

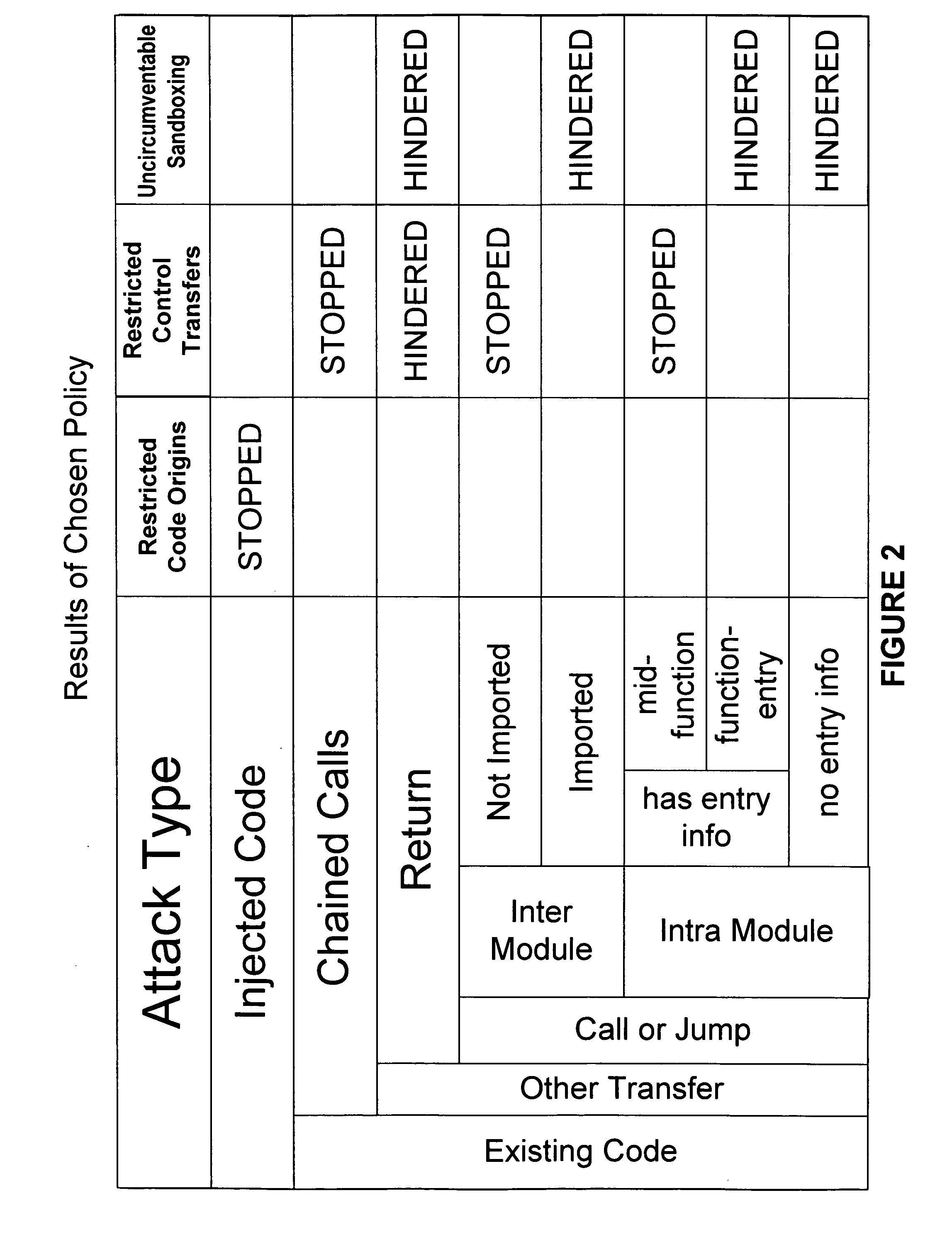

Active | US20050010804A1 | Tags: Avoid hijacking, Limit execution, Memory loss protection, Error detection/correction, Control flow, Security policy

Hijacking of an application is prevented by monitoring control flow transfers during program execution in order to enforce a security policy. At least three basic techniques are used. The first technique, Restricted Code Origins (RCO), can restrict execution privileges based on the origin of the instructions executed. This distinction can ensure that malicious code masquerading as data is never executed, thwarting a large class of security attacks. The second technique, Restricted Control Transfers (RCT), can restrict control transfers based on instruction type, source, and target. The third technique, Un-Circumventable Sandboxing (UCS), guarantees that sandboxing checks around any program operation will never be bypassed.

Owner:MASSACHUSETTS INST OF TECH
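
The RCO and RCT checks can be pictured as a policy test run on each control transfer before its target enters the code cache. The sketch below is hypothetical: it assumes memory regions tagged with whether they came from an executable image and whether they are writable, and an RCT policy in which indirect calls must hit known function entries and returns must land just after a call site. UCS is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    start: int
    end: int
    from_image: bool   # code loaded from an executable image on disk
    writable: bool


class SecurityViolation(Exception):
    pass


class TransferMonitor:
    """Checks a control transfer before its target is copied into the code cache."""

    def __init__(self, regions, function_entries, after_call_sites):
        self.regions = regions
        self.function_entries = set(function_entries)
        self.after_call_sites = set(after_call_sites)

    def _region_of(self, addr):
        for r in self.regions:
            if r.start <= addr < r.end:
                return r
        return None

    def check(self, kind, target):
        # Restricted Code Origins: execute only unmodified image code, never data.
        region = self._region_of(target)
        if region is None or not region.from_image or region.writable:
            raise SecurityViolation(f"RCO: {target:#x} is not trusted code")
        # Restricted Control Transfers: constrain targets by transfer type.
        if kind == "indirect_call" and target not in self.function_entries:
            raise SecurityViolation(f"RCT: {target:#x} is not a function entry")
        if kind == "return" and target not in self.after_call_sites:
            raise SecurityViolation(f"RCT: {target:#x} does not follow a call")
        return True
```

Because every new target passes through the runtime before it is cached, a jump into an injected heap buffer is refused under RCO even if the attacker controls the jump.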

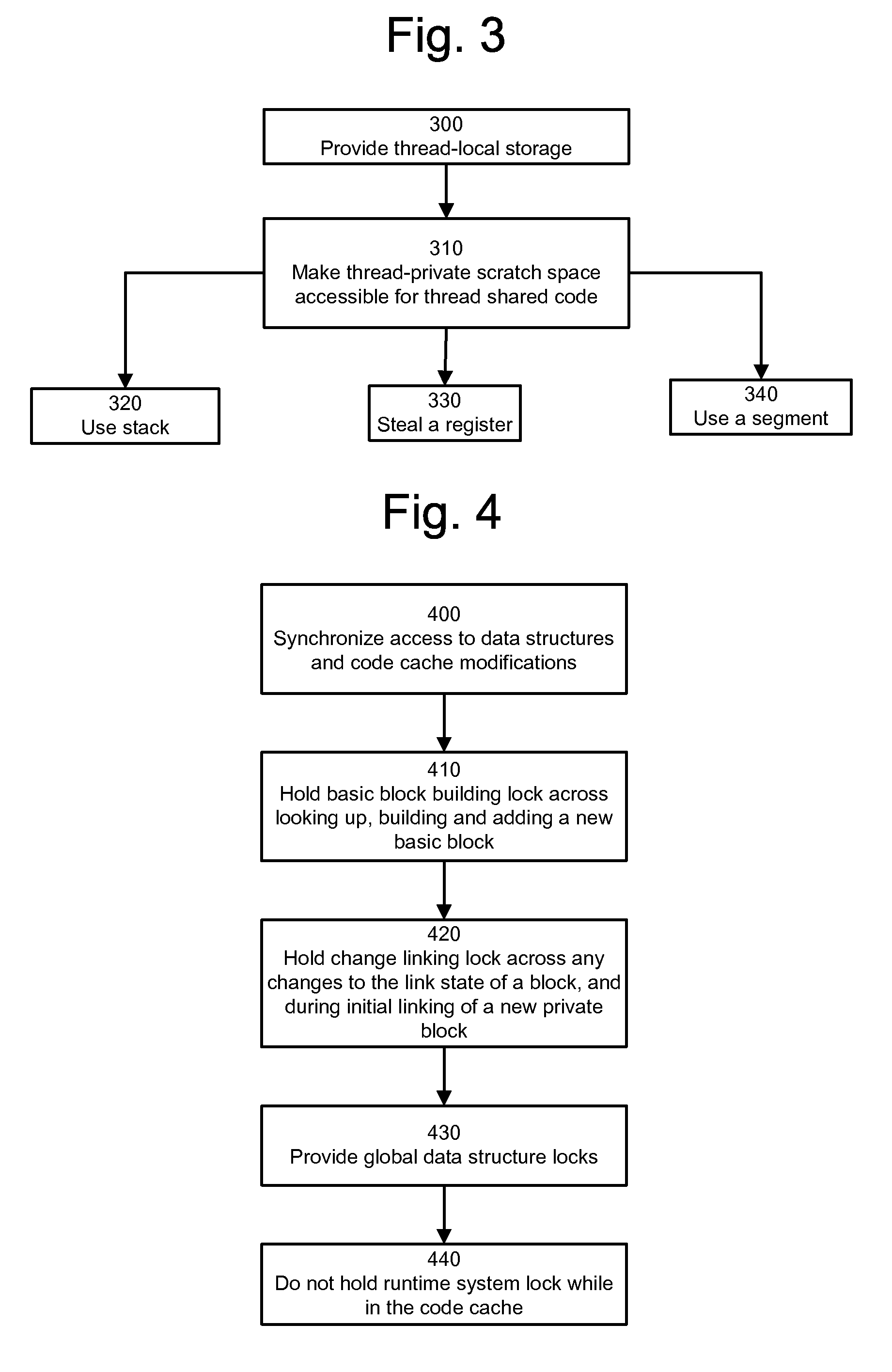

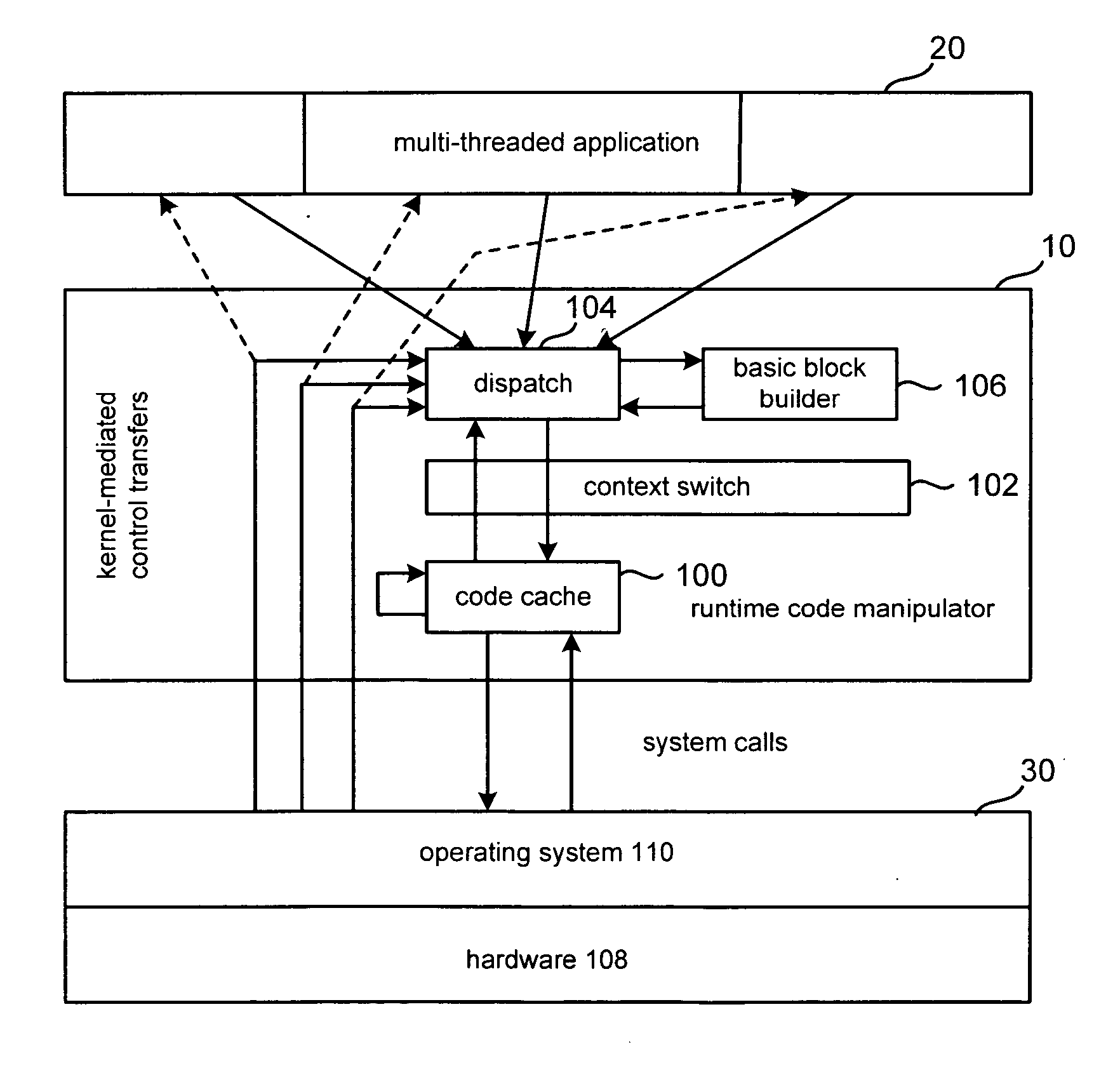

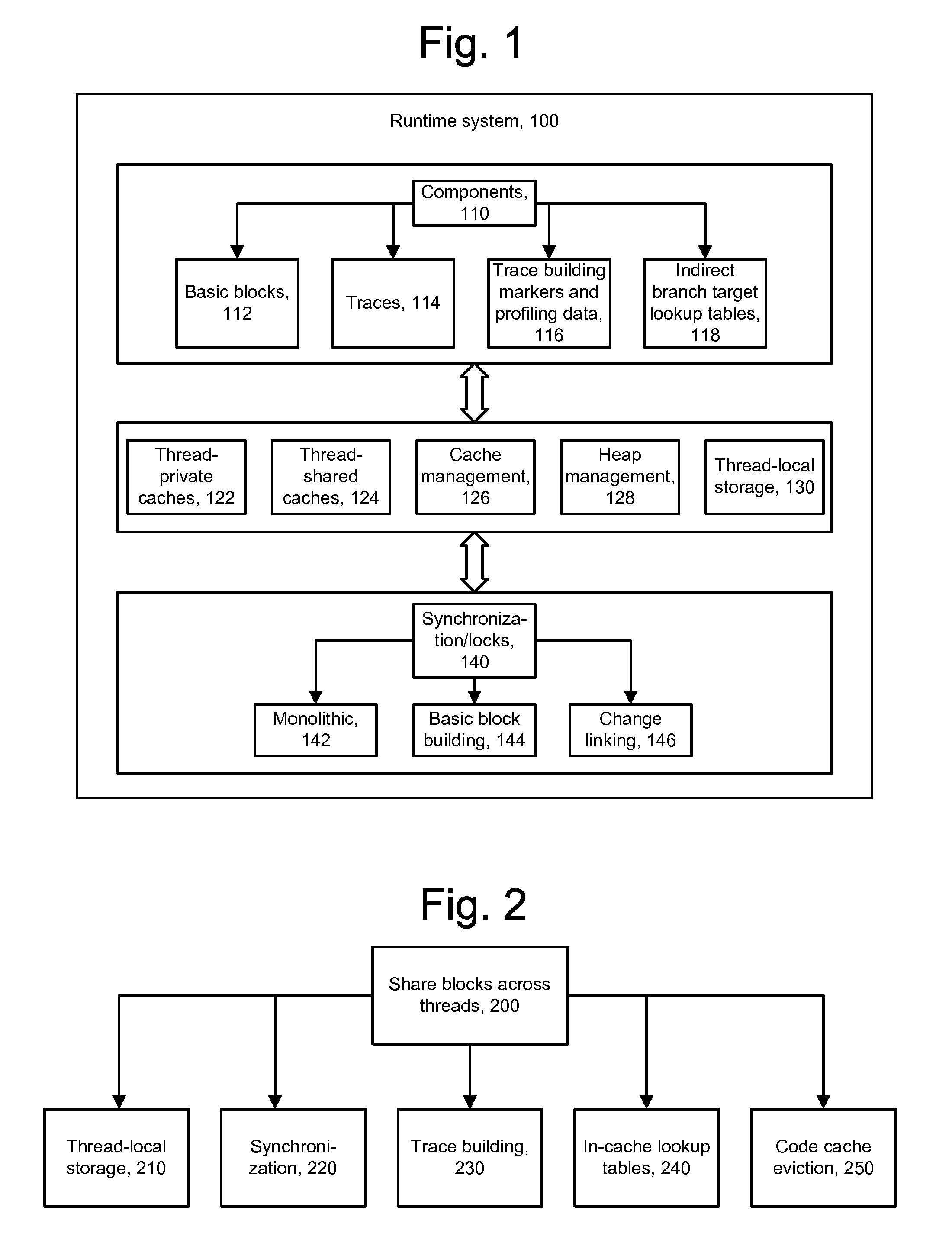

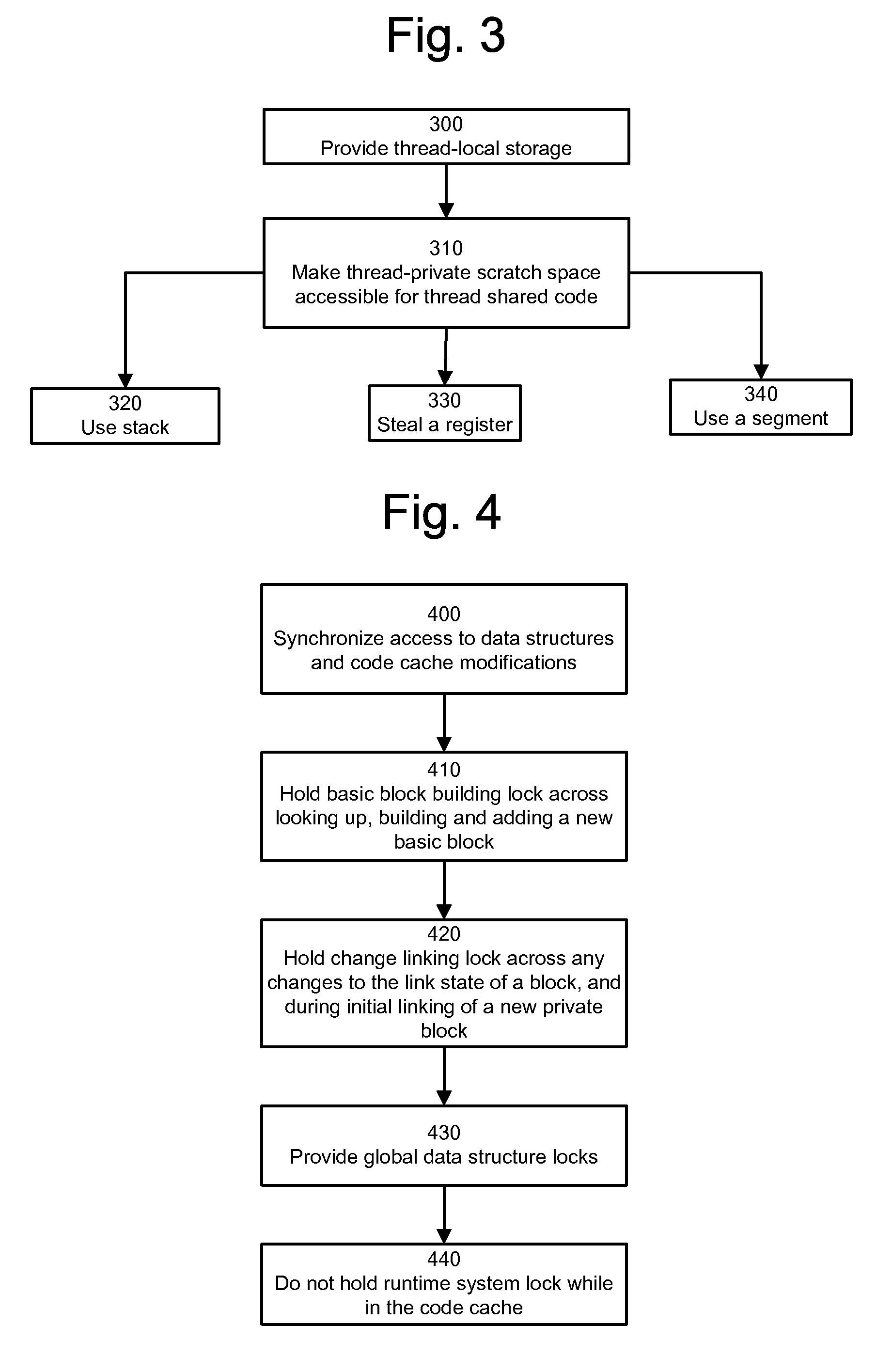

Thread-shared software code caches

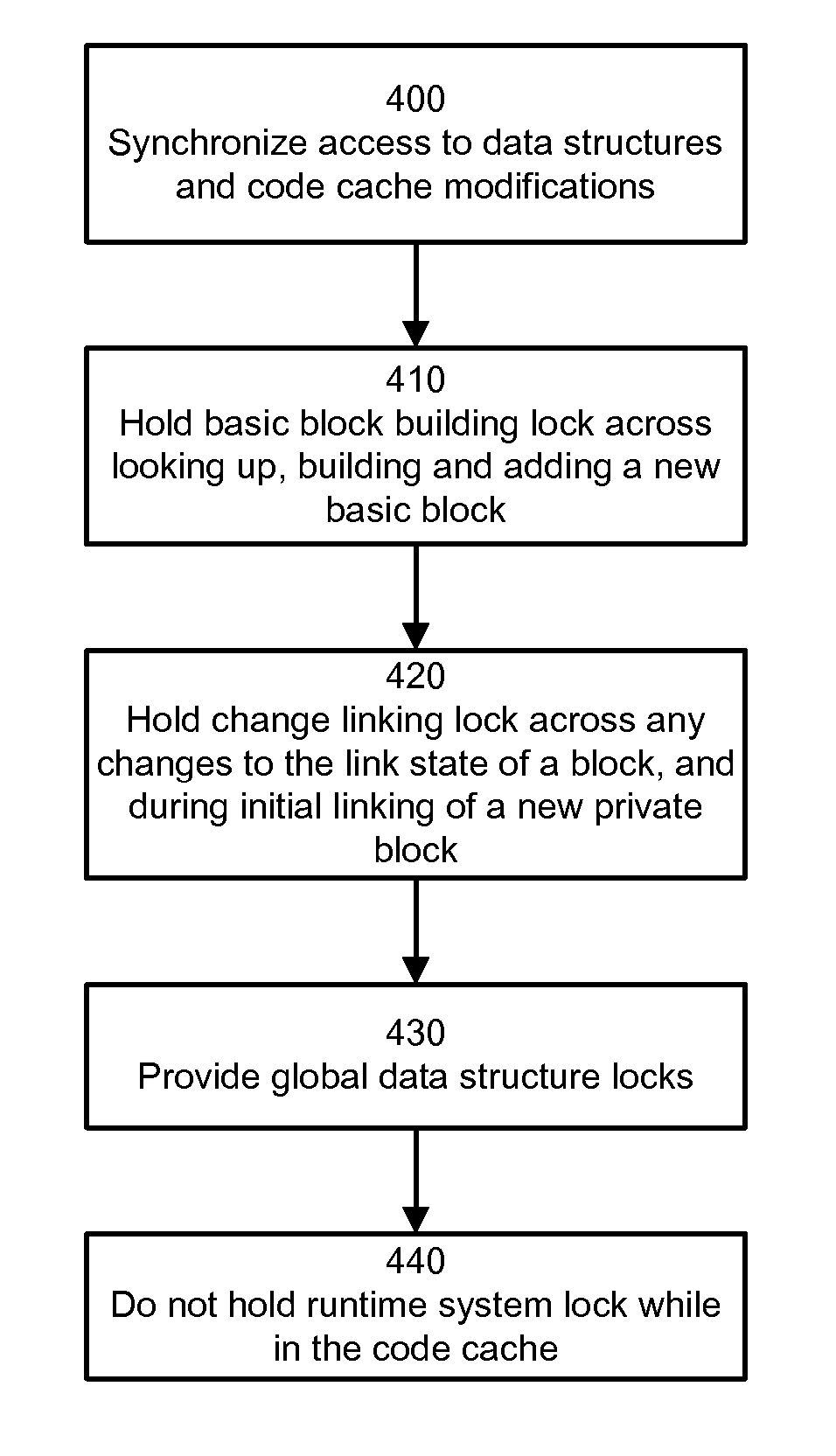

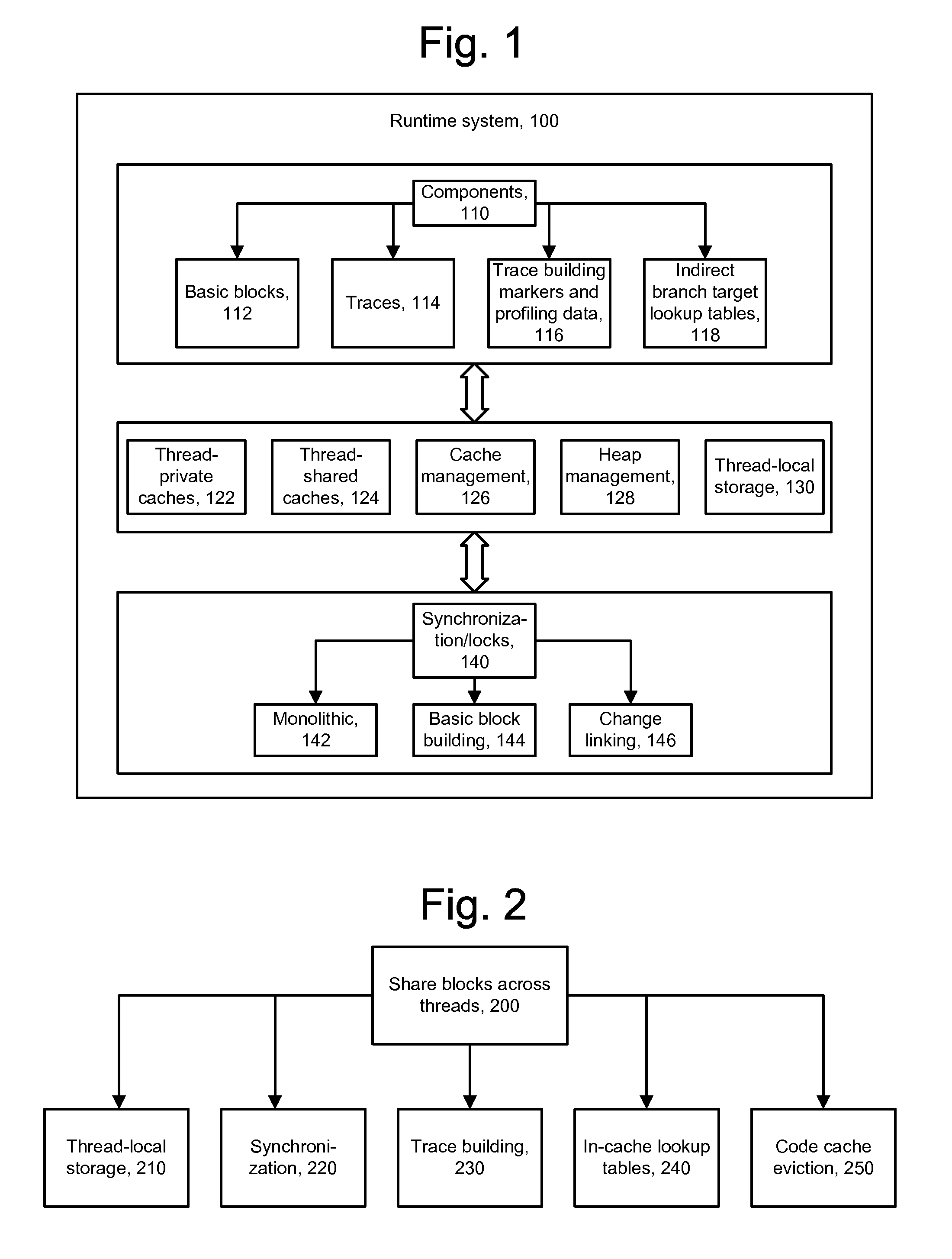

Active | US20070067573A1 | Tags: Avoiding brute-force all-thread-suspension, Avoiding monolithic global locks, Memory addressing/allocation/relocation, Multiprogramming arrangements, Timestamp, Brute force

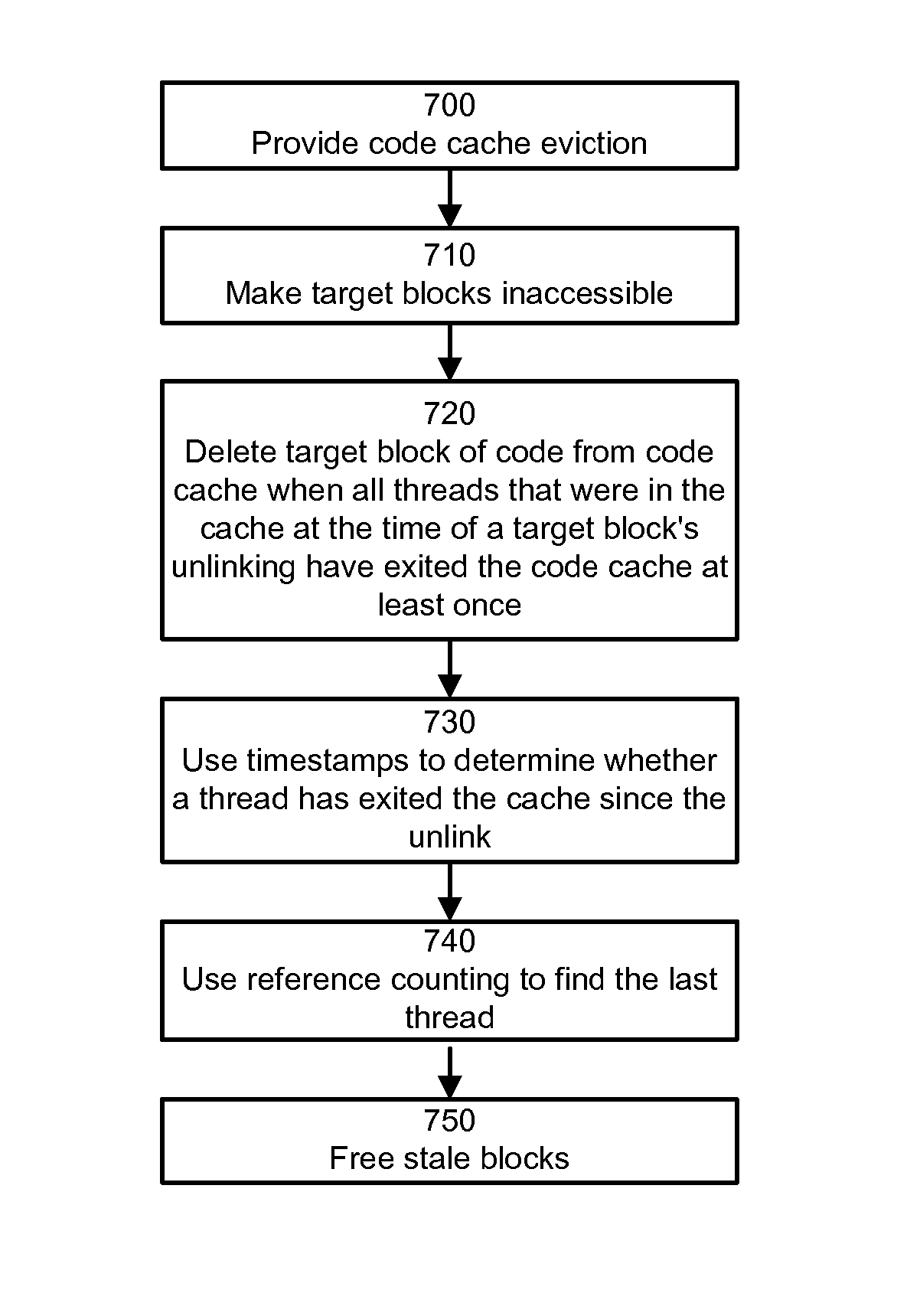

A runtime system using thread-shared code caches is provided which avoids brute-force all-thread-suspension and monolithic global locks. In one embodiment, medium-grained runtime system synchronization reduces lock contention. The system includes trace building that combines efficient private construction with shared results, in-cache lock-free lookup table access in the presence of entry invalidations, and a delayed deletion algorithm based on timestamps and reference counts. These enable reductions in memory usage and performance overhead.

Owner:VMWARE INC
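
The "delayed deletion algorithm based on timestamps and reference counts" can be sketched in a single-threaded model. The assumptions: an unlinked fragment is stamped with a global timestamp and given a reference count equal to the number of threads, each thread reports a "safe point" when it leaves the code cache, and a thread's first safe point after the stamp drops one reference. The lock-free lookup table is not modeled, and all names are hypothetical.

```python
class DelayedDeleter:
    """Frees a shared code-cache fragment only after every thread has passed
    a safe point since the fragment was unlinked (single-threaded model)."""

    def __init__(self, thread_ids):
        self.now = 0
        self.thread_stamp = {t: 0 for t in thread_ids}  # last safe point per thread
        self.pending = []   # entries: [fragment, deletion_stamp, refcount]
        self.freed = []

    def _tick(self):
        self.now += 1
        return self.now

    def unlink(self, fragment):
        """Remove from lookup tables now; any thread might still be inside it."""
        stamp = self._tick()
        self.pending.append([fragment, stamp, len(self.thread_stamp)])

    def safe_point(self, tid):
        """Called when thread `tid` exits the code cache (e.g. to the dispatcher)."""
        old = self.thread_stamp[tid]
        self.thread_stamp[tid] = self._tick()
        still_pending = []
        for entry in self.pending:
            if old < entry[1]:          # first safe point since the unlink
                entry[2] -= 1
            if entry[2] == 0:
                self.freed.append(entry[0])
            else:
                still_pending.append(entry)
        self.pending = still_pending
```

No thread is ever suspended: the fragment is reclaimed lazily once the last thread that could have been executing it has checked in.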

Secure execution of a computer program using a code cache

Active | US7603704B2 | Tags: Avoid hijacking, Memory loss protection, Error detection/correction, Control flow, Application software

Hijacking of an application is prevented by monitoring control flow transfers during program execution in order to enforce a security policy. At least three basic techniques are used. The first technique, Restricted Code Origins (RCO), can restrict execution privileges based on the origin of the instructions executed. This distinction can ensure that malicious code masquerading as data is never executed, thwarting a large class of security attacks. The second technique, Restricted Control Transfers (RCT), can restrict control transfers based on instruction type, source, and target. The third technique, Un-Circumventable Sandboxing (UCS), guarantees that sandboxing checks around any program operation will never be bypassed.

Owner:MASSACHUSETTS INST OF TECH

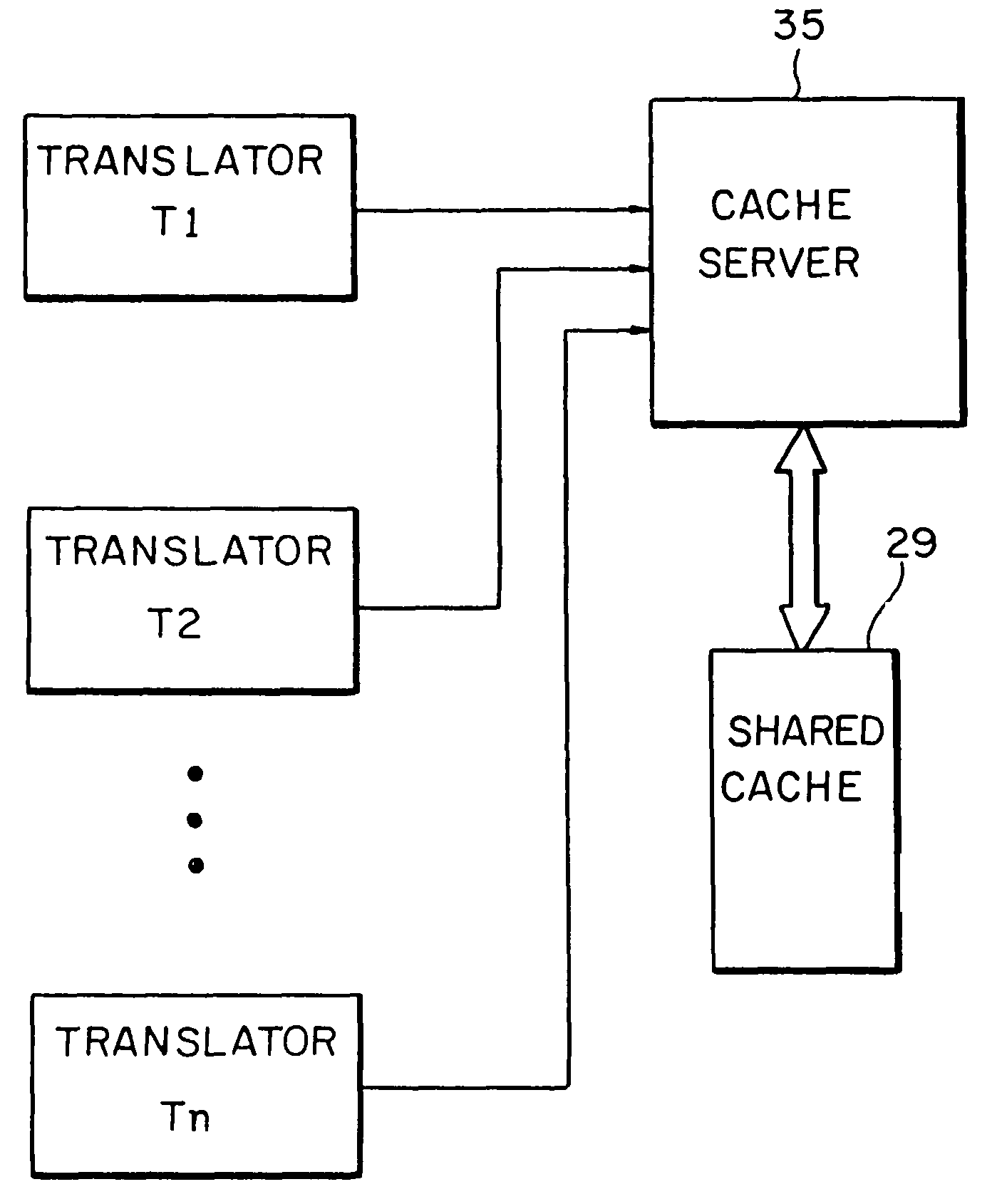

Shared code caching for program code conversion

Active | US7805710B2 | Tags: General purpose stored program computer, Program loading/initiating, Code Translation, Object code

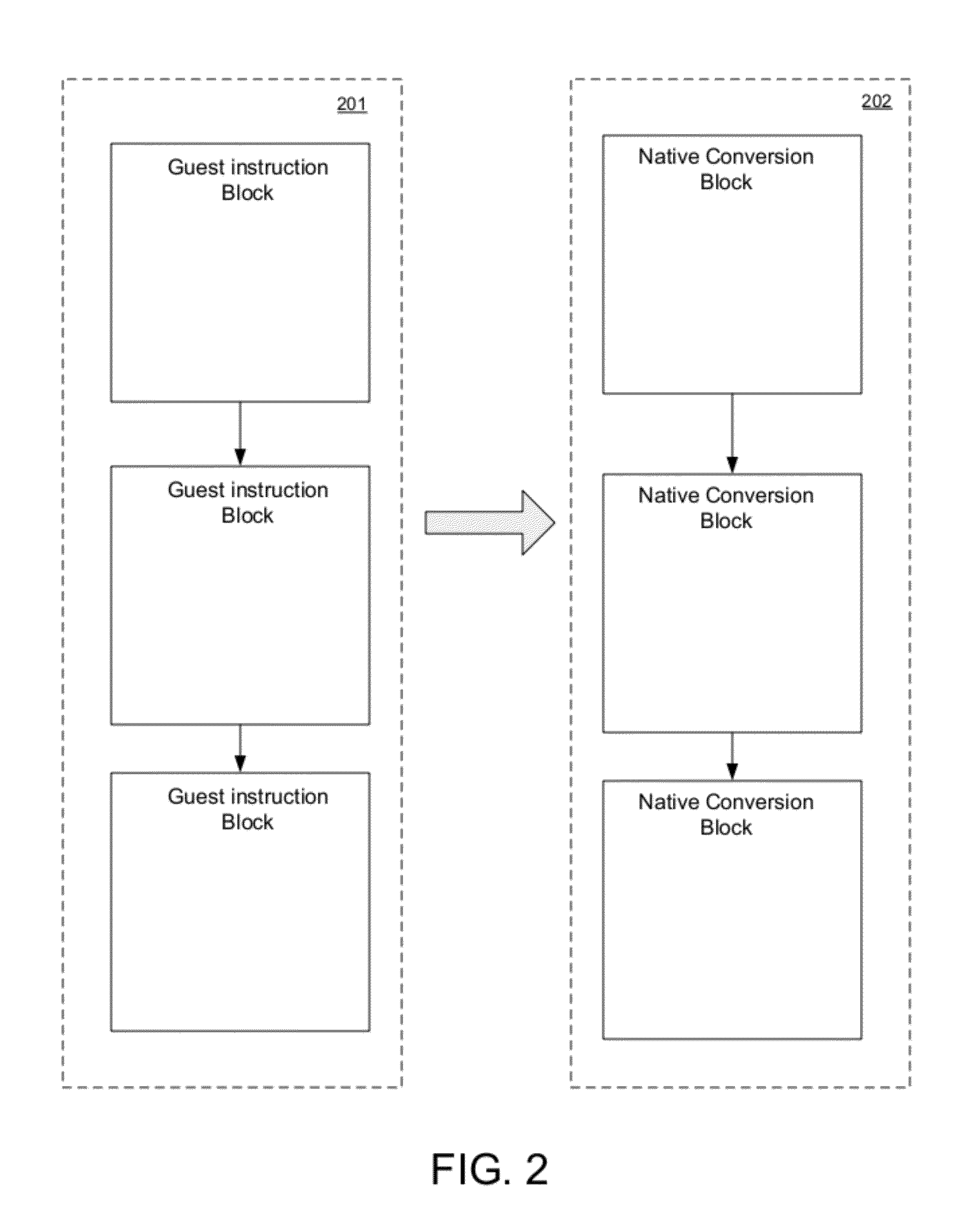

Subject program code is translated to target code in basic block units at run-time in a process wherein translation of basic blocks is interleaved with execution of those translations. A shared code cache mechanism is added to persistently store subject code translations, such that a translator may reuse translations that were generated and/or optimized by earlier translator instances.

Owner:IBM CORP
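
One way to picture reuse across translator instances is a store keyed by the subject code itself. In this sketch the persistent store is an in-memory dict (a real one would live in a file or shared memory), the key is a SHA-256 of the translator configuration plus the subject block bytes, and the "translation" is a placeholder string. All of these are assumptions for illustration.

```python
import hashlib


class SharedCodeCache:
    """Stand-in for a persistent store shared by translator instances."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def key(subject_bytes, config):
        # Key on the subject code bytes plus translator settings, so a block
        # whose bytes changed never matches a stale translation.
        return hashlib.sha256(config.encode() + b"\0" + subject_bytes).hexdigest()

    def get(self, key):
        return self._store.get(key)

    def put(self, key, target_code):
        self._store[key] = target_code


class Translator:
    def __init__(self, shared, config="opt-level-1"):
        self.shared = shared
        self.config = config
        self.translations = 0   # blocks this instance actually translated

    def translate_block(self, subject_bytes):
        k = SharedCodeCache.key(subject_bytes, self.config)
        target = self.shared.get(k)
        if target is None:
            self.translations += 1
            target = "target(" + subject_bytes.hex() + ")"   # placeholder translation
            self.shared.put(k, target)
        return target
```

A second `Translator` attached to the same store returns the first instance's translation without translating again.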

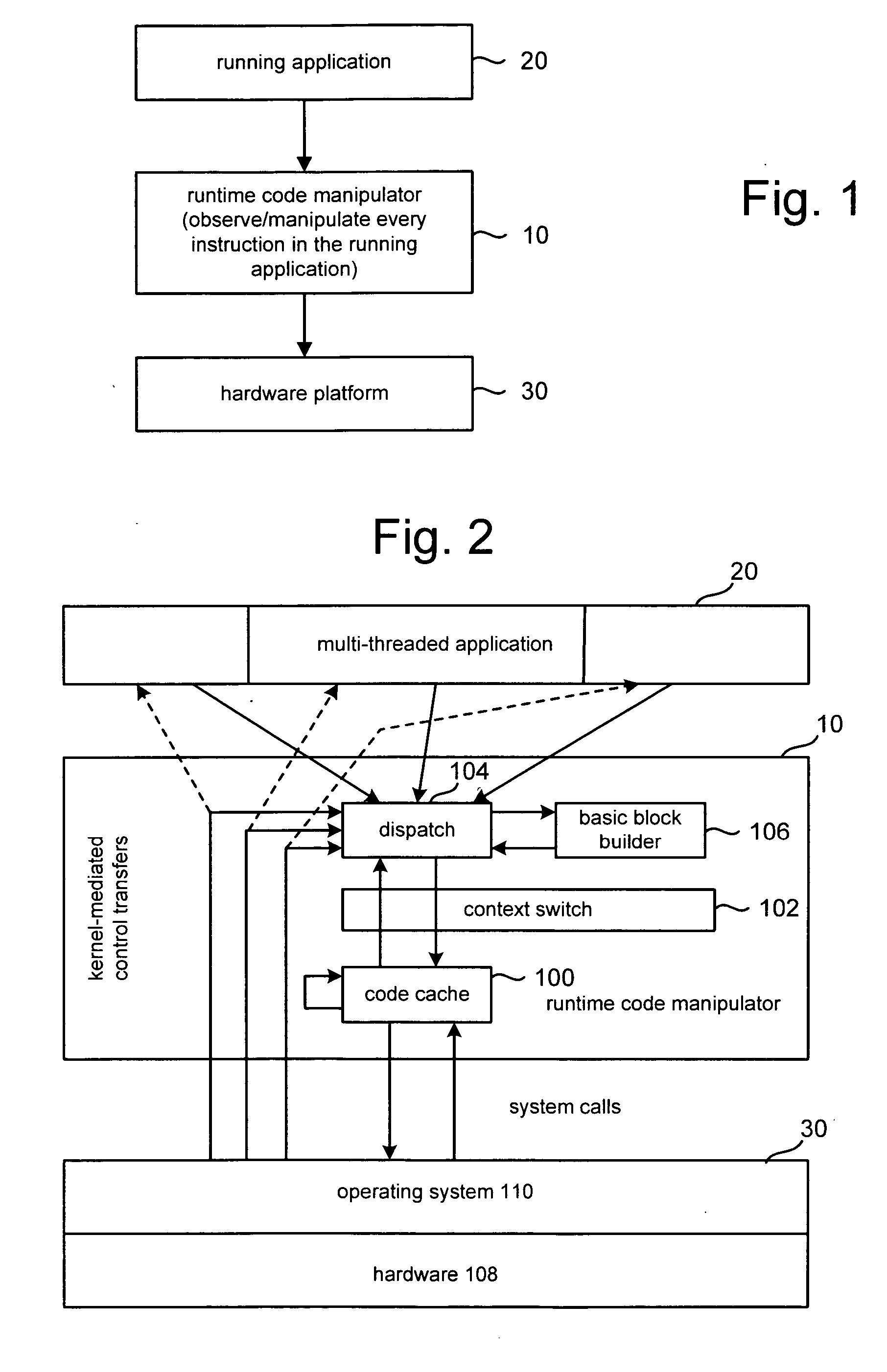

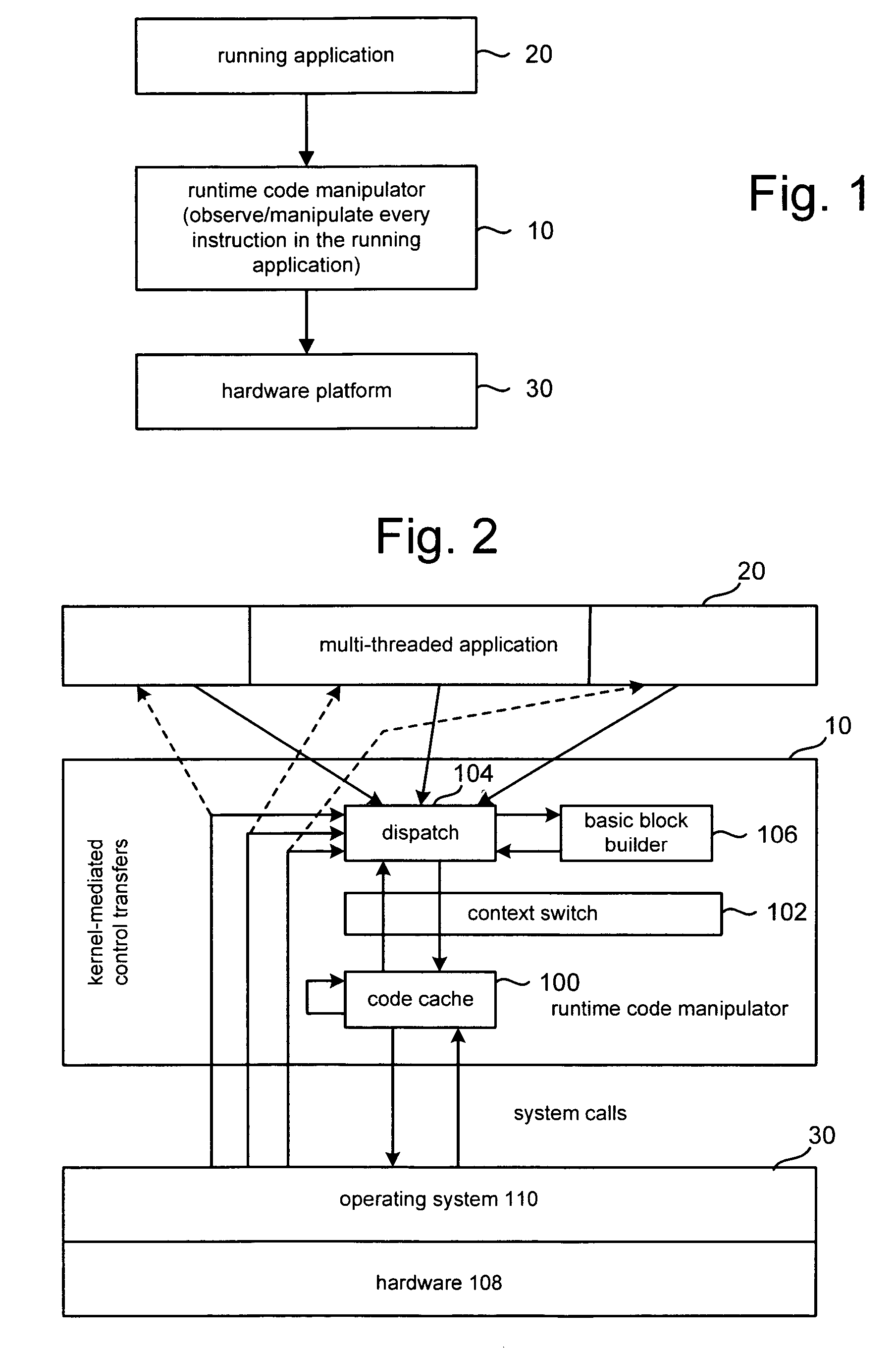

Adaptive cache sizing

Active | US20060190924A1 | Tags: Small size, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Operational system, Working set

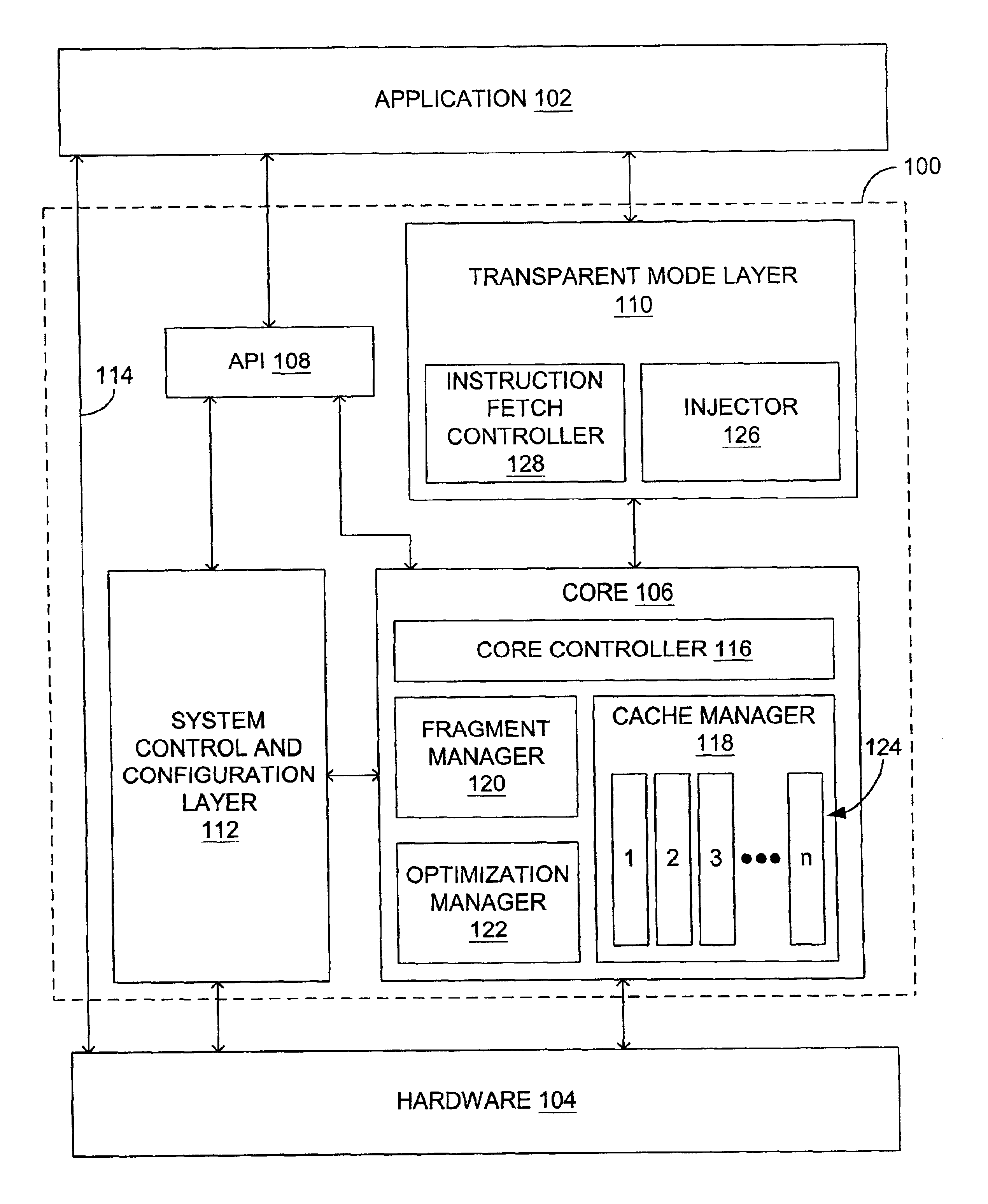

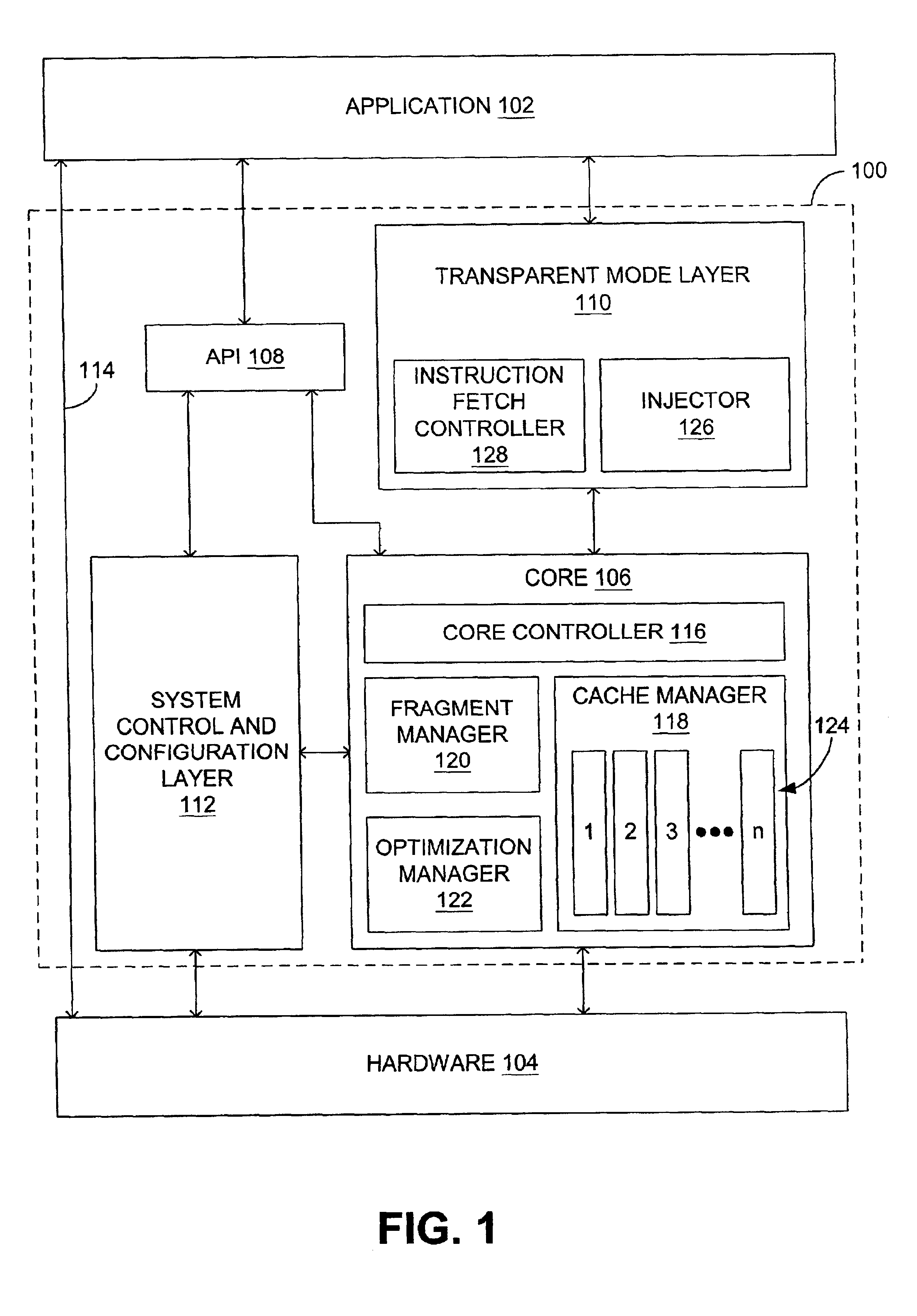

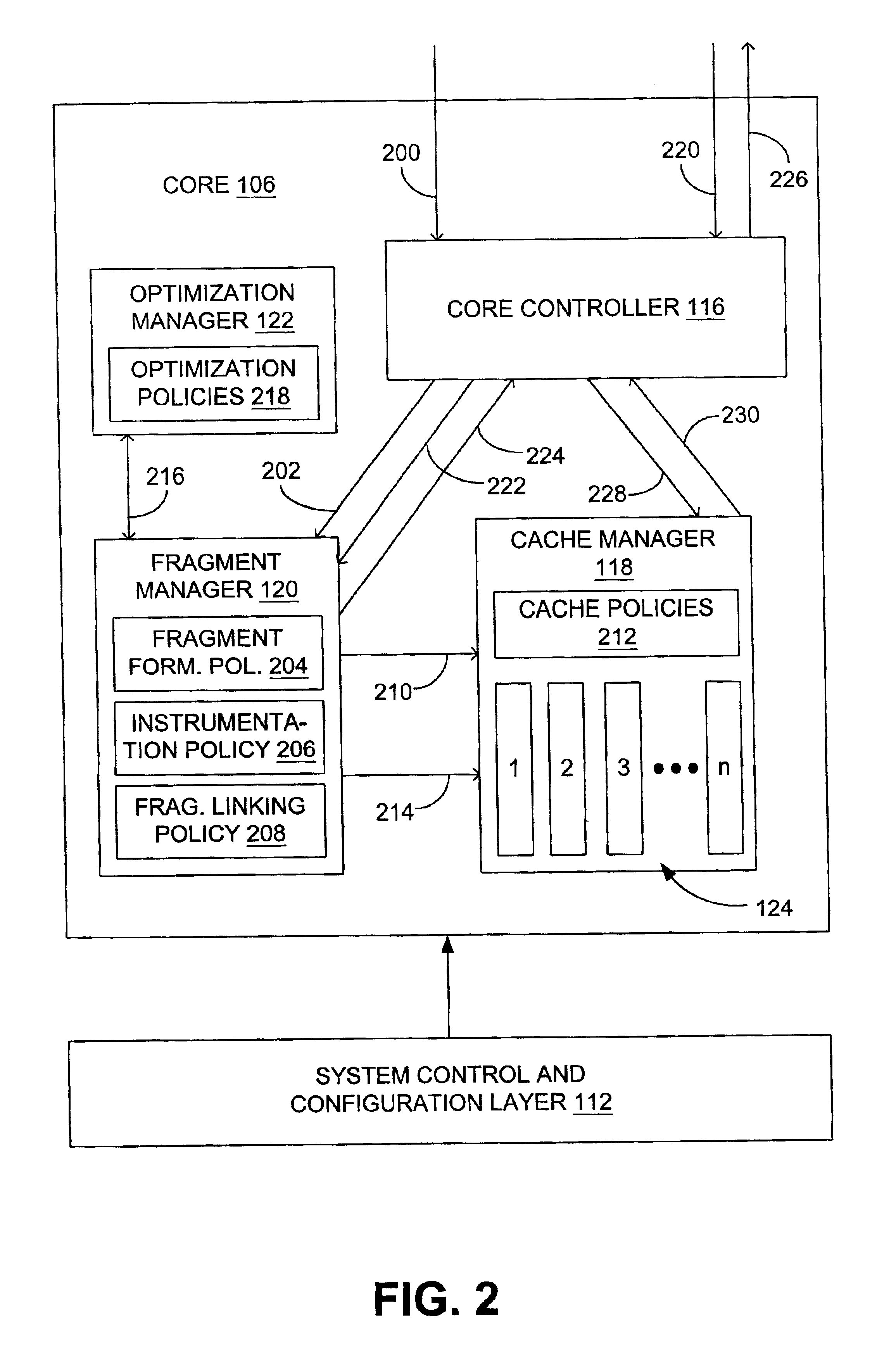

A runtime code manipulation system is provided that supports code transformations on a program while it executes. The runtime code manipulation system uses code caching technology to provide efficient and comprehensive manipulation of an application running on an operating system and hardware. The code cache includes a system for automatically keeping the code cache at an appropriate size for the current working set of the running application.

Owner:MASSACHUSETTS INST OF TECH

Dynamic execution layer interface for replacing instructions requiring unavailable hardware functionality with patch code and caching

Inactive | US6928536B2 | Tags: Runtime instruction translation, General purpose stored program computer, Computer architecture, Program instruction

A system and method for dynamically patching code. In one embodiment, the method intercepts original program instructions during execution of the program using a software interface and determines whether associated instructions have already been cached in a code cache of the software interface. If so, the cached instructions are executed from the code cache. If not, the method determines whether the original program instructions require unavailable hardware functionality and, if they do, dynamically replaces them with replacement instructions that do not require that functionality. The dynamic replacement includes fetching the replacement instructions, storing them in the code cache, and executing them from the code cache.

Owner:VALTRUS INNOVATIONS LTD +1
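
The decision flow in this abstract (cache hit, else check hardware requirements, else patch) maps onto a small lookup routine. The instruction strings, the `popcnt` feature name and the `soft_popcnt_r1` replacement below are all hypothetical placeholders; instructions are modeled as strings rather than machine code.

```python
# Hypothetical feature set and replacement table, for illustration only.
AVAILABLE_FEATURES = {"base"}
REPLACEMENTS = {
    # instruction -> (required hardware feature, equivalent sequence without it)
    "popcnt r1": ("popcnt", ["call soft_popcnt_r1"]),
}


class DynamicPatcher:
    def __init__(self, features=AVAILABLE_FEATURES):
        self.features = set(features)
        self.code_cache = {}   # original instruction address -> cached instructions

    def fetch(self, addr, original):
        """Return the instructions to execute in place of `original` at `addr`."""
        cached = self.code_cache.get(addr)
        if cached is not None:
            return cached                     # hit: run straight from the code cache
        required, patch = REPLACEMENTS.get(original, (None, None))
        if required is not None and required not in self.features:
            code = list(patch)                # needs missing hardware: substitute
        else:
            code = [original]                 # runs as-is on this machine
        self.code_cache[addr] = code          # cache either way for next time
        return code
```

On a machine that does have the feature, the same instruction is cached unchanged, so the patching cost is paid only where it is needed.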

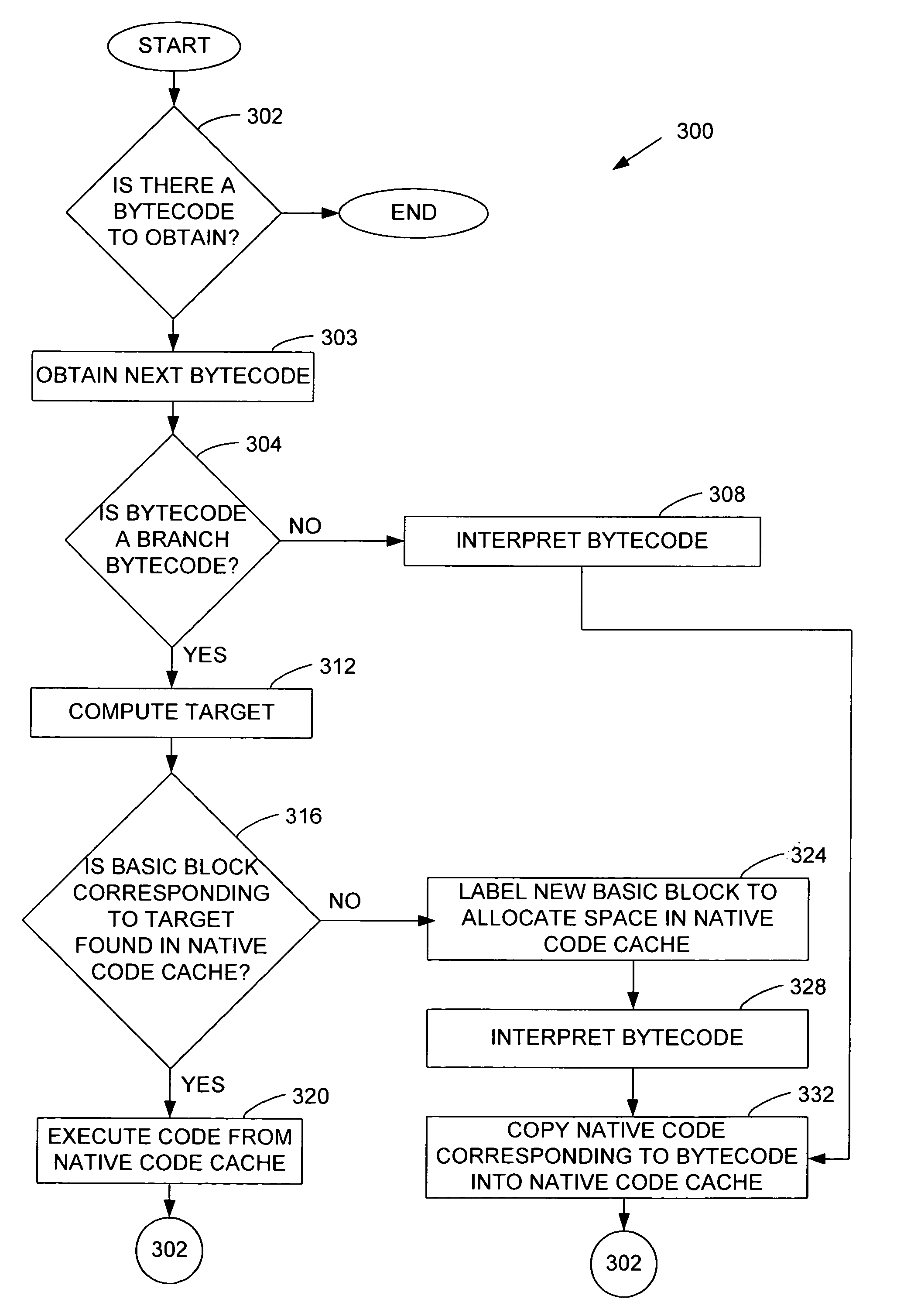

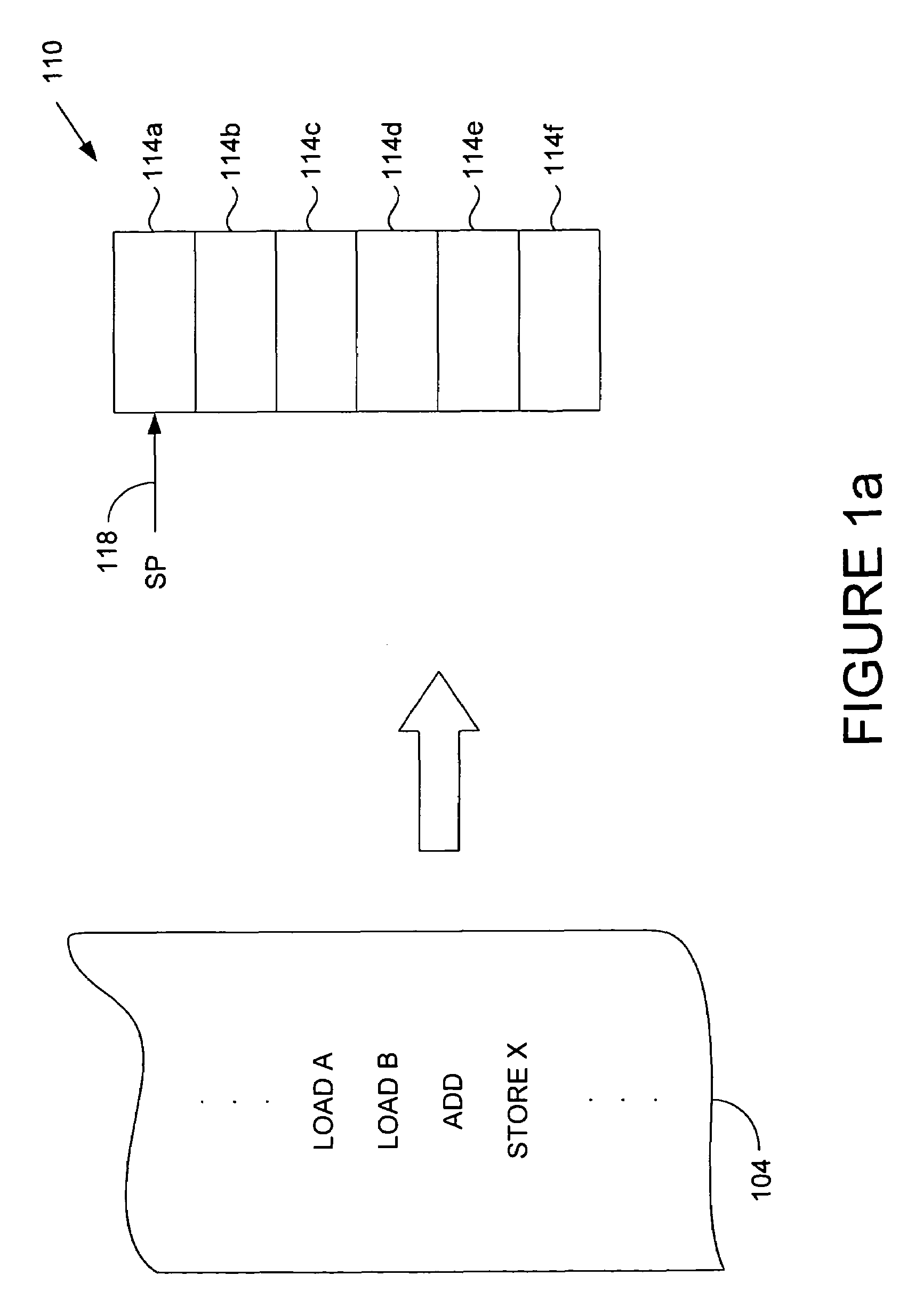

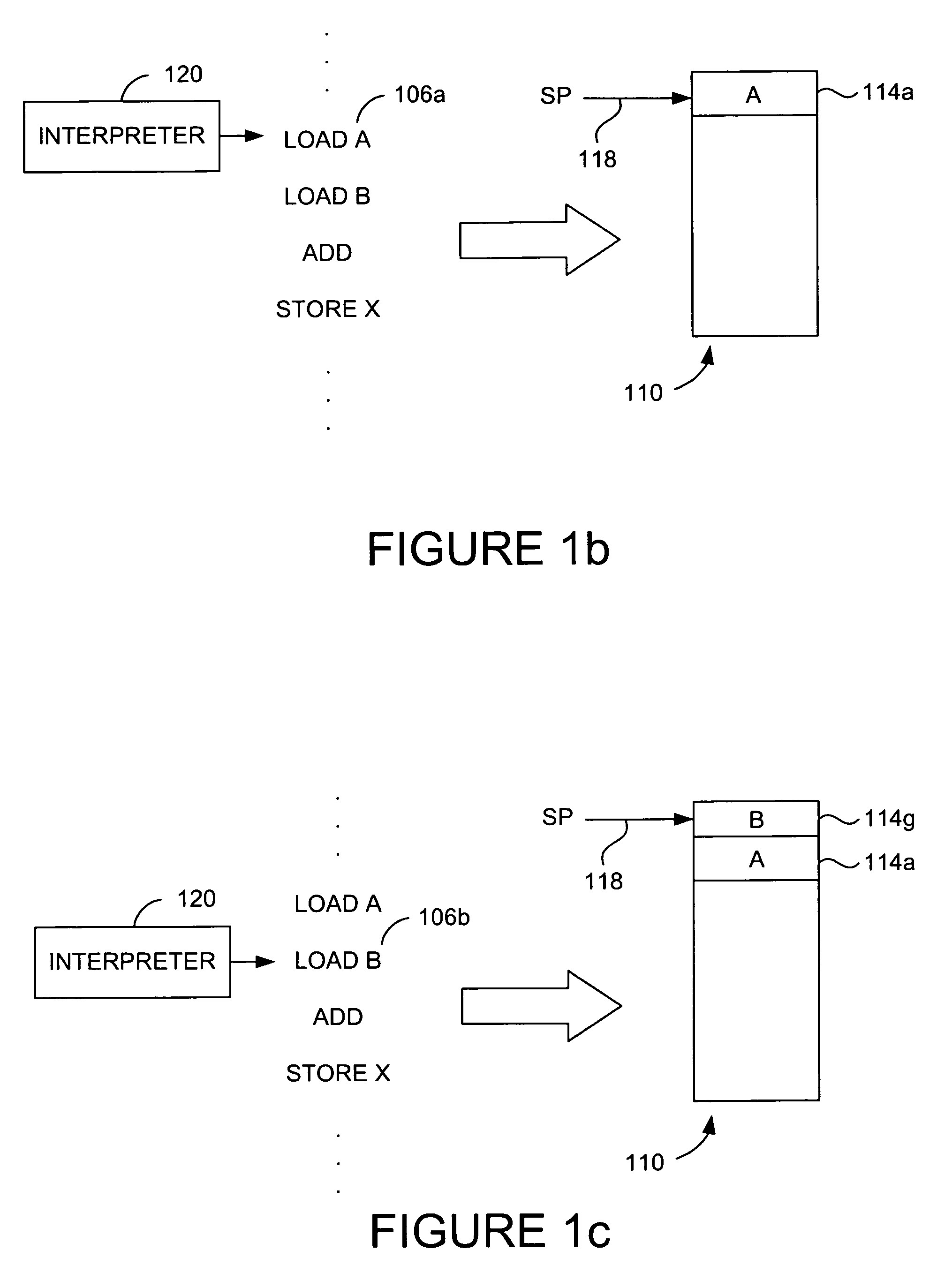

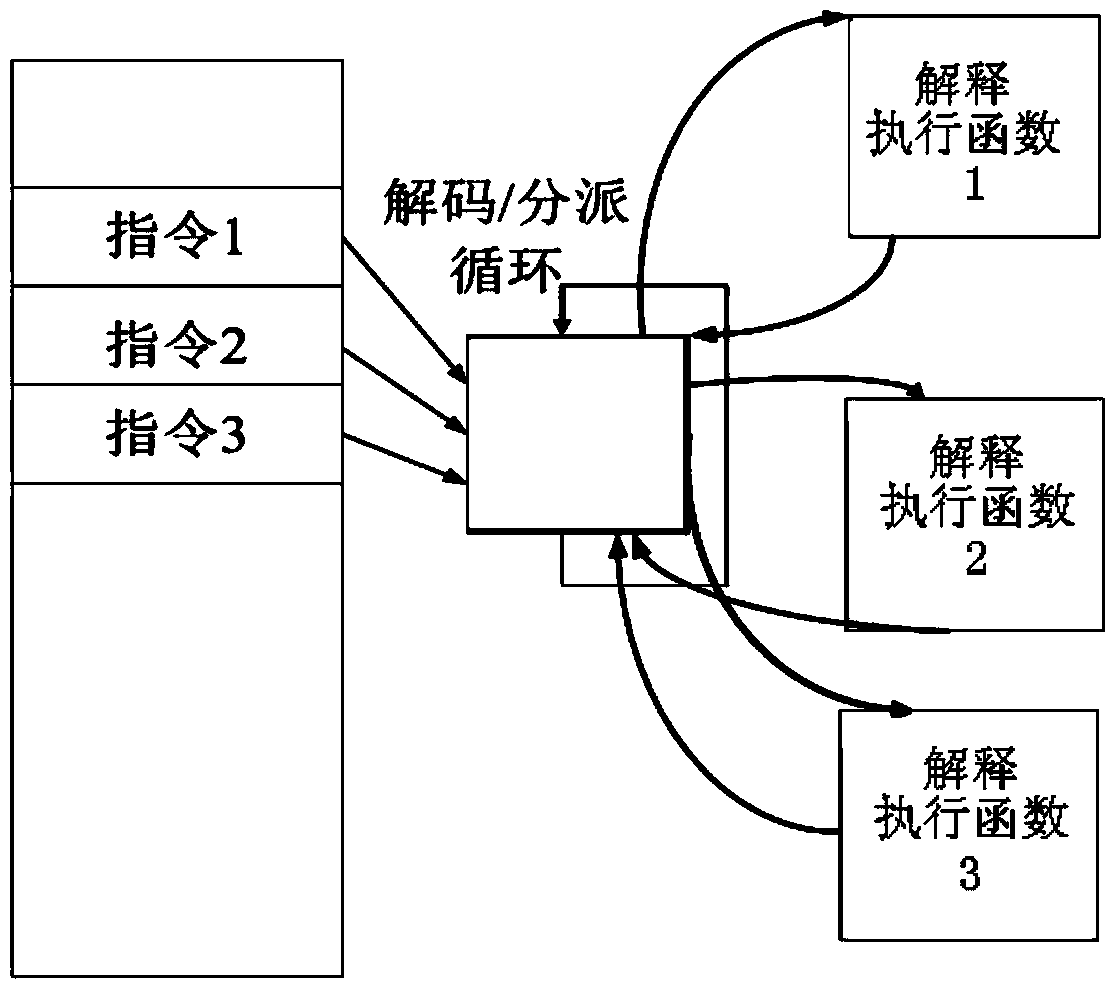

Method and apparatus for caching native code in a virtual machine interpreter

Inactive | US7124407B1 | Tags: Efficient implementation, Improve performance, Software simulation/interpretation/emulation, Memory systems, Program instruction, Basic block

Techniques for increasing the performance of virtual machines are disclosed. It can be determined whether a program instruction which is to be executed by the virtual machine is a branch instruction, and whether a basic block of code is present in a code cache. If so, the basic block of code can be executed. The basic block includes code that can be executed for the program instruction. A cache can be used to store the basic block for program instructions that are executed by the virtual machine. The program instruction may be a bytecode and the code cache can be implemented as a native code cache.

Owner:ORACLE INT CORP
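
A toy version of the idea: the interpreter checks a cache keyed by the address where a basic block starts (the program entry or a branch target) and runs a prebuilt block instead of dispatching instruction by instruction. Here a Python closure stands in for native code, and the four-opcode instruction set (`addi`, `dec`, `jnz`, `halt`) is hypothetical.

```python
def compile_block(program, start):
    """Collect the straight-line run starting at `start` into one callable
    (a stand-in for emitting native code for the basic block)."""
    ops = []
    pc = start
    while True:
        op = program[pc]
        ops.append(op)
        if op[0] in ("jnz", "halt"):   # a branch or halt ends the basic block
            break
        pc += 1
    end = pc

    def block(state):
        for op in ops:
            if op[0] == "addi":
                state["acc"] += op[1]
            elif op[0] == "dec":
                state["cnt"] -= 1
            elif op[0] == "jnz":
                return op[1] if state["cnt"] != 0 else end + 1
            elif op[0] == "halt":
                return None

    return block


def run(program, state, cache):
    pc = 0
    while pc is not None:
        block = cache.get(pc)
        if block is None:              # miss: build the block once and cache it
            block = cache[pc] = compile_block(program, pc)
        pc = block(state)              # hit path: no per-instruction dispatch
    return state
```

For `[("addi", 3), ("dec",), ("jnz", 0), ("halt",)]` with `cnt=4`, the loop body is built once and reused on every iteration, and the cache ends up holding only the blocks at addresses 0 and 3.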

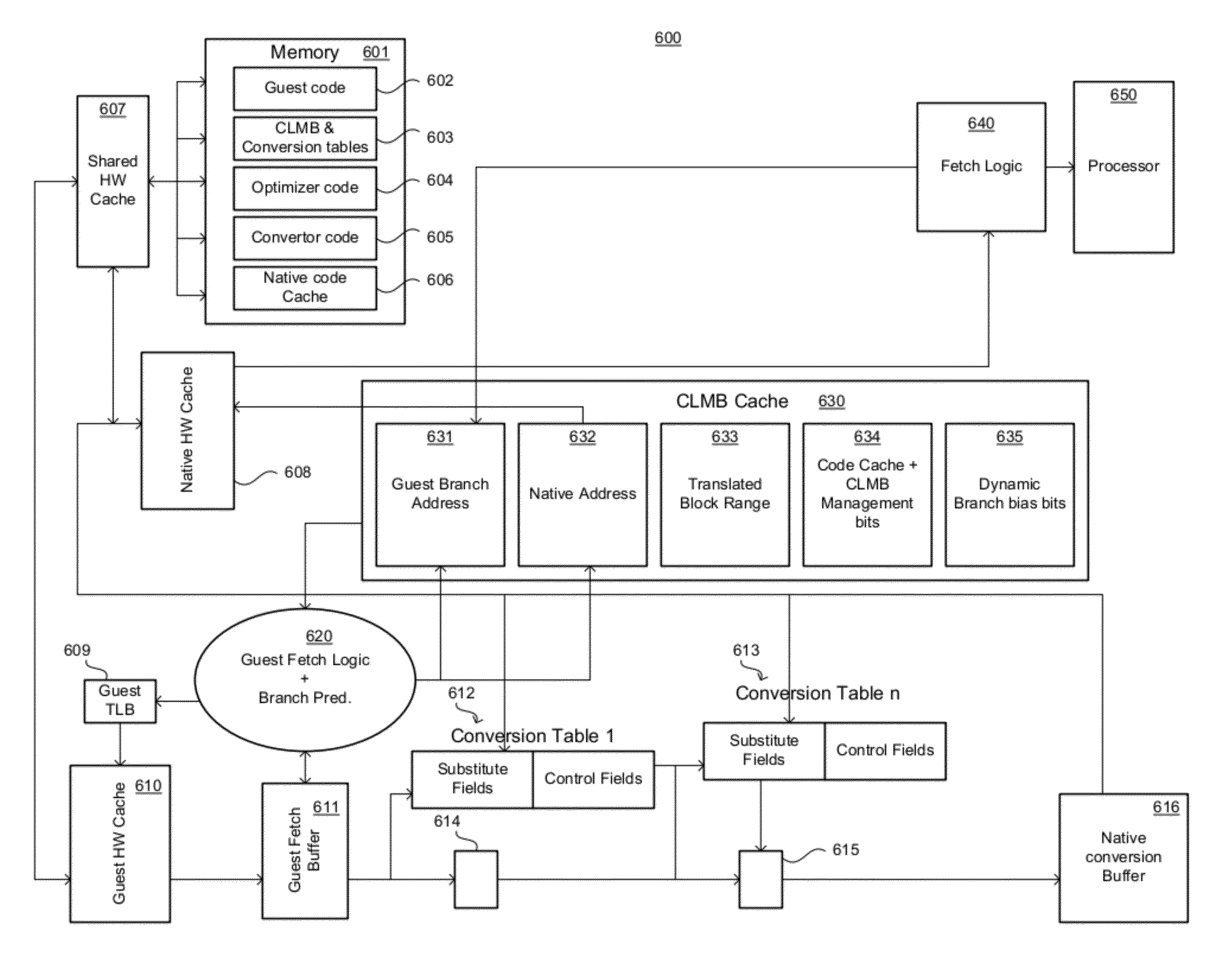

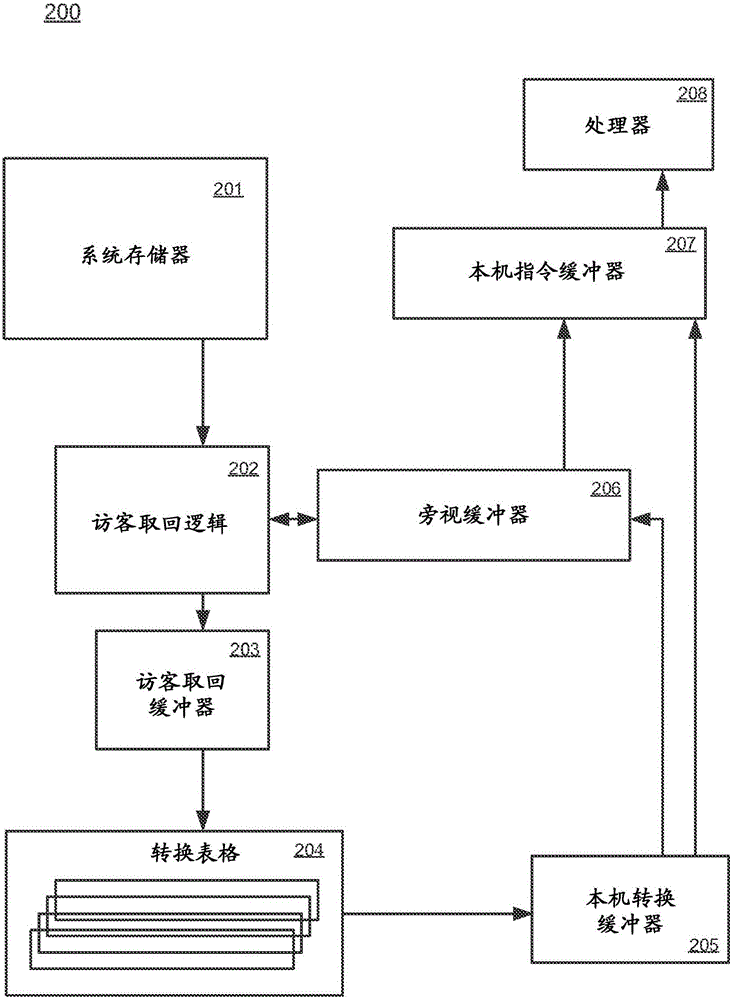

Guest to native block address mappings and management of native code storage

Active | US20120198122A1 | Tags: Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Parallel computing, Address mapping

A method for managing mappings of storage on a code cache for a processor. The method stores a plurality of guest-address-to-native-address mappings as entries in a conversion lookaside buffer; each entry indicates a guest address whose converted native code is stored in code cache memory. When a subsequent request for a guest address is received at the conversion lookaside buffer, the buffer is indexed to determine whether an entry corresponds to the index, where the index comprises a tag and an offset used to identify that entry. On a hit on the tag, the corresponding entry is accessed to retrieve a pointer to the corresponding block of converted native instructions in code cache memory, and that block is fetched from code cache memory for execution.

Owner:INTEL CORP
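
A sketch of a guest-to-native lookup buffer, assuming a conventional direct-mapped layout: low offset bits are ignored, the next bits select an entry, and the remaining high bits form the tag compared on lookup. The field widths are hypothetical, and the patent's exact tag/offset layout may differ.

```python
OFFSET_BITS = 4   # hypothetical: guest blocks assumed 16-byte aligned
INDEX_BITS = 6    # hypothetical: 64-entry direct-mapped buffer


class ConversionLookasideBuffer:
    def __init__(self):
        self.entries = [None] * (1 << INDEX_BITS)   # each: (tag, native_pointer)

    @staticmethod
    def split(guest_addr):
        index = (guest_addr >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
        tag = guest_addr >> (OFFSET_BITS + INDEX_BITS)
        return index, tag

    def insert(self, guest_addr, native_ptr):
        index, tag = self.split(guest_addr)
        self.entries[index] = (tag, native_ptr)     # evicts any previous occupant

    def lookup(self, guest_addr):
        """Return the code-cache pointer on a tag hit, or None on a miss."""
        index, tag = self.split(guest_addr)
        entry = self.entries[index]
        if entry is not None and entry[0] == tag:
            return entry[1]
        return None
```

A miss (`None`) is where the runtime would fall back to converting the guest block and inserting a new mapping.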

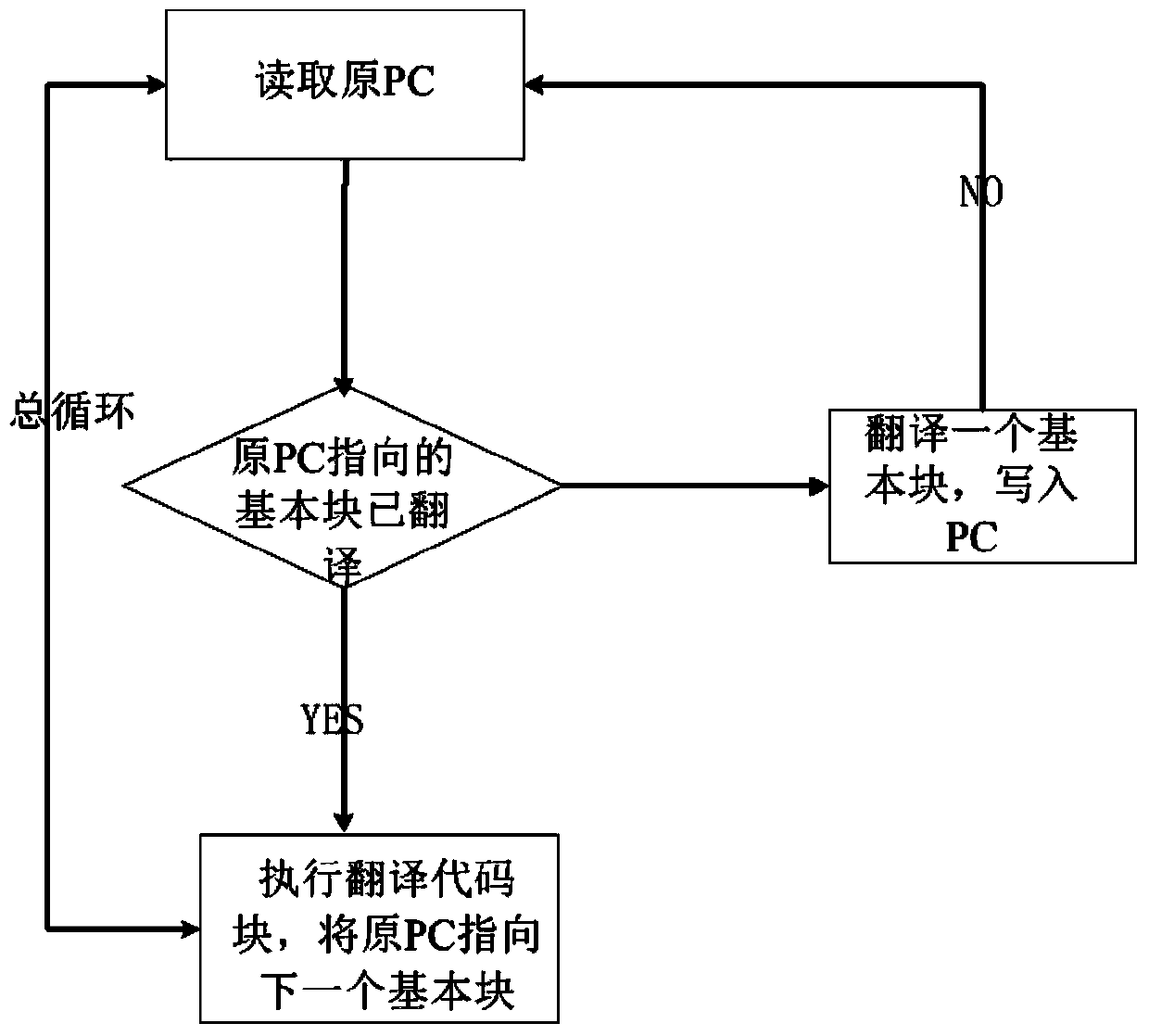

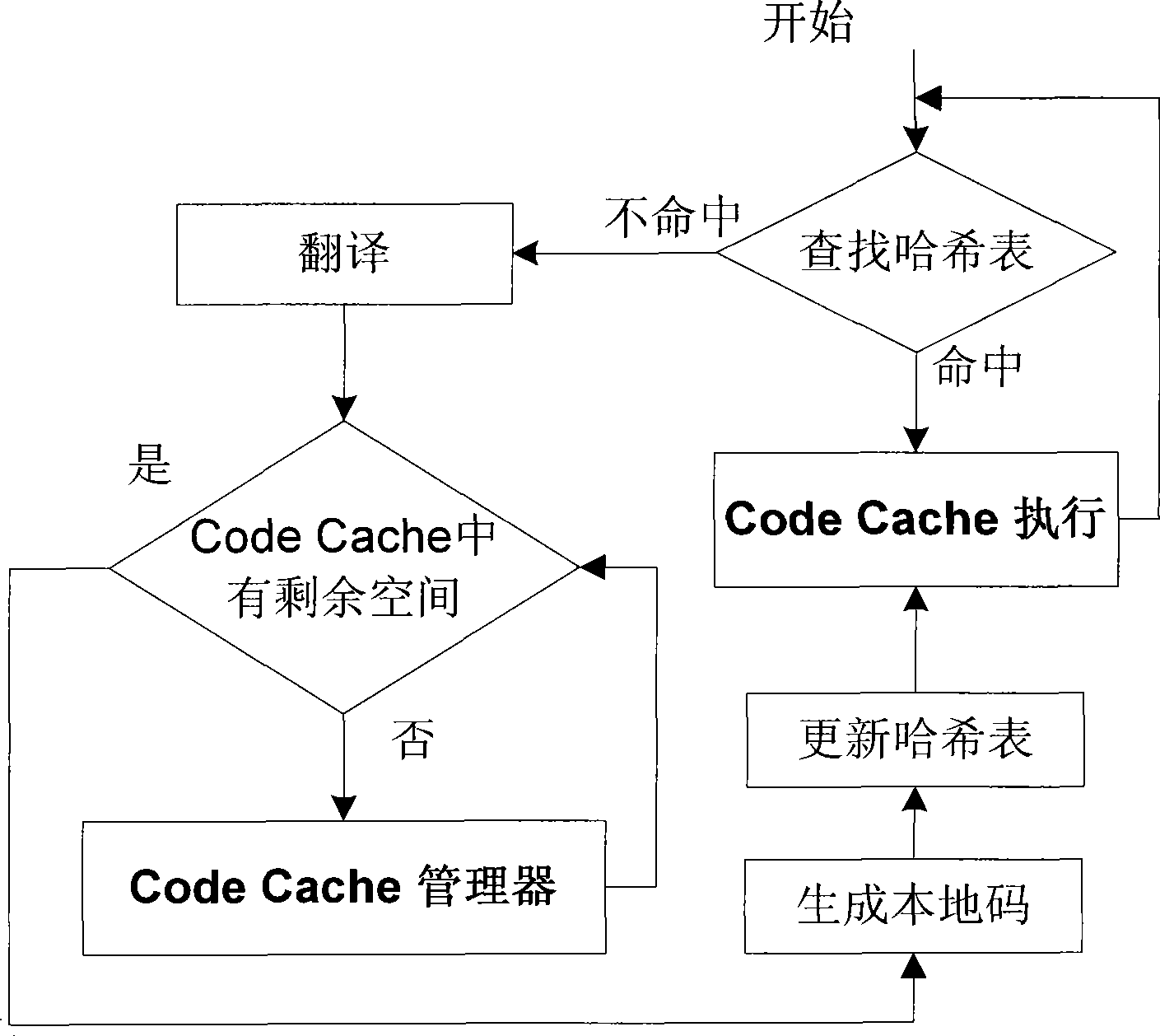

Multi-core multi-threading construction method for hot path in dynamic binary translator

Inactive | CN101477472A | Tags: Improve stability, Improve efficiency, Multiprogramming arrangements, Memory systems, Object code, Dual code

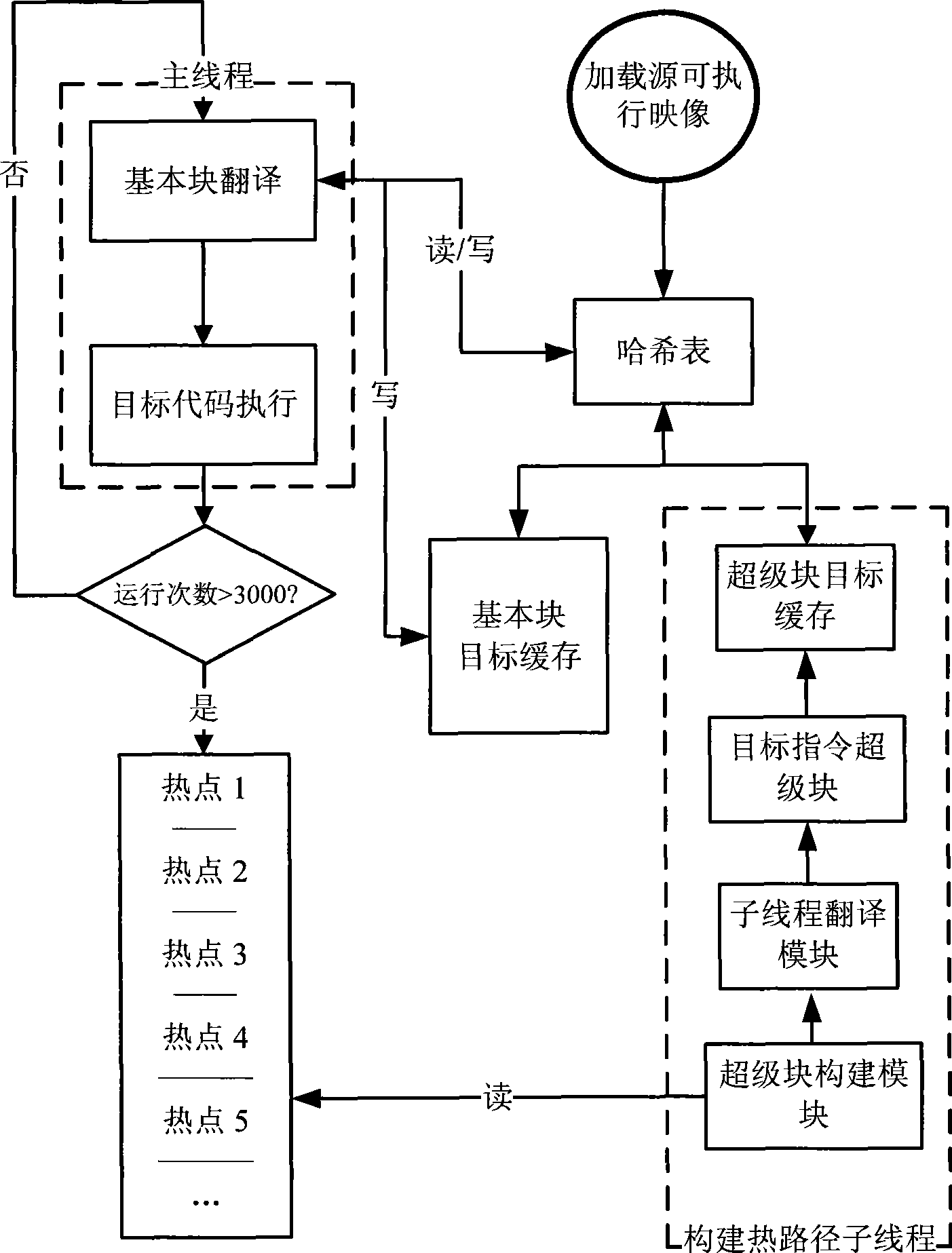

The invention discloses a multi-core, multi-threaded method for constructing hot paths in a dynamic binary translator. Basic-block translation and target-code execution run as the main thread, while hot-path construction and superblock translation run as a sub-thread. The translator's code cache is adapted to a dual code cache design, and both caches are managed uniformly through a hash table, so that the main thread and the sub-thread can query and update data in parallel. Using hard affinity, the main thread and the sub-thread are assigned to different cores of a multi-core processor, and a contiguous segment of memory together with two counters simulates a queue through which the threads communicate at both the machine-language and high-level-language levels. The method offers high parallelism and low synchronization overhead, and provides new ideas and a new framework for future optimization of dynamic binary translators.

Owner:SHANGHAI JIAO TONG UNIV
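
"A continuous segment of memory space and two counters" describes a single-producer/single-consumer ring buffer. A minimal sketch follows, assuming the main thread produces work items (for example hot-path start addresses) and the sub-thread consumes them. In native code the counter updates would also need acquire/release ordering, which this Python model does not show.

```python
class SpscRing:
    """Single-producer/single-consumer queue: one fixed array plus two
    monotonically increasing counters. Only the producer writes `tail` and
    only the consumer writes `head`, so neither side takes a lock."""

    def __init__(self, capacity):
        self.buf = [None] * capacity
        self.capacity = capacity
        self.head = 0   # next slot to read  (owned by the consumer)
        self.tail = 0   # next slot to write (owned by the producer)

    def push(self, item):
        if self.tail - self.head == self.capacity:
            return False                      # full: producer retries later
        self.buf[self.tail % self.capacity] = item
        self.tail += 1                        # publish only after the slot is written
        return True

    def pop(self):
        if self.head == self.tail:
            return None                       # empty
        item = self.buf[self.head % self.capacity]
        self.head += 1
        return item
```

Because each counter has exactly one writer, the two threads never contend on the same variable, which is consistent with the low synchronization overhead claimed above.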

Thread-shared software code caches

Active | US8402224B2 | Tags: Avoiding brute-force all-thread-suspension, Avoiding monolithic global locks, Memory addressing/allocation/relocation, Multiprogramming arrangements, Brute force, Timestamp

A runtime system using thread-shared code caches is provided which avoids brute-force all-thread-suspension and monolithic global locks. In one embodiment, medium-grained runtime system synchronization reduces lock contention. The system includes trace building that combines efficient private construction with shared results, in-cache lock-free lookup table access in the presence of entry invalidations, and a delayed deletion algorithm based on timestamps and reference counts. These enable reductions in memory usage and performance overhead.

Owner:VMWARE INC

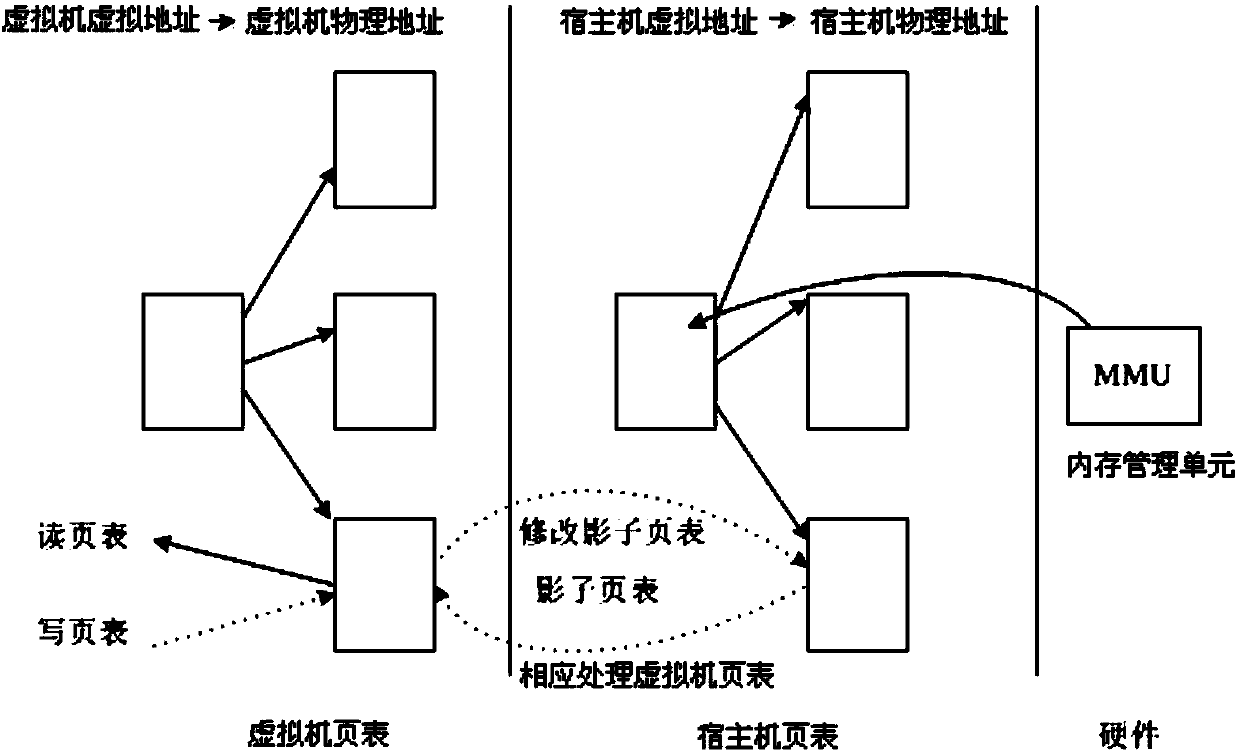

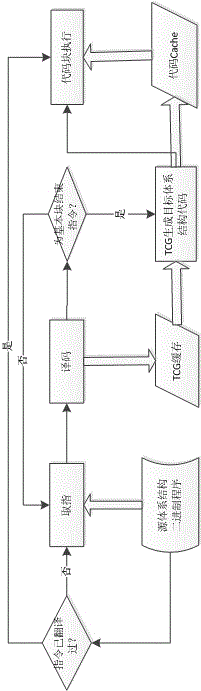

Platform virtualization system

Inactive | CN103793260A | Tags: Ease system deployment, Relieve pressure, Software simulation/interpretation/emulation, Code Translation, Memory virtualization

The invention relates to a platform virtualization system comprising a CPU simulator, a memory virtualization module, and an external device virtualization module. The CPU simulator reads x86 instructions and determines whether the containing basic block has already been translated. Translation is performed by a binary translator comprising a translation engine and an execution engine: the translation engine translates x86 code into Loongson platform code, and the execution engine prepares the execution context for the Loongson code, locates the Loongson code corresponding to the x86 code in a Loongson platform code cache, and executes it. The memory virtualization module uses shadow page tables. The external device virtualization module builds a device model for each external device, and the x86 virtual machine discovers and accesses the devices by interacting with these models. The system lets information systems that do not yet support the domestic Loongson hardware platform run in the domestic software and hardware environment in virtualized form, easing the transition between old and new technical systems as information systems are upgraded.

Owner:INST OF CHINA ELECTRONICS SYST ENG CO +1
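
The shadow page-table method named in this abstract composes two mappings into the one the hardware actually walks. The sketch below works at page granularity with dicts, assuming 4 KiB pages. A real VMM must also write-protect the guest's page tables to keep the shadow in sync, which is omitted here.

```python
PAGE = 4096   # assumed page size


def build_shadow(guest_pt, p2m):
    """Compose guest-virtual -> guest-physical (the guest's page table) with
    guest-physical -> host-physical (the VMM's map) into the shadow table:
    guest-virtual page -> host-physical page."""
    shadow = {}
    for gvpn, gppn in guest_pt.items():
        if gppn in p2m:                 # unbacked guest frames stay unmapped
            shadow[gvpn] = p2m[gppn]
    return shadow


def translate(shadow, gva):
    vpn, offset = divmod(gva, PAGE)
    if vpn not in shadow:
        raise LookupError(f"shadow miss at {gva:#x}: the VMM must handle the fault")
    return shadow[vpn] * PAGE + offset
```

With the shadow table installed, guest loads and stores translate in one step, without the VMM intervening on every access.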

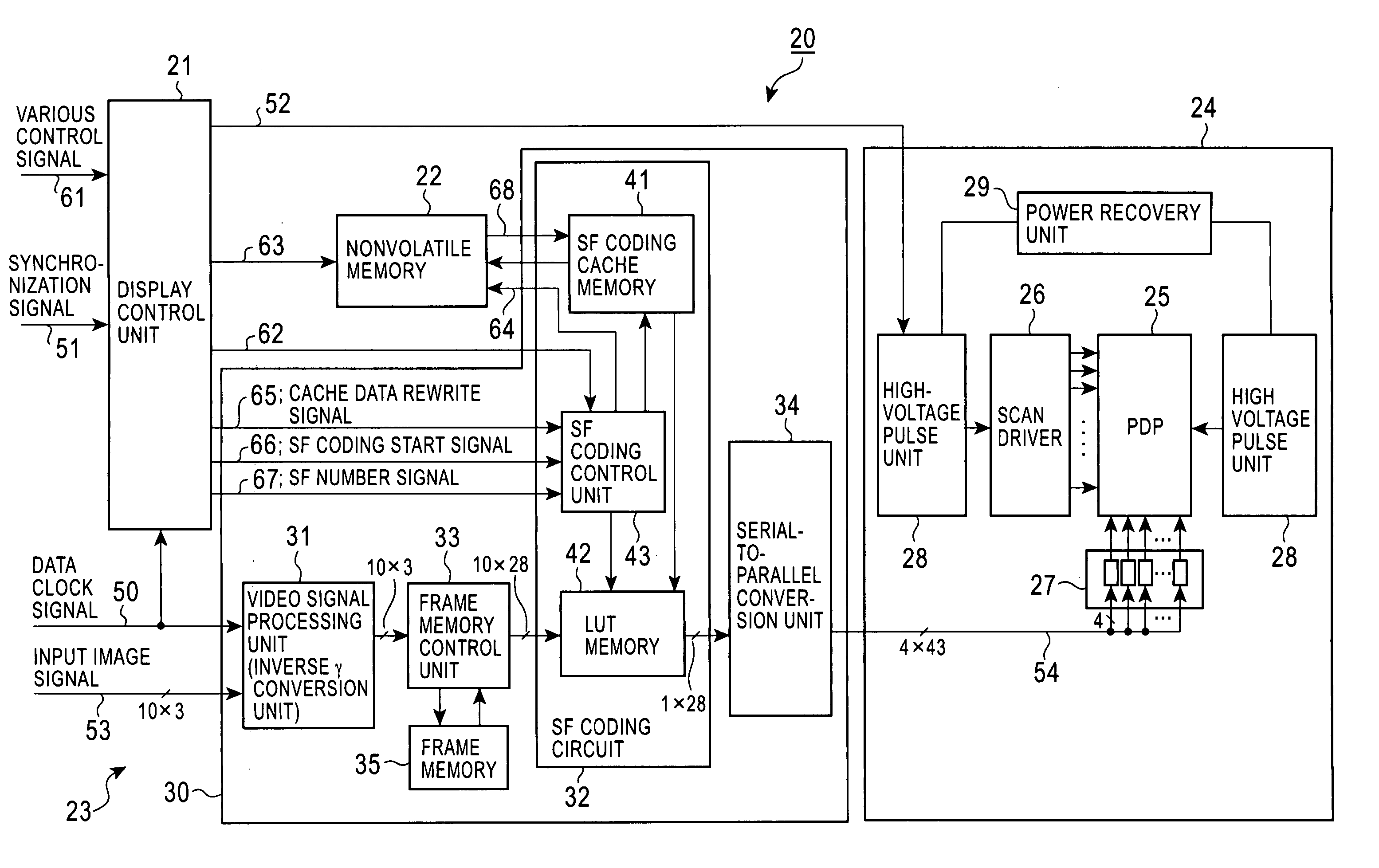

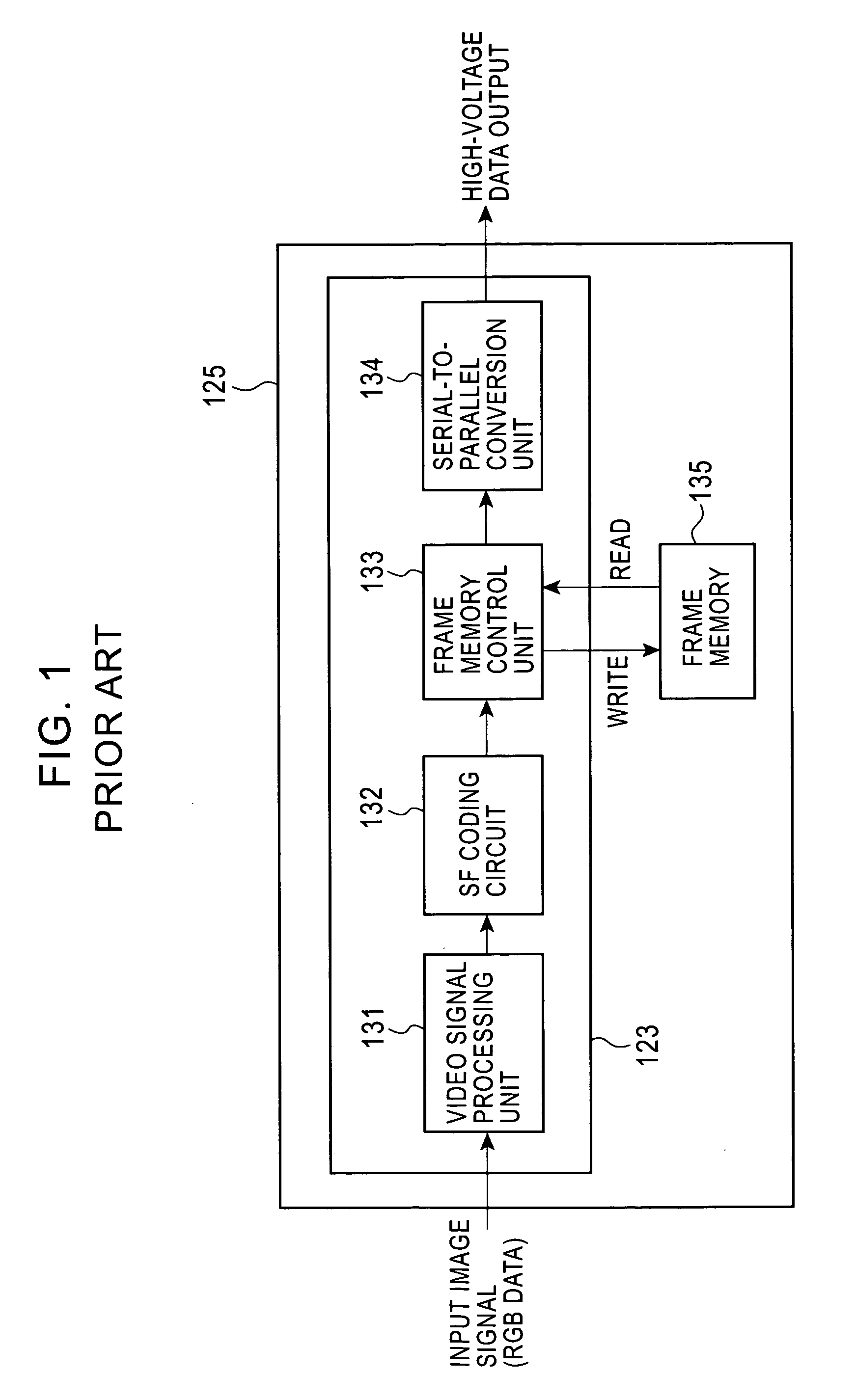

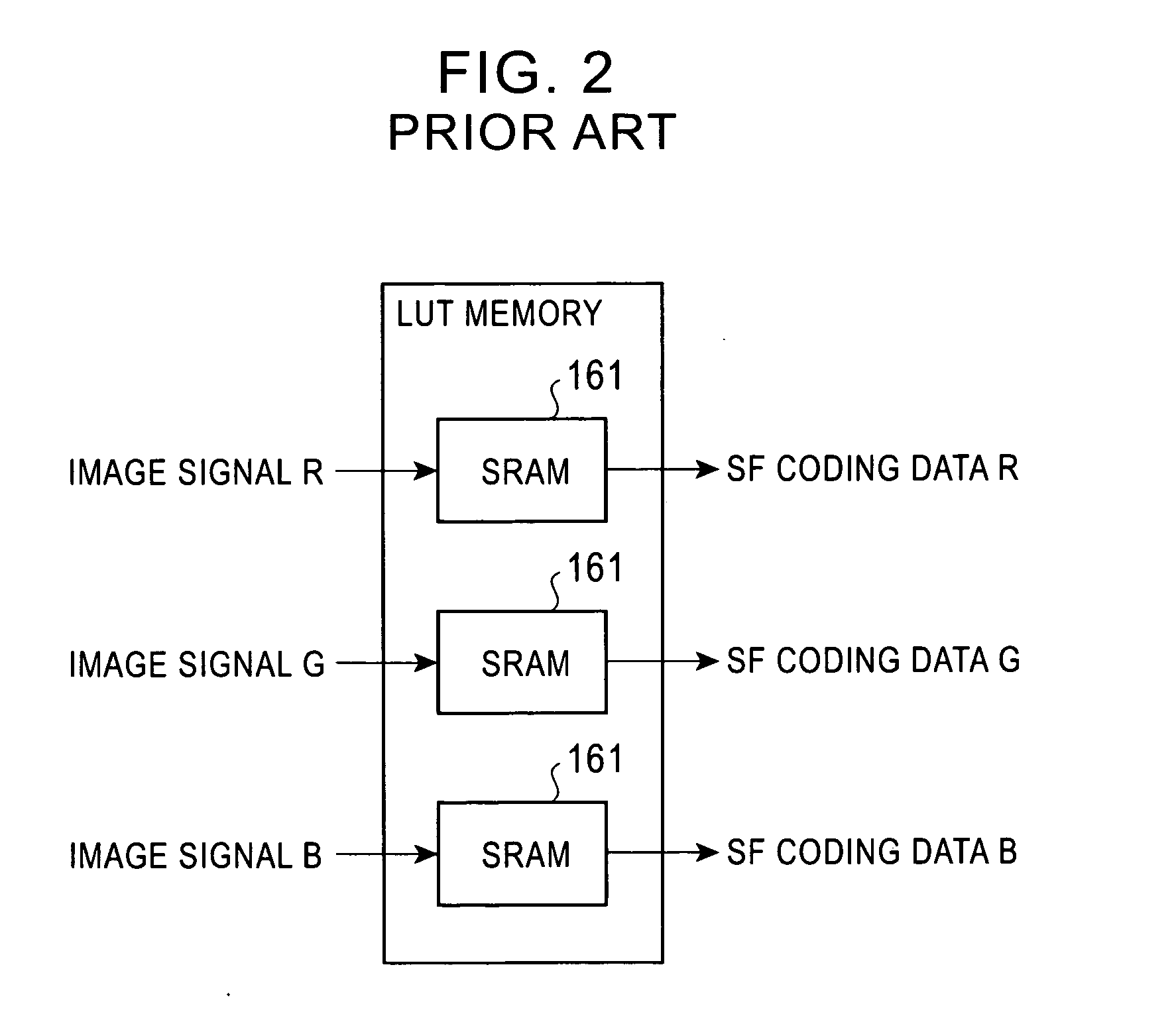

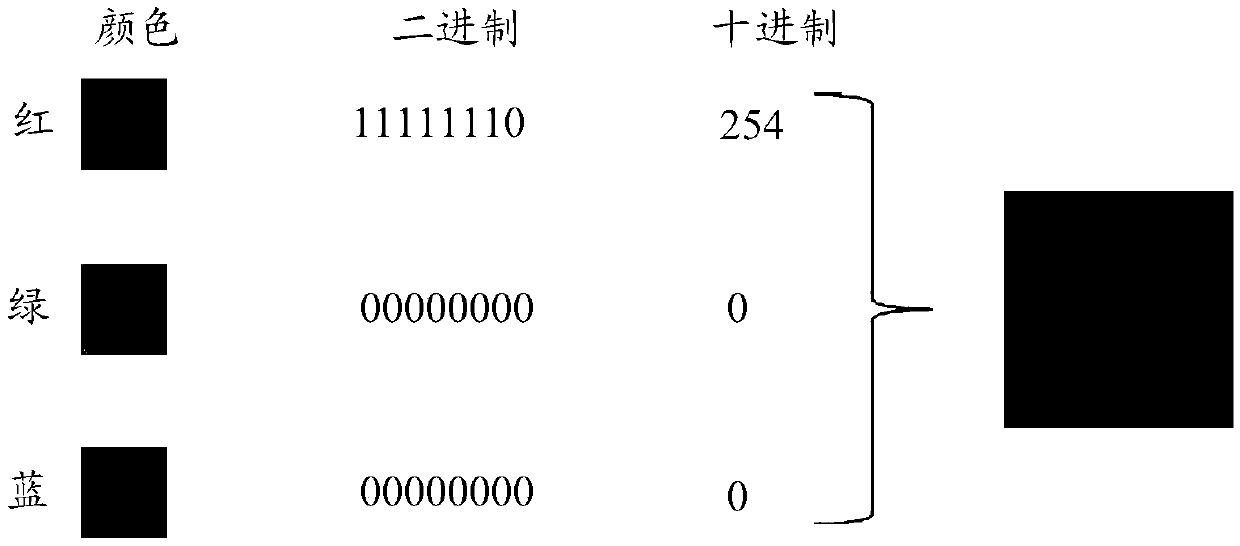

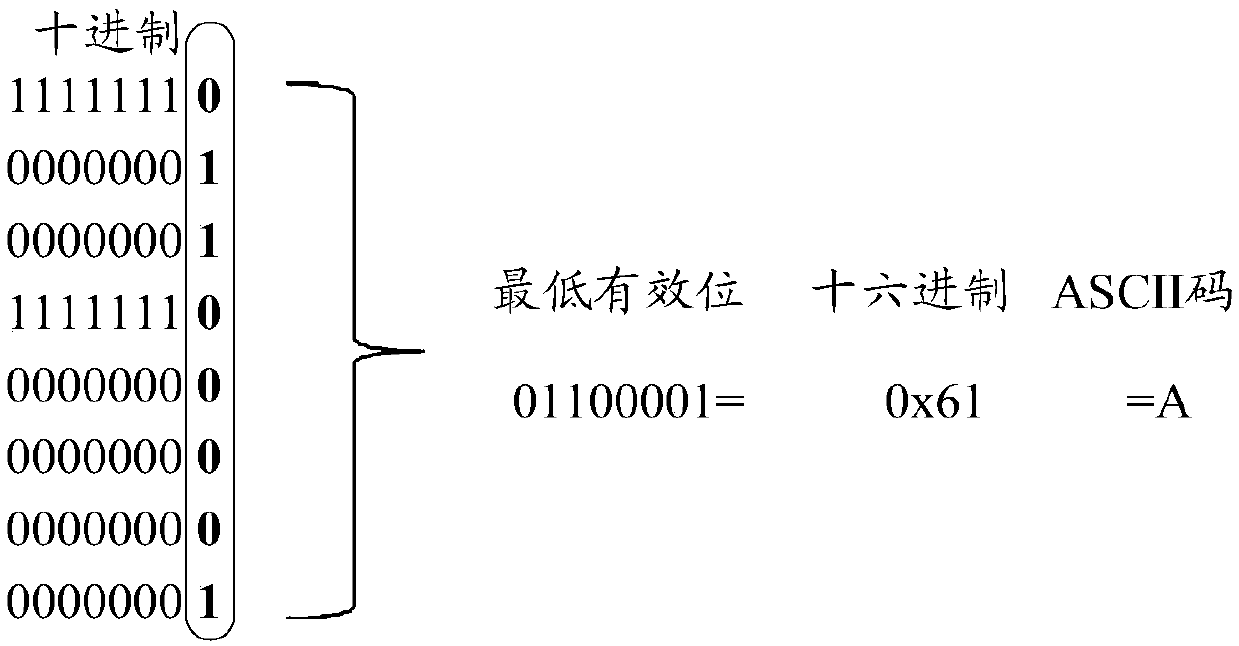

Subfield coding circuit, image signal processing circuit, and plasma display

Inactive | US20050190124A1 | Tags: Reduce memory capacity, Guaranteed high speed operation, Television system details, Static indicating devices, Signal processing circuits, Image signal

A subfield (SF) coding circuit including an SF coding cache memory, a look-up table (LUT) memory, and an SF coding control unit. The coding control unit reads setting gradation values and SF coding data from the coding cache memory for writing to the LUT memory SF by SF. The control unit accesses the LUT memory by using the gradation value of an image signal from a frame memory control unit as an address, and outputs the SF coding data corresponding to the gradation value of the image signal input to the LUT memory to a serial-to-parallel conversion unit.

Owner:PIONEER CORP
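
The LUT scheme can be sketched as "load one subfield at a time from the cache, then address the LUT with each pixel's gradation value". For simplicity the sketch assumes 8 binary-weighted subfields, so a gradation's SF coding is just its bit pattern. Real plasma panels typically use other weightings, so `SF_WEIGHTS` and the cache contents are illustrative assumptions only.

```python
SF_WEIGHTS = [1, 2, 4, 8, 16, 32, 64, 128]   # hypothetical binary-weighted subfields


def sf_coding_cache():
    """Stand-in for the SF coding cache memory: (gradation, SF coding) pairs."""
    n = len(SF_WEIGHTS)
    return [(g, tuple((g >> i) & 1 for i in range(n))) for g in range(256)]


def load_lut(cache_entries):
    """Write the LUT one subfield at a time: lut[sf][gradation] -> light on/off."""
    lut = [[0] * 256 for _ in SF_WEIGHTS]
    for sf in range(len(SF_WEIGHTS)):
        for gradation, code in cache_entries:
            lut[sf][gradation] = code[sf]
    return lut


def encode_pixel(lut, gradation):
    """Use the pixel's gradation value as the LUT address for every subfield."""
    return tuple(lut[sf][gradation] for sf in range(len(SF_WEIGHTS)))
```

The per-subfield output bits are what would then be handed to the serial-to-parallel conversion stage.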

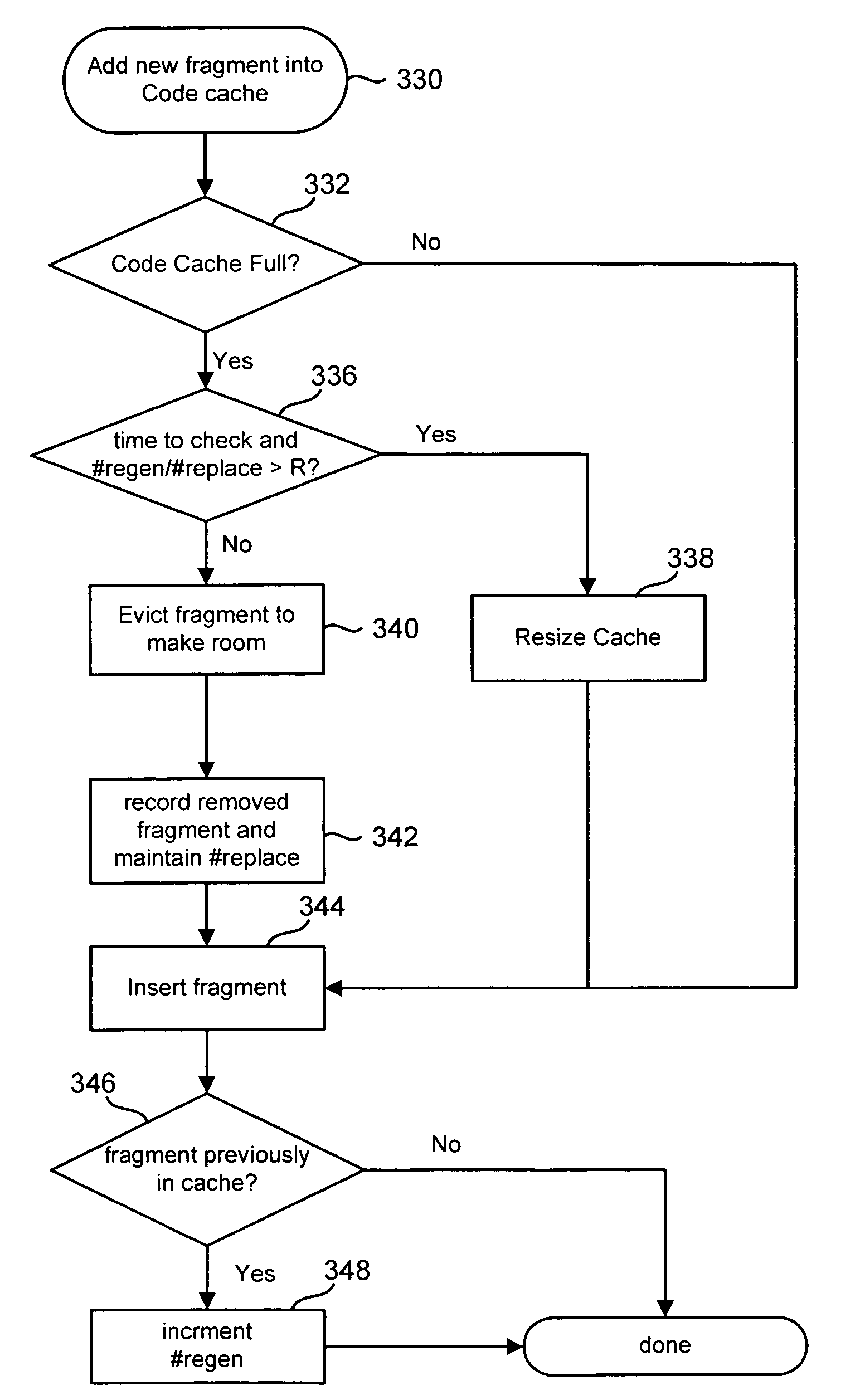

Adaptive cache sizing based on monitoring of regenerated and replaced cache entries

Active | US7478218B2 | Tags: Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Operational system, Working set

A runtime code manipulation system is provided that supports code transformations on a program while it executes. The runtime code manipulation system uses code caching technology to provide efficient and comprehensive manipulation of an application running on an operating system and hardware. The code cache includes a system for automatically keeping the code cache at an appropriate size for the current working set of the running application.

Owner:MASSACHUSETTS INST OF TECH
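
As the title indicates, sizing is driven by comparing regenerated entries (ones evicted and then needed again) with replaced entries. A minimal sketch of that feedback loop, assuming FIFO eviction, a hypothetical window of replacements between checks, a hypothetical ratio threshold, and doubling as the growth step:

```python
from collections import OrderedDict


class AdaptiveCodeCache:
    def __init__(self, capacity=4, ratio_threshold=0.5, window=8):
        self.capacity = capacity
        self.ratio_threshold = ratio_threshold   # hypothetical tuning knobs
        self.window = window
        self.entries = OrderedDict()   # insertion order doubles as FIFO order
        self.evicted = set()           # keys that were replaced earlier
        self.replaced = 0
        self.regenerated = 0

    def get_or_translate(self, addr, translate):
        if addr in self.entries:
            return self.entries[addr]
        if addr in self.evicted:       # evicted earlier, needed again: regenerated
            self.regenerated += 1
            self.evicted.discard(addr)
        if len(self.entries) >= self.capacity:
            old, _ = self.entries.popitem(last=False)
            self.evicted.add(old)
            self.replaced += 1
            if self.replaced == self.window:
                self._resize()
        code = self.entries[addr] = translate(addr)
        return code

    def _resize(self):
        # Many regenerations per replacement means the working set does not fit.
        if self.regenerated / self.replaced > self.ratio_threshold:
            self.capacity *= 2
        self.replaced = self.regenerated = 0
```

Cycling through six block addresses with room for four makes almost every replacement a future regeneration, so the cache doubles once and then stops missing. A workload whose evicted entries never return leaves the capacity unchanged.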

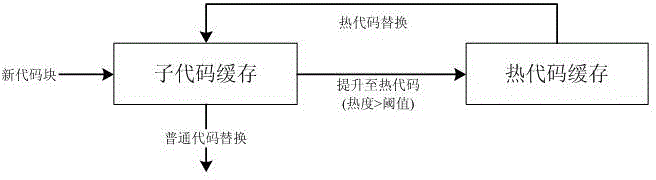

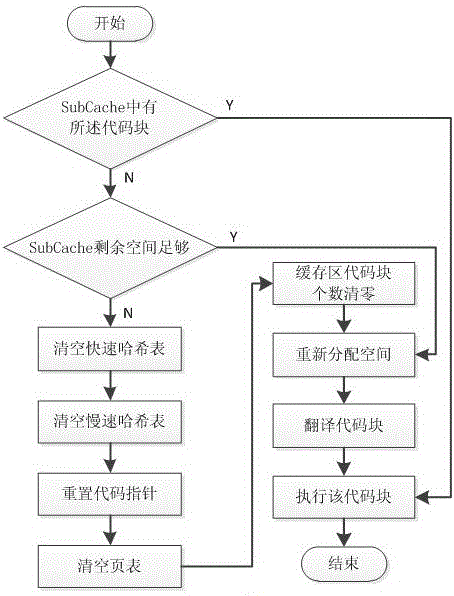

TransCache management method based on code hotness in dynamic binary translation

Inactive | CN105843664A | Tags: Improve hit rate, Extended retention time, Binary to binary, Program control, Coding block, Basic block

The present invention provides a translation cache management method based on code hotness in dynamic binary translation. The entire translation cache is divided into two levels: a hot code cache and a sub-code cache. When a new basic block is to be translated, the method looks up the code block in the hot code cache by the basic block's address, and then in the sub-code cache; calculates the space required to cache the code block and clears the sub-code cache when that space is not available; translates the basic block and stores the translated code block in the sub-code cache; and executes the code block. The number of executions of each code block is counted, and a code block whose execution count exceeds a threshold is cached into the hot code cache. The basic block is added to the slow hash table, and the result is added to the fast hash table.

Owner:COMP APPL RES INST CHINA ACAD OF ENG PHYSICS
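
The two-level flow (check hot cache, check sub-cache, flush the sub-cache when space runs out, count executions, promote past a threshold) can be sketched as follows. Translated blocks are modeled as `bytes` so their size is `len(block)`. The capacities and threshold are hypothetical, and the slow/fast hash tables are not modeled.

```python
class TwoLevelTransCache:
    def __init__(self, sub_capacity_bytes=64, hot_threshold=3):
        self.hot = {}          # promoted blocks; never flushed in this sketch
        self.sub = {}          # addr -> translated block (bytes)
        self.sub_used = 0
        self.sub_capacity = sub_capacity_bytes
        self.hot_threshold = hot_threshold
        self.exec_count = {}

    def lookup_or_translate(self, addr, translate):
        if addr in self.hot:
            return self.hot[addr]
        if addr in self.sub:
            block = self.sub[addr]
        else:
            block = translate(addr)
            if self.sub_used + len(block) > self.sub_capacity:
                self.sub.clear()       # not enough room: flush the whole sub-cache
                self.sub_used = 0
            self.sub[addr] = block
            self.sub_used += len(block)
        self.exec_count[addr] = self.exec_count.get(addr, 0) + 1
        if self.exec_count[addr] > self.hot_threshold:   # promote hot code
            self.hot[addr] = self.sub.pop(addr)
            self.sub_used -= len(block)
        return block
```

Hot blocks survive sub-cache flushes, which is how the design extends their retention time while cold code is discarded wholesale.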

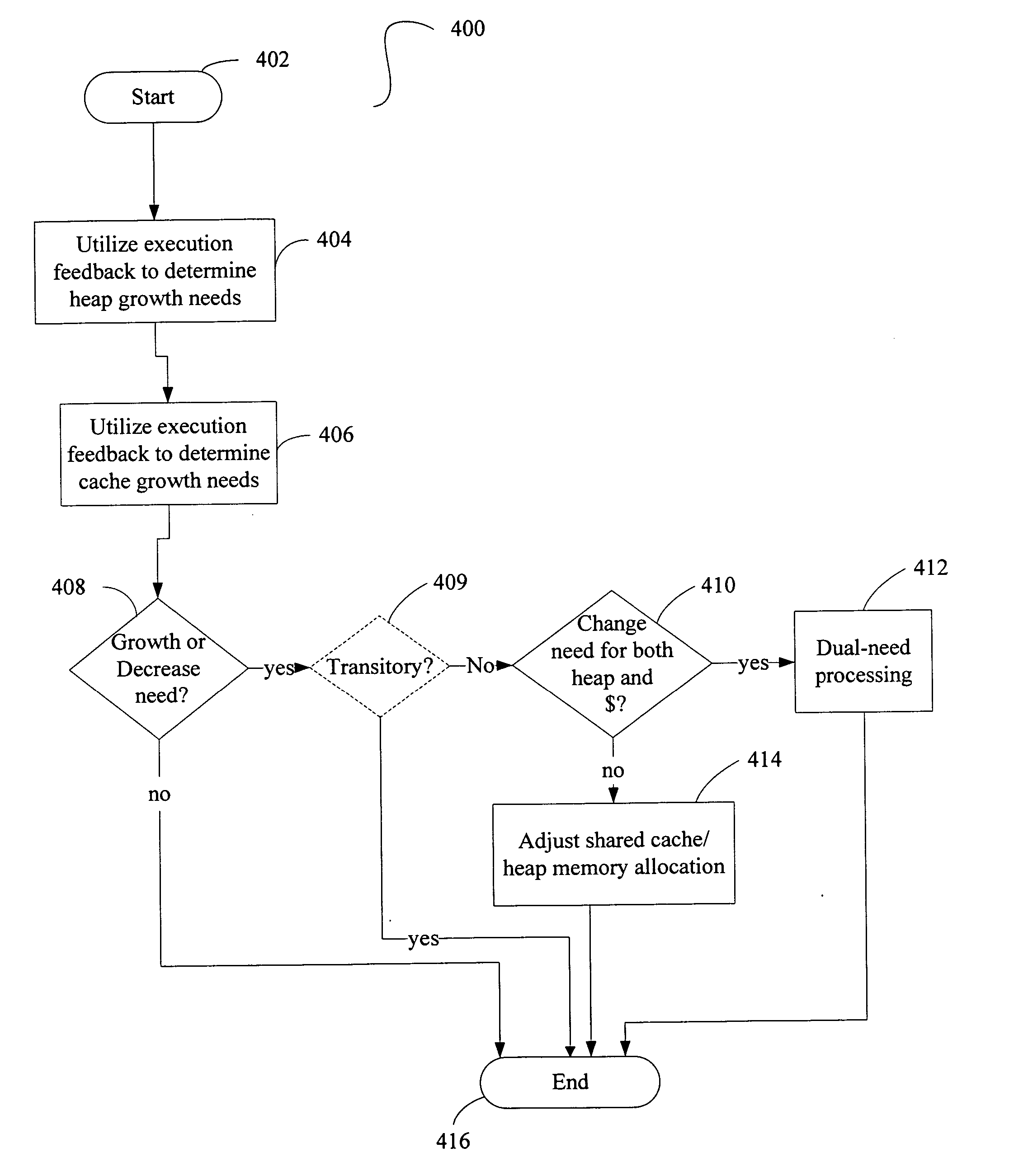

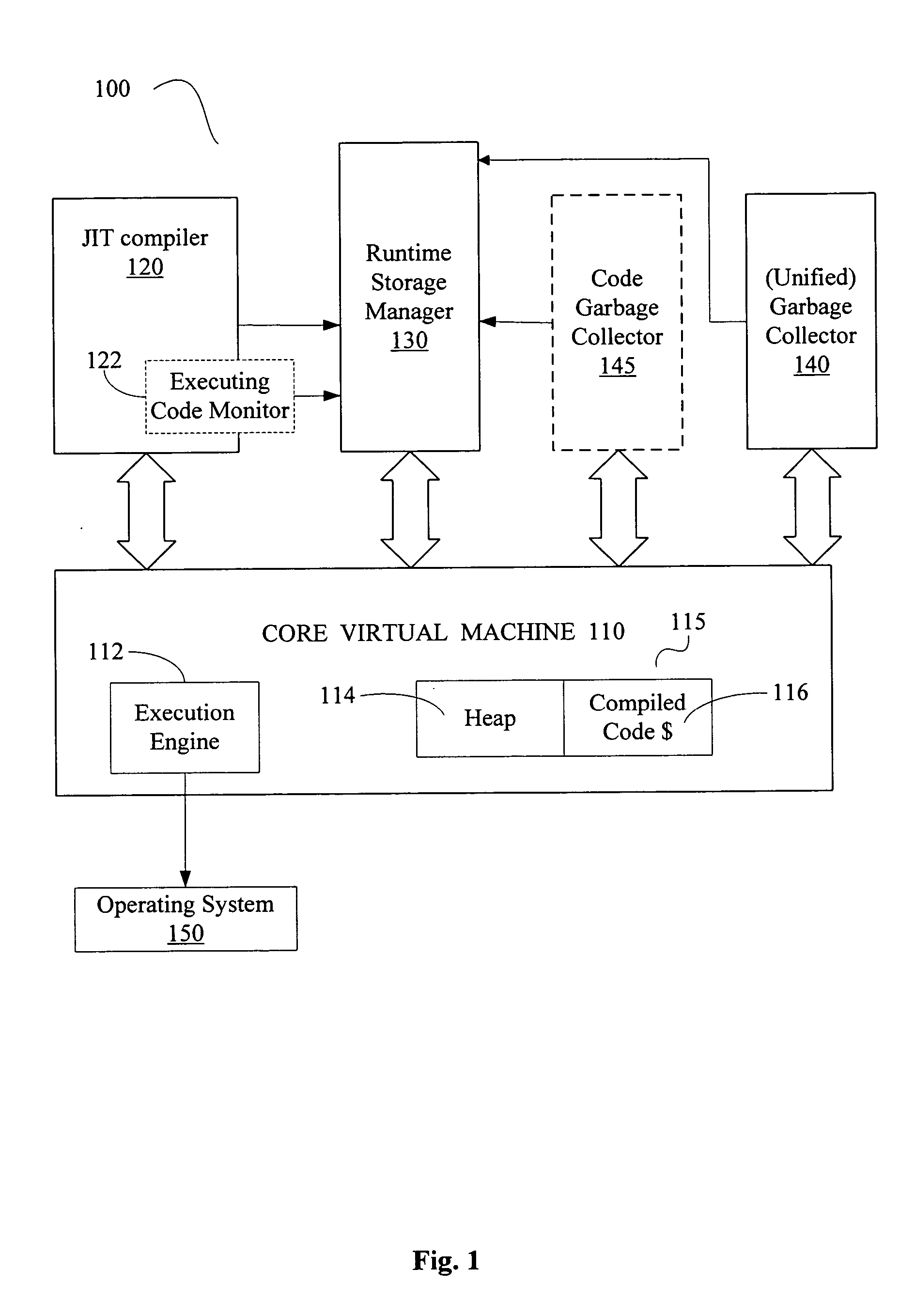

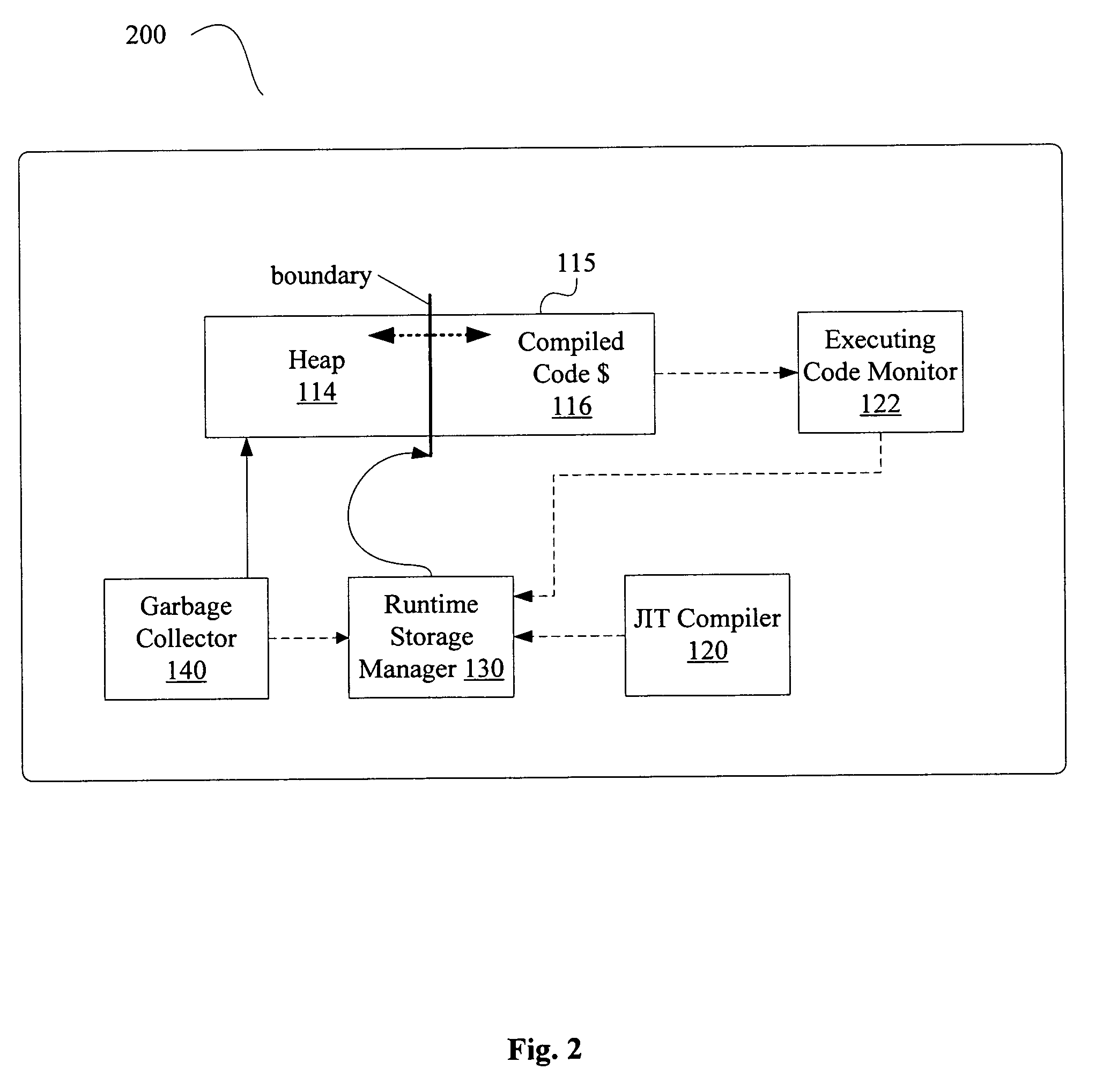

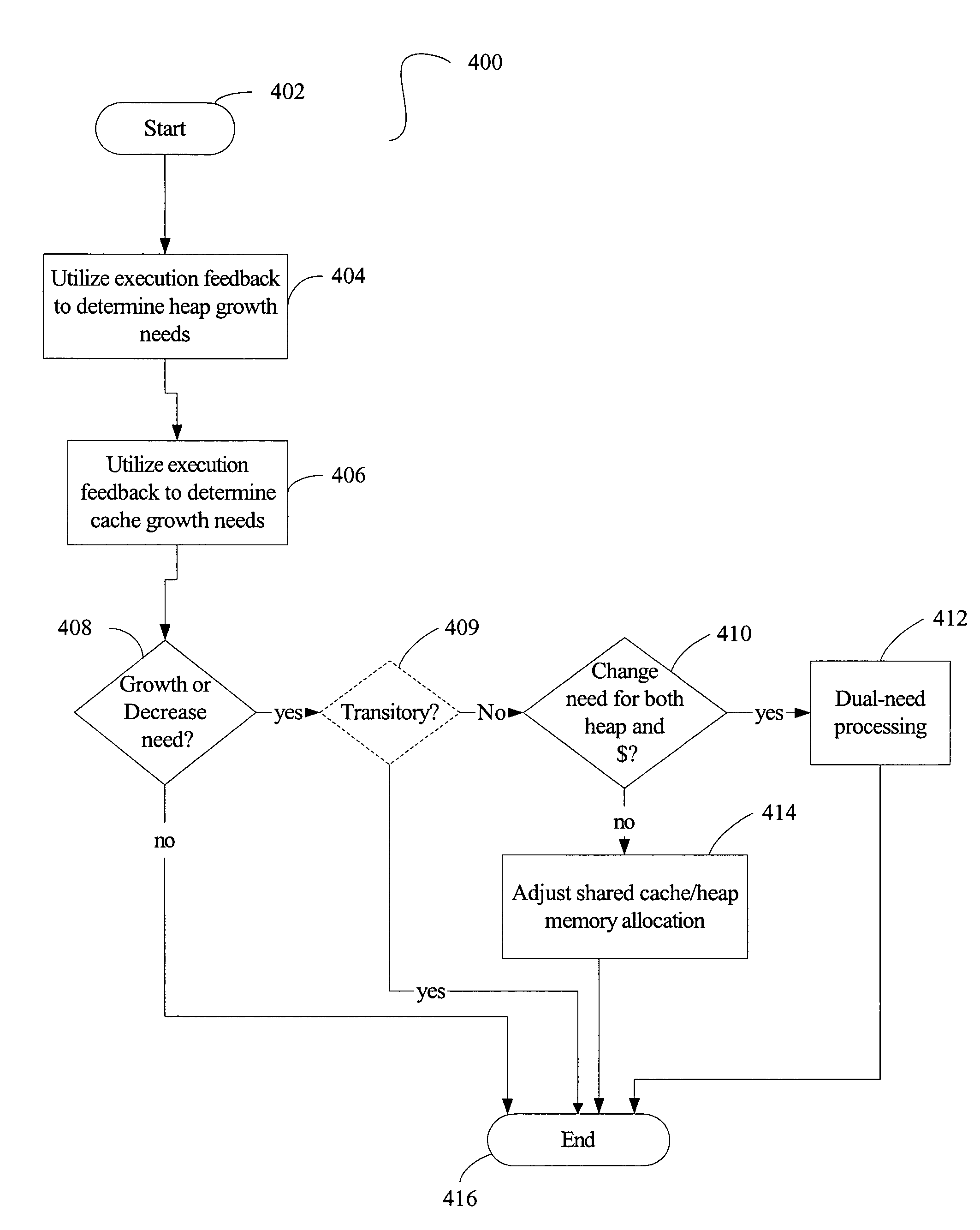

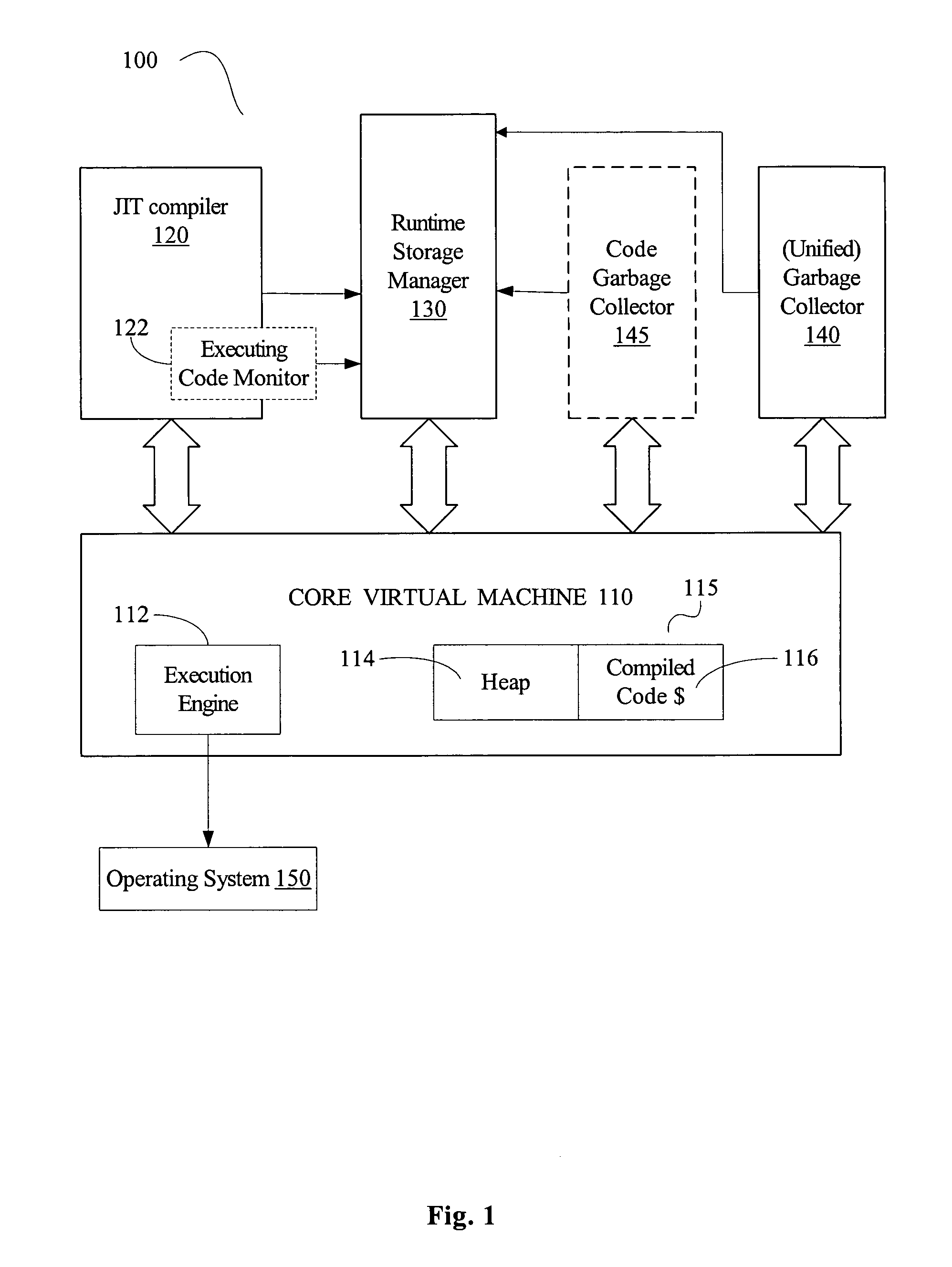

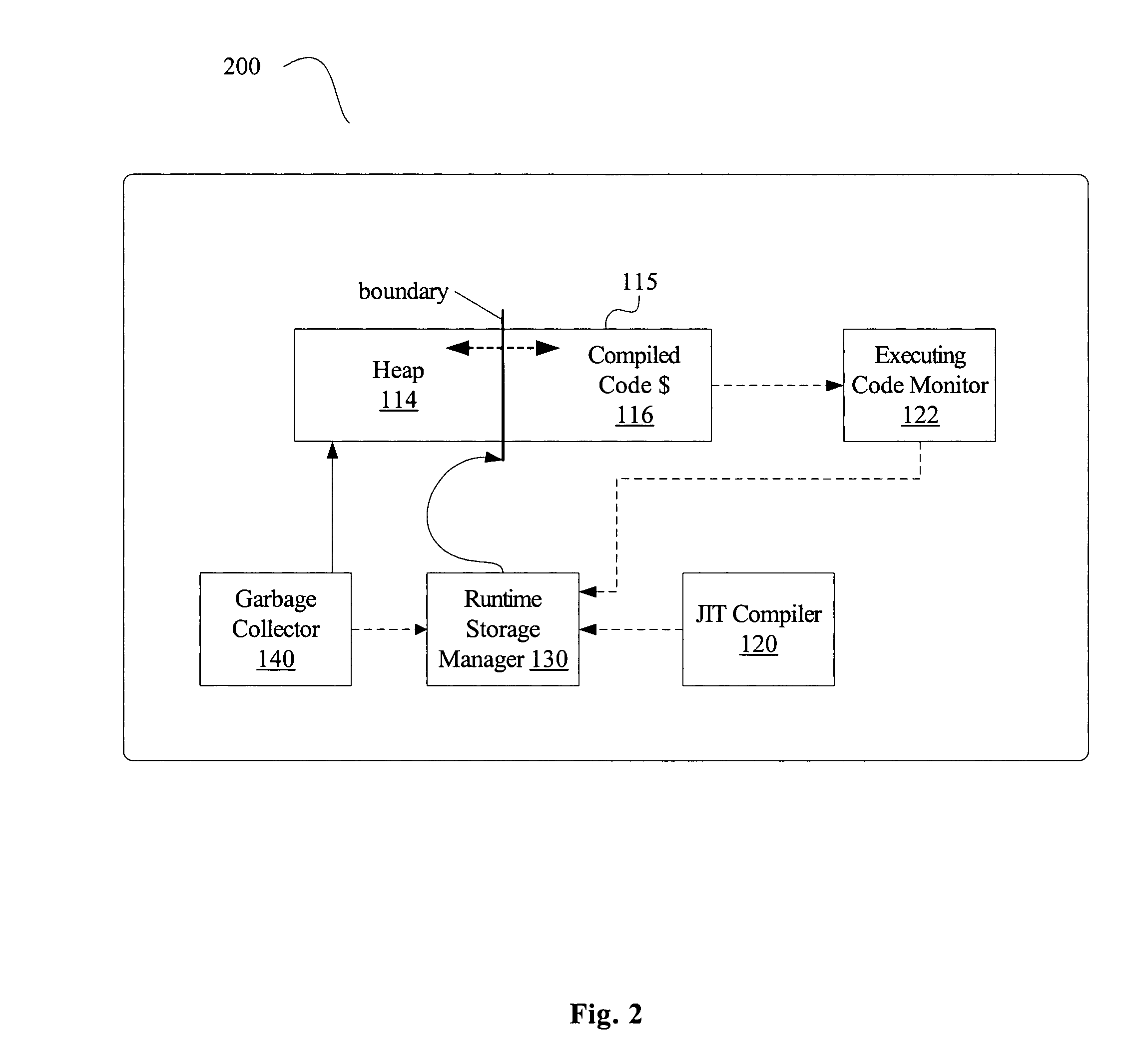

Method and apparatus for feedback-based management of combined heap and compiled code caches

Inactive | US20060277368A1 | Tags: Memory addressing/allocation/relocation, Program control, Parallel computing, Storage management

Disclosed are a method, apparatus and system for managing a shared heap and compiled code cache in a managed runtime environment. Based on feedback generated during runtime, a runtime storage manager dynamically allocates storage space, from a shared storage region, between a compiled code cache and a heap. For at least one embodiment, the size of the shared storage region may be increased if a growth need is identified for both the compiled code cache and the heap during a single iteration of runtime storage manager processing.

Owner:LEWIS BRIAN T
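
The feedback-based split can be sketched as one rebalancing step per iteration of the storage manager. The runtime feedback (for example GC pressure or code-cache evictions) is abstracted into two booleans, and the step size and the "give each side one step" policy on growth are hypothetical choices, not taken from the patent.

```python
class SharedRegionManager:
    """One storage region split between a heap and a compiled-code cache,
    rebalanced from runtime feedback (hypothetical policy and step size)."""

    def __init__(self, total, heap, step):
        self.total = total
        self.heap = heap
        self.step = step

    @property
    def code_cache(self):
        return self.total - self.heap

    def rebalance(self, heap_needs_growth, code_needs_growth):
        if heap_needs_growth and code_needs_growth:
            self.total += 2 * self.step      # both sides starved: grow the region
            self.heap += self.step           # and give each side one step
        elif heap_needs_growth and self.code_cache > self.step:
            self.heap += self.step           # shift space: code cache -> heap
        elif code_needs_growth and self.heap > self.step:
            self.heap -= self.step           # shift space: heap -> code cache
```

Only when both consumers report pressure in the same iteration does the shared region itself grow, matching the condition stated in the abstract.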

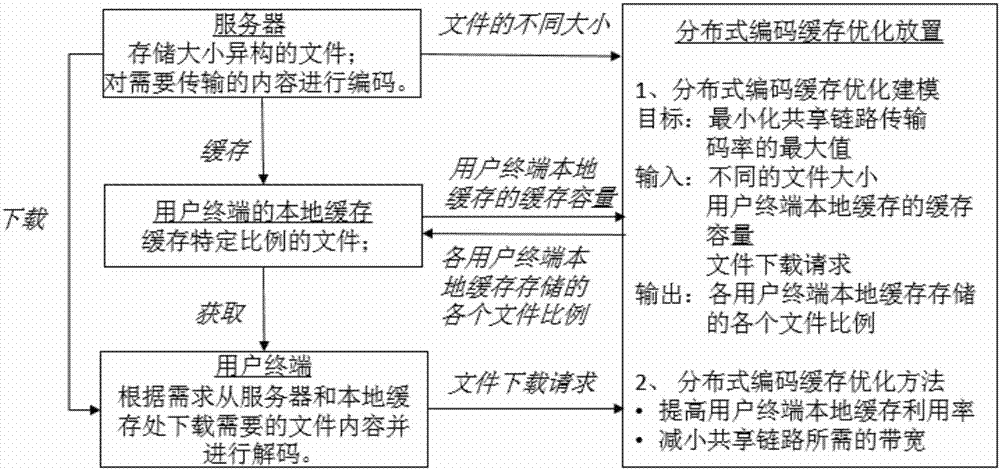

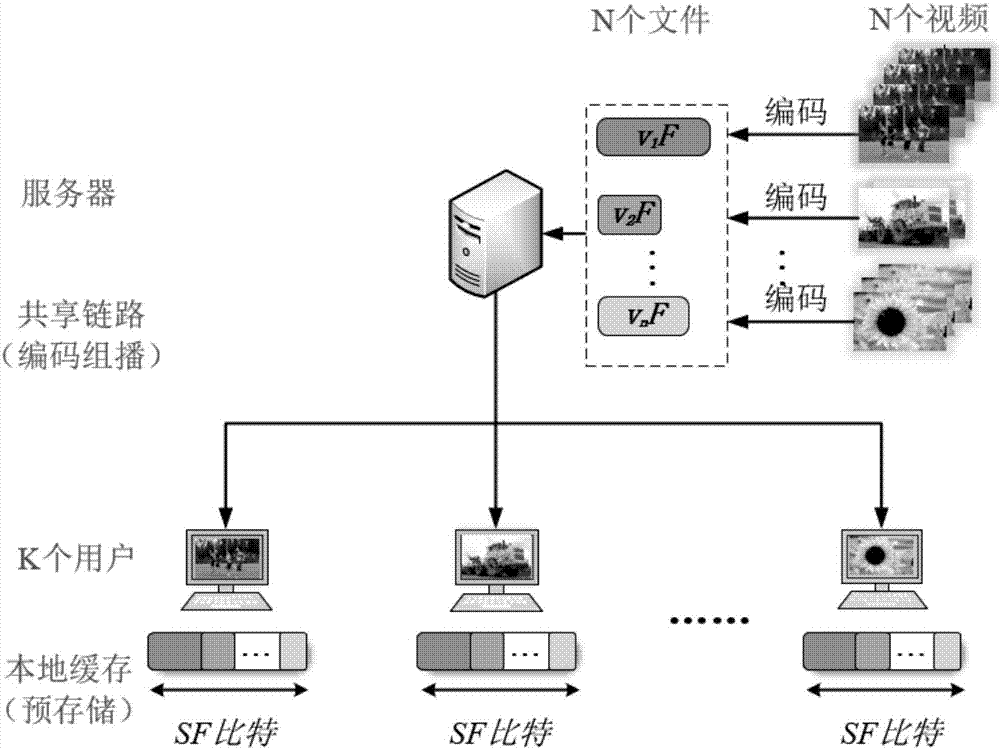

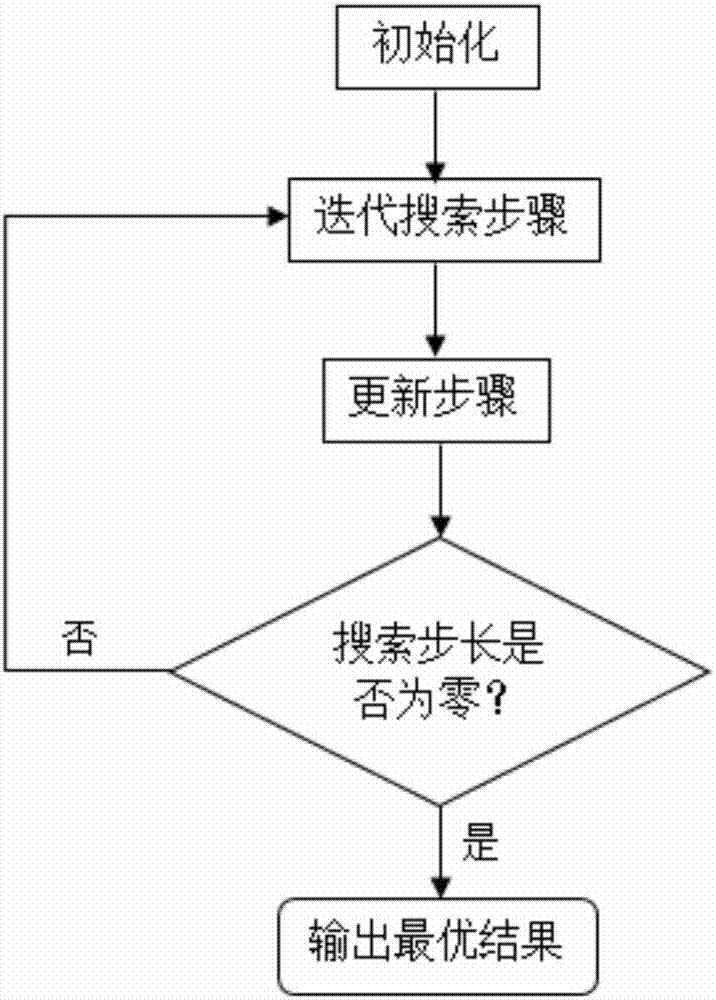

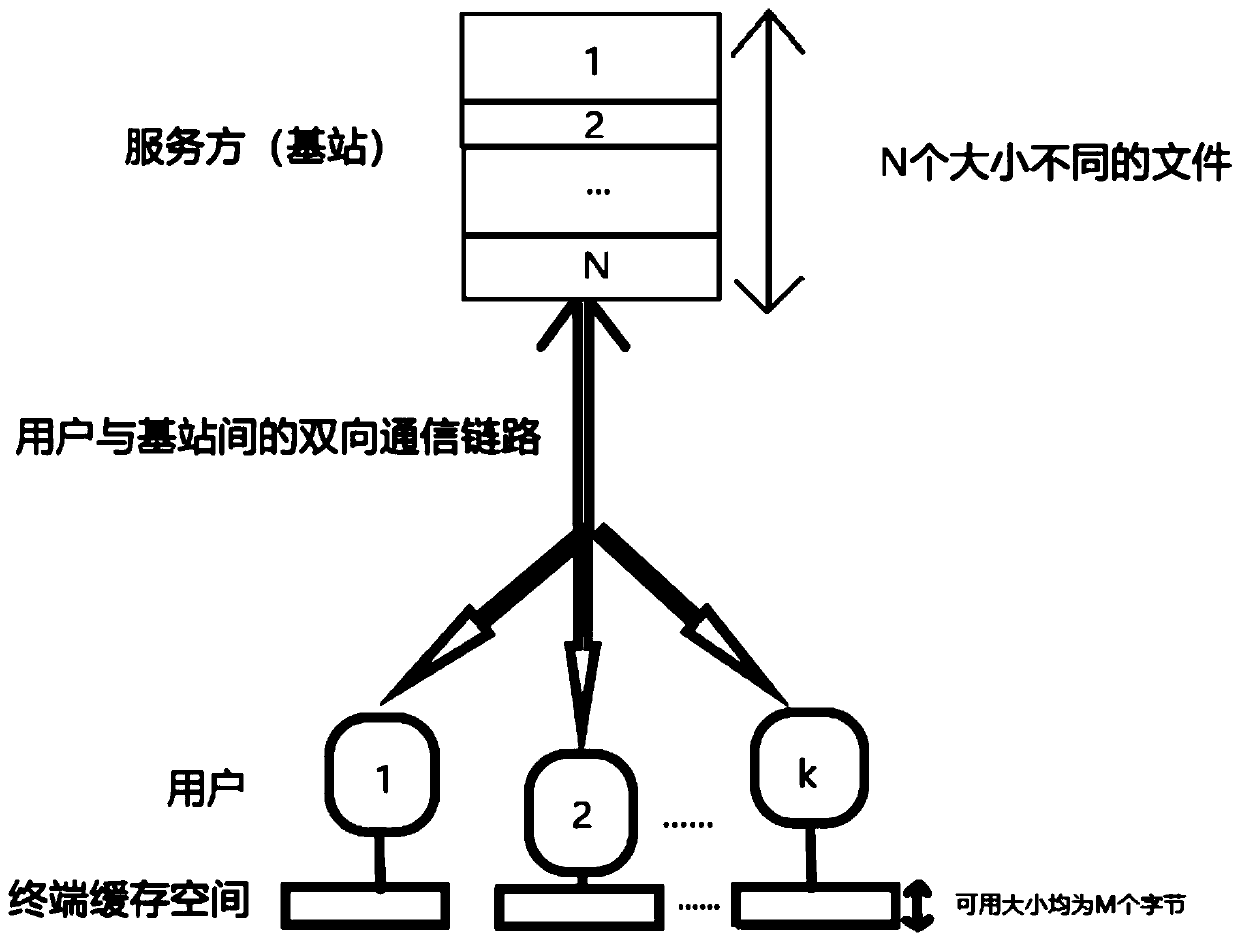

File size heterogeneous distributed coding cache placement method and system

Active | CN107295070A | Tags: Increase profit, Reduce the maximum bit rate, Transmission, Placement method, Utilization rate

The invention provides a distributed coded cache placement method and system for files of heterogeneous sizes. The method takes into account the sizes of the files on the server, the local cache capacity of each user terminal, and the file download requests of the different user terminals, and uses an optimal distributed coded cache placement method to determine what proportion of each file every user terminal caches, so as to minimize the rate on the server's shared link needed to satisfy the terminals' file download requests. The method and system improve the utilization of every user terminal's local cache and thus effectively reduce the load on the server's shared link.

Owner:SHANGHAI JIAO TONG UNIV

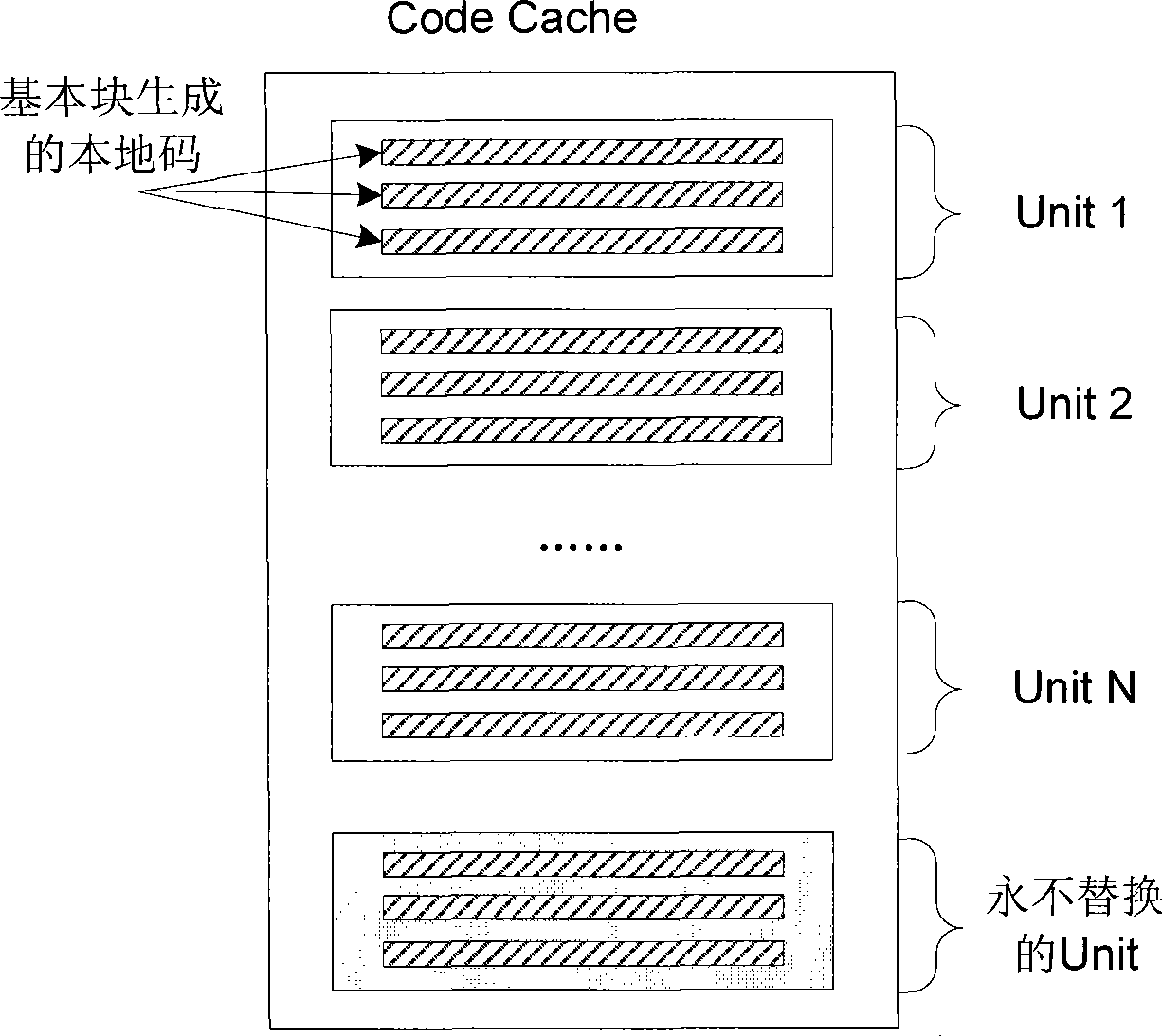

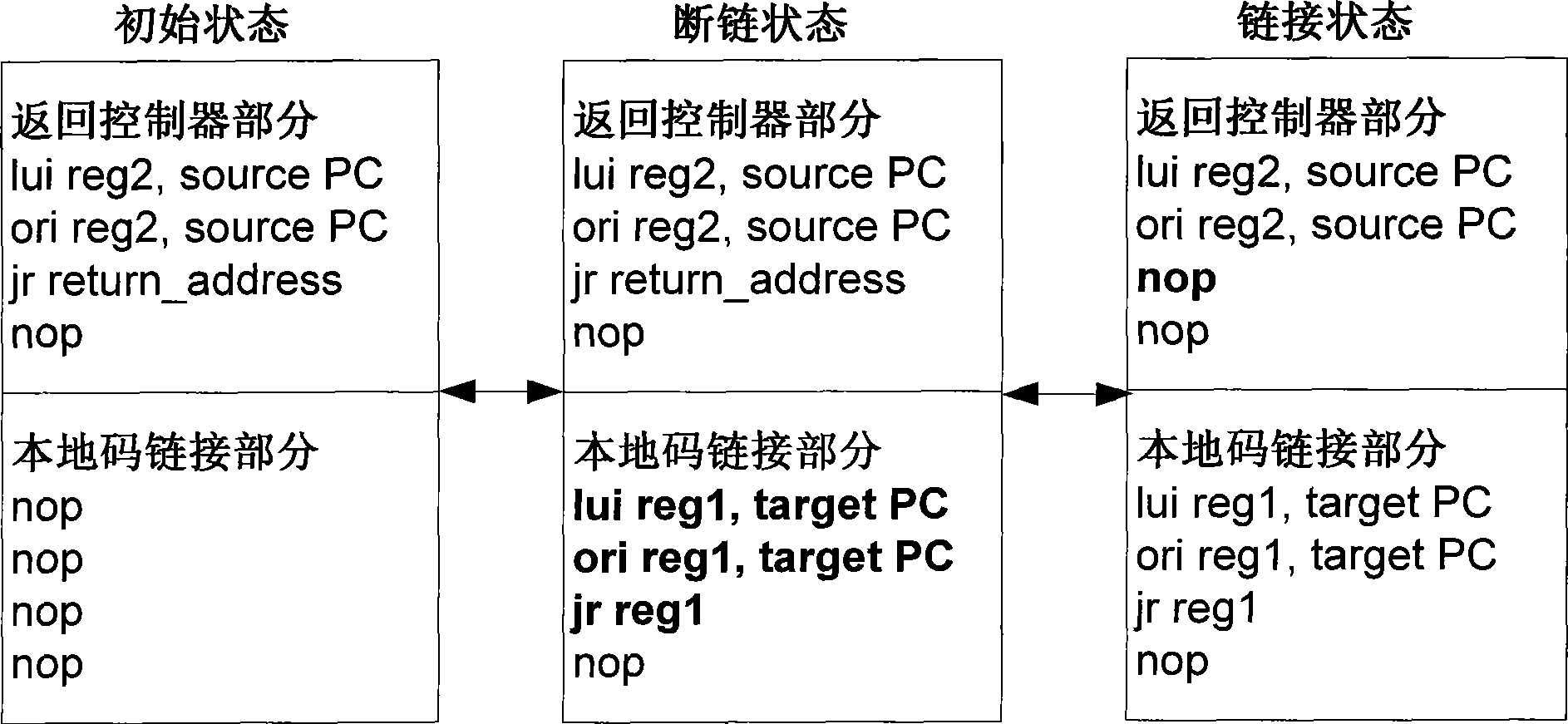

Replacement method and system for a thread-shared target native code cache in a binary translator

Active | CN101482851A | Tags: Guaranteed concurrency, Reduce overhead, Memory addressing/allocation/relocation, Program control, Code space, Parallel computing

The present invention relates to a replacement method and system for a thread-shared code cache in a binary translator. The method comprises: step 10, dividing the native code space into N replaceable units of equal size and one never-replaceable unit; step 20, allocating native code from the current replaceable unit until it reaches its capacity limit, then allocating native code from the next replaceable unit; and step 30, when the native code space reaches its capacity limit, performing native code replacement one replaceable unit at a time according to a first-in, first-out (FIFO) policy. Here N is a natural number. The replaceable units hold ordinary native code and are the granularity at which the replacement policy is applied. The invention provides and implements a replacement scheme for multi-threaded code caches that applies broadly to binary translators on various architectures and preserves thread concurrency at low overhead.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
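
The unit-based FIFO scheme (steps 10 to 30 above) can be sketched as round-robin allocation over N equal units, where wrapping around to a unit that still holds old code evicts that whole unit. Blocks are `(address, size)` records, the sizes are hypothetical, and the coordination needed to keep other threads out of a unit being flushed is omitted.

```python
class UnitCodeCache:
    """Native-code space split into N equal replaceable units plus one
    never-replaced unit; whole units are flushed in FIFO order."""

    def __init__(self, n_units, unit_size):
        self.unit_size = unit_size
        self.units = [[] for _ in range(n_units)]   # each: list of (addr, size)
        self.used = [0] * n_units
        self.current = 0
        self.pinned = []      # never-replaceable unit (e.g. runtime stubs)
        self.flushed = []     # addresses evicted so far (for illustration)

    def allocate(self, addr, size, pinned=False):
        if pinned:
            self.pinned.append((addr, size))
            return
        if size > self.unit_size:
            raise ValueError("block larger than a replaceable unit")
        if self.used[self.current] + size > self.unit_size:
            # Current unit is full: advance. Units fill in round-robin order,
            # so a non-empty next unit is the oldest one and is evicted whole.
            self.current = (self.current + 1) % len(self.units)
            if self.units[self.current]:
                self.flushed.extend(a for a, _ in self.units[self.current])
                self.units[self.current] = []
                self.used[self.current] = 0
        self.units[self.current].append((addr, size))
        self.used[self.current] += size
```

Flushing a whole unit at once means the translator only has to synchronize with other threads once per unit rather than once per block.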

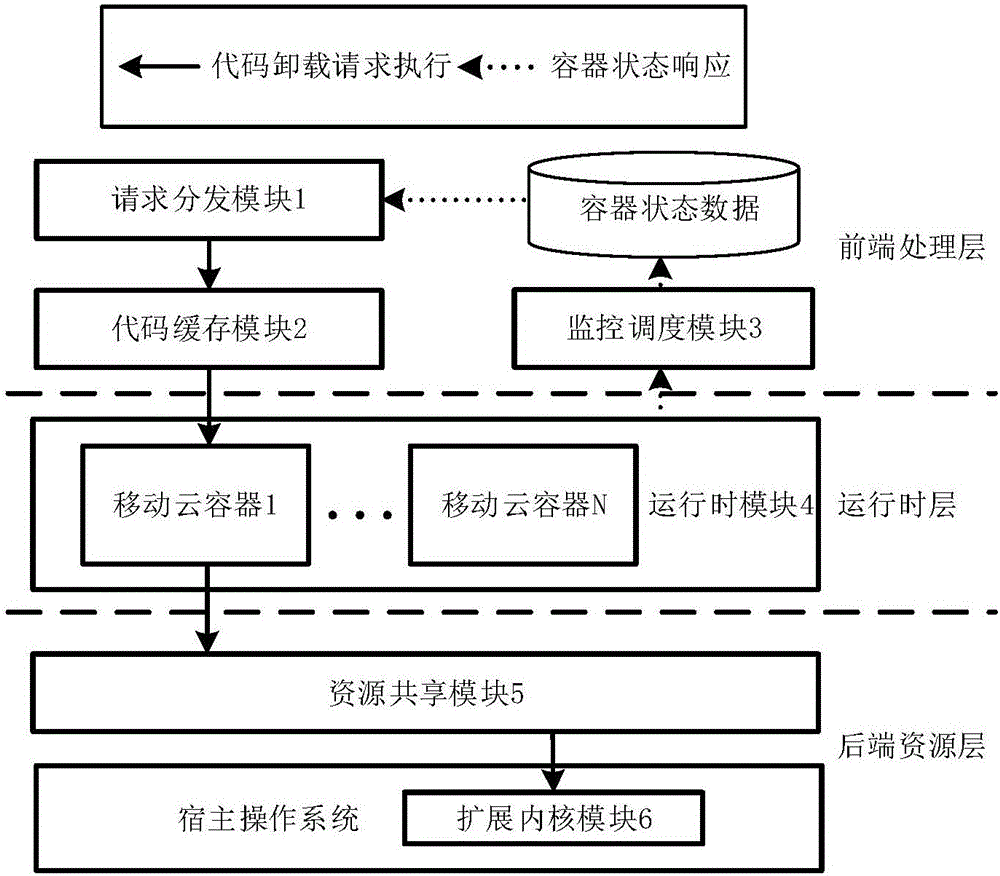

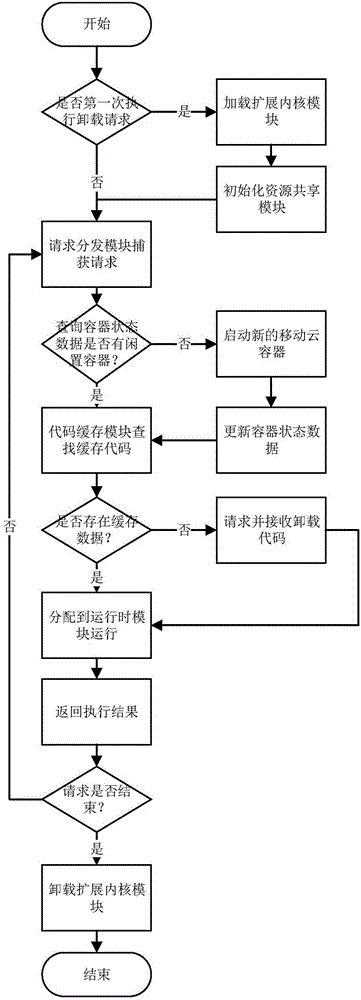

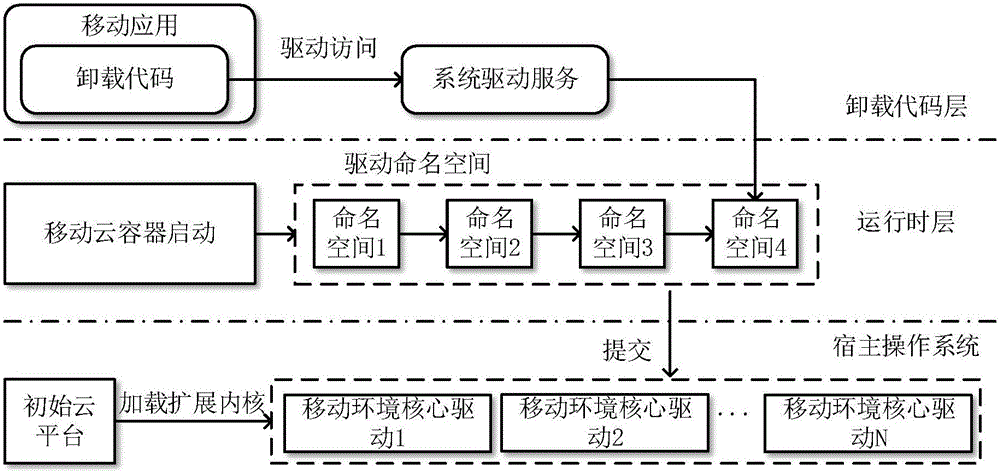

Container-based mobile code offloading support system in a cloud environment and offloading method thereof

Active | CN105893083A | Tags: Prevent Duplicate Sending Issues, Avoid multiple transfers, Resource allocation, Other databases retrieval, Supporting system, Mobile cloud

The invention discloses a container-based mobile code offloading support system in a cloud environment and an offloading method thereof. The system comprises a front-end processing layer, a runtime layer, and a back-end resource layer. The front-end processing layer responds to incoming requests and manages container states, and is implemented by a request distribution module, a code cache module, and a monitoring and scheduling module. The runtime layer provides offloaded code with an execution environment identical to that of the terminal, and is implemented by a runtime module consisting of multiple mobile cloud containers. The back-end resource layer resolves incompatibilities between the cloud platform and the mobile terminal environment and provides underlying resource support for the runtime, and is implemented by a resource sharing module and a kernel extension module in the host operating system. Using the constructed mobile cloud containers as the runtime environment for code offloading meets the execution requirements of offloaded tasks, improves the computing performance of the cloud, and shortens request response time; the cooperation among the modules optimizes platform performance and supports efficient operation of the system.

Owner:HUAZHONG UNIV OF SCI & TECH

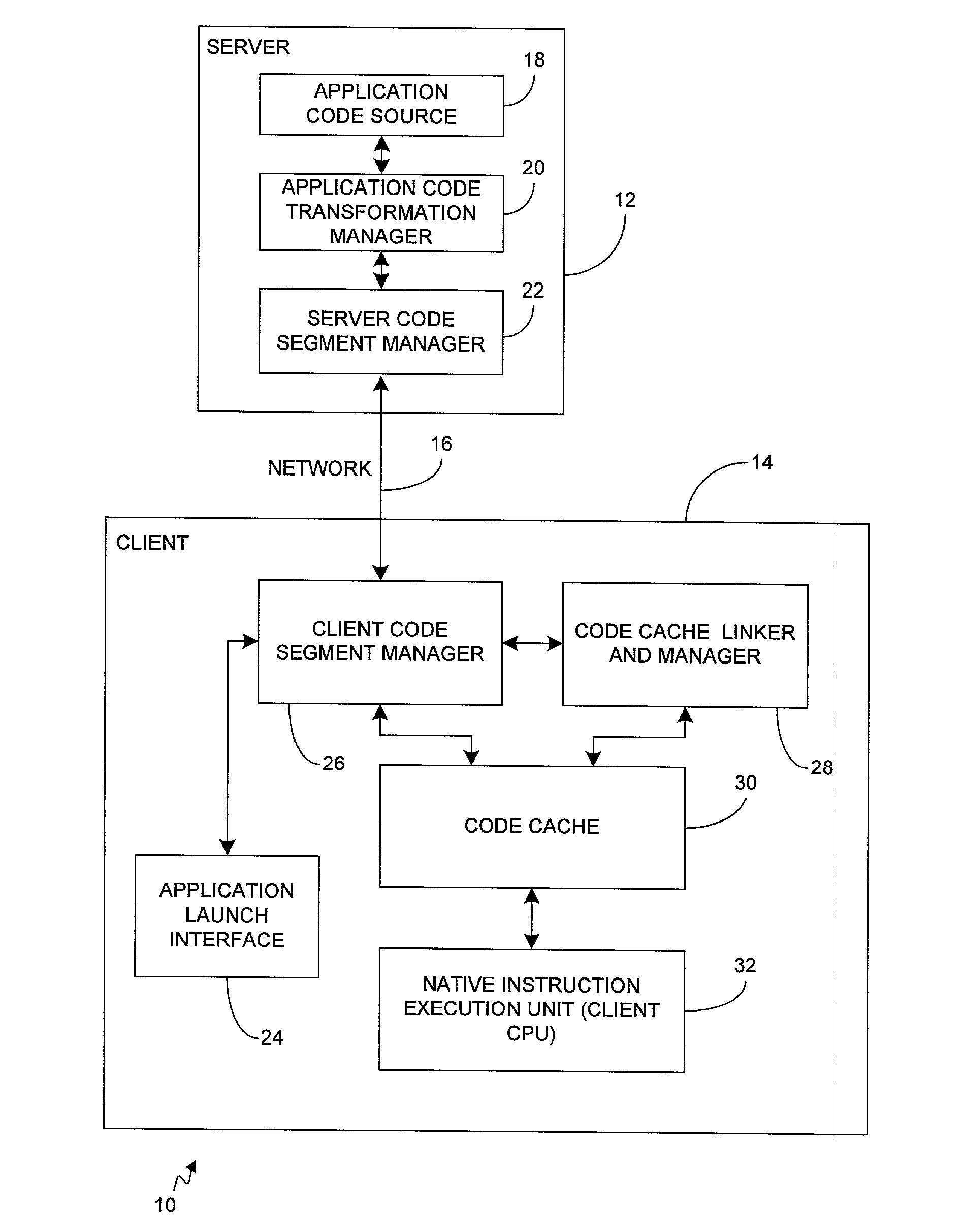

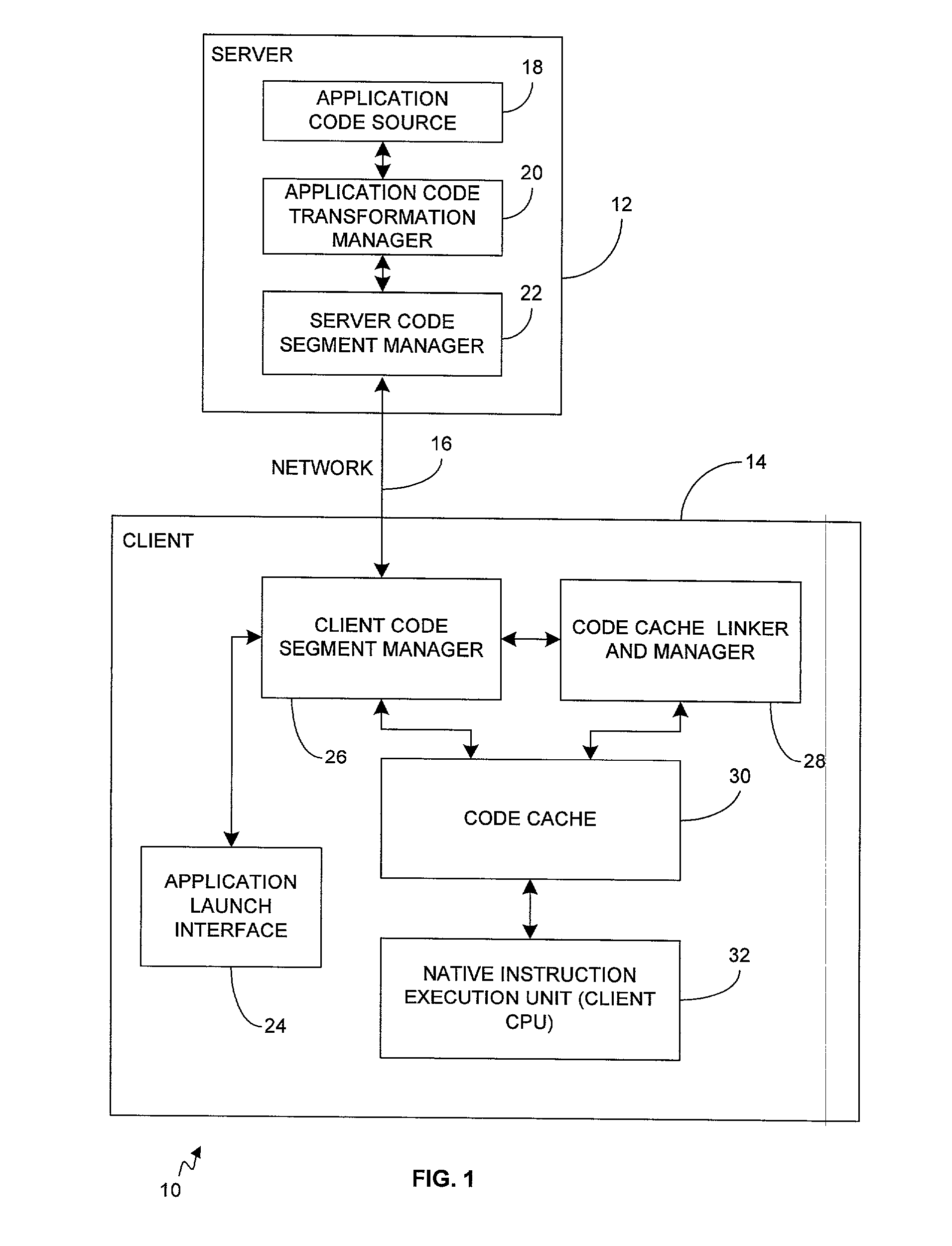

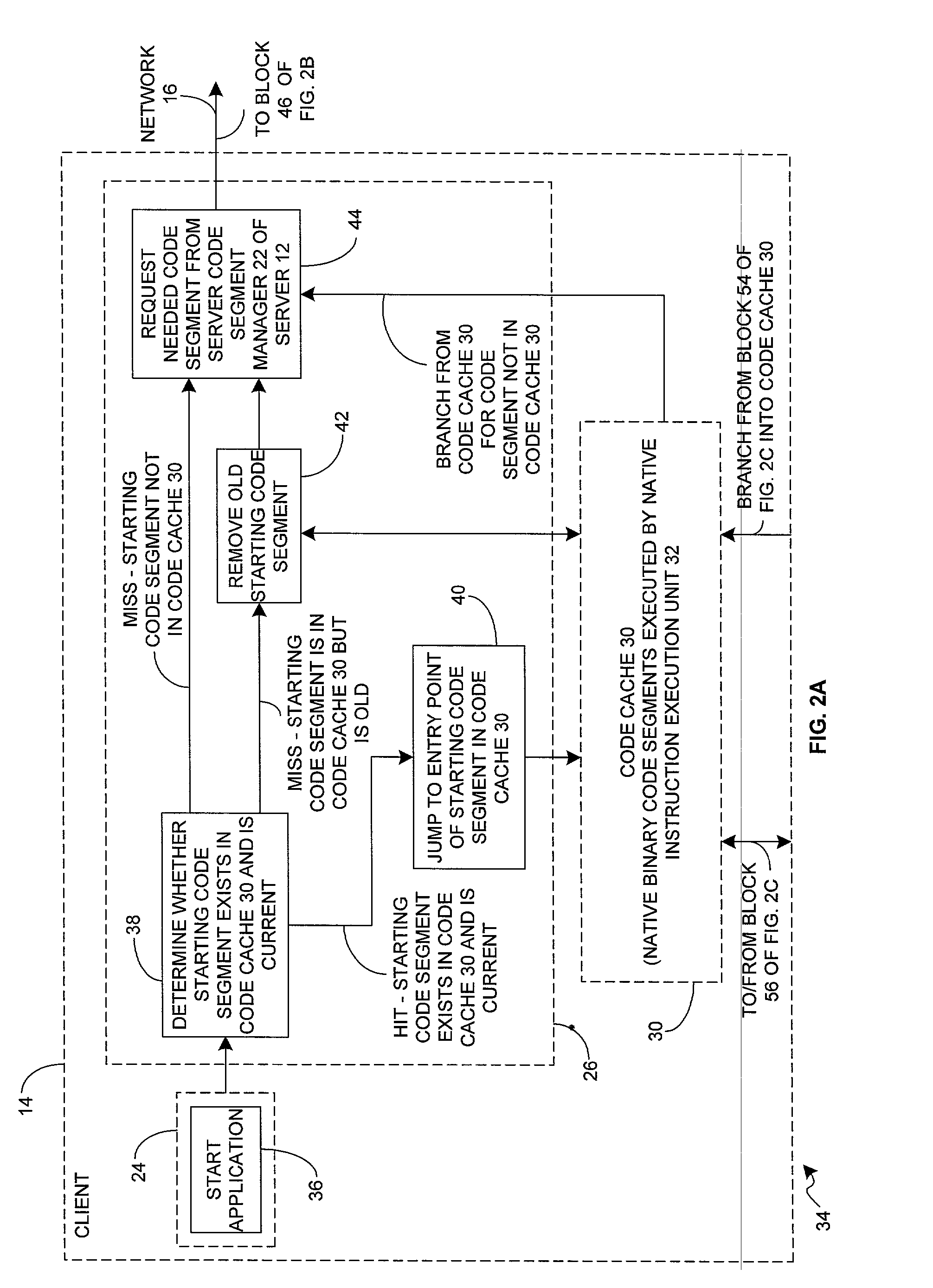

Networked client-server architecture for transparently transforming and executing applications

Inactive | US7640153B2 | Tags: Improve scalability, Burden of application, Program loading/initiating, Software simulation/interpretation/emulation, Client-side, Application software

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

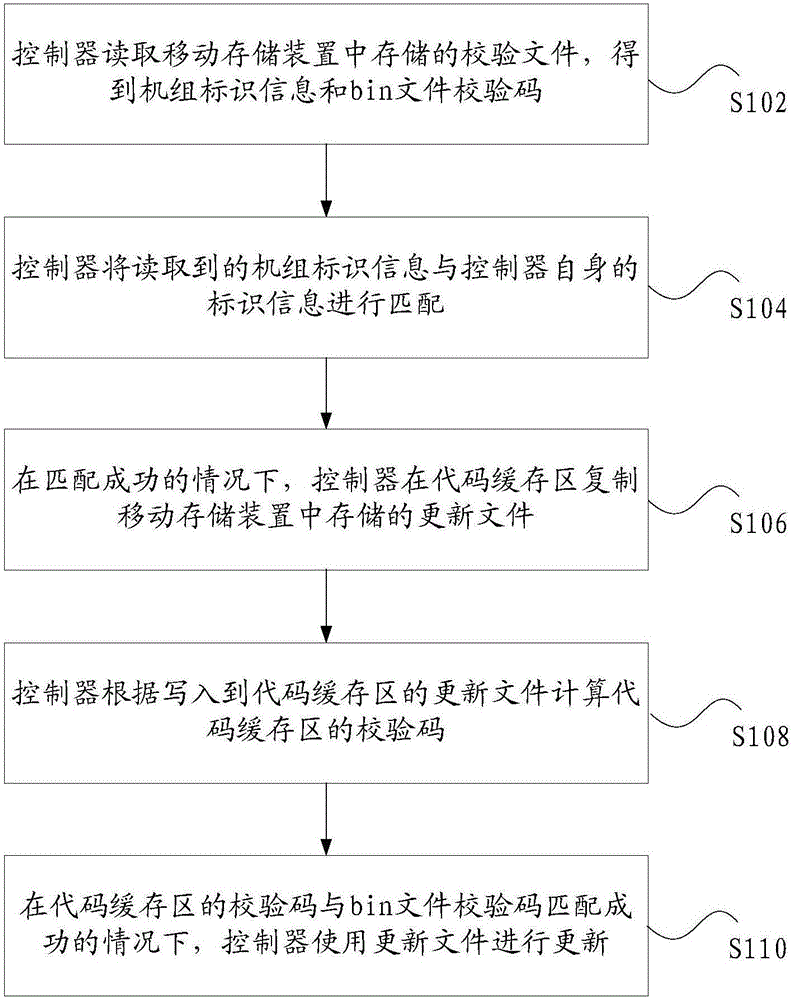

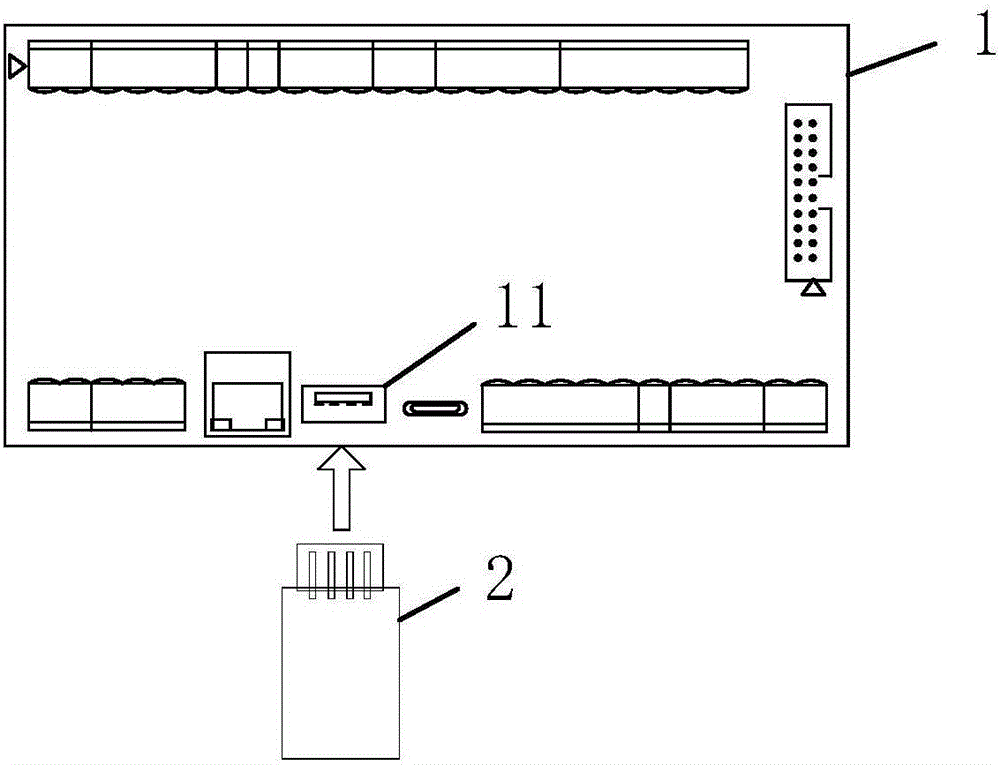

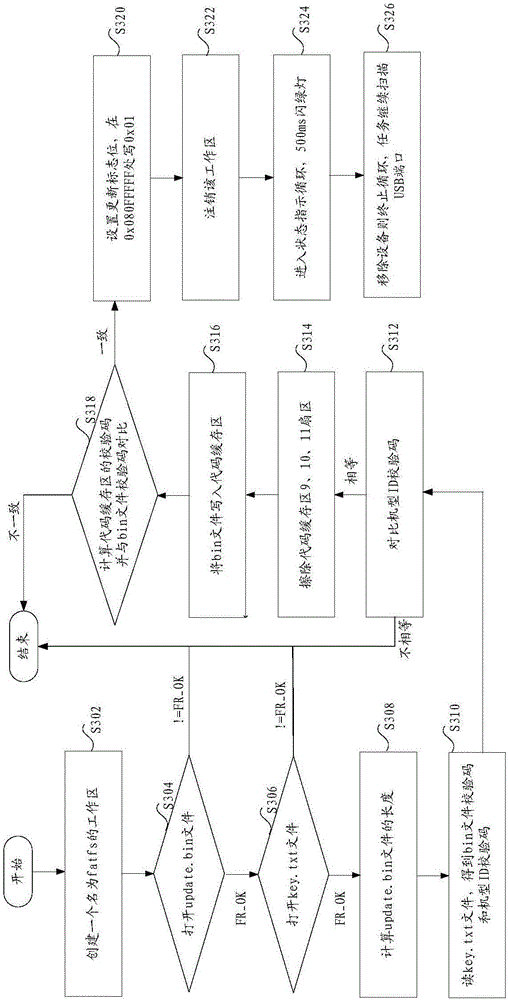

Method and device for program upgrading, and controller

Active | CN106502718A | Tags: There will be no false updates, Solve the technical problems that are prone to false file updates, Program loading/initiating, Software deployment, Removable media, USB

The invention discloses a program upgrading method and device, and a controller. The controller reads a verification file stored on a removable storage device and obtains the unit identification information and the bin file verification code stored in it. The controller matches the unit identification information it read against its own identification information. If they match, the controller copies the update file stored on the removable storage device into a code cache area, computes a verification code over the update file written into the code cache area, and performs the update using the update file only when this verification code matches the bin file verification code. The invention solves the prior-art problem that USB-based program updating methods are prone to updating with the wrong file.

Owner:GREE ELECTRIC APPLIANCES INC
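
The checks in this abstract run in a fixed order: identity match, copy into a code cache area, checksum, and only then write. The sketch below makes two assumptions: the verification file is JSON with hypothetical `unit_id` and `bin_crc` fields, and the "verification code" is a CRC-32 (`zlib.crc32`). The patent does not specify either. `flash_write` is a caller-supplied placeholder for the actual programming step.

```python
import json
import zlib


def upgrade(verification_text, update_bytes, my_unit_id, flash_write):
    """Return True if the update was applied (sketch of the described checks)."""
    info = json.loads(verification_text)           # hypothetical file format
    if info["unit_id"] != my_unit_id:
        return False                               # package meant for another unit
    code_cache_area = bytearray(update_bytes)      # copy the update into RAM first
    if zlib.crc32(code_cache_area) != info["bin_crc"]:
        return False                               # corrupted or mismatched copy
    flash_write(bytes(code_cache_area))            # only now touch the program
    return True
```

Verifying the copy held in the code cache area, rather than the file on the USB device, also catches corruption introduced while copying.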

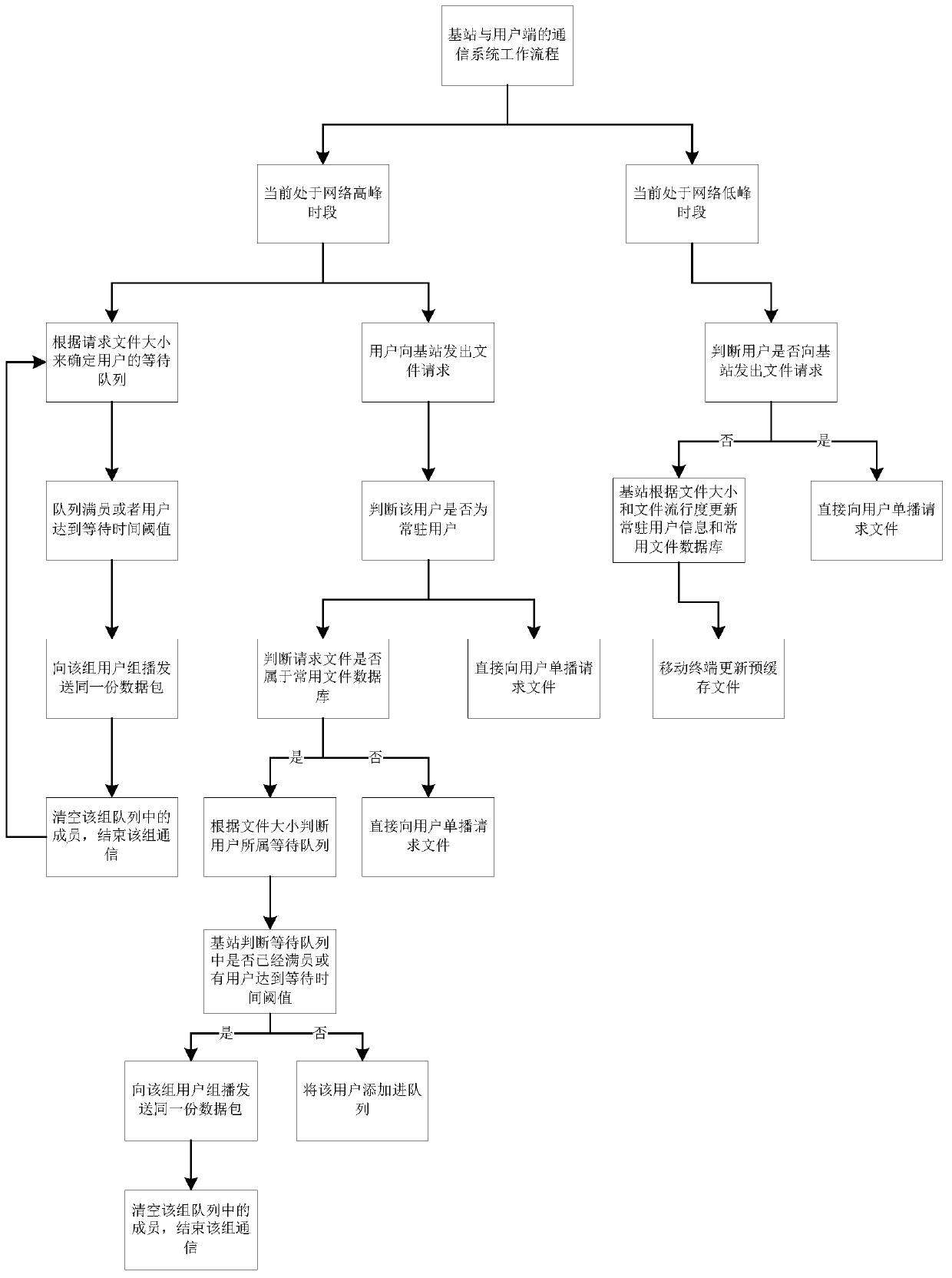

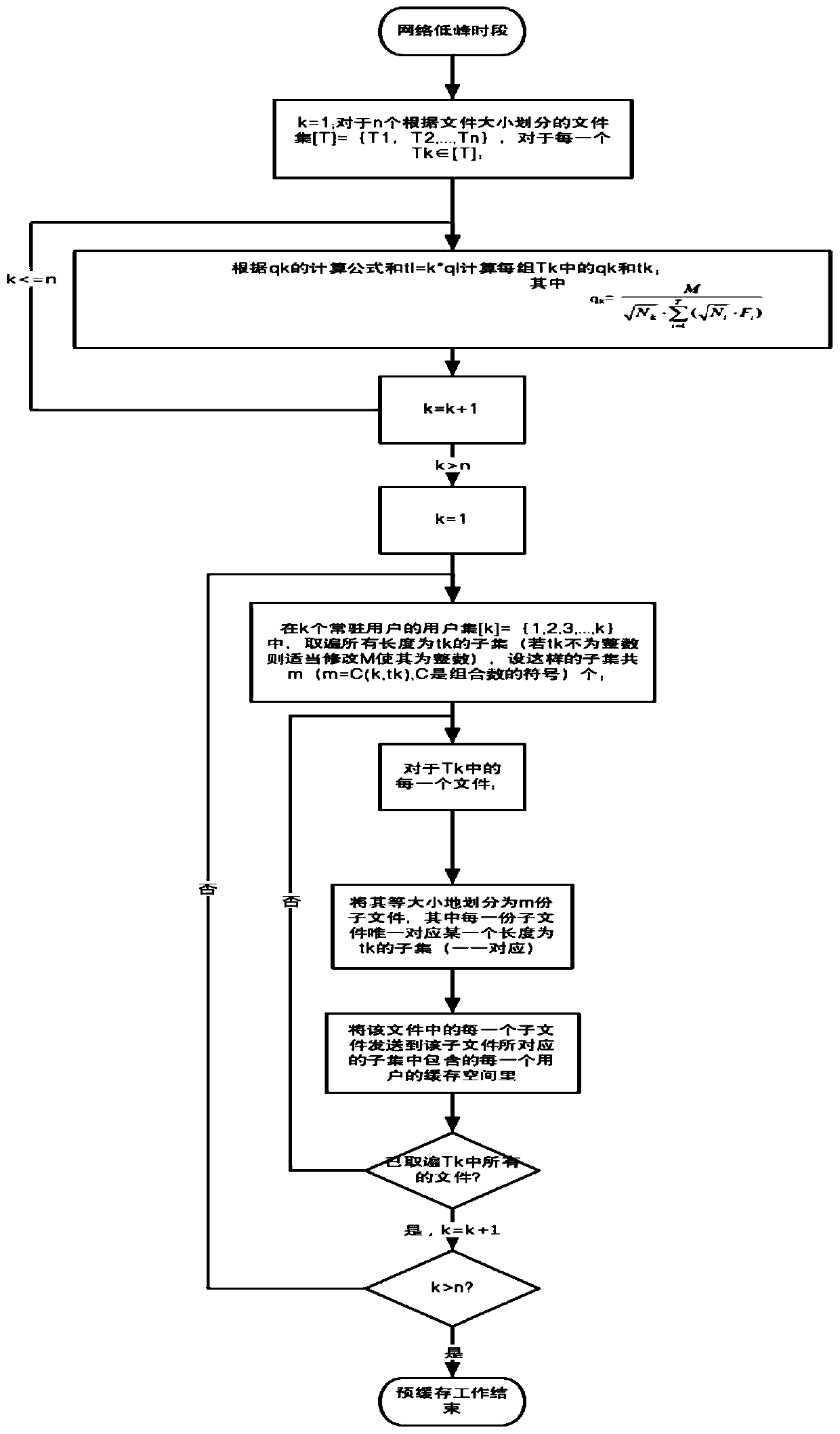

Communication method and system based on coding cache, and storage medium

Active | CN110351780A | Tags: Relieve blockage, Relieve pressure, Network traffic/resource management, Transmission, Base code, File size

The invention discloses a communication method and system based on coded caching, and a storage medium. The method acquires information about resident users in an area, records the files requested by those users, and builds a common file database. When the current time period is determined to be an off-peak network period, the method updates the resident user information and the common file database using a file-size-based coded caching scheme and sends files to users according to file size and file popularity. When the current time period is determined to be a peak network period, the method identifies the size of each requested file, determines the group each user belongs to from the received user request information, and sends the same data packet to all users in a group by multicast transmission. The method relieves network congestion during peak hours, reduces network load through multicast transmission, improves efficiency and practicality, and can be widely applied in the field of communication technology.

Owner:SUN YAT SEN UNIV
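
The peak-hour step sends one multicast per group instead of one unicast per request. In the sketch below, grouping by requested file ID is a simplifying assumption (the abstract groups users using file size and request information), and the function name is hypothetical.

```python
from collections import defaultdict


def plan_multicast(requests):
    """requests: list of (user_id, file_id). Returns one transmission per
    distinct file, addressed to every user who requested it."""
    groups = defaultdict(list)
    for user, file_id in requests:
        groups[file_id].append(user)
    return [(file_id, sorted(users)) for file_id, users in sorted(groups.items())]
```

Three requests for two distinct files become two transmissions, and the saving grows with how concentrated the peak-hour demand is.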

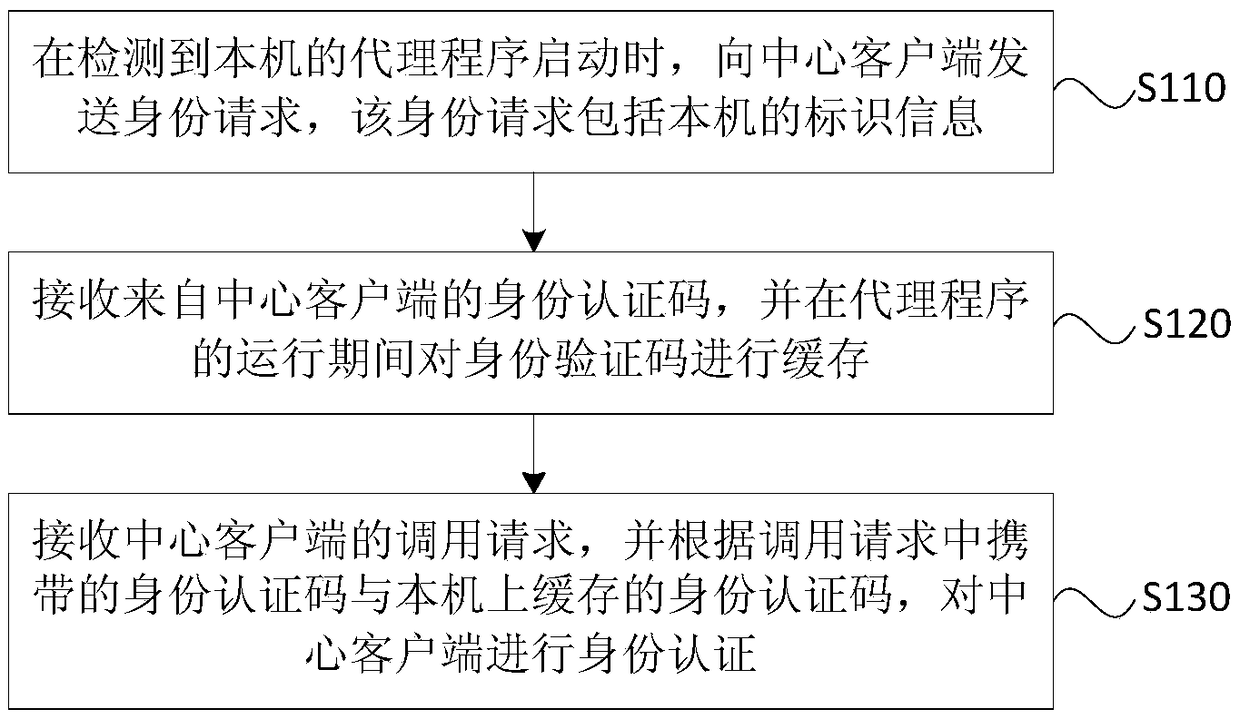

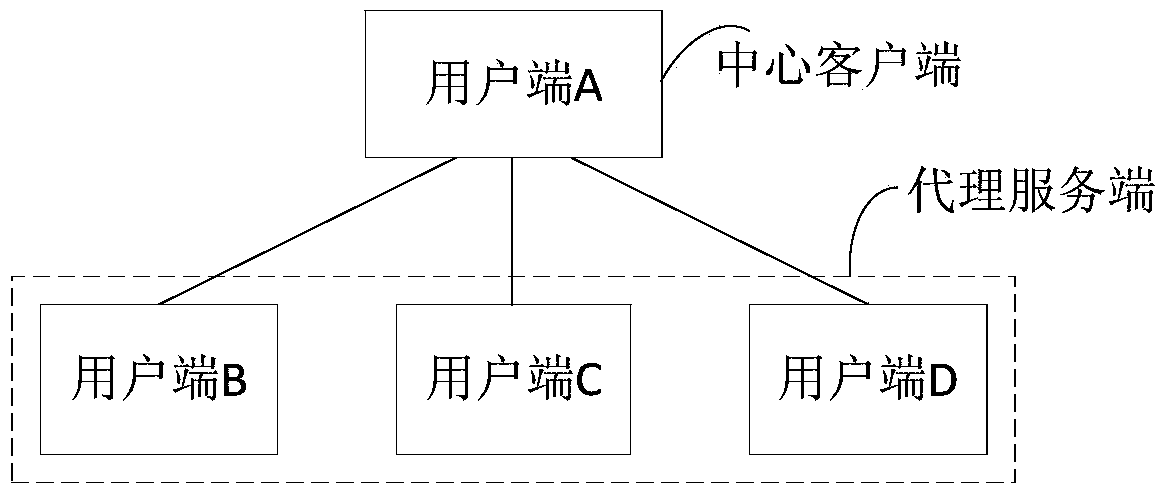

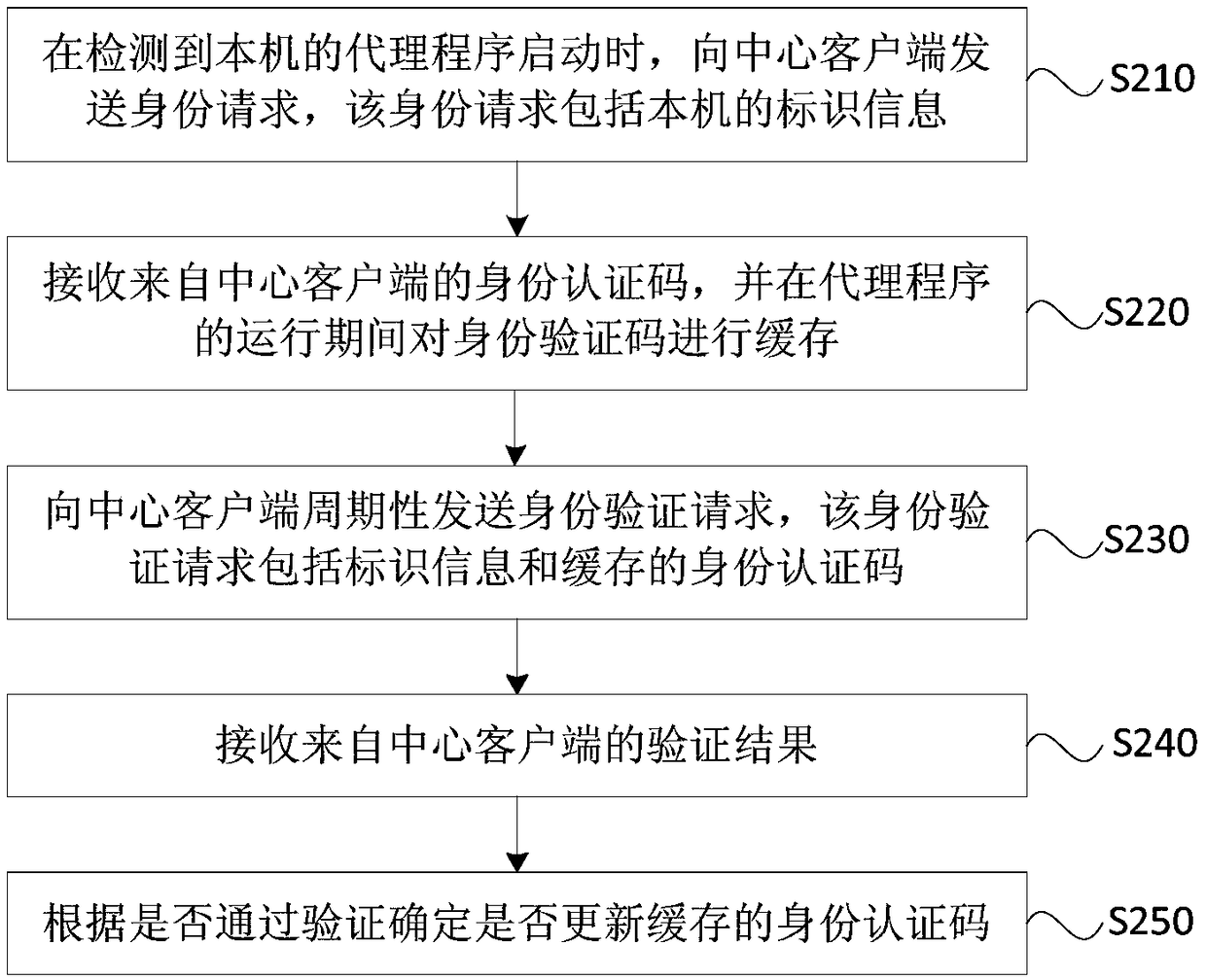

Identity authentication method and device, agent server and storage medium

The embodiment of the invention discloses an identity authentication method and device, an agent server, and a storage medium. When it is detected that the local agent program has started, the method sends an identity request containing the local identification information to a center client; receives an identity authentication code from the center client and caches it while the agent program is running; and, on receiving a transfer request from the center client, authenticates the center client by comparing the identity authentication code carried in the transfer request with the code cached locally. Because the agent server obtains and caches a fresh identity authentication code each time the agent program starts, the authentication information stays current, which reduces the risk of verification failure and illegal authentication that arises when identity authentication information is easily lost or easily obtained.

Owner:SHANGHAI DAMENG DATABASE
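
A minimal model of the exchange: the agent fetches and caches a fresh code on every start, then checks each transfer request against the cached value. The class and method names are hypothetical. `secrets.token_hex` stands in for whatever code format the center client actually issues, and `hmac.compare_digest` is used for a constant-time comparison.

```python
import hmac
import secrets


class CenterClient:
    def __init__(self):
        self.issued = {}

    def issue_code(self, local_id):
        code = secrets.token_hex(16)
        self.issued[local_id] = code
        return code

    def make_transfer(self, local_id, payload):
        return {"auth_code": self.issued[local_id], "payload": payload}


class AgentServer:
    """Caches the identity authentication code obtained at agent start-up."""

    def __init__(self, local_id, center):
        self.local_id = local_id
        self.center = center
        self.cached_code = None

    def start(self):
        # A fresh code on every start, so a stale or leaked one stops working.
        self.cached_code = self.center.issue_code(self.local_id)

    def accept_transfer(self, request):
        if self.cached_code is None:
            return False
        # compare_digest does not leak the mismatch position through timing.
        return hmac.compare_digest(request["auth_code"], self.cached_code)
```

A request captured before the agent restarted is rejected afterwards, because the restart replaces the cached code.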

Method and apparatus for feedback-based management of combined heap and compiled code caches

Disclosed are a method, apparatus and system for managing a shared heap and compiled code cache in a managed runtime environment. Based on feedback generated during runtime, a runtime storage manager dynamically allocates storage space, from a shared storage region, between a compiled code cache and a heap. For at least one embodiment, the size of the shared storage region may be increased if a growth need is identified for both the compiled code cache and the heap during a single iteration of runtime storage manager processing.

Owner:INTEL CORP

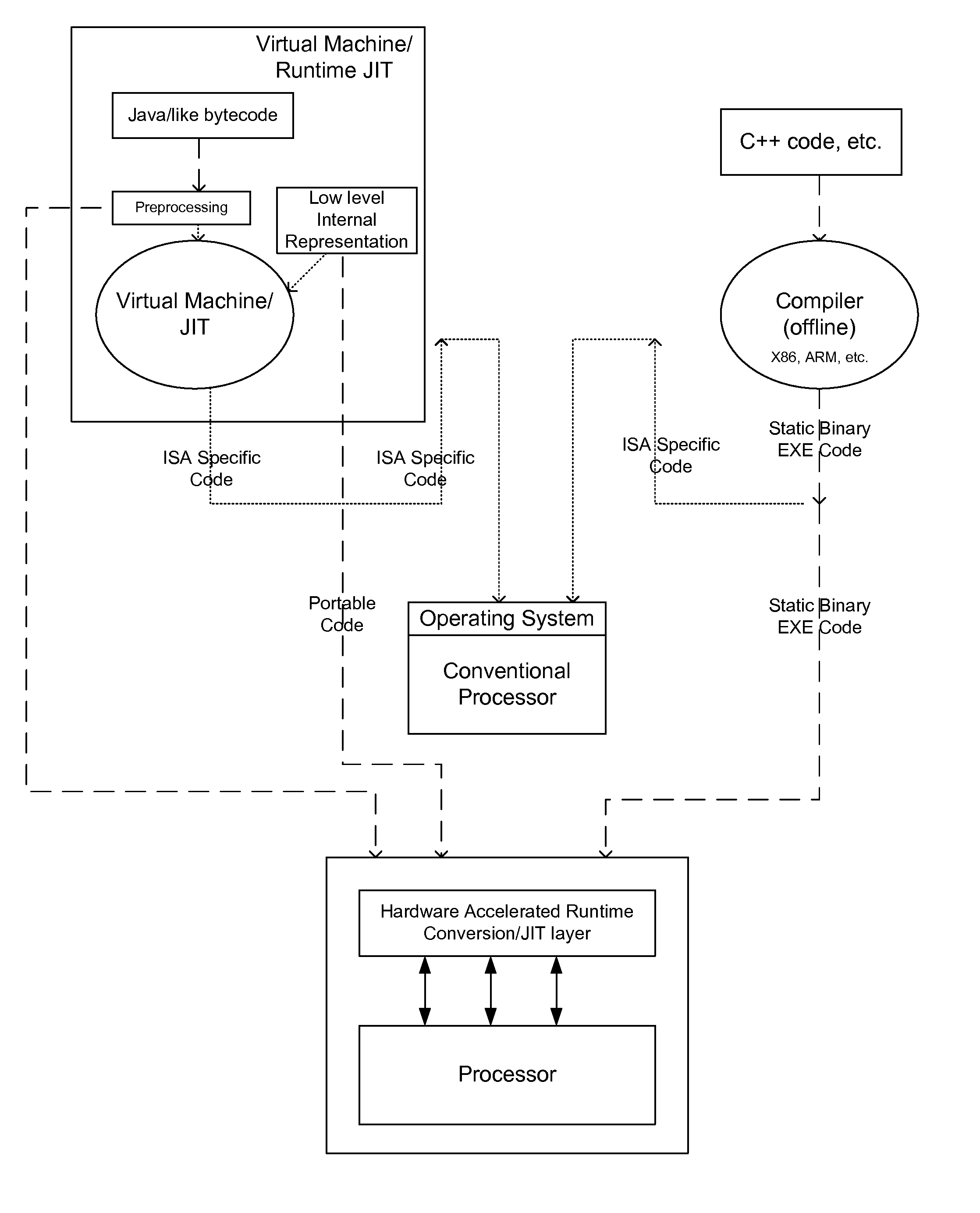

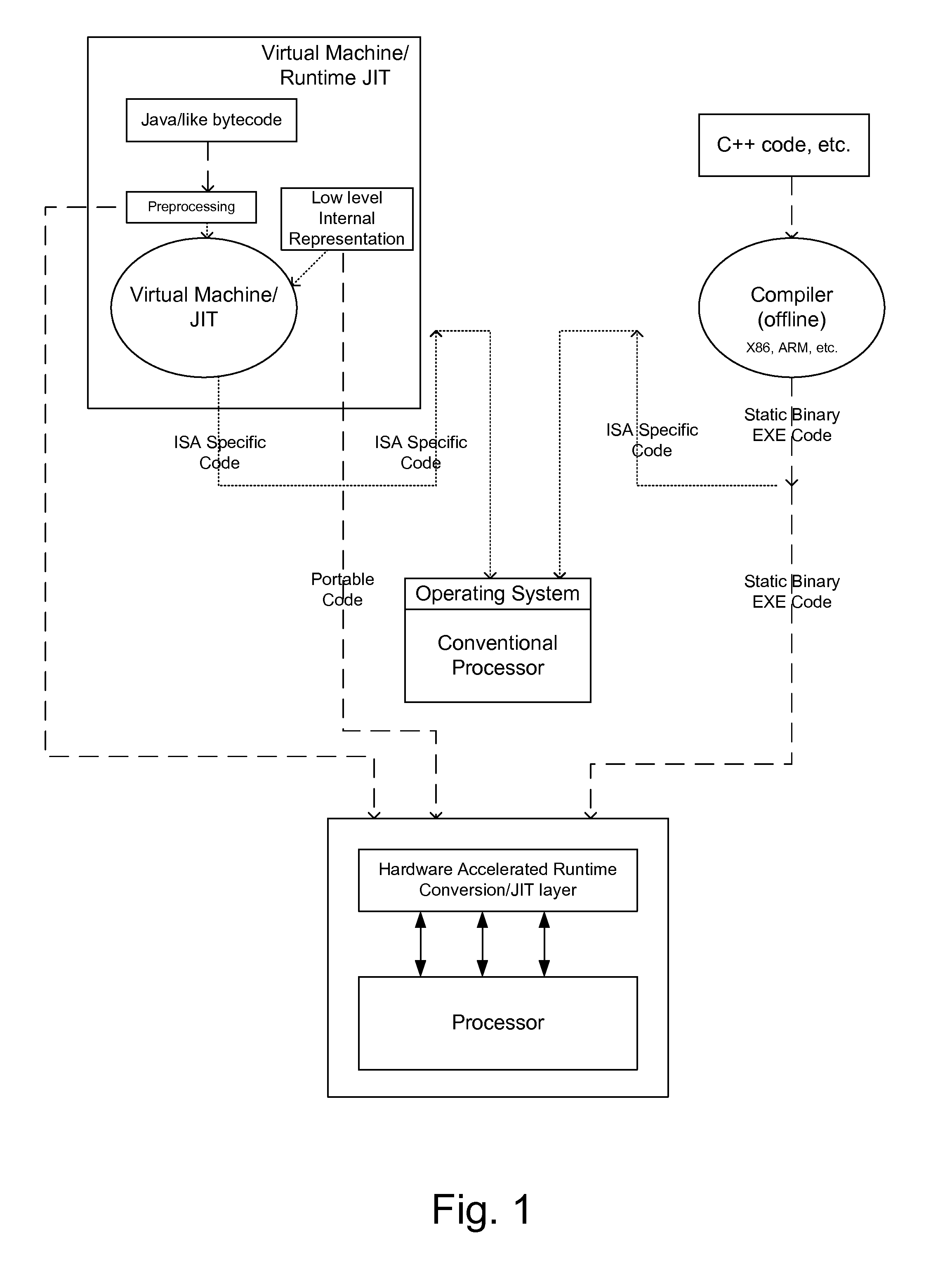

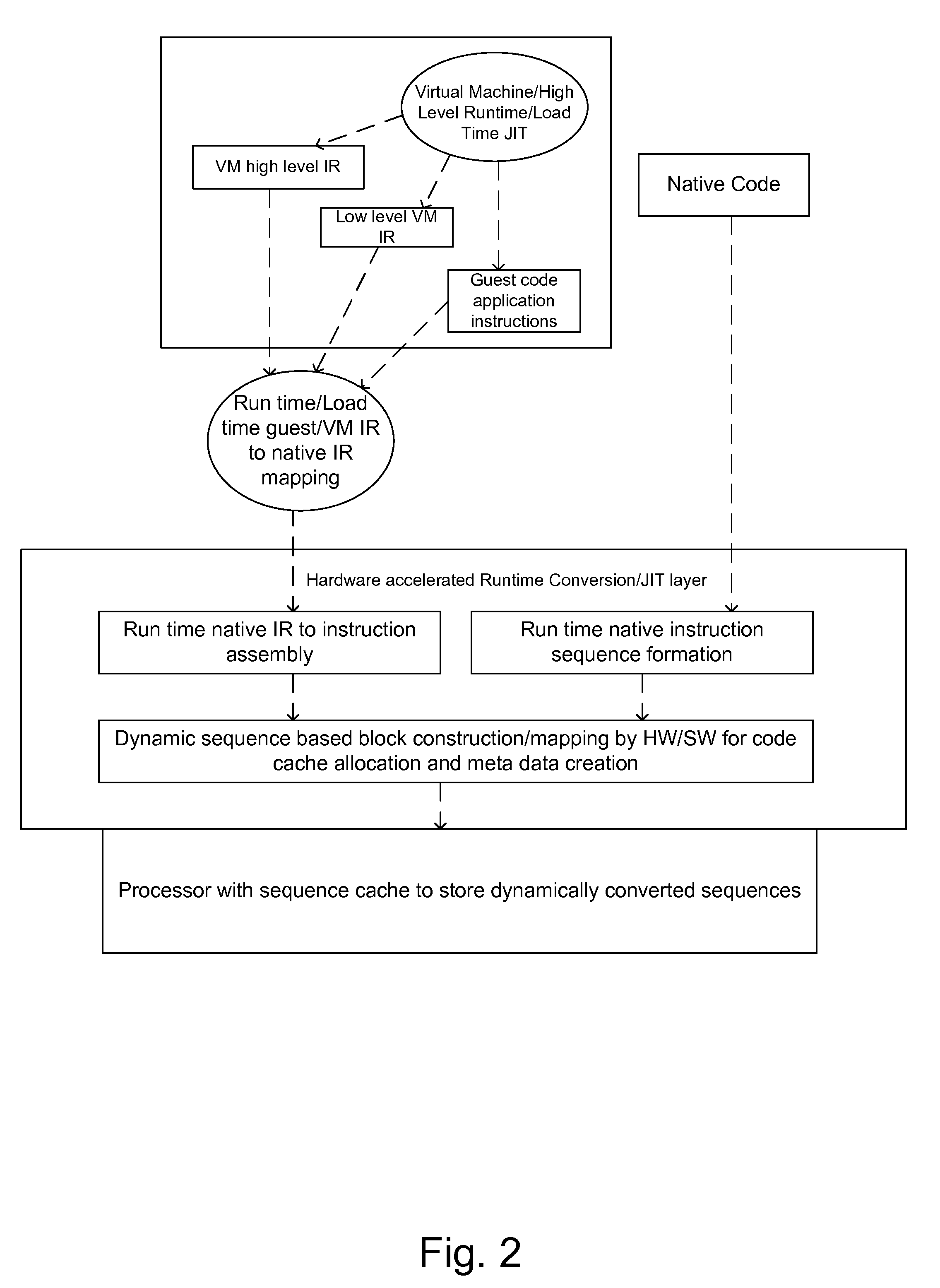

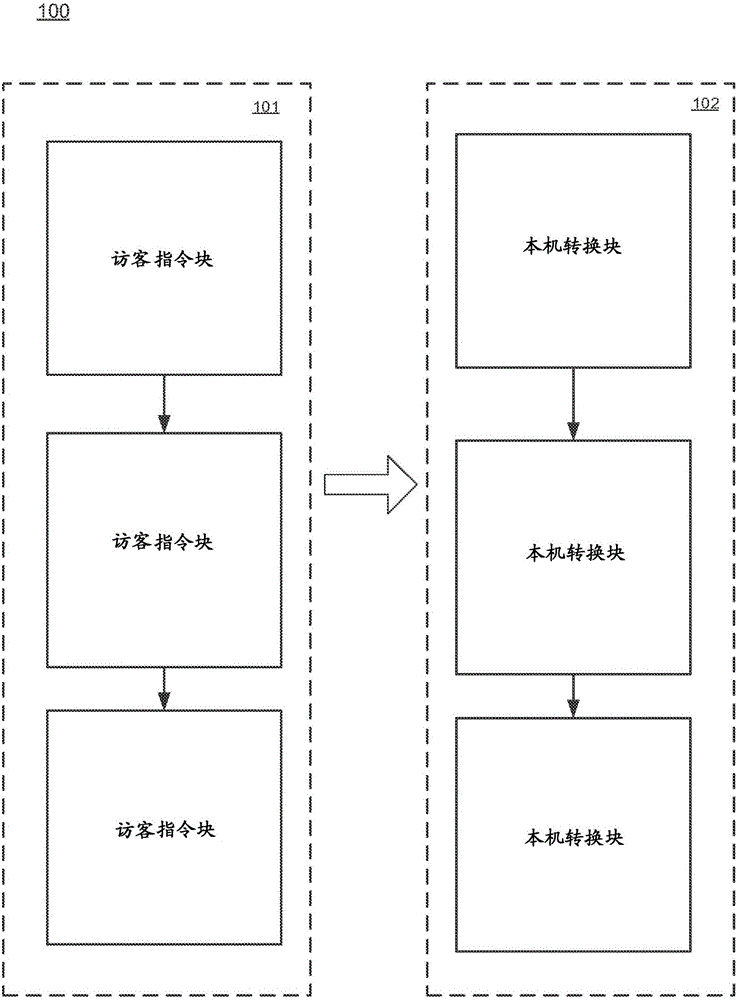

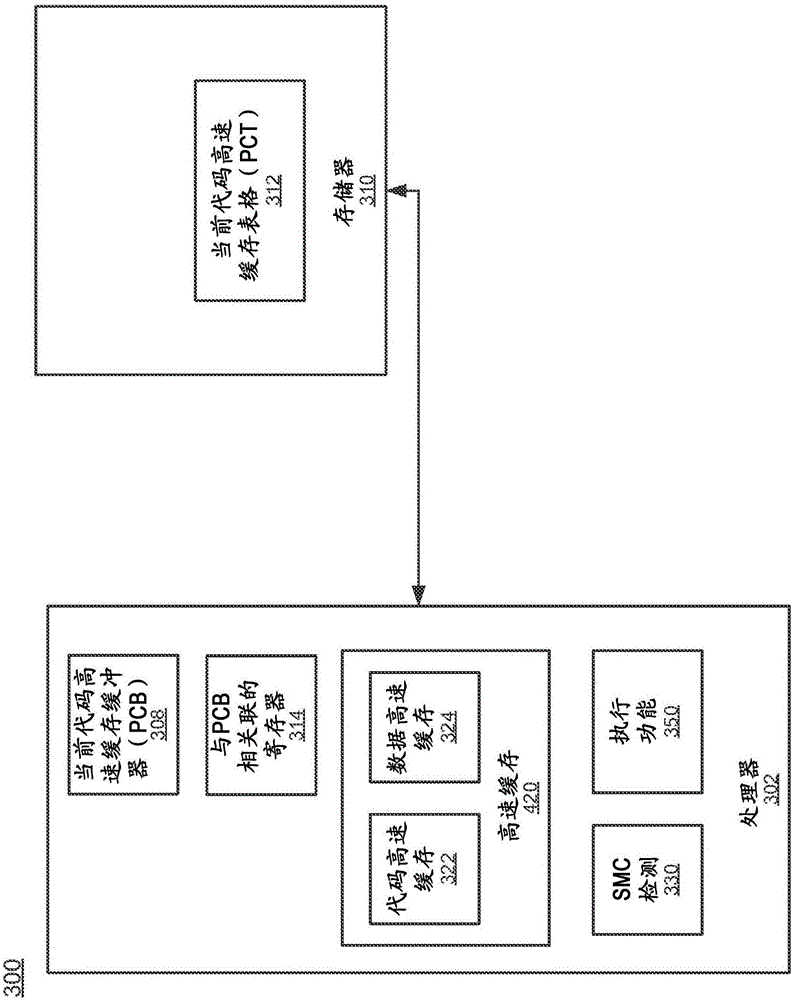

System for an instruction set agnostic runtime architecture

Active | US20160026483A1 | Tags: Execution paradigms, Software simulation/interpretation/emulation, Processing Instruction, Instruction sequence

A system for an instruction-set-agnostic runtime architecture. The system includes a close-to-bare-metal JIT conversion layer; a runtime native instruction assembly component within the conversion layer for receiving instructions from a guest virtual machine; and a runtime native instruction sequence formation component within the conversion layer for receiving instructions from native code. The system further includes a dynamic sequence block-based instruction mapping component within the conversion layer for code cache allocation and metadata creation. This mapping component is coupled to receive inputs from the runtime native instruction assembly component and the runtime native instruction sequence formation component; it receives the resulting processed instructions from both components and allocates them to a processor for execution.

Owner:INTEL CORP

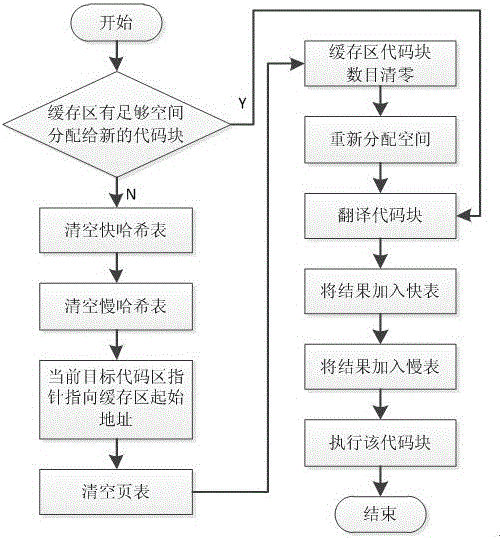

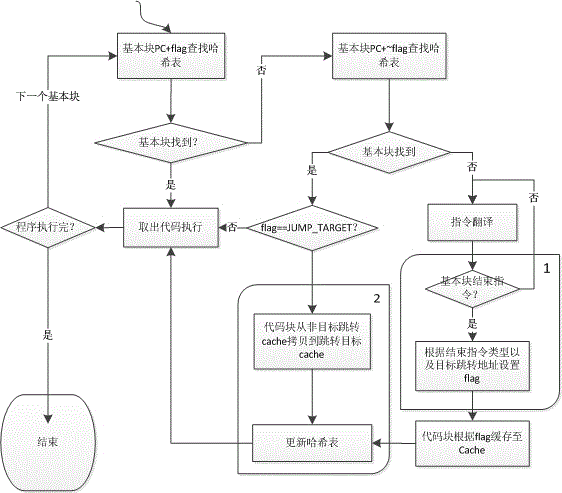

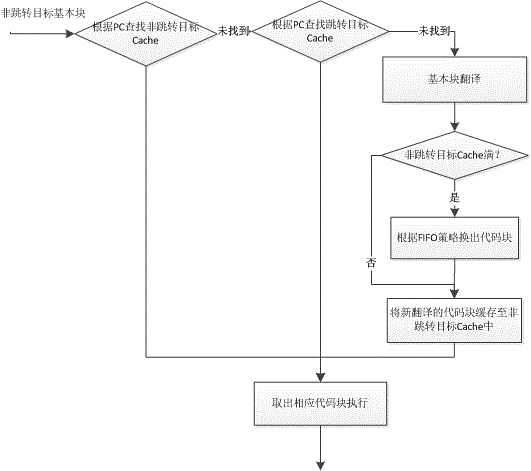

Code Cache management method in dynamic binary translation

Active | CN103150196A | Tags: Good technical effect, Avoid duplicate translations, Memory addressing/allocation/relocation, Program control, Basic block, Code cache

The invention relates to dynamic binary translation in the field of computer applications and discloses a code cache management method for dynamic binary translation. The code cache comprises a non-jump-target cache and a jump-target cache, both managed with a FIFO (first-in, first-out) policy. The method determines the type of each translated basic block, executes the non-jump-target basic block processing procedure when a block is not a jump target, and executes the jump-target basic block processing procedure when it is. The method caches translated code effectively and reduces repeated translation.

Owner:ZHEJIANG UNIV
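
The split design (a "skip target", i.e. jump-target, cache and a non-jump-target cache, each FIFO) can be sketched with two `OrderedDict`s. The caller is assumed to know whether a block is a jump target, for example because it was reached by a taken branch. The capacities are hypothetical.

```python
from collections import OrderedDict


class SplitFifoCodeCache:
    """Two FIFO code caches: one for jump-target blocks and one for the rest,
    so a stream of straight-line blocks cannot evict re-entered targets."""

    def __init__(self, target_cap, other_cap):
        self.caches = {True: OrderedDict(), False: OrderedDict()}
        self.caps = {True: target_cap, False: other_cap}
        self.translations = 0

    def get(self, addr, is_jump_target, translate):
        cache = self.caches[is_jump_target]
        if addr in cache:
            return cache[addr]                 # hit: no reordering, pure FIFO
        if len(cache) >= self.caps[is_jump_target]:
            cache.popitem(last=False)          # evict the oldest block of this kind
        self.translations += 1
        cache[addr] = translate(addr)
        return cache[addr]
```

Loop heads and other branch targets are the blocks most likely to be re-entered, so isolating them from fall-through code is what reduces repeated translation.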

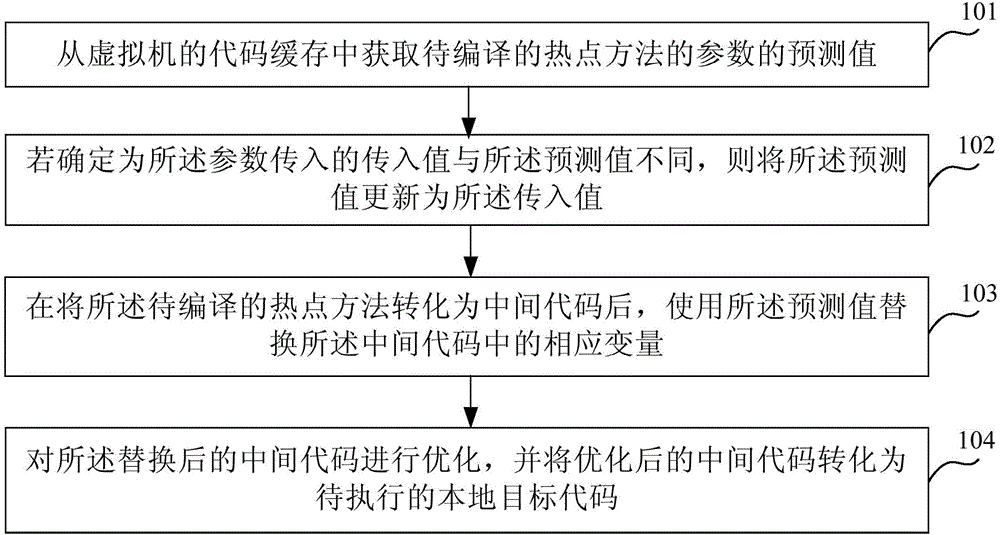

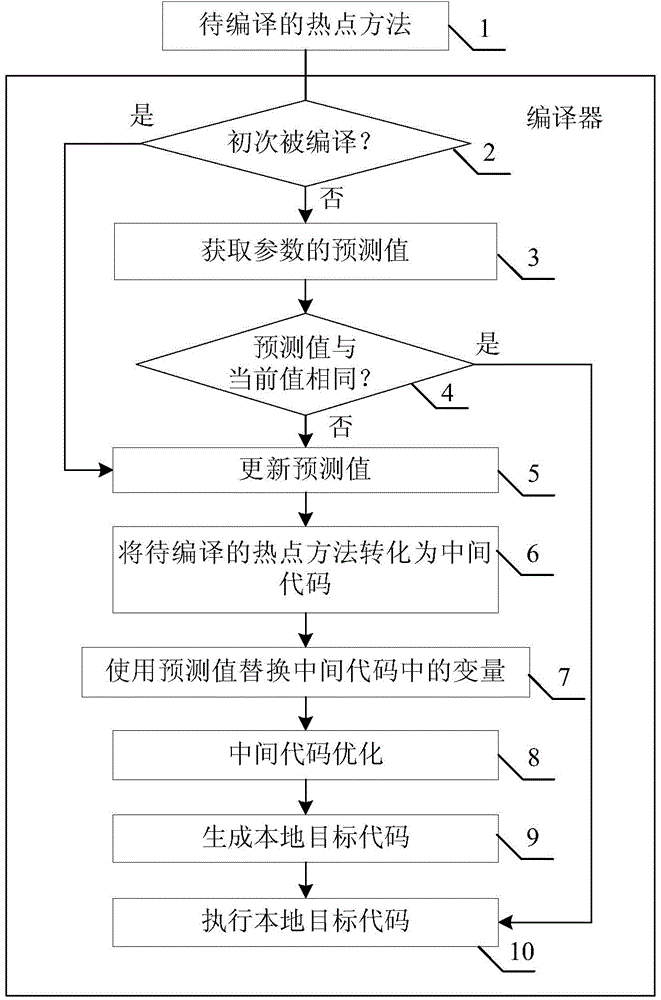

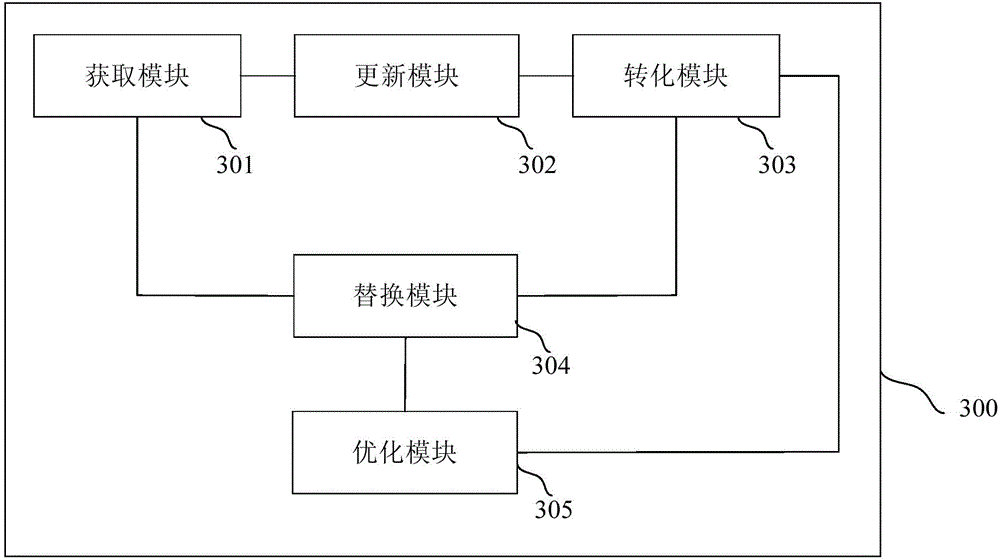

Dynamic compilation method and apparatus

Active | CN105988854A | Tags: Reduce the number of instructions, Small scale, Program control, Memory systems, Dynamic compilation, Parallel computing

Embodiments of the invention provide a dynamic compilation method and apparatus. The method obtains, from the code cache of a virtual machine, a predicted value for a parameter of a hotspot method to be compiled; if the actual input value of the parameter differs from the predicted value, the predicted value is updated to the input value. After the hotspot method is converted into intermediate code, the corresponding variable in the intermediate code is replaced with the predicted value, and the resulting intermediate code is optimized and converted into native target code for execution. The dynamic compilation method and apparatus reduce the size of the code generated by compilation and improve the system performance of the virtual machine.

Owner:LOONGSON TECH CORP
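
Substituting a predicted parameter value into intermediate code and then folding is a form of value specialization. The sketch below uses a hypothetical three-address IR of `(dest, op, a, b)` tuples with only `add` and `mul`. Code specialized this way is valid only when the parameter equals the predicted value, so a real system would also guard the entry or fall back to generic code; that guard is assumed rather than shown.

```python
def specialize(ir, param, value):
    """Replace `param` with the constant `value` and fold any operation whose
    operands become constant. Returns (remaining IR, folded constants)."""
    env = {param: value}
    out = []
    for dest, op, a, b in ir:
        a, b = env.get(a, a), env.get(b, b)        # substitute known constants
        if isinstance(a, int) and isinstance(b, int):
            env[dest] = a + b if op == "add" else a * b   # folded away
        else:
            out.append((dest, op, a, b))           # still depends on unknowns
    return out, env
```

With `n` predicted to be 3, the IR `t1 = n*4; t2 = t1 + x; t3 = n + 1` shrinks to the single instruction `t2 = 12 + x`, with `t3` known to be 4. This is the kind of code-size reduction the abstract describes.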

Method and apparatus for providing hardware support for self-modifying code

Inactive | CN106796506A | Tags: Runtime instruction translation, Concurrent instruction execution, Self-modifying code, Computer hardware

A method and apparatus for providing support for self-modifying guest code. The apparatus includes a memory, a hardware buffer, and a processor. The processor is configured to convert guest code to native code and store the converted native code equivalent of the guest code in a code cache portion of the processor. The processor is further configured to maintain the hardware buffer, which tracks the respective locations of converted code in the code cache. The hardware buffer is updated based on a respective access to a respective location in the memory associated with a respective location of converted code in the code cache. The processor is further configured to perform a request to modify a memory location after accessing the hardware buffer.

Owner:INTEL CORP
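
A software model of the tracking buffer: it records which guest pages have translations in the code cache, and every guest store consults it before writing, dropping any translation made stale by the write. Page-granular tracking (4 KiB) and blocks that never straddle a page are simplifying assumptions. The names are hypothetical.

```python
PAGE_SHIFT = 12   # hypothetical tracking granularity: 4 KiB guest pages


class SmcTracker:
    """Software model of a buffer recording which guest memory pages have
    translations in the code cache."""

    def __init__(self):
        self.page_to_blocks = {}   # guest page -> set of guest block addresses
        self.code_cache = {}       # guest block address -> native code

    def add_translation(self, guest_addr, native):
        self.code_cache[guest_addr] = native
        self.page_to_blocks.setdefault(guest_addr >> PAGE_SHIFT, set()).add(guest_addr)

    def store(self, memory, addr, value):
        """Guest store: consult the tracker first, then perform the write."""
        blocks = self.page_to_blocks.pop(addr >> PAGE_SHIFT, set())
        for b in blocks:           # translated code on this page is now stale
            del self.code_cache[b]
        memory[addr] = value
        return sorted(blocks)
```

Stores to pages with no translated code pass straight through, so the common case costs only one lookup.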

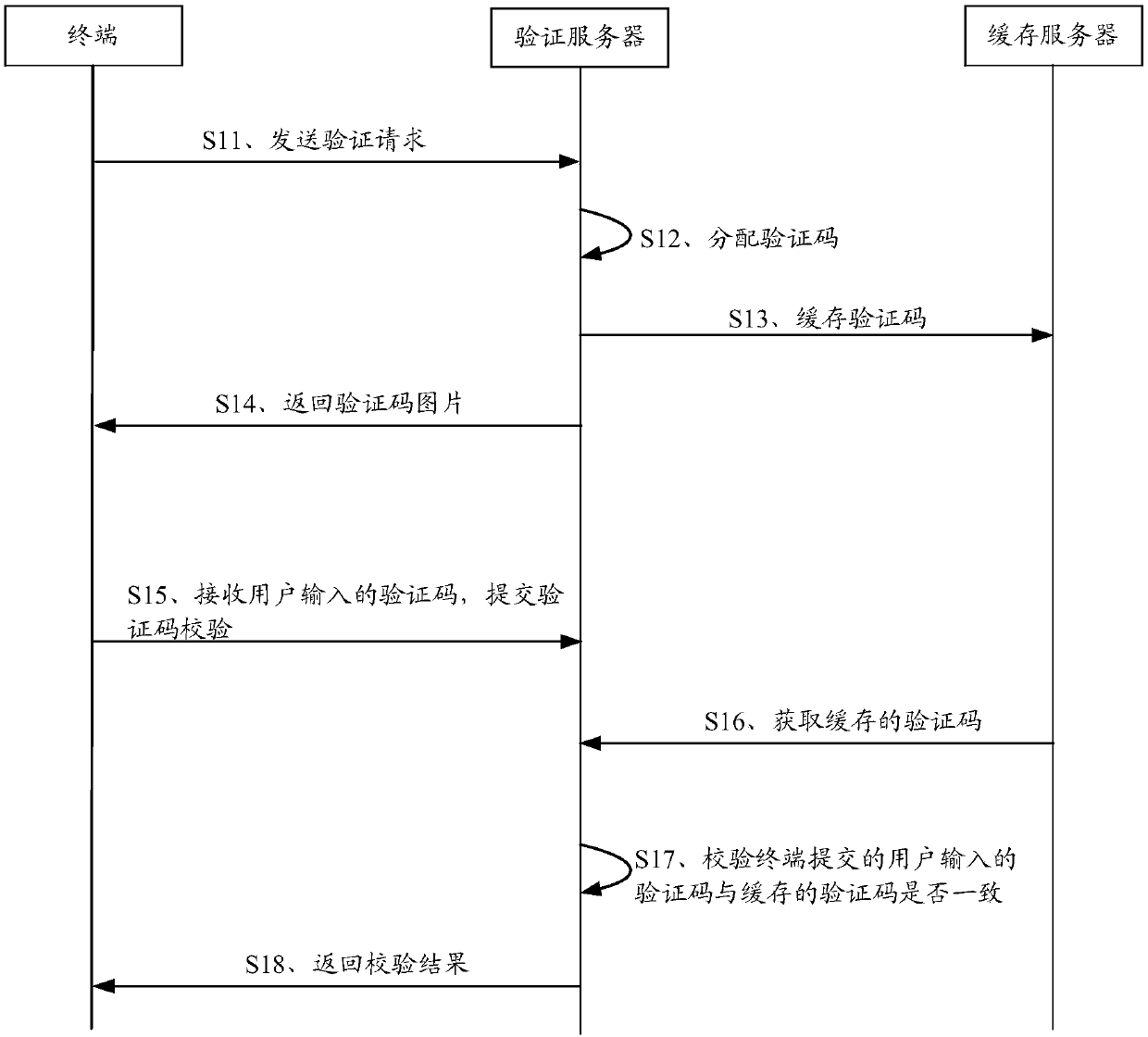

Verification code verification method and device

Active | CN110798433A | Tags: Avoid validation failures, Improve verification efficiency, Transmission, Programming language, Cache server

The invention discloses a verification code verification method and device that solve the prior-art problem of verification failure when a cache server crashes or the network is abnormal. In the method, a verification server receives a verification request sent by a terminal, allocates a verification code to the terminal, and sends the allocated verification code to a cache server for caching. The server encrypts the allocated verification code and its validity period with a private key to generate an encrypted string, writes the encrypted string into a verification code picture carrying the allocated verification code by means of picture steganography, and returns the picture to the terminal. It then receives the verification code entered by the user and sent by the terminal, obtains the verification code cached by the cache server for that terminal, and verifies the input. When obtaining the cached verification code from the cache server fails, the server returns cache exception information to the terminal, so that the terminal can verify the user's input locally against the verification code picture.

Owner:GUANGZHOU XIAOPENG MOTORS TECH CO LTD

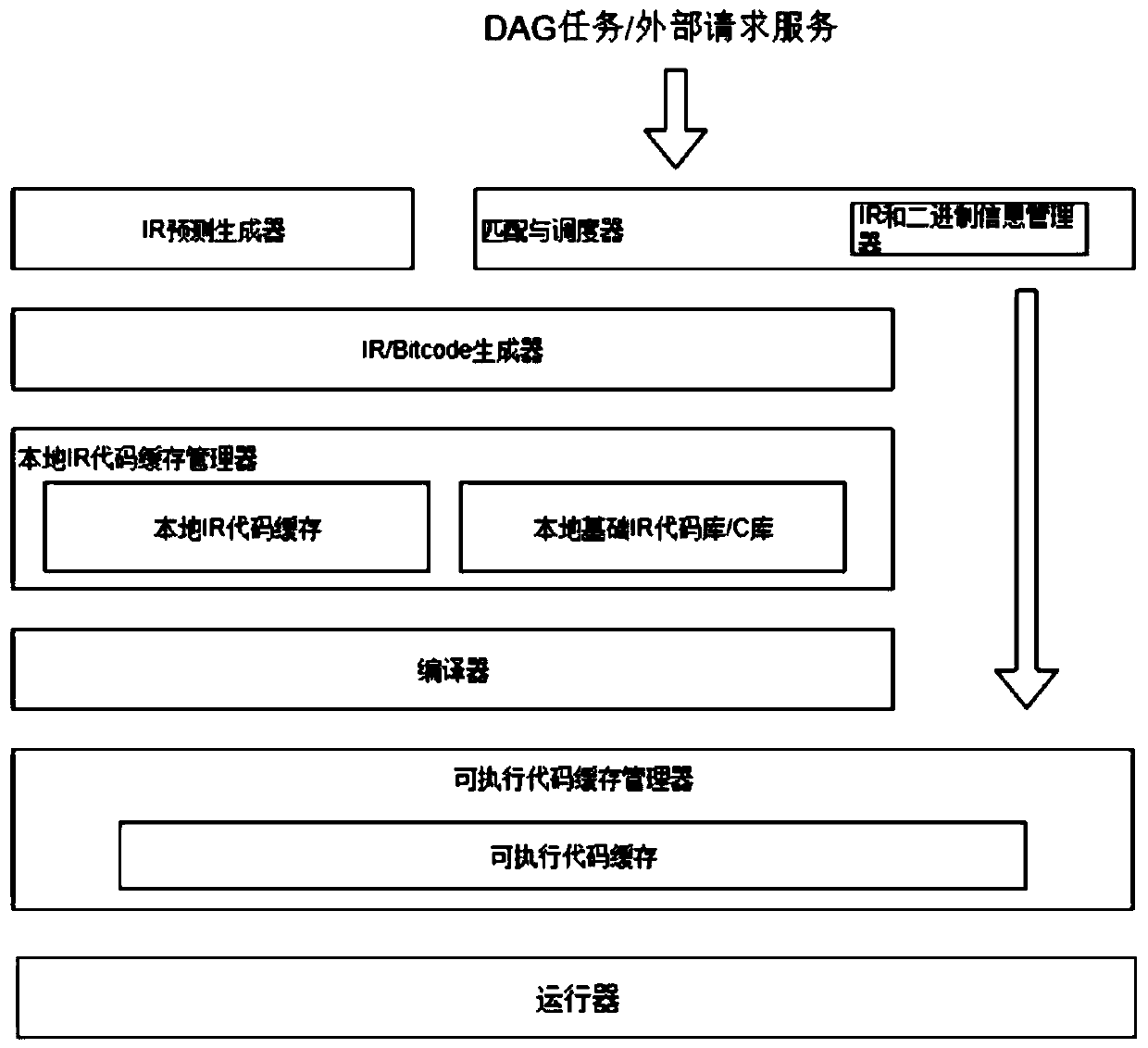

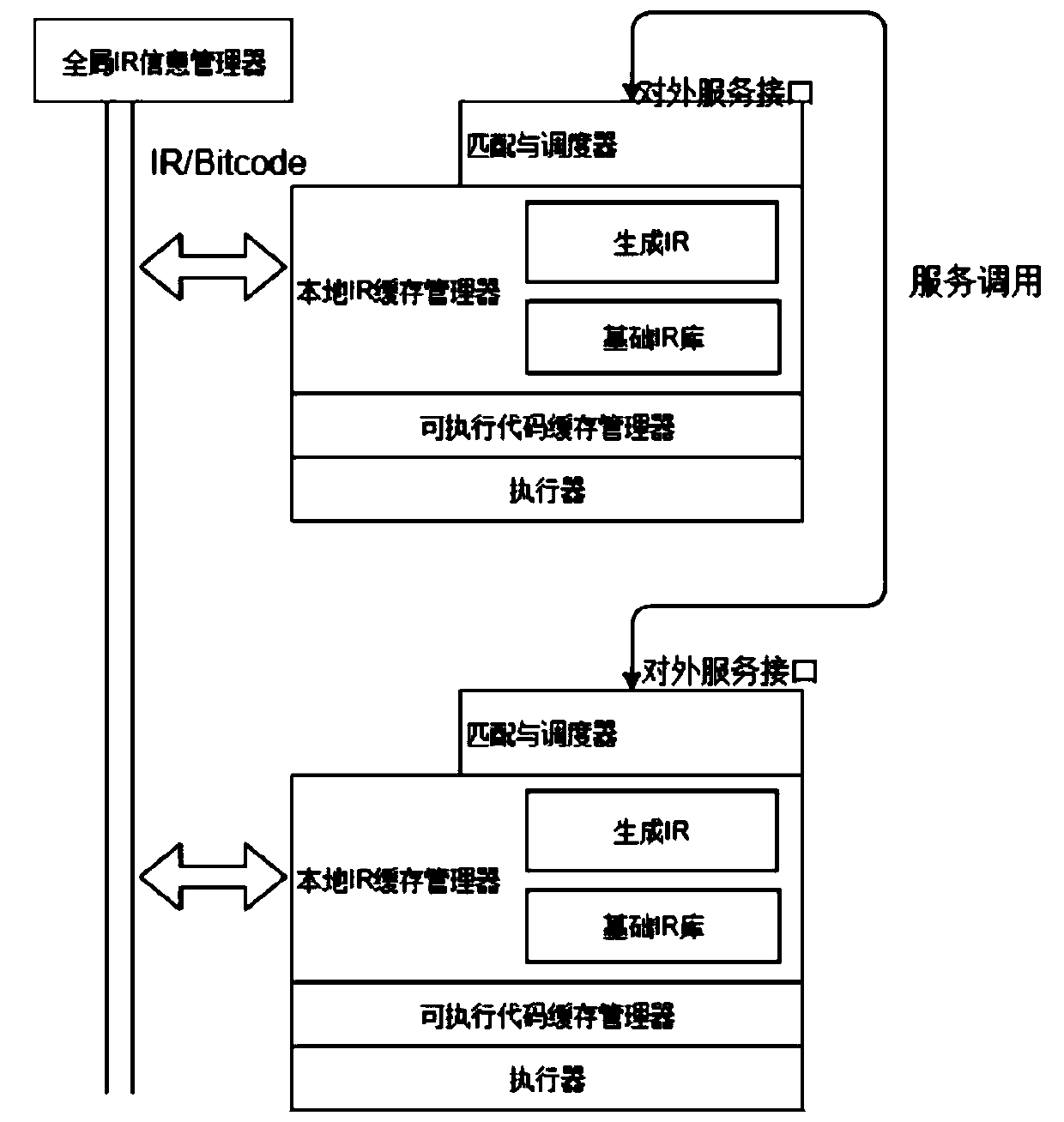

Distributed in-memory columnar database compilation executor architecture

Active | CN110119275A | Tags: Fill in design gaps, Calculation speed block, Code compilation, Distributed memory, Parallel computing

The invention discloses a compilation executor architecture for a distributed in-memory columnar database, which addresses the slow execution of the DAG operator execution mode in such databases. The compiling executor system on a single machine comprises a matching and scheduling device, an IR/Bitcode generator, a local IR code cache manager, a compiler, an executable code cache manager, and an executor. The local IR code cache manager contains a local IR code cache unit, and the executable code cache manager contains an executable code cache unit. The matching and scheduling device coordinates the local IR code cache manager and the executable code cache manager, manages mapping information, matches externally requested DAG tasks to existing code for execution or triggers code generation, and responds to external requests. The IR/Bitcode generator generates IR code for DAG tasks that have no matching executable code, and the local IR code caching unit performs cache management for the IR code generated by the IR/Bitcode generator.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
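
The lookup order implied by the two cache managers is: executable cache, then IR cache, then IR generation, with compilation feeding the executable cache. A sketch under these assumptions: a DAG task is keyed by a SHA-1 of its `repr`, and `generate_ir`/`compile_ir` are caller-supplied stand-ins for the IR/Bitcode generator and compiler. The names are hypothetical.

```python
import hashlib


class CompileExecutor:
    """Executable-code cache first, then IR cache, then IR generation."""

    def __init__(self, generate_ir, compile_ir):
        self.generate_ir = generate_ir
        self.compile_ir = compile_ir
        self.exec_cache = {}
        self.ir_cache = {}
        self.stats = {"exec_hit": 0, "ir_hit": 0, "generated": 0}

    @staticmethod
    def signature(dag):
        # Canonical text of the operator DAG -> stable cache key.
        return hashlib.sha1(repr(dag).encode()).hexdigest()

    def run(self, dag, data):
        key = self.signature(dag)
        fn = self.exec_cache.get(key)
        if fn is not None:
            self.stats["exec_hit"] += 1
        else:
            ir = self.ir_cache.get(key)
            if ir is not None:
                self.stats["ir_hit"] += 1            # skip generation, recompile only
            else:
                ir = self.ir_cache[key] = self.generate_ir(dag)
                self.stats["generated"] += 1
            fn = self.exec_cache[key] = self.compile_ir(ir)
        return fn(data)
```

Keeping IR separately means that, if executable code is discarded, a repeated task pays only for compilation, not for regeneration.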

© 2025 PatSnap. All rights reserved.