AI model privacy protection method for resisting membership inference attacks based on adversarial examples

An adversarial example and privacy protection technology, applied in the field of AI model privacy protection against membership inference attacks. It can solve the problems of degraded target model performance, longer target model training time, and difficulty in getting target model training to converge, with the effects of eliminating gradient instability and defending against inference attacks.

Examples

Example 1

[0048] This example is carried out in Colab, using PyTorch as the deep learning framework. ResNet-34 is the target model and CIFAR-10 is the dataset. CIFAR-10 contains 60,000 32×32 three-channel (RGB) images in 10 classes, of which 50,000 form the training set and 10,000 form the test set. The target model is trained with the cross-entropy loss (CrossEntropyLoss) and the Adam optimizer, with a learning rate of 0.0005, for 20 epochs. After training, the target model reaches 99% accuracy on the training set and 56% accuracy on the test set.
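The training configuration described above could be sketched in PyTorch roughly as follows. This is a minimal sketch under stated assumptions, not the patent's code: `train_target_model` is a hypothetical helper name, the batch size is not given in the source, and a tiny stand-in network with random tensors replaces ResNet-34 and CIFAR-10 so that the snippet runs quickly.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train_target_model(model, loader, lr=0.0005, epochs=20):
    """Train with cross-entropy loss and Adam (hypothetical helper).

    Returns the mean training loss of each epoch.
    """
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    history = []
    model.train()
    for _ in range(epochs):
        total, count = 0.0, 0
        for images, labels in loader:
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            total += loss.item() * labels.size(0)
            count += labels.size(0)
        history.append(total / count)
    return history


# Stand-in data shaped like CIFAR-10 (3x32x32 images, 10 classes).
torch.manual_seed(0)
images = torch.randn(64, 3, 32, 32)
labels = torch.randint(0, 10, (64,))
# Batch size 16 is an assumption; the source does not state one.
loader = DataLoader(TensorDataset(images, labels), batch_size=16, shuffle=True)

# Stand-in model; the example itself uses ResNet-34.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
losses = train_target_model(model, loader, lr=0.0005, epochs=20)
```

Swapping in a real ResNet-34 and a CIFAR-10 data loader would leave the loop itself unchanged; only `model` and `loader` differ.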

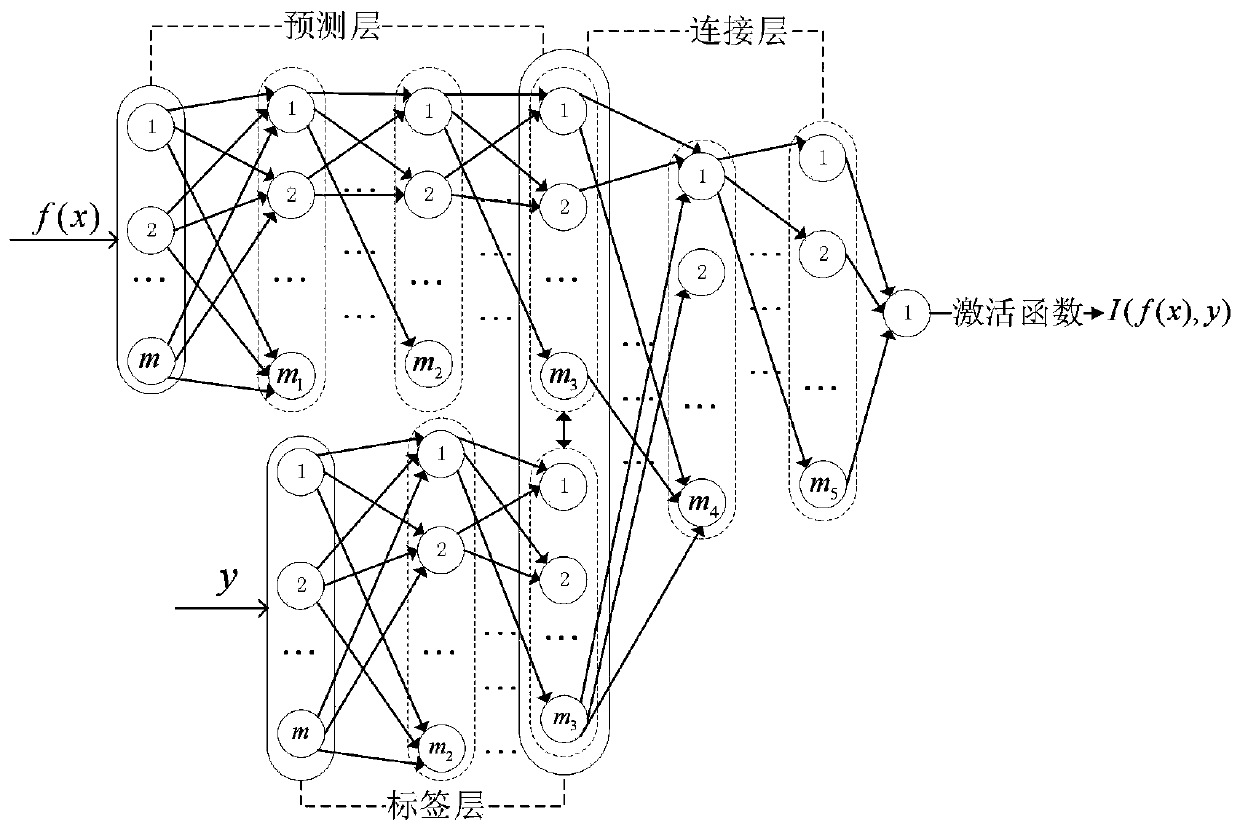

[0049] The architecture of the membership inference model used to construct adversarial examples is shown in Figure 2. It has two inputs: the output of the target model for a given data sample, and the label of that sample. Among them, the dimension parameters of each layer of the three sub-networks of the predictio...
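The two-input architecture described above might look like the following PyTorch sketch. The source truncates the layer dimensions, so every width here is a hypothetical placeholder. Reading the three sub-networks as a prediction branch, a label branch, and a joint branch that merges them is also an assumption, as is the class name `MembershipInferenceModel`.

```python
import torch
from torch import nn


class MembershipInferenceModel(nn.Module):
    """Two-input attack model: (target output, label) -> membership score.

    All layer widths are hypothetical; the source does not show them.
    """

    def __init__(self, num_classes=10, hidden=64):
        super().__init__()
        self.num_classes = num_classes
        # Branch for the target model's output vector.
        self.prediction_net = nn.Sequential(nn.Linear(num_classes, hidden), nn.ReLU())
        # Branch for the one-hot encoded label.
        self.label_net = nn.Sequential(nn.Linear(num_classes, hidden), nn.ReLU())
        # Joint branch that merges both feature vectors into one logit.
        self.joint_net = nn.Sequential(
            nn.Linear(2 * hidden, hidden), nn.ReLU(), nn.Linear(hidden, 1)
        )

    def forward(self, prediction, label):
        one_hot = nn.functional.one_hot(label, self.num_classes).float()
        features = torch.cat(
            [self.prediction_net(prediction), self.label_net(one_hot)], dim=1
        )
        # Sigmoid maps the logit to a score in (0, 1).
        return torch.sigmoid(self.joint_net(features)).squeeze(1)


torch.manual_seed(0)
attack_model = MembershipInferenceModel(num_classes=10)
prediction = torch.softmax(torch.randn(4, 10), dim=1)  # stand-in target outputs
label = torch.randint(0, 10, (4,))                     # stand-in labels
scores = attack_model(prediction, label)
```

Each entry of `scores` can be read as the model's estimate that the corresponding sample was in the target model's training set.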

Example 2

[0060] This example is carried out in the Colab environment, using PyTorch as the deep learning framework. The target model is a linear neural network with only an input layer and an output layer. The dataset is the AT&T face image set, which contains 400 grayscale single-channel images of 112×92 pixels in 40 classes, with 10 images per class. 300 images are randomly selected as the training set and the remaining 100 are used as the test set. The target model uses the cross-entropy loss and the SGD optimizer with a learning rate of 0.001, and is trained for 30 epochs. After training, the target model reaches 100% accuracy on the training set and 95% accuracy on the test set.
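One plausible reading of a network with only an input and an output layer is a single fully connected layer from the flattened 112×92 image to the 40 class scores. The sketch below follows that reading, which is an assumption. The variable names are illustrative, and a zero tensor stands in for real AT&T images.

```python
import torch
from torch import nn

IMAGE_HEIGHT, IMAGE_WIDTH, NUM_CLASSES = 112, 92, 40

# Flatten each 1x112x92 image, then map it directly to 40 class logits.
linear_model = nn.Sequential(
    nn.Flatten(),
    nn.Linear(IMAGE_HEIGHT * IMAGE_WIDTH, NUM_CLASSES),
)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(linear_model.parameters(), lr=0.001)

# 112 * 92 = 10,304 inputs; 10,304 * 40 weights + 40 biases = 412,200 parameters.
num_params = sum(p.numel() for p in linear_model.parameters())

batch = torch.zeros(2, 1, IMAGE_HEIGHT, IMAGE_WIDTH)  # stand-in grayscale batch
logits = linear_model(batch)
```

With 412,200 parameters and only 300 training images, such a model can fit its training set exactly, which is consistent with the 100% training accuracy reported.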

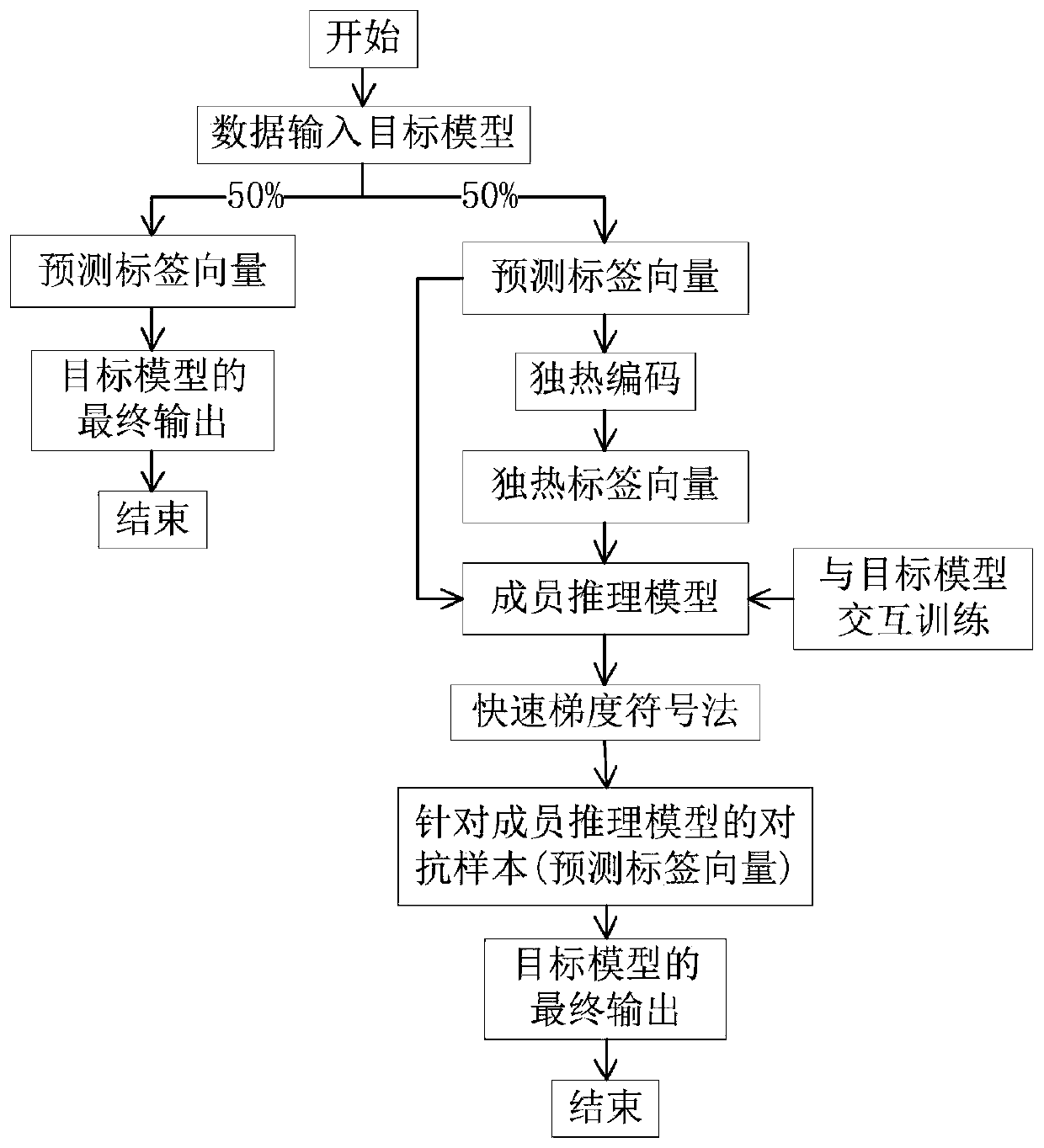

[0061] In this example, the architecture of the membership inference model used to construct adversarial examples is shown in Figure 1. The structure of the membership inference model used to construct the adversarial examples in Ex...

Example 3

[0069] This example is carried out in the Colab environment, using PyTorch as the deep learning framework. The target model is VGG-16 and the dataset is CIFAR-10, which contains 60,000 32×32 three-channel (RGB) images in 10 classes; the training set contains 50,000 images and the test set contains 10,000 images. The target model is trained with the cross-entropy loss (CrossEntropyLoss) and the Adam optimizer, with a learning rate of 0.0001, for 20 epochs. After training, the target model reaches 89% accuracy on the training set and 63% accuracy on the test set.
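The difference between training and test accuracy is a common rough indicator of overfitting, which membership inference attacks tend to exploit. The short calculation below uses only the accuracies reported in Examples 1 to 3. Treating the gap as a vulnerability indicator is general background, not a claim made by the source.

```python
# (train accuracy %, test accuracy %) as reported in Examples 1 to 3.
reported = {
    "Example 1 (ResNet-34, CIFAR-10)": (99, 56),
    "Example 2 (linear model, AT&T faces)": (100, 95),
    "Example 3 (VGG-16, CIFAR-10)": (89, 63),
}

# Gap in percentage points between training and test accuracy.
gaps = {name: train - test for name, (train, test) in reported.items()}

for name, gap in gaps.items():
    print(f"{name}: gap = {gap} percentage points")
```

The gaps are 43, 5, and 26 percentage points respectively, so the three target models cover strongly, weakly, and moderately overfitted cases.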

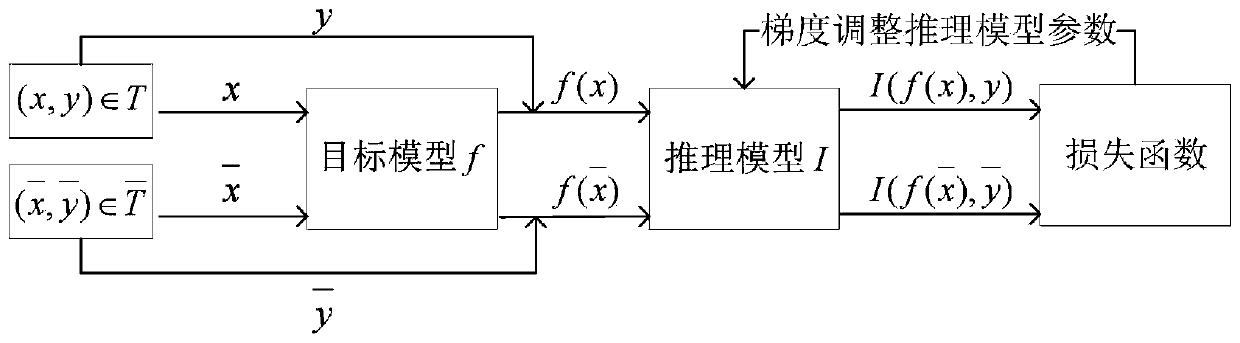

[0070] When training the membership inference model used to construct adversarial examples, the first 5,000 images of the target model's test set are used as the non-training-set data, and the first 5,000 images of the target model's training set are selected as the target model training set data T, and the training set of the member ...
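The selection of member and non-member data described above can be illustrated with plain Python. This is a sketch under assumptions: `build_membership_dataset` is a hypothetical helper, the 1/0 labels for member and non-member are a conventional choice not stated in the source, and string identifiers stand in for the actual images. How the paragraph continues after the truncation is not covered.

```python
def build_membership_dataset(train_set, test_set, n=5000):
    """Pair the first n training samples with label 1 (member) and the
    first n test samples with label 0 (non-member)."""
    members = [(sample, 1) for sample in train_set[:n]]
    non_members = [(sample, 0) for sample in test_set[:n]]
    return members + non_members


# Stand-ins for the CIFAR-10 training set (50,000) and test set (10,000).
train_ids = [f"train_{i}" for i in range(50_000)]
test_ids = [f"test_{i}" for i in range(10_000)]

attack_data = build_membership_dataset(train_ids, test_ids)
num_members = sum(label for _, label in attack_data)
```

The resulting set is balanced, with 5,000 members and 5,000 non-members, so a membership inference model that guesses at random would score about 50% accuracy on it.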
