56 patent results for "Inference attack"

An inference attack is a data mining technique in which data is analyzed to illegitimately gain knowledge about a subject or database. A subject's sensitive information can be considered leaked if an adversary can infer its real value with high confidence, which is a breach of information security. An inference attack occurs when a user is able to infer more substantial information about a database from seemingly trivial information, without directly accessing it. The goal of an inference attack is to piece together information at one security level to determine a fact that should be protected at a higher security level.
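As a minimal sketch of the definition above, a differencing attack shows how two individually harmless aggregate answers can be combined to recover one protected value. The salary table, the names, and the `total_salary` query helper are hypothetical and exist only for illustration.

```python
# Toy illustration only: the table, names, and query helper are hypothetical.
SALARIES = {"alice": 52000, "bob": 61000, "carol": 58000, "dave": 75000}


def total_salary(excluding=None):
    """Aggregate query: the only interface the attacker is allowed to use."""
    return sum(value for name, value in SALARIES.items() if name != excluding)


def infer_salary(target):
    """Differencing attack: subtract two permitted aggregates to isolate one row."""
    return total_salary() - total_salary(excluding=target)


print(infer_salary("dave"))
```

Neither query returns an individual record, yet their difference does, which is the sense in which information at one security level reveals a fact protected at a higher one.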

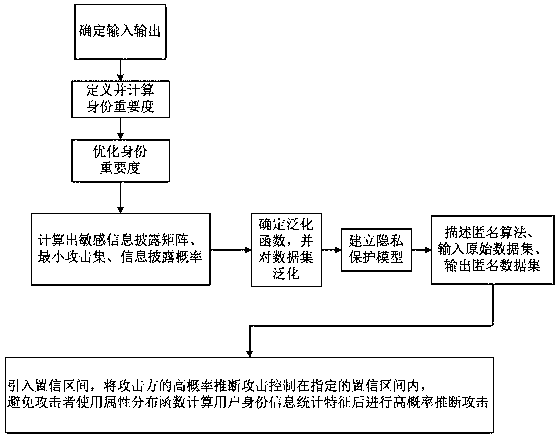

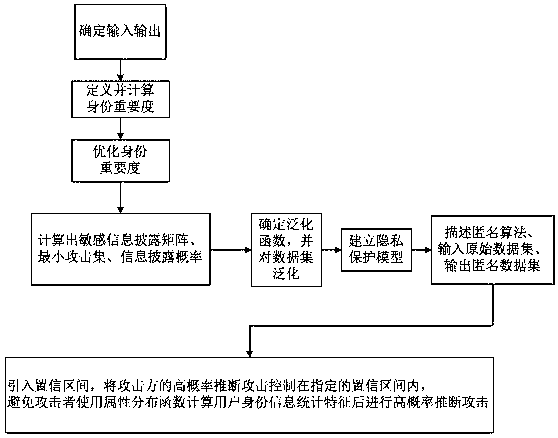

Method for protecting privacy of identity information based on sensitive information measurement

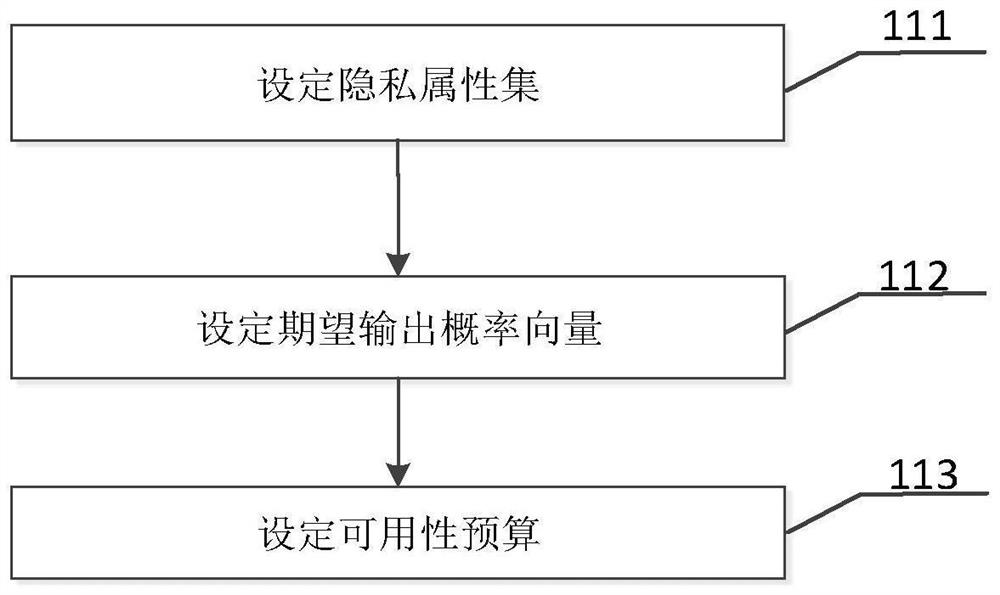

Active | CN106940777A | Effective protection | Improve protection | Digital data protection | Data set | Inference attack

The invention discloses a method for protecting the privacy of identity information based on sensitive information measurement. The method comprises the following steps: S1, determining input and output; S2, defining and calculating the identity importance degree; S3, optimizing the identity importance degree; S4, calculating a sensitive information disclosure matrix, a minimum attack set and an information disclosure probability; S5, determining a generalization function and generalizing the dataset; S6, establishing a privacy protection model that resists background knowledge attacks; S7, describing a (gamma, eta)-Risk anonymity algorithm that takes an original dataset D as input and outputs an anonymized dataset D'; S8, introducing a confidence interval and keeping an attacker's high-probability inference attack within the specified confidence interval, so as to prevent an attribute distribution function from being used to calculate a user's identity information and features and to perform a high-probability inference attack. The method addresses the difficulty that existing privacy protection methods have in handling privacy attacks based on background knowledge, and protects key identity and identity-sensitive information more comprehensively and effectively.

Owner:湖南宸瀚科技有限公司

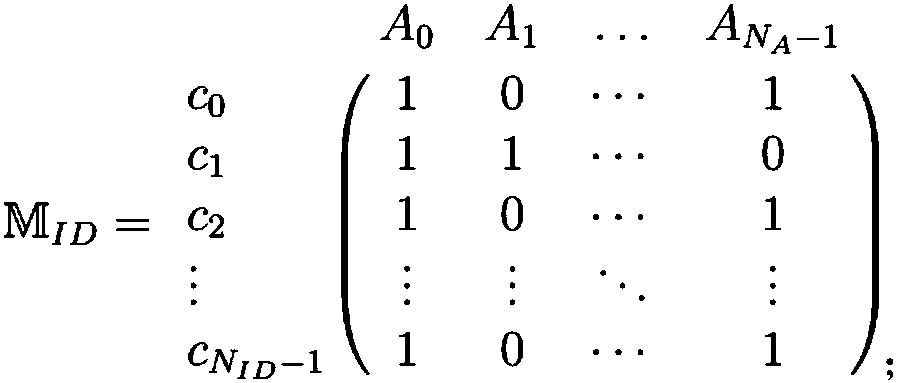

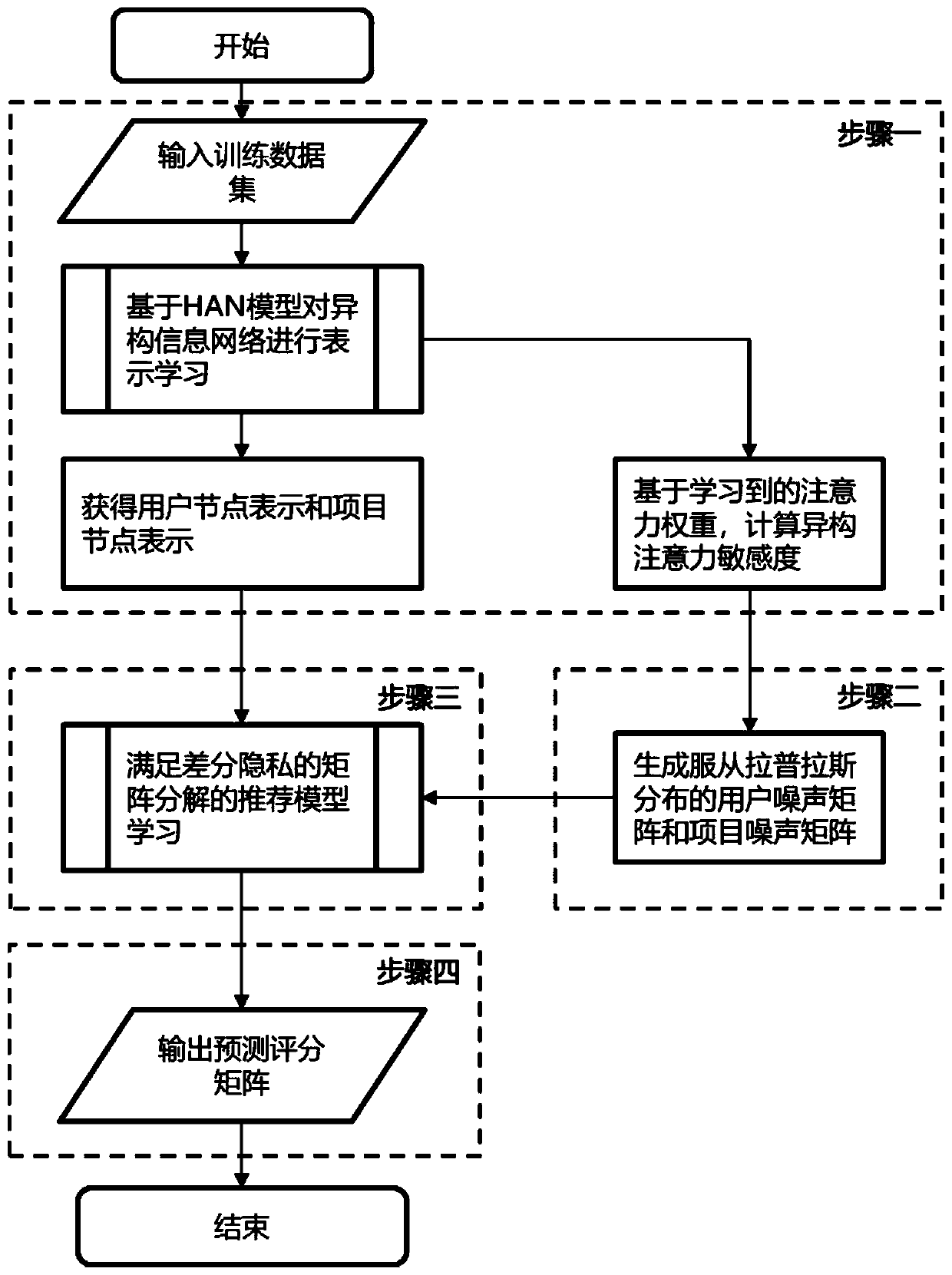

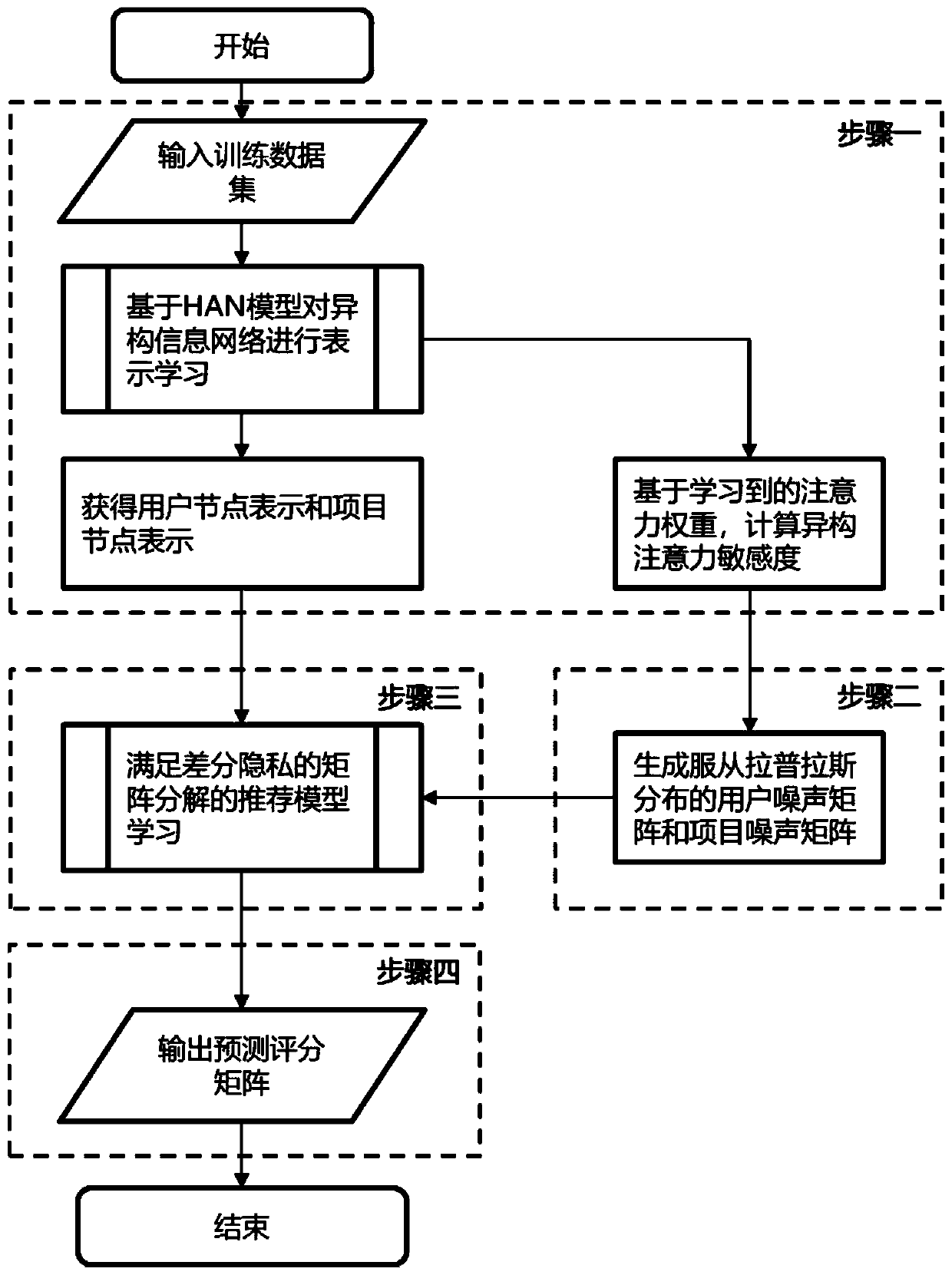

Differential privacy recommendation method based on heterogeneous information network embedding

Pending | CN111177781A | Learning Probabilistic Correlations | Mitigating Privacy Leakage | Digital data information retrieval | Digital data protection | Attack | Inference attack

The invention provides a differential privacy recommendation method based on heterogeneous information network embedding. The method comprises four steps: performing network representation learning with HAN, and calculating the heterogeneous attention sensitivity from the HAN representations and attention weights; based on the definition of differential privacy, using the heterogeneous attention sensitivity to generate corresponding random noise, and generating a random noise matrix through a heterogeneous attention random perturbation mechanism; constructing an objective function for differentially private recommendation with embedded heterogeneous information, and learning it to obtain a prediction score matrix; and outputting the score matrix as privacy-preserving prediction scores. The original rating data is thereby protected in recommendation scenarios on heterogeneous information networks: an attacker is prevented from strengthening an inference attack with heterogeneous information network data obtained through other channels, and from guessing or relearning the original rating data with high probability by observing changes in the recommendation results.

Owner:BEIHANG UNIV
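The noise-generation step in the abstract above can be illustrated with the standard Laplace mechanism. This is a sketch under assumptions, not the patented mechanism: the abstract does not specify how the heterogeneous attention sensitivity is computed or what noise distribution is used, so `sensitivity` is a plain hypothetical parameter and Laplace noise is a stand-in.

```python
import math
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample by inverse-CDF sampling."""
    u = rng.random() - 0.5  # uniform on [-0.5, 0.5)
    # max(...) guards against log(0) in the rare case u == -0.5.
    return -scale * math.copysign(1.0, u) * math.log(max(1.0 - 2.0 * abs(u), 1e-300))


def perturb_scores(scores, sensitivity, epsilon, seed=0):
    """Add Laplace noise with scale sensitivity/epsilon to every score.

    `sensitivity` stands in for the patent's attention-derived sensitivity
    (assumption); `epsilon` is the usual differential privacy budget.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = random.Random(seed)
    scale = sensitivity / epsilon
    return [[s + laplace_noise(scale, rng) for s in row] for row in scores]


noisy = perturb_scores([[4.0, 3.5], [1.0, 5.0]], sensitivity=1.0, epsilon=0.5)
print(noisy)
```

A smaller `epsilon` gives a larger noise scale and therefore stronger privacy at the cost of less accurate scores.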

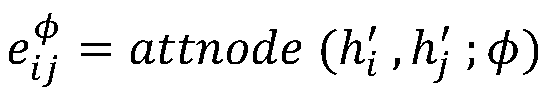

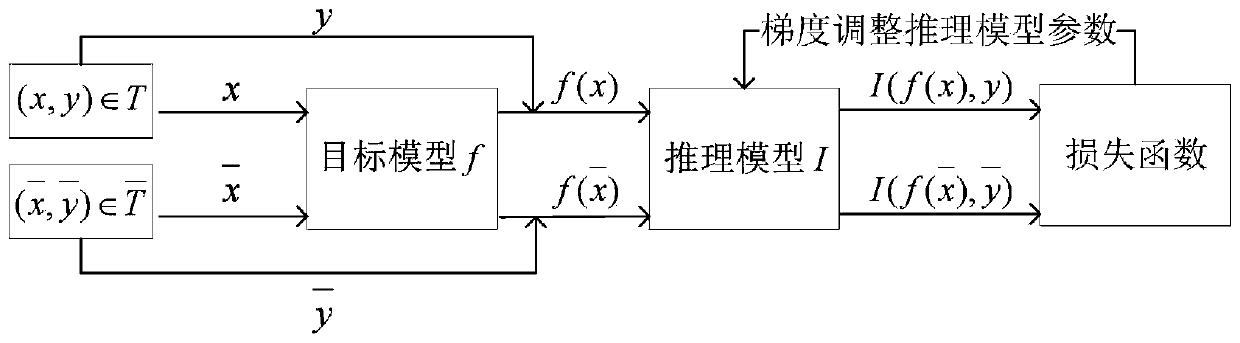

AI model privacy protection method for resisting member reasoning attack based on adversarial sample

Inactive | CN110516812A | Eliminate stability | Elimination training does not converge | Machine learning | Inference methods | One-hot | Inference attack

The invention discloses an AI model privacy protection method that resists membership inference attacks based on adversarial samples. The method comprises the following steps: (1) training a target model in the ordinary way; (2) obtaining a trained membership inference model through interactive training with the target model; (3) when the target model receives an input, feeding the prediction label vector output by the target model, together with the one-hot label vector obtained by one-hot encoding that prediction label vector, into the trained membership inference model, and perturbing the prediction label vector using the output of the membership inference model and the fast gradient sign method to construct an adversarial sample against the membership inference model; and (4) having the target model output the adversarial sample with a probability of 50%, and otherwise keeping the original output unchanged. The method avoids problems such as gradient instability, long training time and slow convergence caused by traditional defenses.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

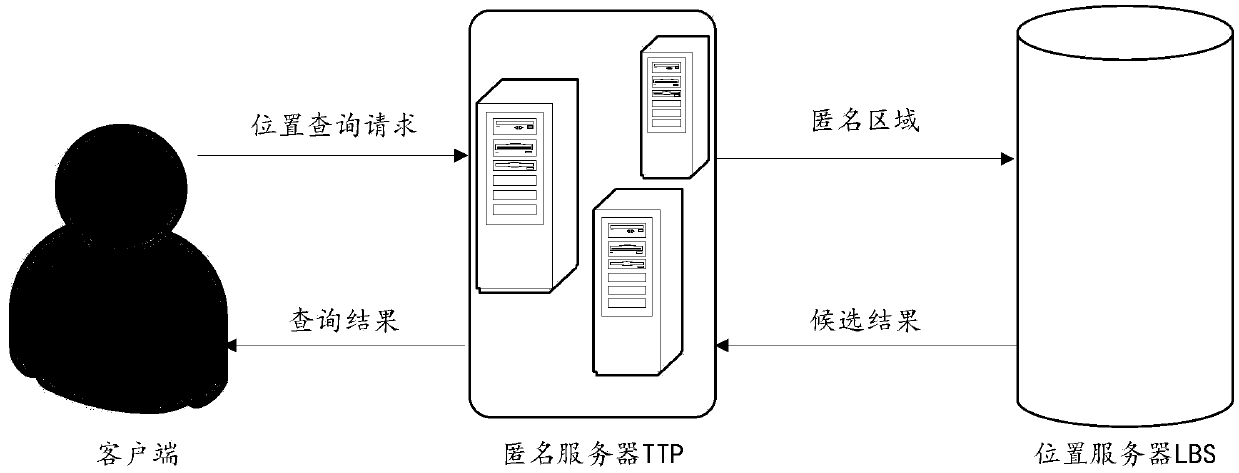

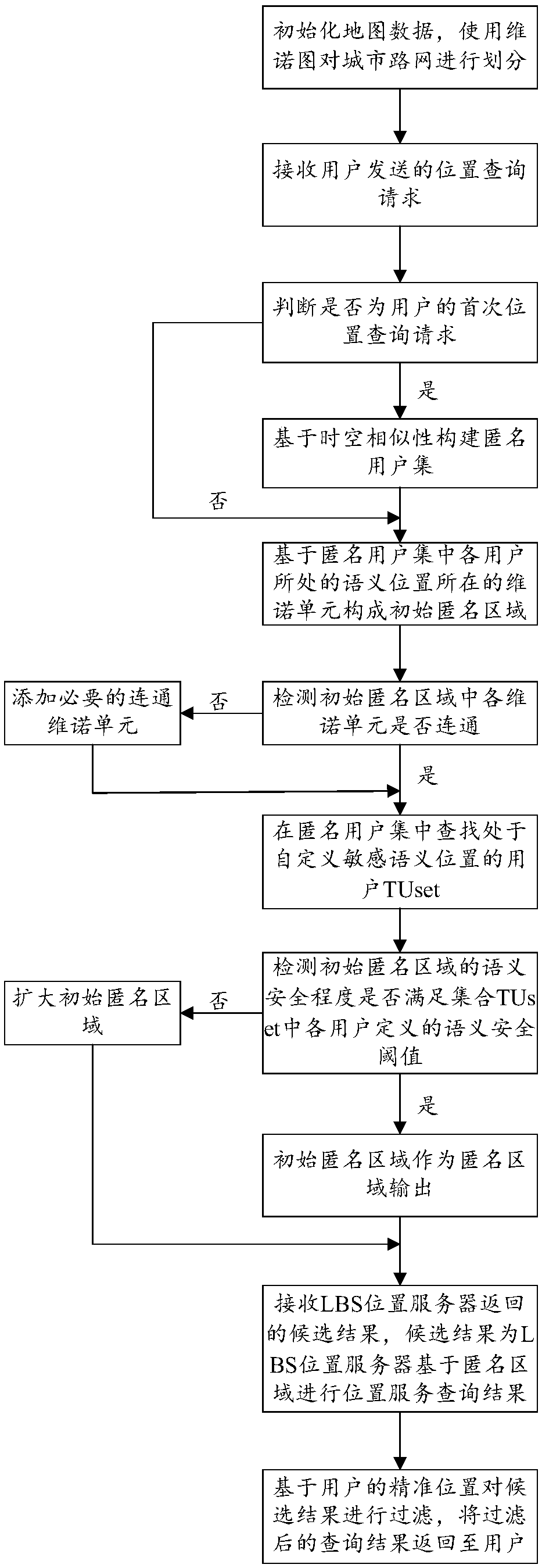

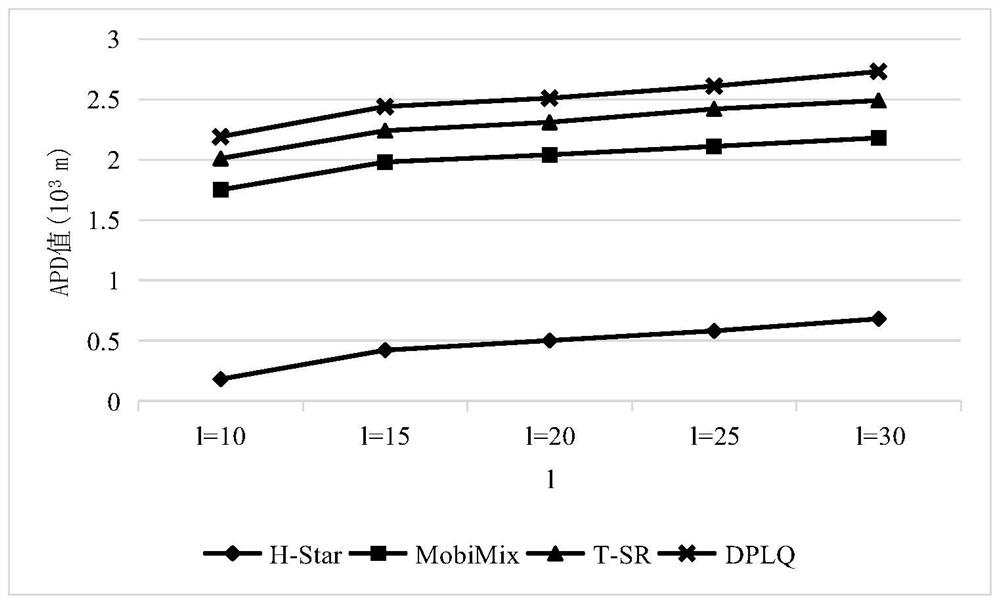

Method for protecting sensitive semantic location privacy for continuous query in road network environment

Inactive | CN109618282A | Avoid attack | Increased protection | Location information based service | Security arrangement | Inference attack | Road networks

The invention is applicable to the technical field of privacy protection, and provides a method for protecting sensitive semantic location privacy for continuous queries in a road network environment. The method comprises the following steps: S1, receiving a location query request sent by a user; S2, judging whether the location query request is the user's first location query request; if so, constructing an anonymous user set based on spatio-temporal similarity, and if not, executing step S3; S3, constructing a semantically secure anonymous area for the anonymous user set, and sending the semantically secure anonymous area to an LBS location server; S4, receiving candidate results returned by the LBS location server; and S5, filtering the candidate results based on the user's precise location, and returning the filtered query result to the user. The method effectively prevents an attacker from using a semantic inference attack against user location privacy under a continuous-query location service; by constructing the semantically secure anonymous area, it also strengthens the degree of protection that the K-anonymity algorithm provides for user location privacy under such a service.

Owner:ANHUI NORMAL UNIV

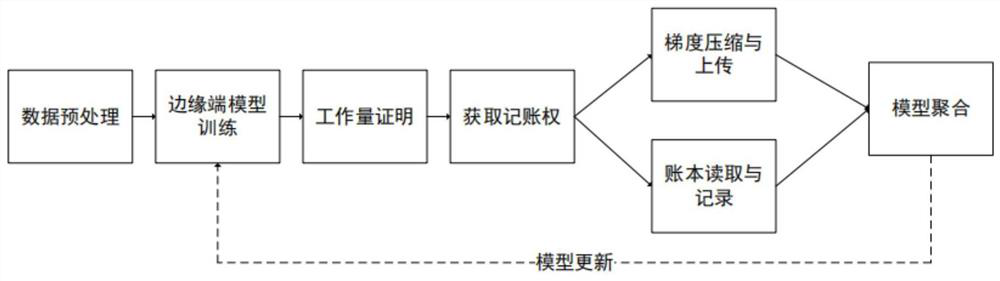

Federal learning member reasoning attack defense method and device based on block chain decentration

Pending | CN113467928A | Guarantee data security | Protect data privacy and security | Resource allocation | Database distribution/replication | Algorithm | Inference attack

The invention discloses a federated learning membership inference attack defense method and device based on blockchain decentralization. The method comprises the following steps: an edge end participating in federated learning trains a model on local data to obtain the gradient of the local model; the edge end performs proof of work with its computing power, obtains the accounting right for a ledger block according to the proof of work, compresses the gradient of the local model, uploads the compressed gradient to the ledger block, and puts the ledger block containing the compressed gradient on the chain as a blockchain node; the ledger block is broadcast on the blockchain to the edge ends corresponding to the other ledger blocks; and in each round one edge end on the blockchain is randomly selected as a temporary central server, which aggregates the compressed model gradients of the edge ends on the blockchain to obtain that round's aggregated model and issues it to the edge ends for the next round of training. The method thereby defends against membership inference attacks on the data.

Owner:HANGZHOU QULIAN TECH CO LTD

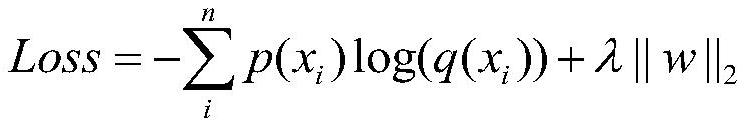

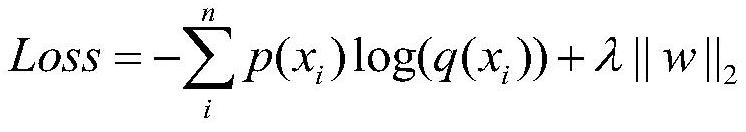

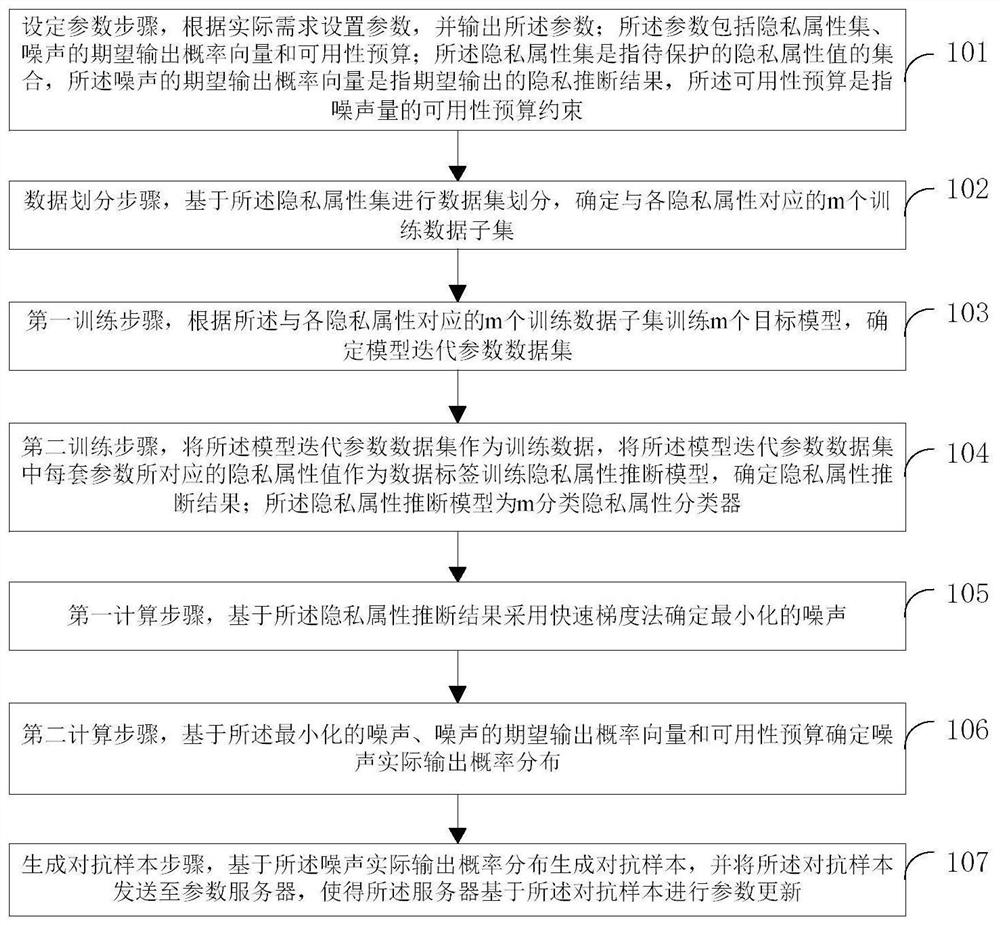

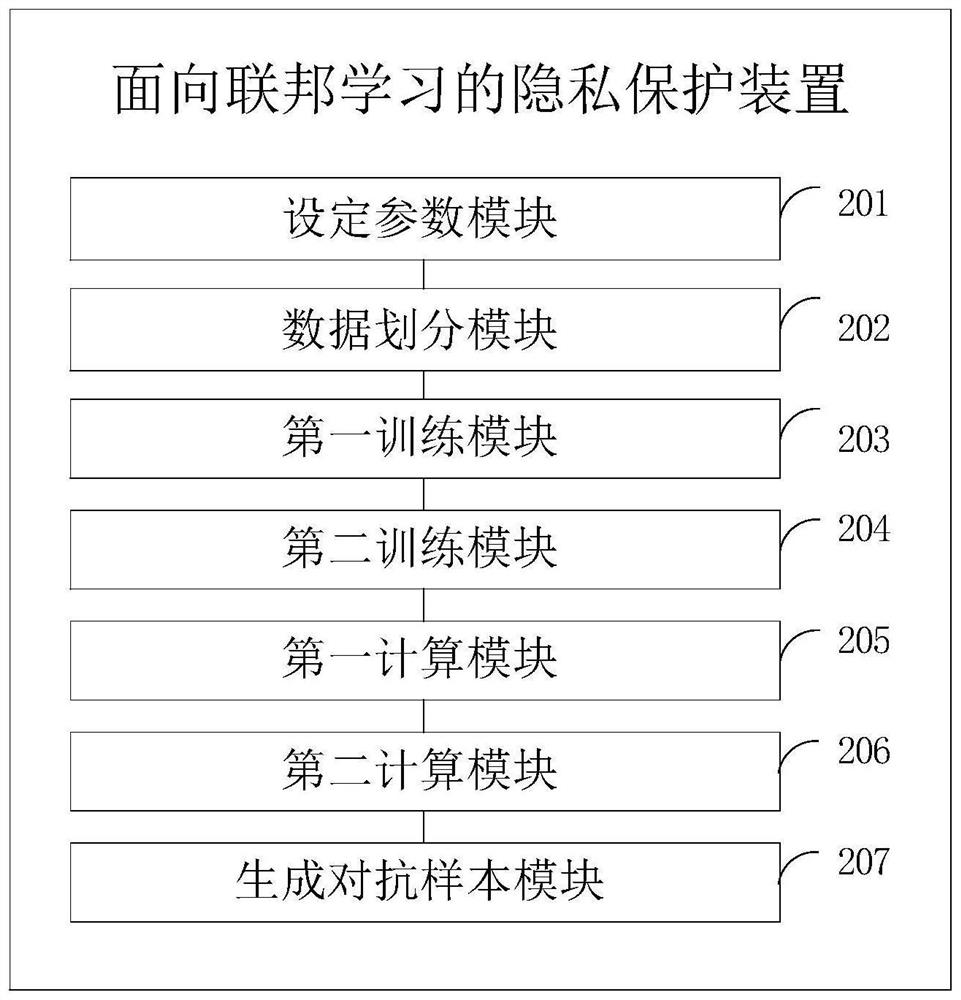

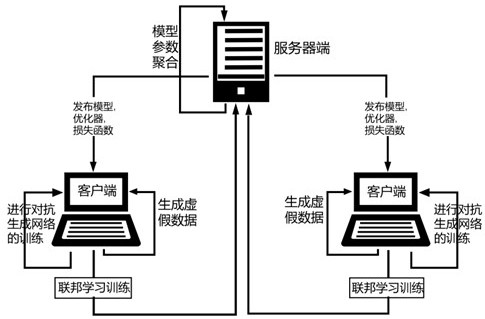

Federated learning-oriented privacy protection method and federated learning-oriented privacy protection device

Active | CN112668044A | Balance accuracy | Ensemble learning | Digital data protection | Inference attack | Privacy protection

The embodiment of the invention provides a federated learning-oriented privacy protection method and device. The method comprises a parameter setting step, a data partitioning step, a first training step, a second training step, a first calculation step, a second calculation step and an adversarial sample generation step. The embodiment adopts the idea of adversarial samples: a certain amount of noise is added to parameter updates to perturb the distribution characteristics of the parameters, so that after the noised update passes through a privacy attribute inference model, the inference result is output randomly according to the probability distribution the user expects. This resists privacy attribute inference attacks and mitigates the leakage of privacy attributes in federated learning.

Owner:INST OF INFORMATION ENG CAS

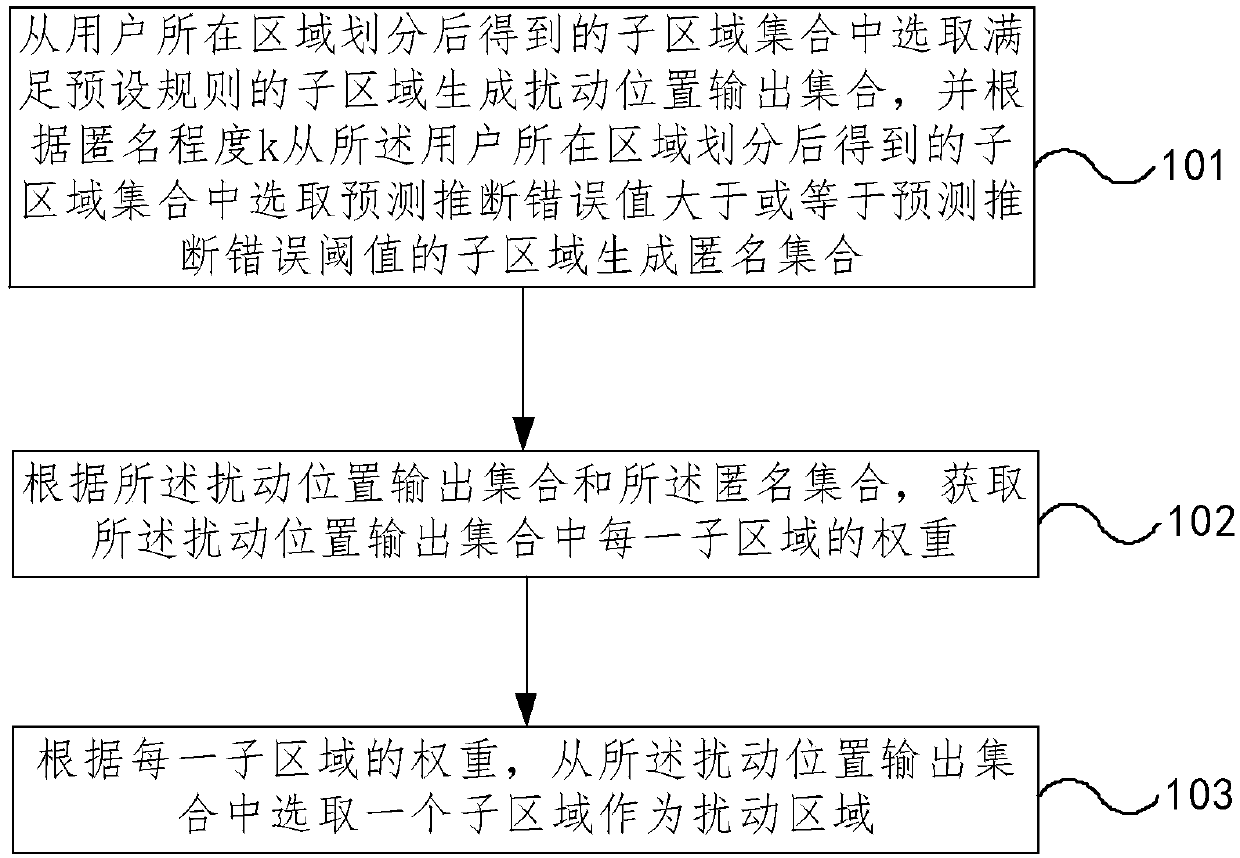

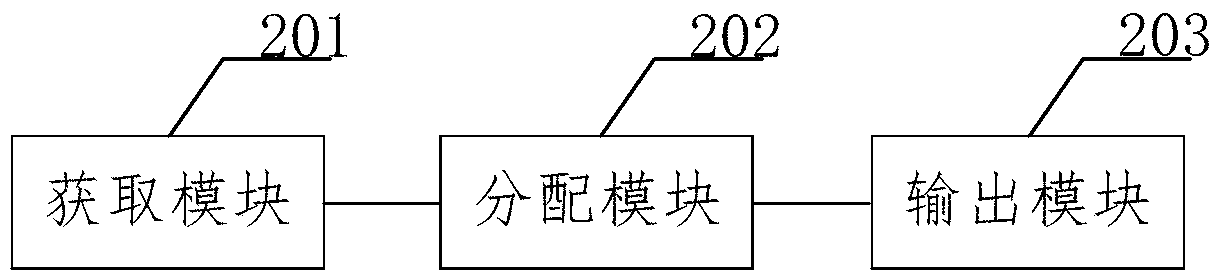

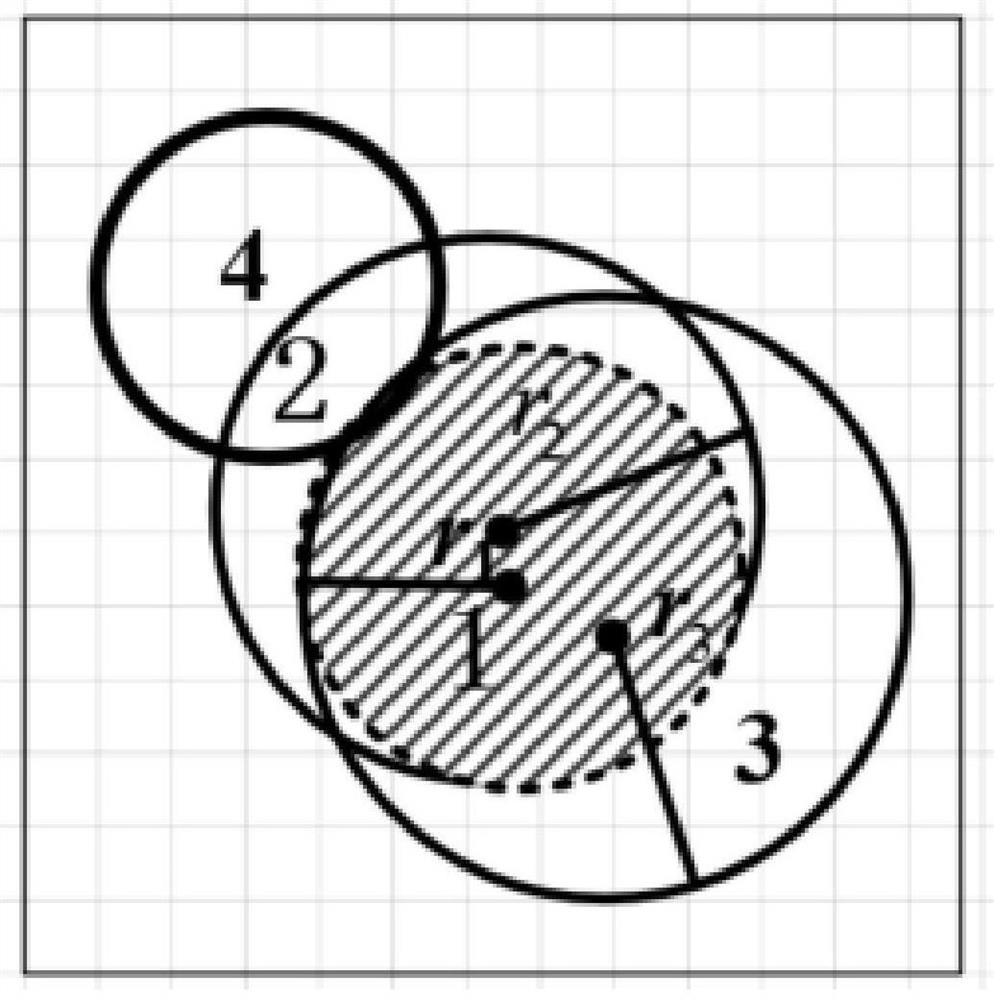

Location privacy protection method and device

The embodiments of the invention provide a location privacy protection method and device. The method comprises the following steps: from the sub-region set obtained by dividing the user regions, selecting the sub-regions that satisfy a preset rule to generate a perturbed location output set, and, according to the anonymity degree k, selecting the sub-regions whose prediction inference error value is greater than or equal to a prediction inference error threshold to generate an anonymous set; obtaining a weight for each sub-region in the perturbed location output set according to the perturbed location output set and the anonymous set; and selecting one sub-region from the perturbed location output set as the perturbation region according to the weight of each sub-region. Because the perturbation region is selected according to the weights, and the weights are calculated from the anonymous set, the attacker cannot calculate the probability that each sub-region is the user's real location and cannot predict that location, so multiple inference attacks are resisted.

Owner:INST OF INFORMATION ENG CAS

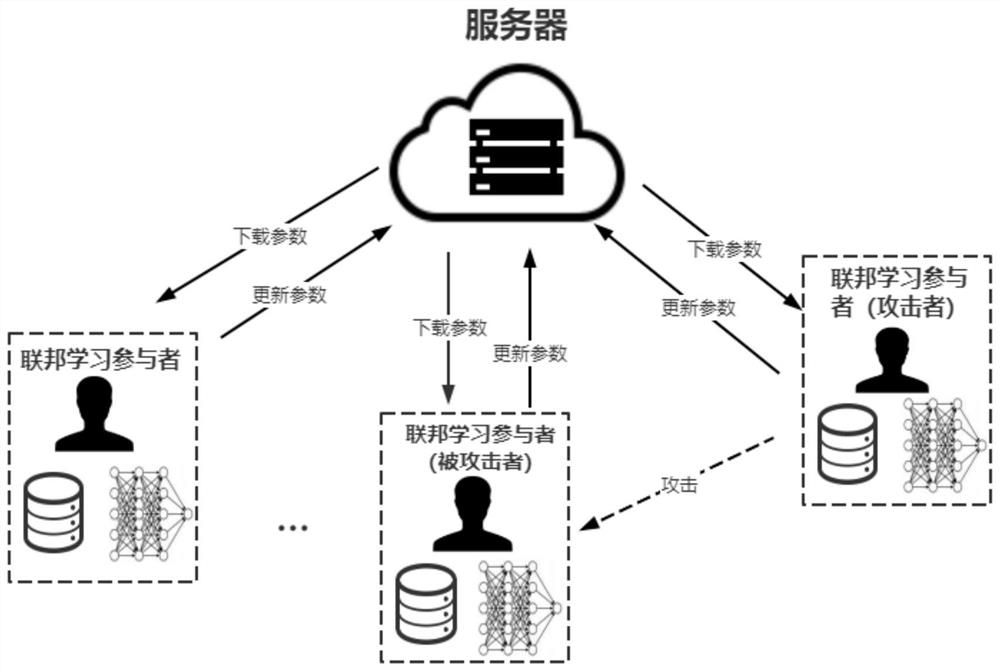

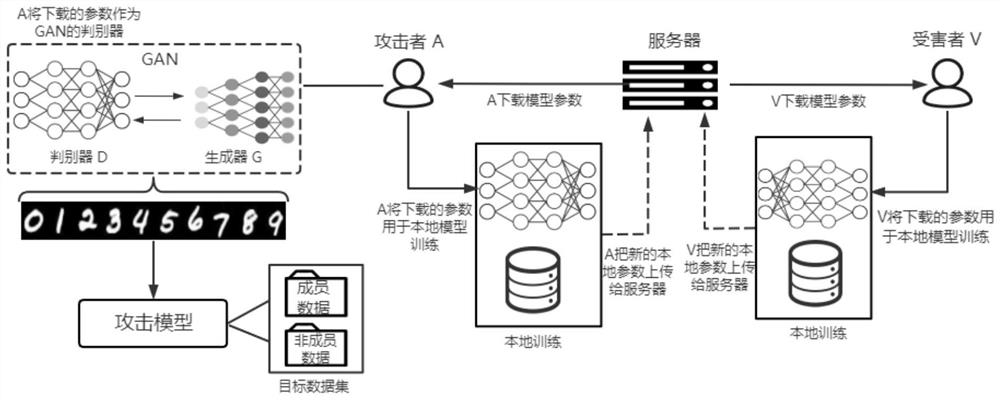

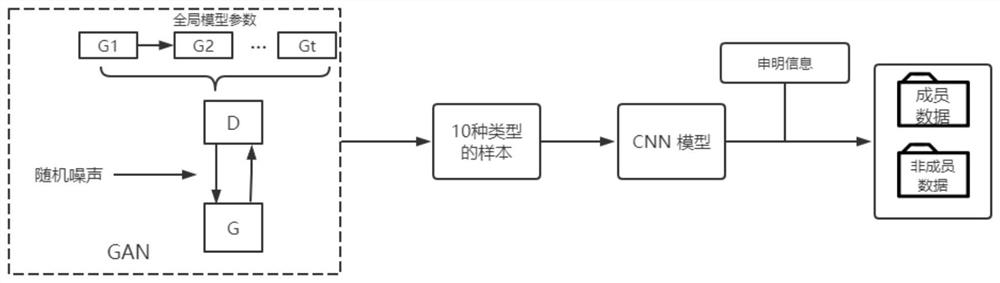

User-level member reasoning method based on generative adversarial network

The invention discloses a user-level membership inference scheme for federated learning environments. Generative adversarial networks (GANs) are used to acquire the data distribution in order to launch membership inference. In a federated learning environment, an attacker launches a membership inference attack without accessing user data, inferring whether a given data record belongs to a target training set in order to steal the data privacy of the target dataset. The method comprises: 1) user-level membership inference, in which a malicious user launches a membership inference attack to steal the membership privacy of a specific user, further revealing security vulnerabilities in current federated learning; and 2) data augmentation with a locally deployed generative adversarial network to obtain the data distribution of other users. The effectiveness of the proposed attack is also considered for the case where user data carries multiple labels.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

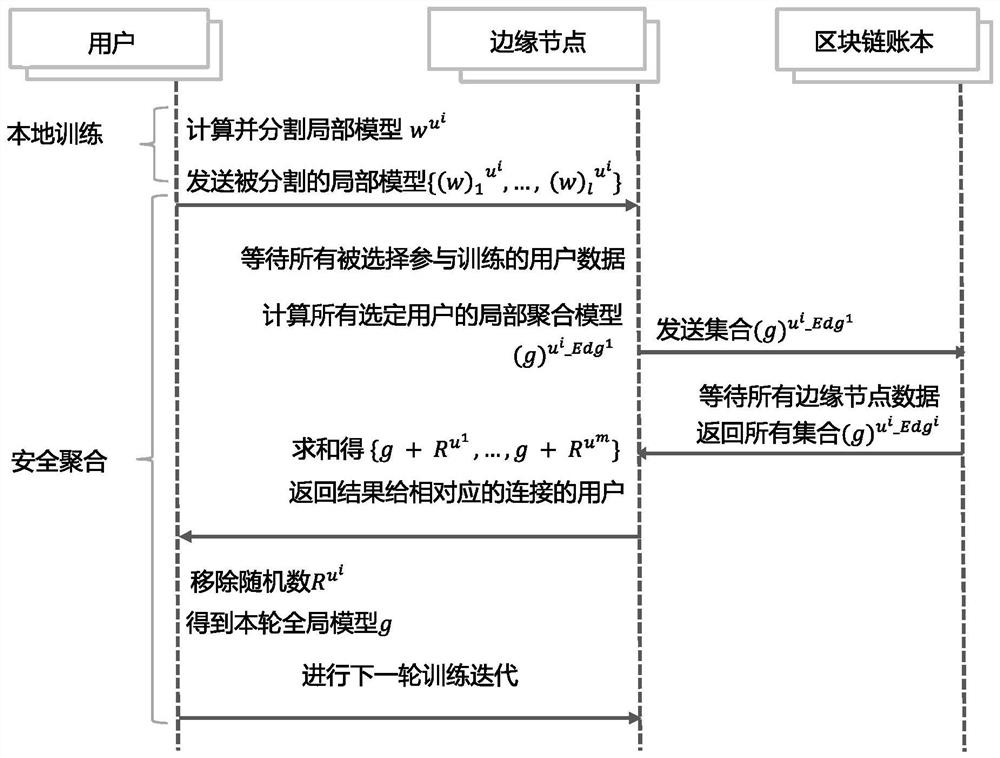

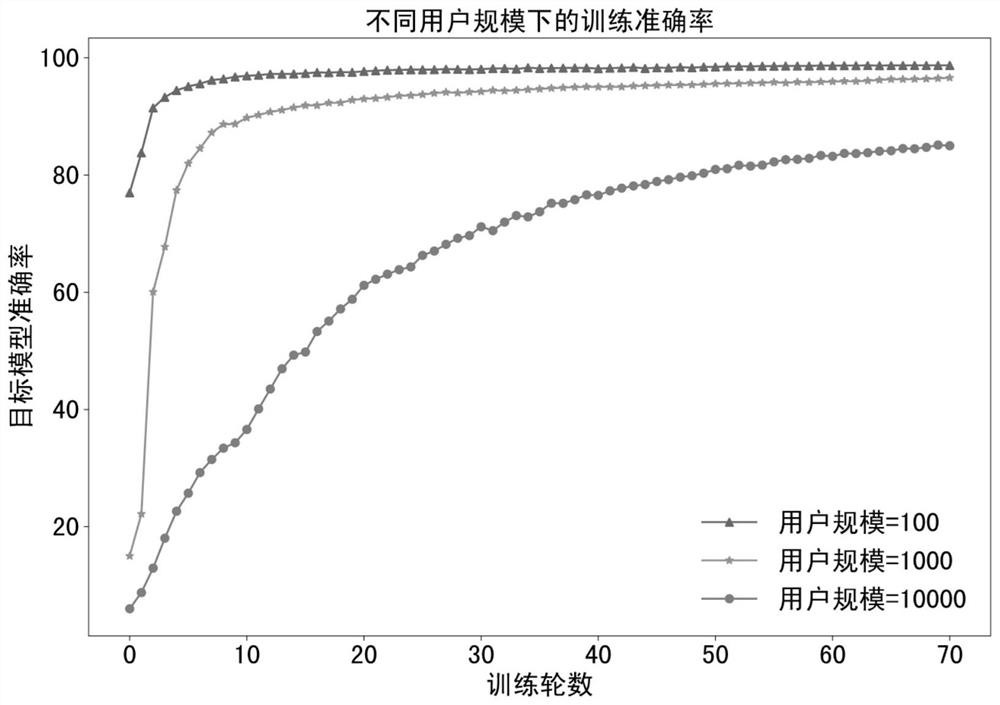

Lightweight federated learning privacy protection method based on decentralized security aggregation

Pending | CN113806768A | Without loss of accuracy | Avoid Privacy Leakage | Digital data protection | Machine learning | Data privacy protection | Attack

The invention relates to a lightweight federated learning privacy protection method based on decentralized secure aggregation, and belongs to the technical field of data privacy protection. A secure decentralized aggregation platform is built on the user side from edge nodes and a consortium blockchain, and the aggregation process is carried out collaboratively on that platform. Each user splits its local model and sends the pieces to its connected edge nodes. Each user also generates a global random number, splits it, and shares the pieces with its connected edge nodes. All edge nodes then perform secure decentralized aggregation: each user receives a global model perturbed by its own global random number, the edge nodes taking part in aggregation cannot learn the global model, and each user removes the added perturbation to obtain the original global model. The method achieves privacy protection without encryption operations, and outperforms the prior art in computational efficiency, model accuracy and protection against membership inference attacks.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
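The model-splitting step described in the abstract above resembles additive secret sharing, which can be sketched as follows. This is an illustrative simplification under assumptions: models are small integer vectors, `MODULUS` is a hypothetical field size, and the blockchain platform and the per-user random-number perturbation are omitted.

```python
import random

MODULUS = 2**31 - 1  # hypothetical field size for the toy integer encoding


def split_into_shares(vector, n_shares, rng):
    """Split an integer vector into n additive shares that sum to it mod MODULUS."""
    shares = [[rng.randrange(MODULUS) for _ in vector] for _ in range(n_shares - 1)]
    last = [(v - sum(s[i] for s in shares)) % MODULUS for i, v in enumerate(vector)]
    return shares + [last]


def aggregate(user_models, n_edges, seed=0):
    """Each user sends one share to each edge node; edges sum what they receive."""
    rng = random.Random(seed)
    dim = len(user_models[0])
    edge_sums = [[0] * dim for _ in range(n_edges)]
    for model in user_models:
        for edge, share in enumerate(split_into_shares(model, n_edges, rng)):
            edge_sums[edge] = [(a + b) % MODULUS for a, b in zip(edge_sums[edge], share)]
    # Combining the per-edge partial sums reveals only the sum of all models.
    return [sum(column) % MODULUS for column in zip(*edge_sums)]


print(aggregate([[1, 2, 3], [10, 20, 30], [100, 200, 300]], n_edges=3))
```

Each edge node sees only uniformly random-looking shares, so no single node learns any user's model; only the combined partial sums carry meaning.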

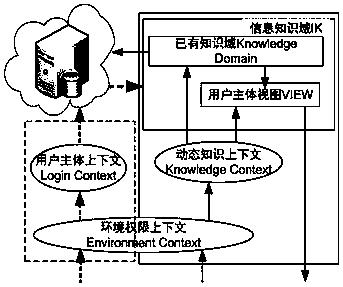

Data privacy protection method based on dynamic context

Active | CN109409102A | Improve security | Improve data utilization efficiency | Digital data protection | Personalization | Data privacy protection

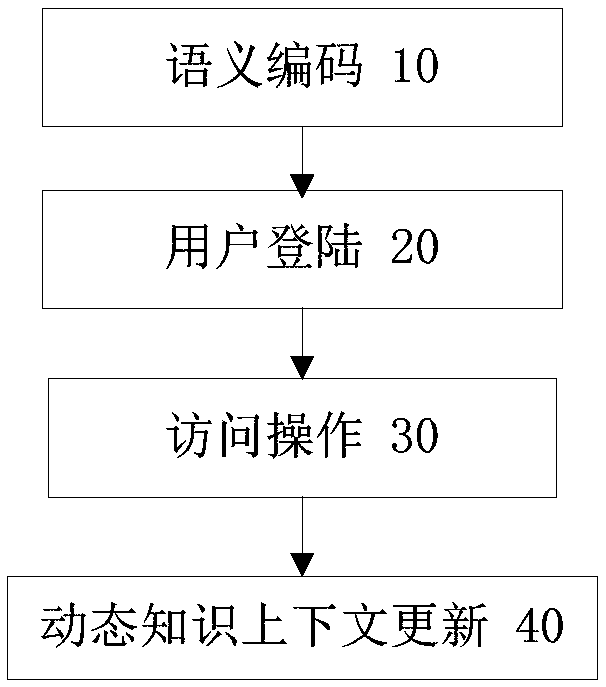

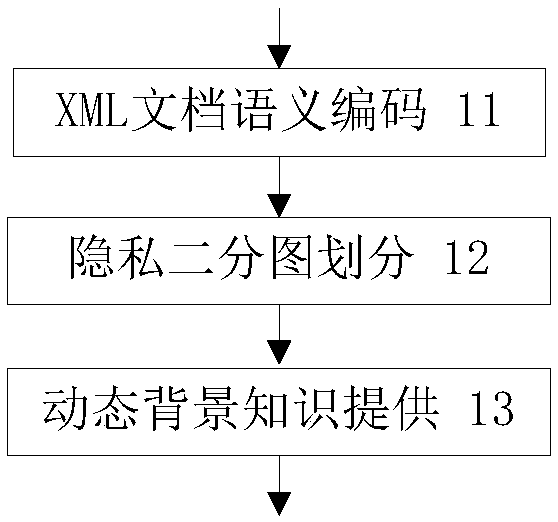

The invention discloses a data privacy protection method based on dynamic context, comprising the following steps: (10) semantic coding: storing and encoding the source data objects using an XML document semantic coding scheme, and providing the user's personal bipartite privacy graph and dynamic knowledge context according to the coding scheme; (20) user login: obtaining the user's visible range and the dynamic knowledge context; (30) access operation: taking the query as the basic operation, locating the path within the visible range, and determining the result of the access operation by matching against the bipartite privacy graph; (40) dynamic knowledge context updating: updating the dynamic knowledge context according to the accumulation of the user's query operations and of the prior knowledge acquired. The method offers high information security, high data utilization efficiency and good real-time resistance to inference attacks.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

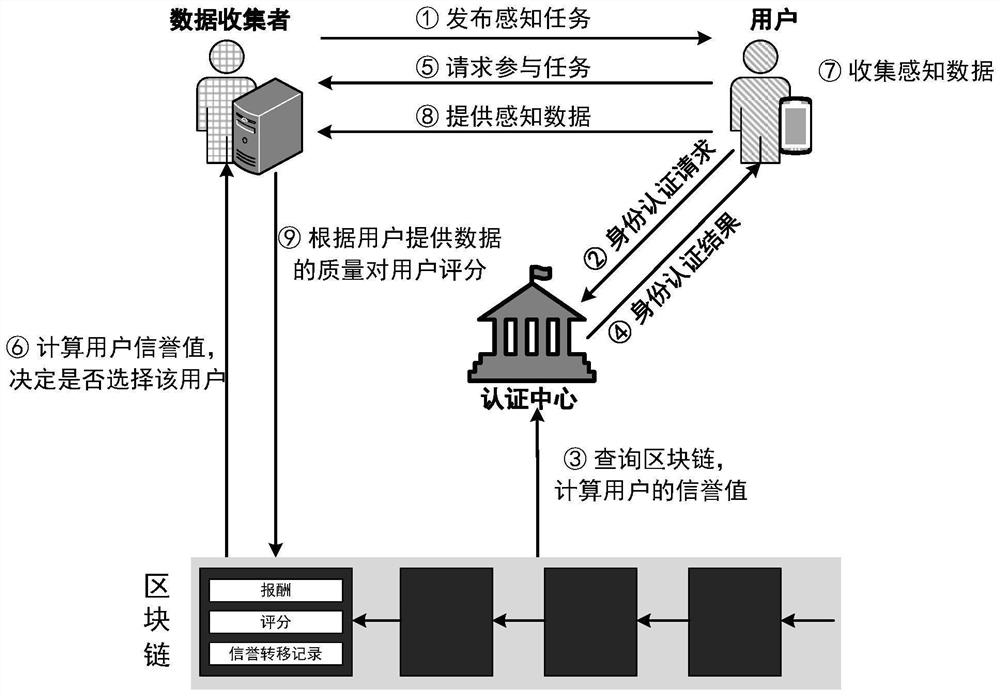

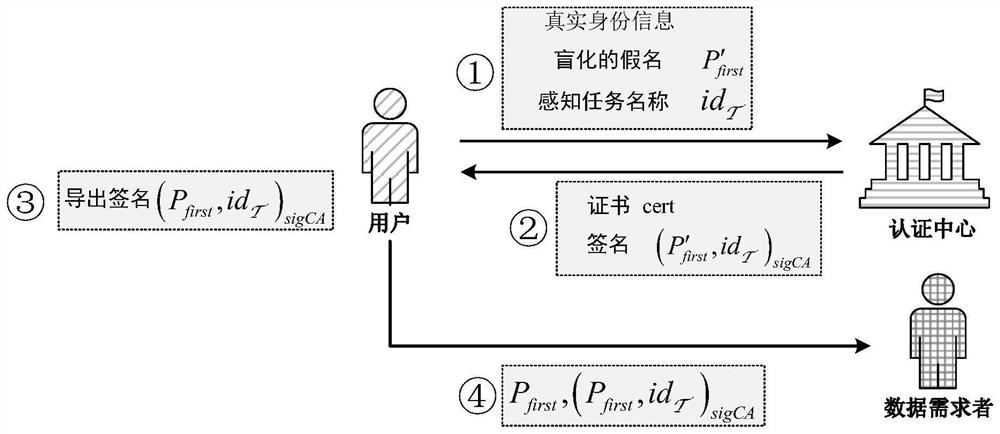

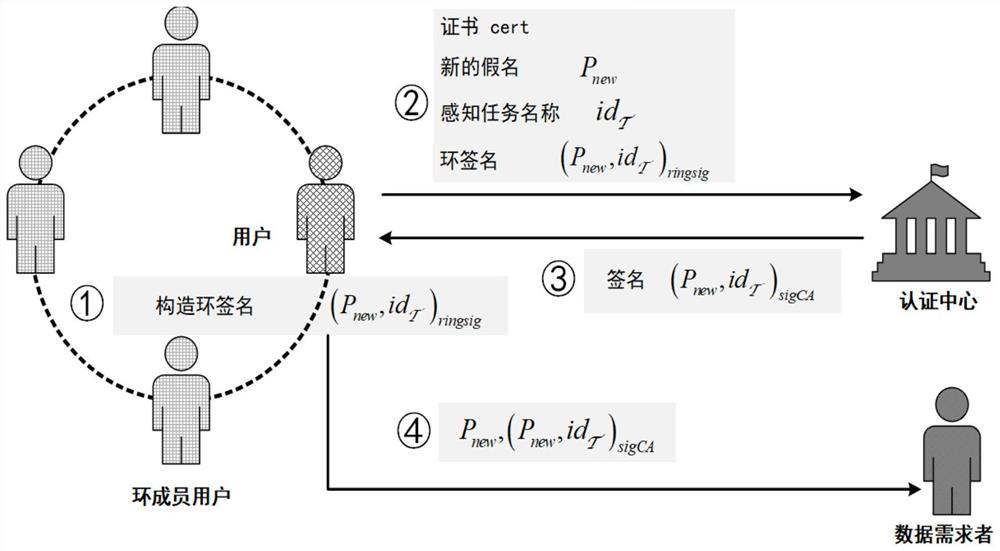

Decentralized privacy preserving reputation evaluation method for crowd sensing

Pending | CN114386043A | Guaranteed privacy | Accurate assessment | Digital data protection | Platform integrity maintenance | Ring signature | Attack

The invention relates to a decentralized privacy-preserving reputation evaluation method for crowd sensing, and belongs to the technical field of network data processing. For a blockchain-based crowd sensing scenario, the method uses blind signature and ring signature techniques to build a reputation evaluation mechanism that preserves anonymity and prevents anyone from arbitrarily modifying a reputation value. The real identity of a user taking part in a crowd sensing task is hidden by the anonymity of blockchain accounts; the data demander who issues the sensing task scores the user according to the quality of the data provided, and publishes the score on the blockchain as a transaction. A user who takes part in sensing tasks multiple times may create several accounts, and reputation values are transferred secretly between those accounts using techniques such as ring signatures, which resists inference attacks from the data demander. The method hides the link between a user account and the user's real identity, and resists whitewashing attacks by users.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

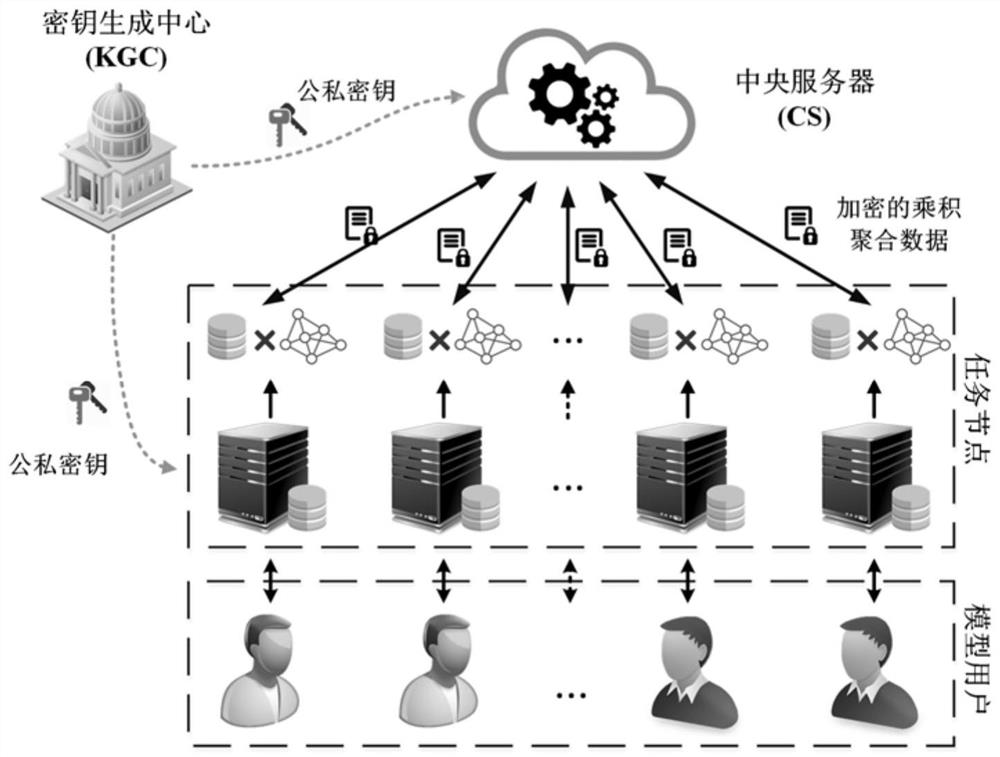

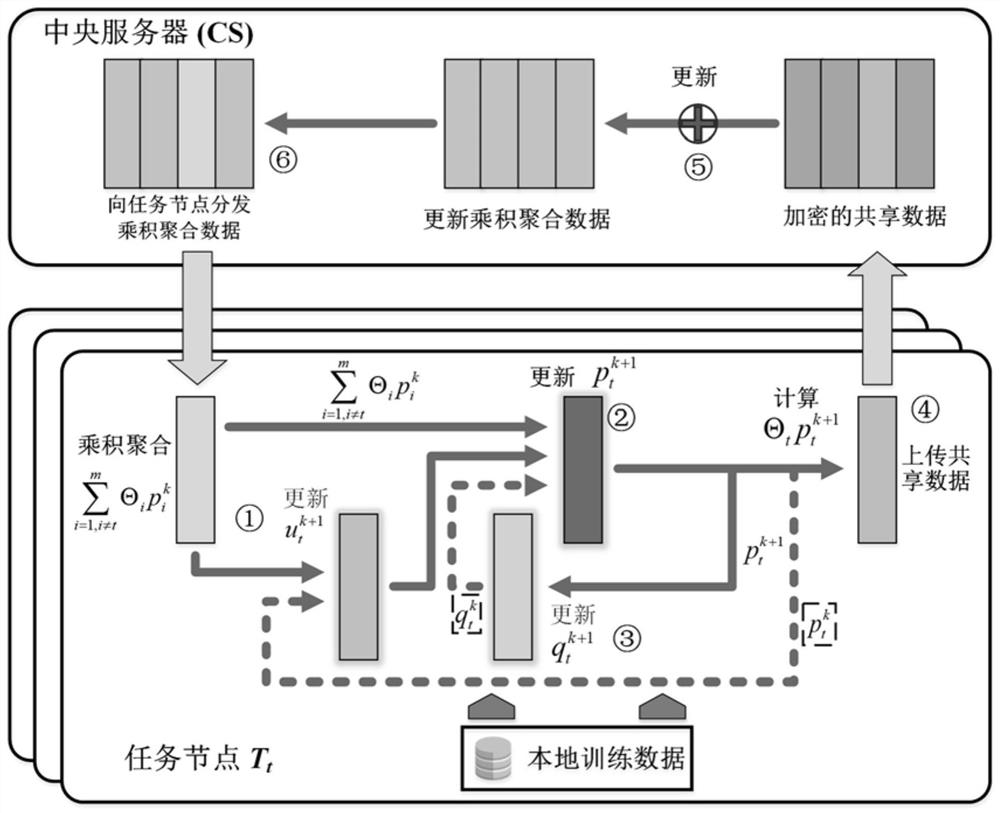

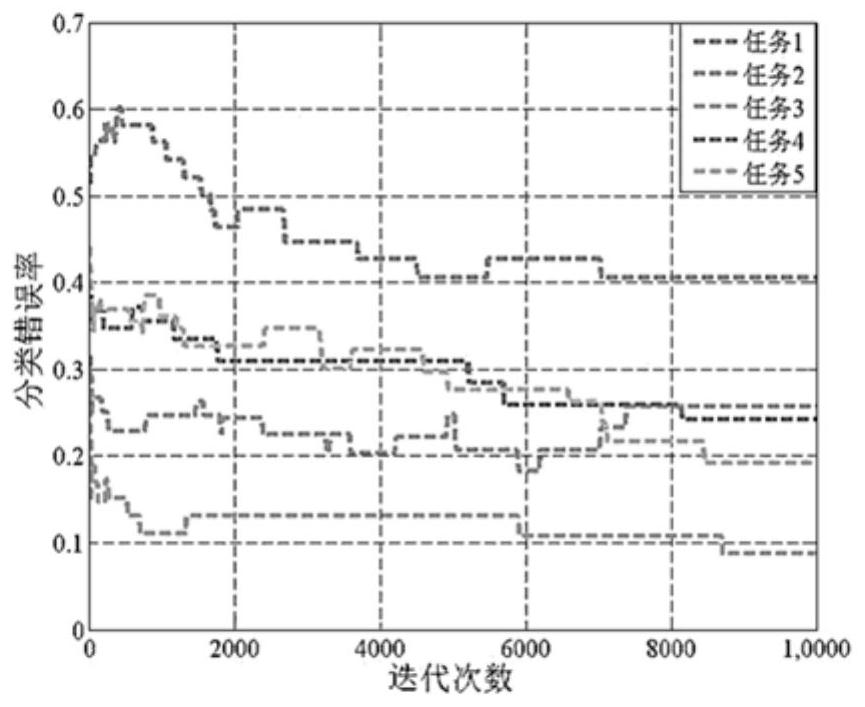

Inference attack resistant distributed multi-task learning privacy protection method and system

Active | CN112118099A | Address safety training issues | Ensure safety training | Key distribution for secure communication | Design optimisation/simulation | Key (cryptography) | Privacy protection

In this inference attack resistant distributed multi-task learning privacy protection method and system, task nodes train models on their local data, and joint model training is realized through knowledge sharing. A privacy-preserving model training mechanism based on homomorphic cryptography is provided, so that task nodes can train a multi-task learning model while the privacy of the training data is preserved; the training efficiency is independent of the sample data volume, which improves the efficiency of machine learning model training. A model publishing method based on differential privacy is designed to resist identity inference attacks launched when a model user accesses the machine learning model. The system comprises a key generation center, a central server, task nodes and model users. The method ensures the data privacy of the task nodes both during model training and after the model is released, and promotes large-scale application of multi-task machine learning.

Owner:XIDIAN UNIV +1

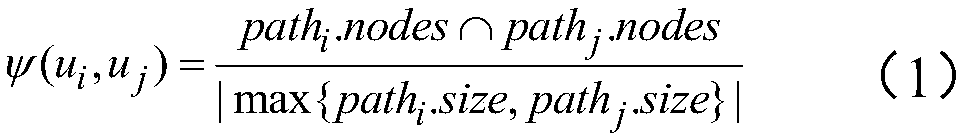

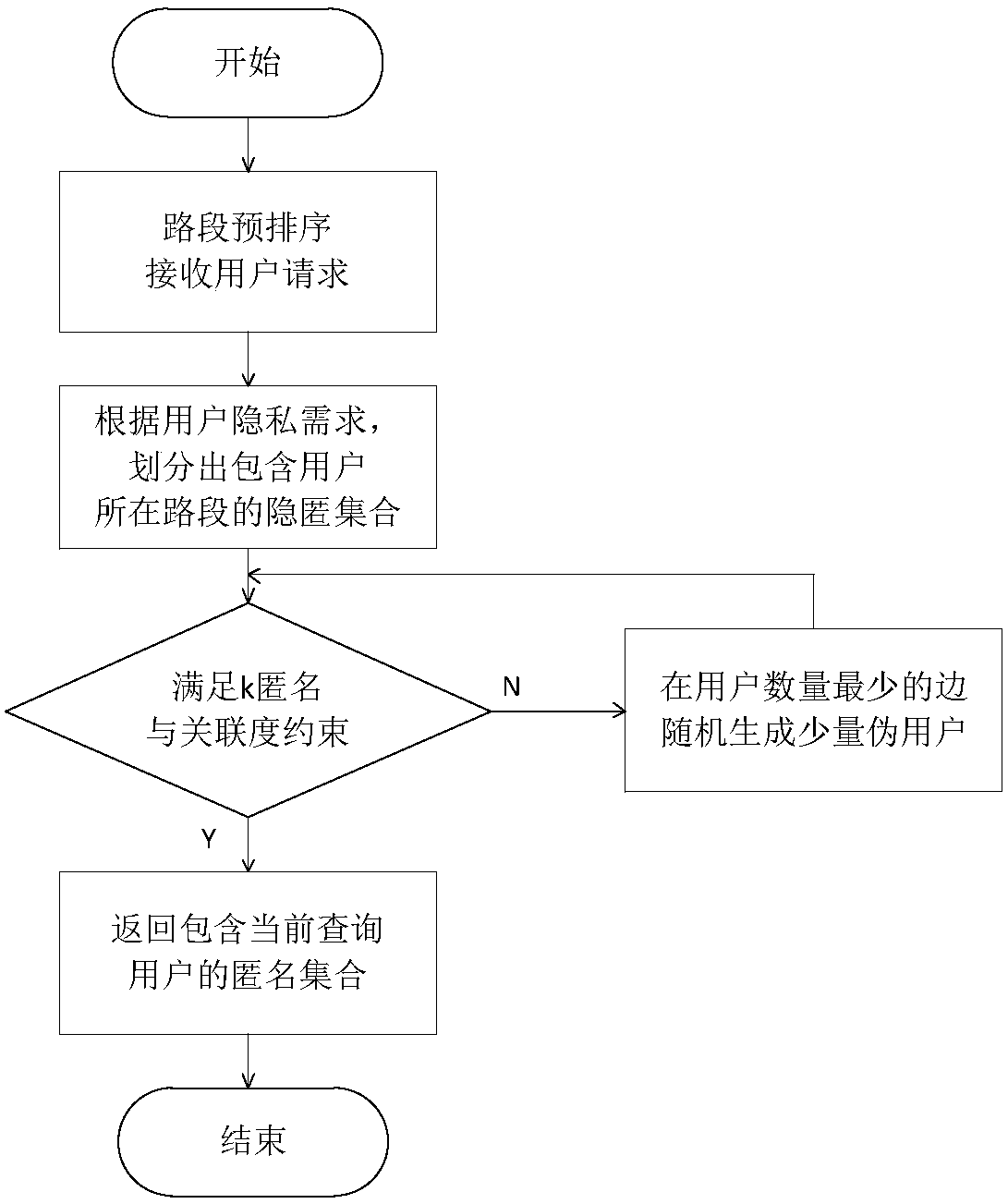

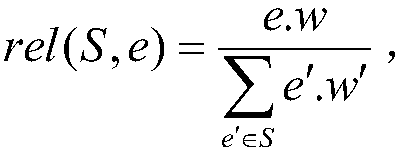

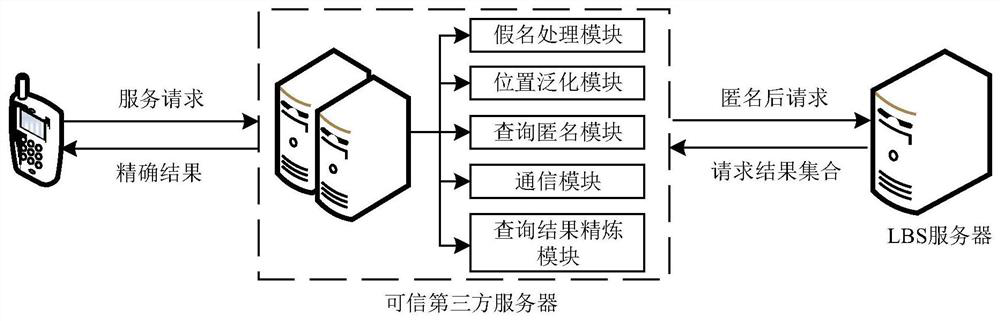

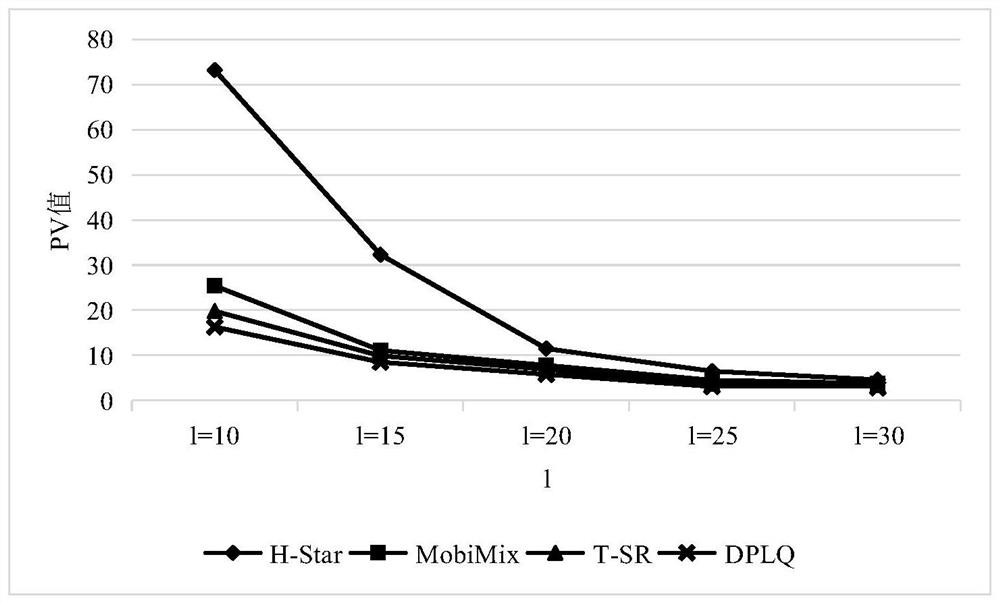

Location anonymous method for resisting replay attack in road network environment

Active | CN108040321A | Reduce query cost | Quick service response | Location information based service | Security arrangement | Inference attack | Road networks

The invention discloses a location anonymization method for resisting replay attacks in a road network environment. The method comprises the following steps: (1) road section pre-processing: the road sections are pre-sorted once using breadth-first sorting, which reduces the location query cost; and (2) anonymous set construction: an equivalent partition is obtained from the sorted road sections, yielding an anonymous, equivalent road section cloaking set; the road sections in the cloaking set are then equalized by adding dummy users until the edge weight association degree satisfies a preset threshold, so that the privacy threat from edge weight inference attacks is prevented while replay attacks are resisted. The method resists both the replay attacks and the edge weight inference attacks common in road network environments while still generating the cloaking set effectively, and it has a low query cost and a fast service response.

Owner:HOHAI UNIV

LBS service privacy protection method based on differential privacy

Active | CN111797433A | Protect location privacy | Avoid questions that are not entirely believable | Digital data protection | Geographical information databases | Computer network | Data set

The invention discloses an LBS service privacy protection method based on differential privacy. In a DP-(k1, l)-means algorithm, a query k-anonymity set that satisfies differential privacy is constructed from background knowledge about the cluster and the area in which the user is located, and the anonymity set is sent to the LSP. This avoids leaking the user's real query request, protects the user's query privacy, and guards against inference attacks and spatio-temporal correlation attacks. In a DP-k2-anonymy algorithm, query requests sent within the same time period t are selected according to their time characteristics to construct a query k-anonymity set, and the dataset is processed with an index mechanism, which keeps the query requests temporally plausible and protects user privacy from leakage.

Owner:LIAONING UNIVERSITY OF TECHNOLOGY

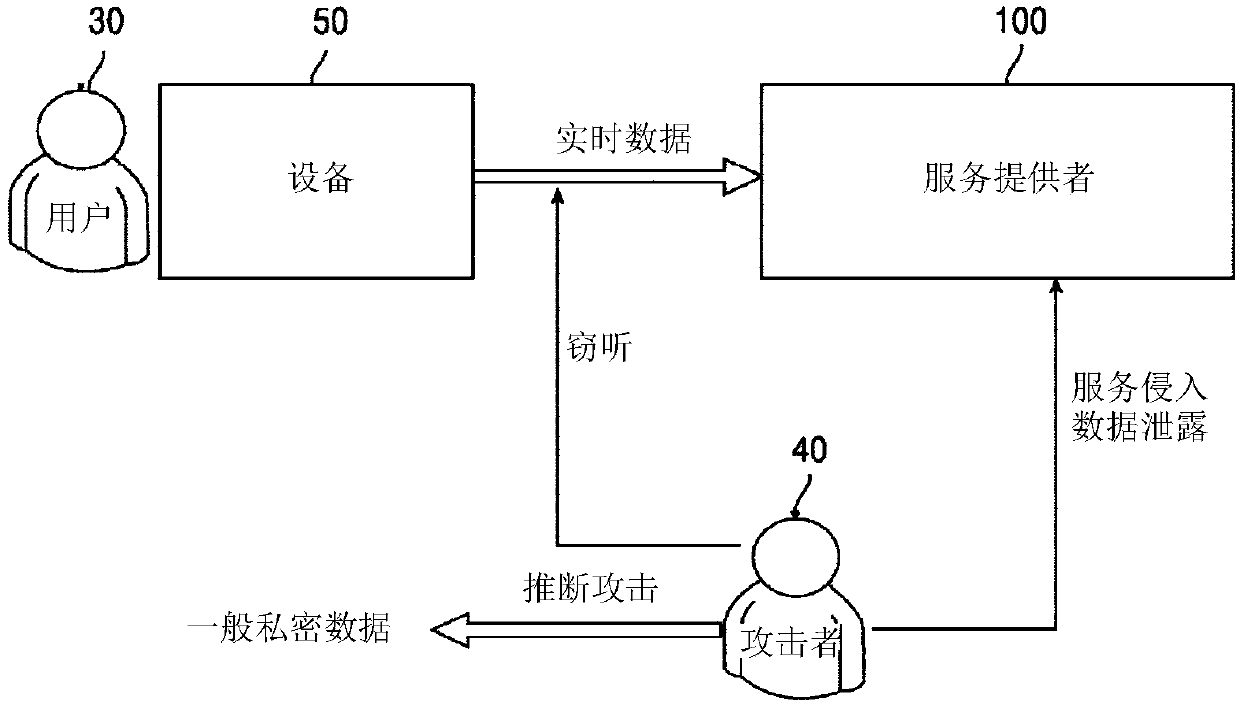

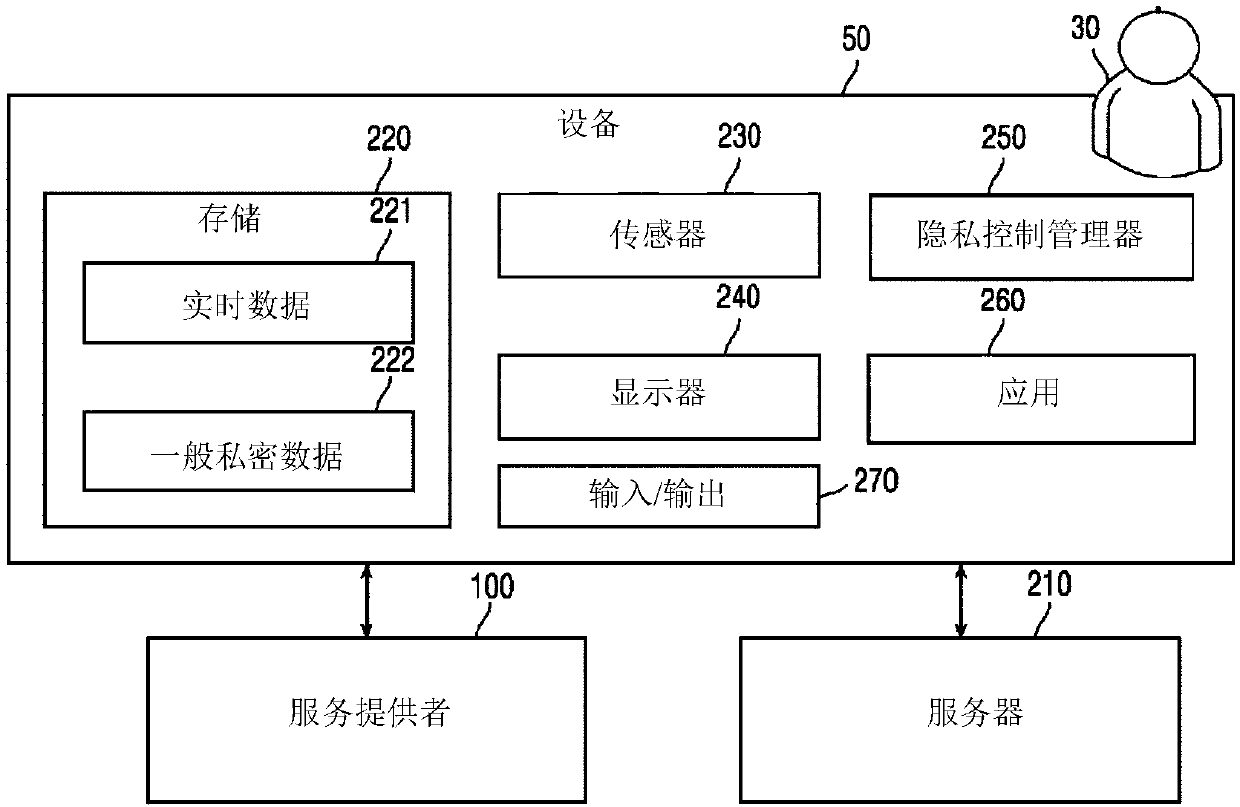

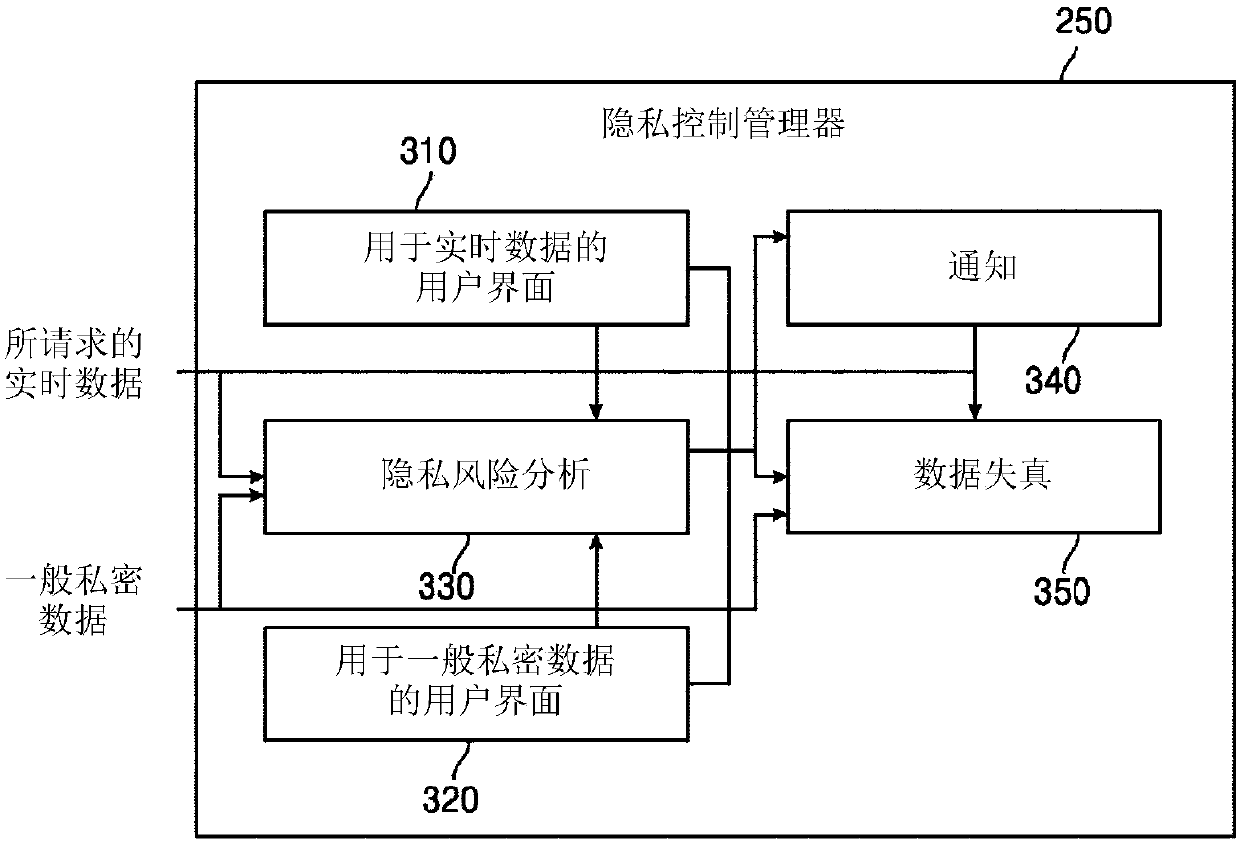

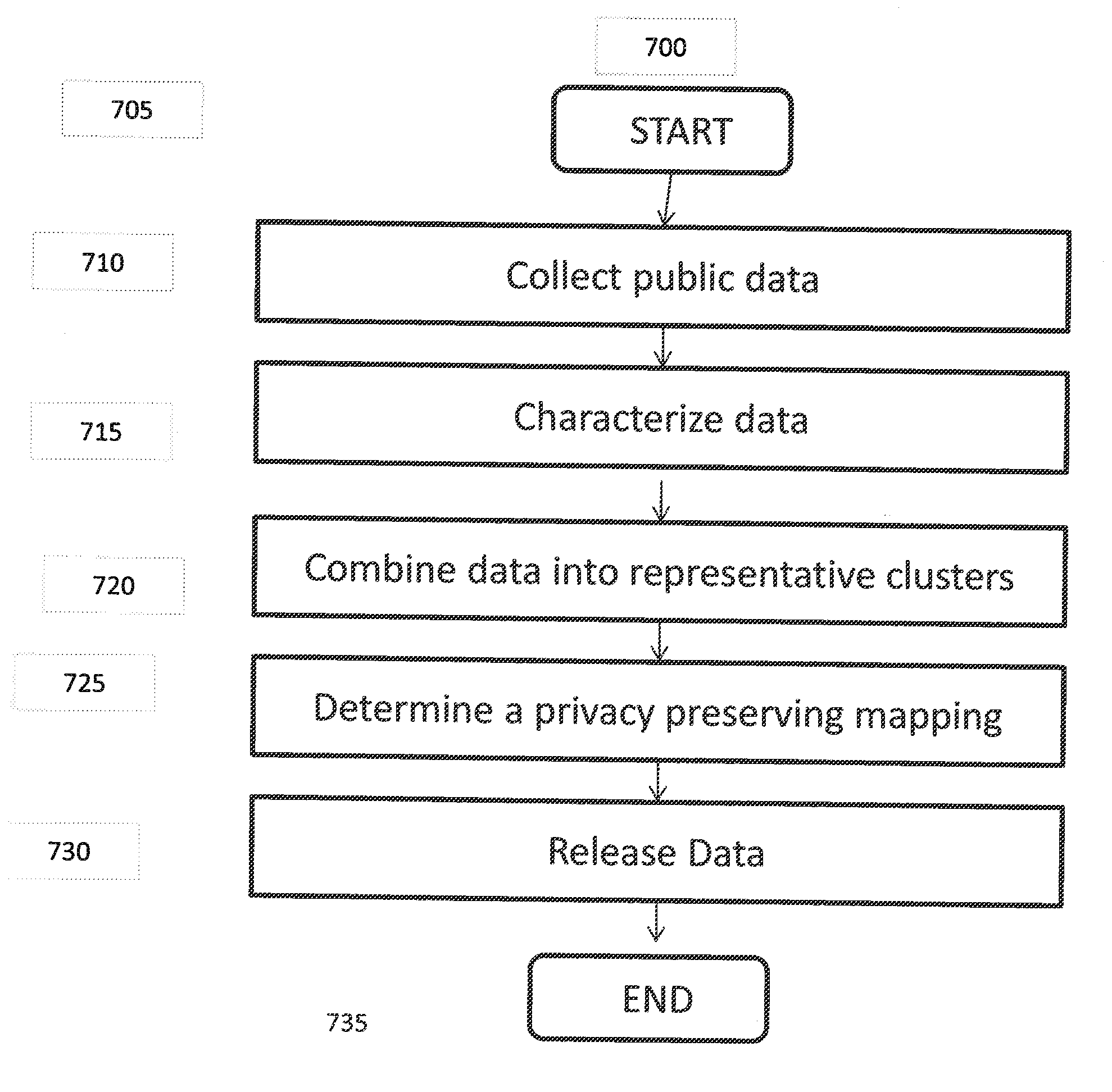

System and method to enable privacy-preserving real time services against inference attacks

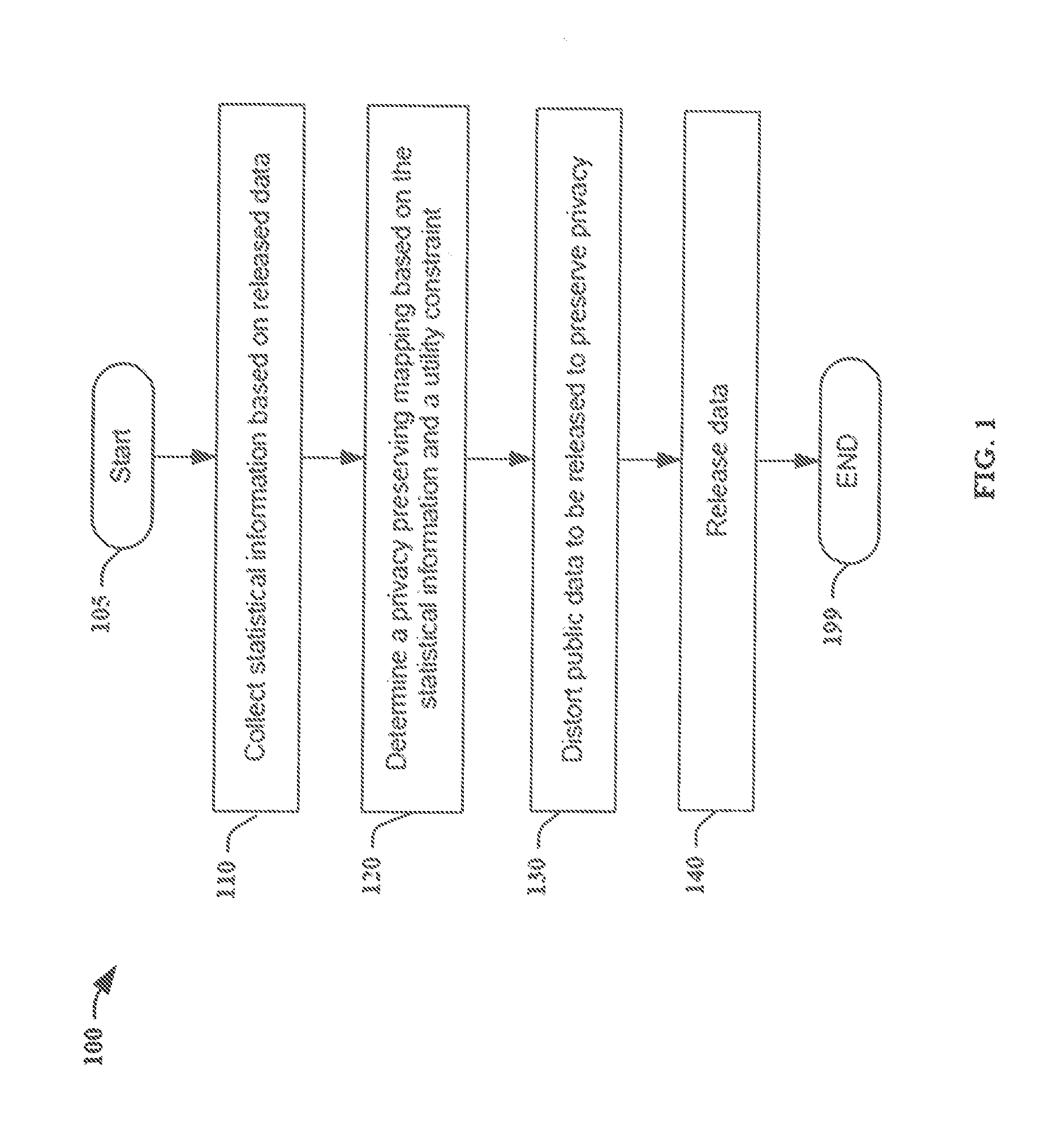

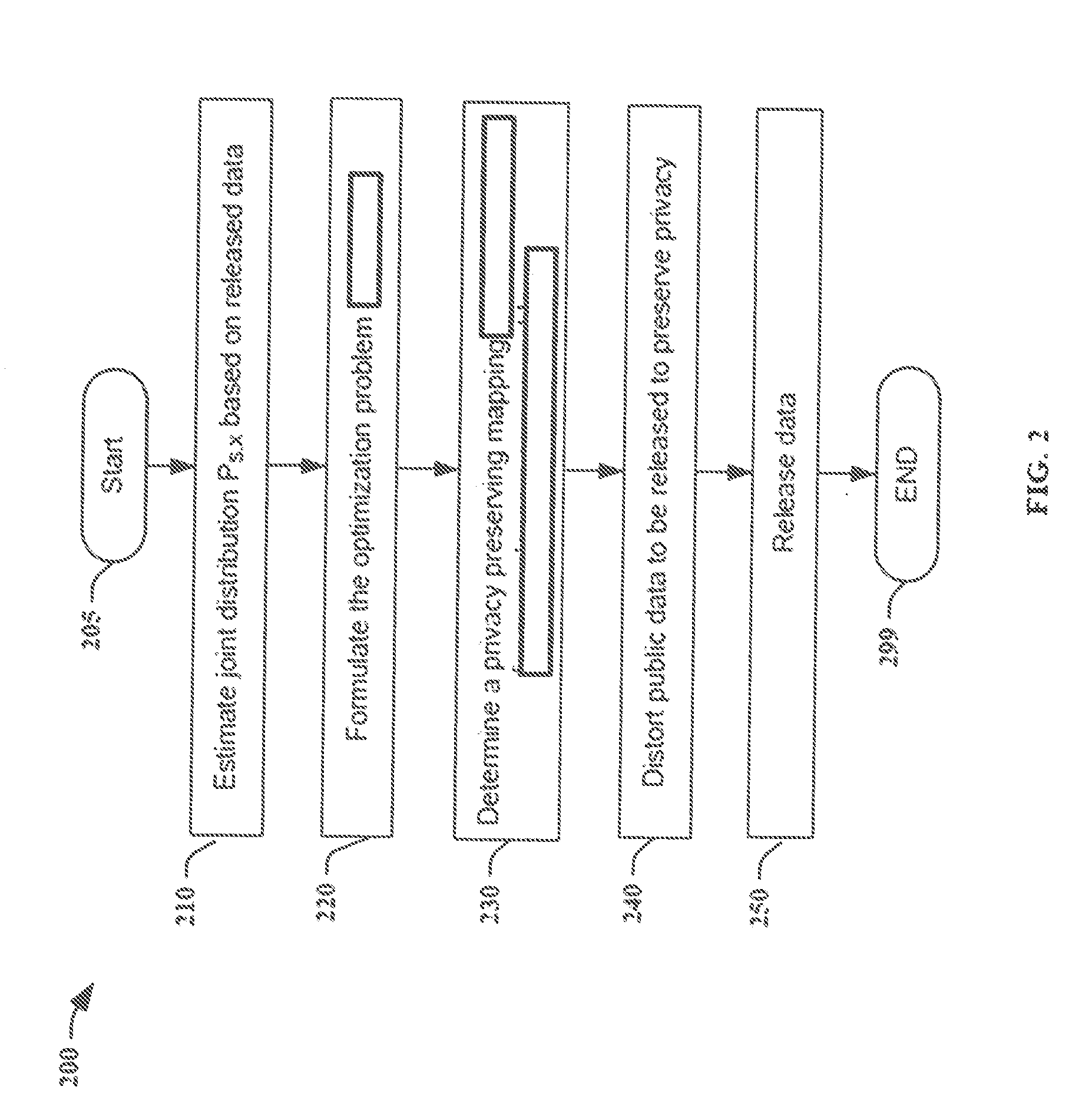

One embodiment provides a method comprising receiving general private data identifying at least one type of privacy-sensitive data to protect, collecting at least one type of real-time data, and determining an inference privacy risk level associated with transmitting the at least one type of real-time data to a second device. The inference privacy risk level indicates a degree of risk of inferring the general private data from transmitting the at least one type of real-time data. The method further comprises distorting at least a portion of the at least one type of real-time data based on the inference privacy risk level before transmitting the at least one type of real-time data to the second device.

Owner:SAMSUNG ELECTRONICS CO LTD

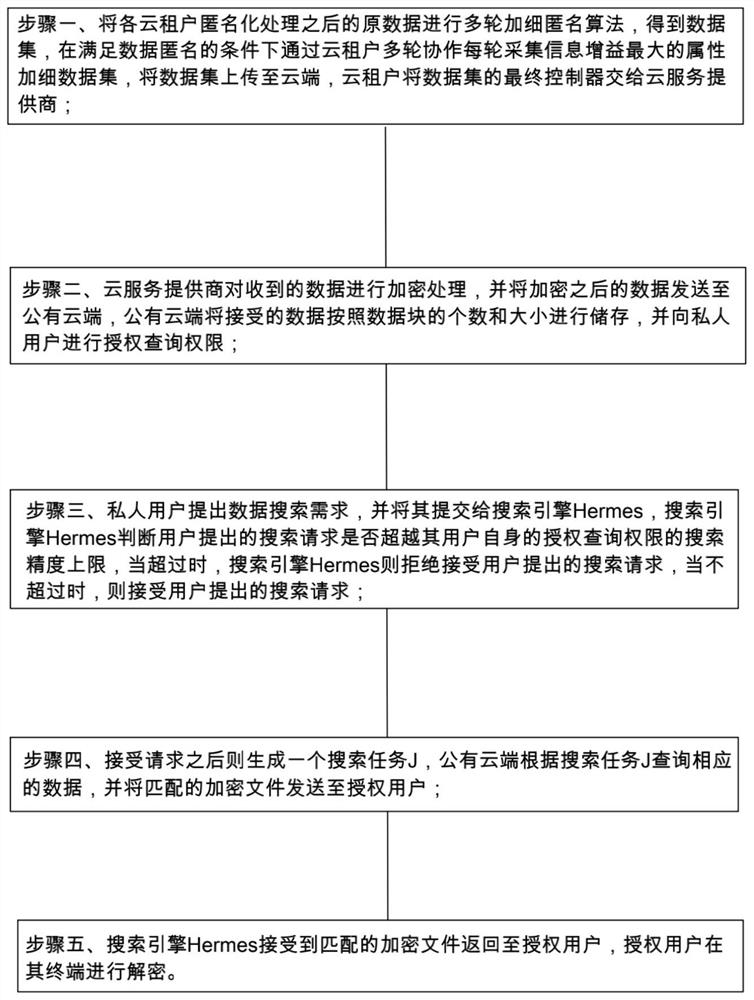

Data privacy protection and security search method in cloud environment

Pending | CN114297714A | Prevent leakage | Prevent theft | Digital data protection | Web data querying | Data privacy protection | Original data

The invention relates to the technical field of data security, and in particular to a data privacy protection and secure search method in a cloud environment. The method comprises applying multiple rounds of fine-grained anonymization to the original data and having the cloud service provider encrypt the processed data a second time for storage, which prevents the original data from leaking and improves data security. An authorized-user access system is also adopted to prevent the data from being stolen. Operations such as association and aggregation over the attributes of the original source data may create latent privacy leakage risks from data fusion, and most traditional data privacy protection schemes target single-source, static data and cannot resist inference attacks during the publishing and searching of multi-source, dynamically fused data. The disclosed method therefore makes data storage and access safer and more reliable.

Owner:INST OF ELECTRONICS & INFORMATION ENG OF UESTC IN GUANGDONG

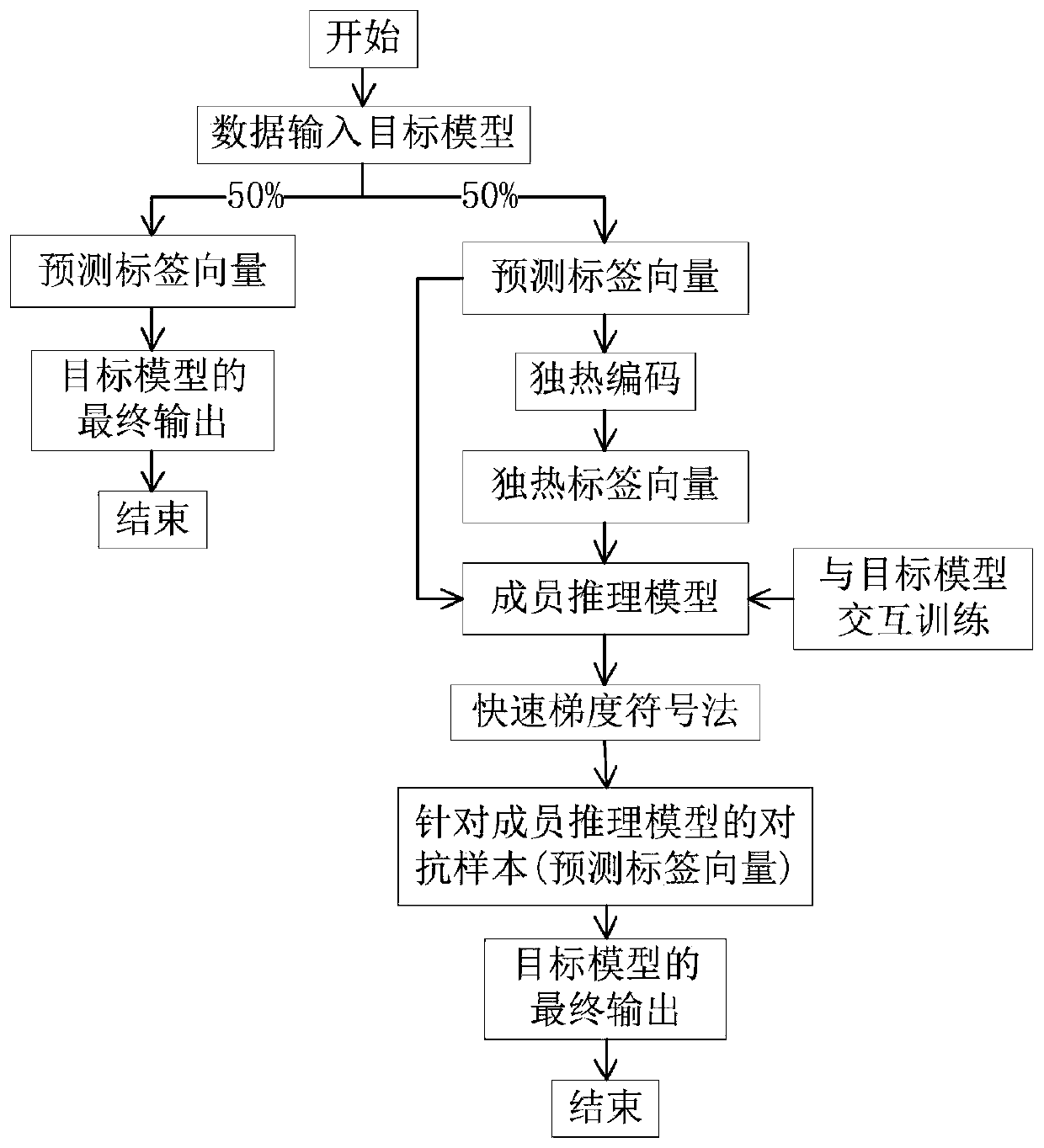

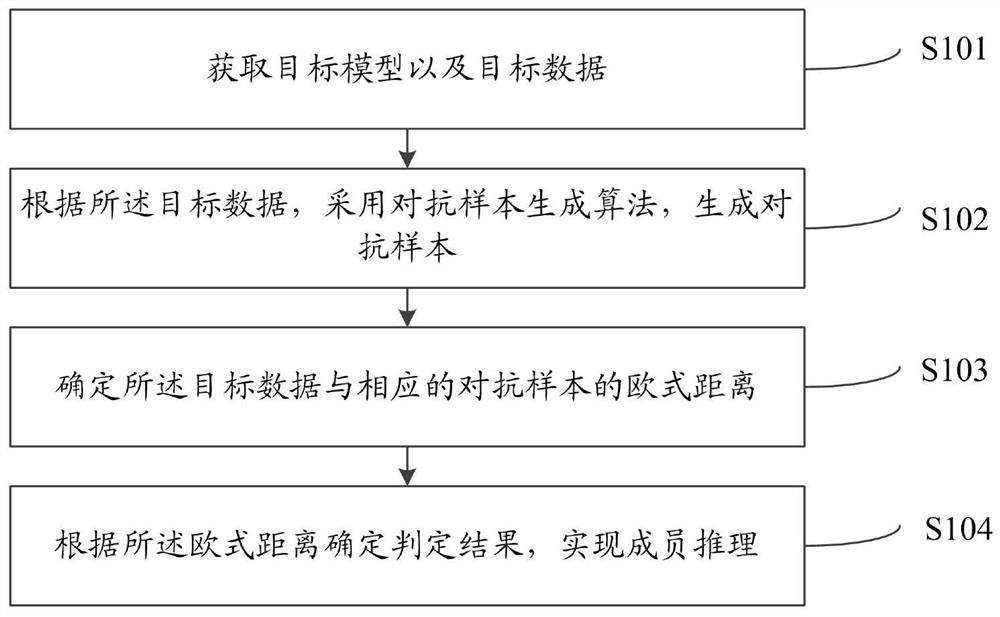

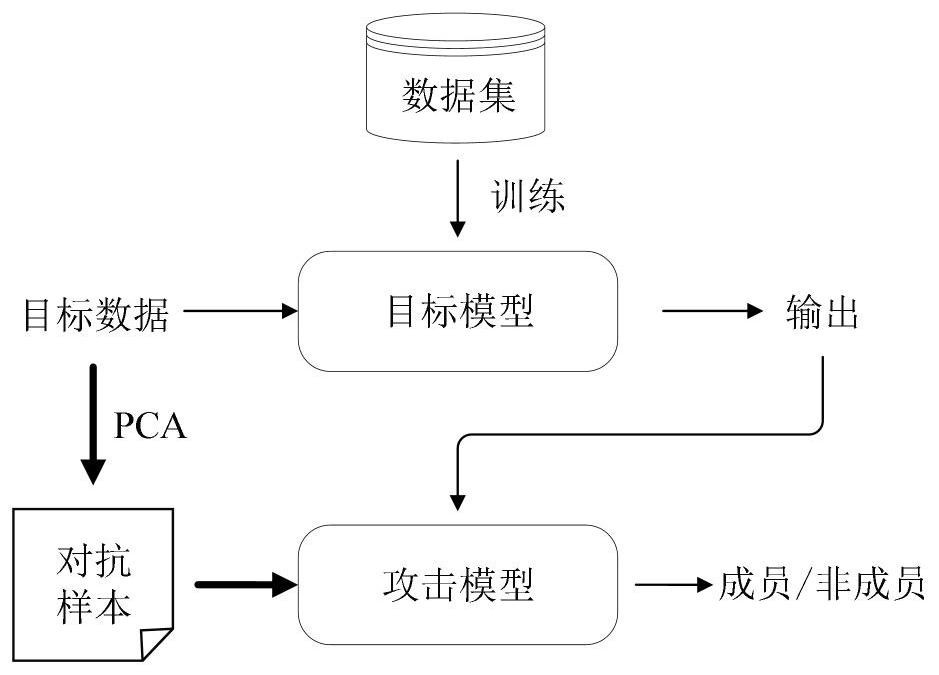

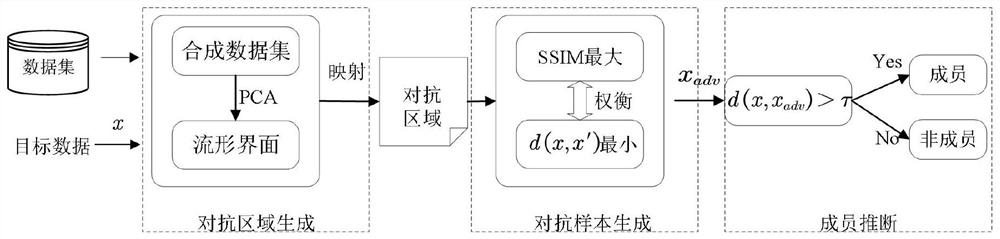

Machine learning model-oriented member reasoning privacy attack method and system

Pending | CN113988312A | Ensure attack robustness | Ensure Attack Accuracy | Character and pattern recognition | Platform integrity maintenance | Data set | Engineering

The invention relates to a membership inference privacy attack method and system for machine learning models. The method comprises: obtaining a target model and target data; generating an adversarial sample from the target data with an adversarial sample generation algorithm, which is either a combination of an adaptive greedy algorithm and a binary search algorithm or an embedded mapping algorithm on a manifold interface by means of a principal component technique; determining the Euclidean distance between the target data and the corresponding adversarial sample; and determining a judgment result from the Euclidean distance, thereby realizing membership inference, where the judgment result is that the target data belongs to either the training dataset or the test dataset. The method addresses the high access cost, weak transferability and poor robustness of black-box membership inference attacks.

Owner:GUIZHOU UNIV
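The distance-based decision in the abstract above can be sketched with a label-only toy model. This is not the patented algorithm: the plain binary search along a line segment, the `toy_predict` classifier, the reference point `other`, and the `threshold` value are all assumptions for illustration, as is the premise that training members tend to sit farther from the decision boundary.

```python
import math


def toy_predict(point):
    """Hypothetical black-box model that returns only a hard label."""
    return int(point[0] + point[1] > 1.0)


def boundary_distance(predict, x, other, steps=40):
    """Binary-search the segment x -> other for the point where the label flips."""
    label = predict(x)
    if predict(other) == label:
        raise ValueError("`other` must receive a different label than `x`")
    lo, hi = 0.0, 1.0  # fractions along the segment; lo keeps x's label, hi does not
    for _ in range(steps):
        mid = (lo + hi) / 2.0
        point = [a + mid * (b - a) for a, b in zip(x, other)]
        if predict(point) == label:
            lo = mid
        else:
            hi = mid
    adversarial = [a + hi * (b - a) for a, b in zip(x, other)]
    return math.dist(x, adversarial)  # Euclidean distance to the adversarial sample


def is_member(predict, x, other, threshold):
    """Guess 'training member' when the adversarial sample is far from x."""
    return boundary_distance(predict, x, other) > threshold


print(boundary_distance(toy_predict, [0.0, 0.0], [2.0, 2.0]))
```

In practice the threshold would have to be calibrated, for example on data whose membership status is known; the sketch leaves that step out.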

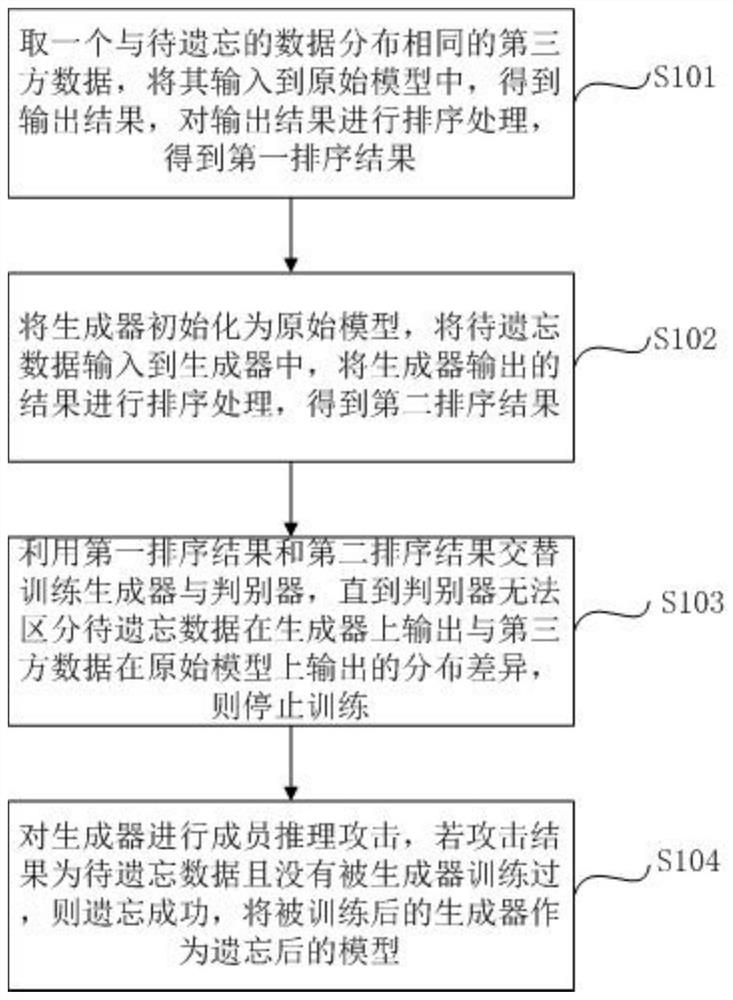

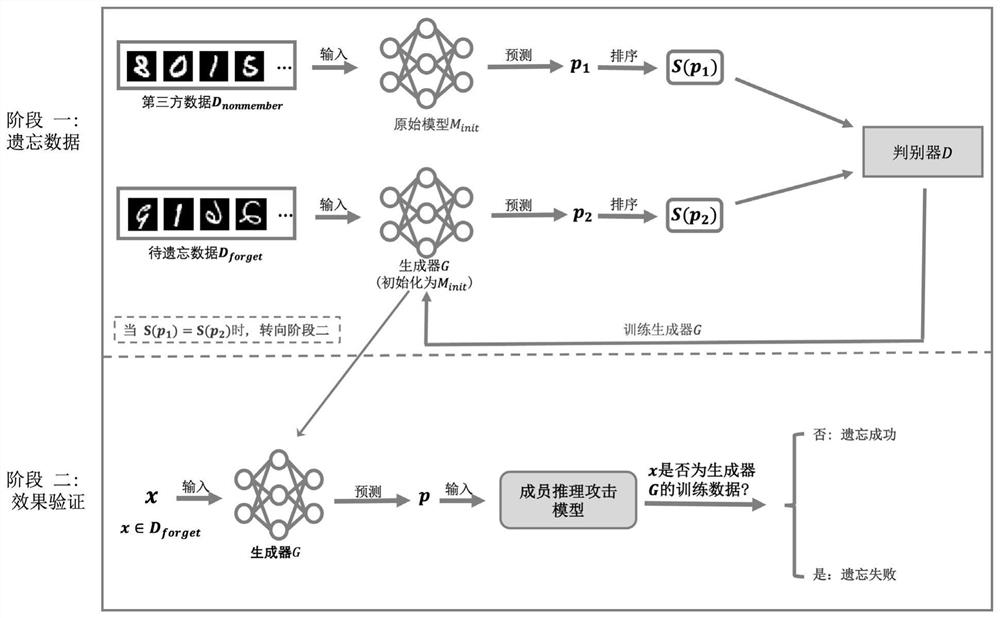

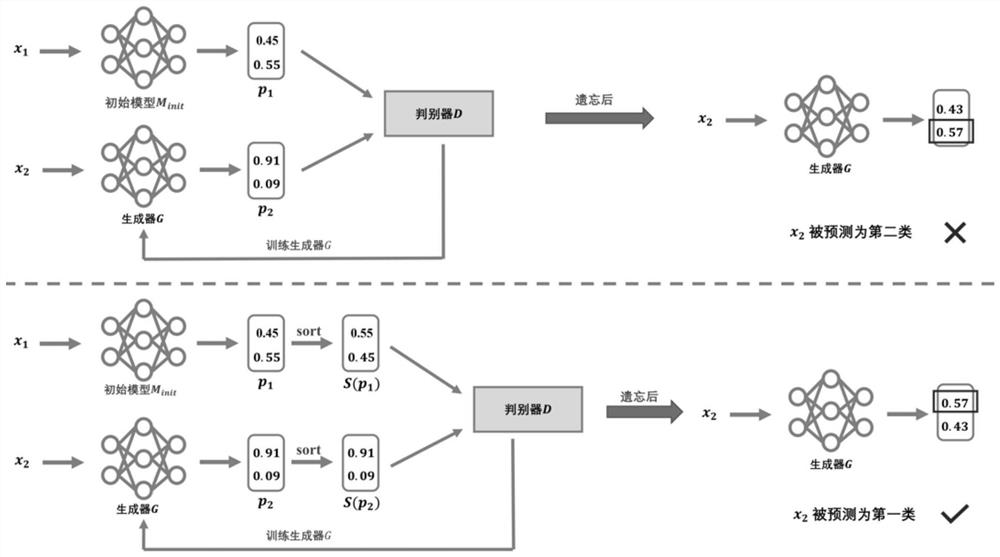

Rapid model forgetting method and system based on generative adversarial network

Pending | CN114580530A | Effective forgetting | Save storage space | Character and pattern recognition | Machine learning | Third party | Inference attack

The invention discloses a rapid model forgetting method and system based on a generative adversarial network. The method comprises: inputting third-party data with the same distribution as the to-be-forgotten data into the original model and sorting the model's outputs to obtain a first sorting result; initializing a generator as the original model, inputting the to-be-forgotten data into the generator, and sorting the generator's outputs to obtain a second sorting result; and alternately training the generator and a discriminator on the first and second sorting results, stopping when the discriminator can no longer distinguish the distribution of the generator's outputs on the to-be-forgotten data from that of the original model's outputs on the third-party data. A membership inference attack is then carried out on the generator; if the attack finds that the to-be-forgotten data was not used to train the generator, forgetting has succeeded and the trained generator is used as the forgotten model. The method speeds up the forgetting of data in a model, and the effect is most pronounced in complex scenarios.

Owner:GUANGZHOU UNIVERSITY

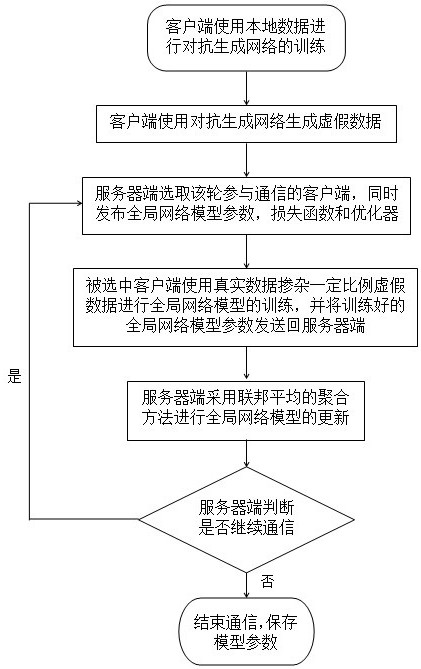

Differential privacy federal learning method for resisting member reasoning attack

Pending | CN114785559A | Improve performance | Improve accuracy | Machine learning | Neural architectures | Original data | Attack

The invention discloses a differential privacy federated learning method for resisting membership inference attacks. Specifically, each client trains a generative adversarial network model on its local data and uses it to generate fake data; in each round of federated learning communication, the server randomly selects the clients that take part in the round and issues the global network model parameters together with the loss function and optimizer used in training; each selected client trains the global network model on its fake data and sends the trained parameters back to the server; the server updates the global network model parameters using federated averaging; and the server decides whether to continue with another round, in which case it publishes the global network model parameters again, or otherwise ends communication and saves the global network model parameters. The method further protects client data privacy while the original data stays in its data islands, and it resists membership inference attacks.

Owner:NANJING UNIV OF SCI & TECH
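The server-side update named in the abstract above, federated averaging, can be sketched as a weighted mean of client parameters. Weighting by each client's sample count is the common FedAvg convention and is an assumption here, since the abstract does not state the weights; the flat list-of-floats parameter format is also just for illustration.

```python
def federated_average(client_params, client_sizes):
    """Average client parameter vectors, weighted by local sample counts."""
    if len(client_params) != len(client_sizes):
        raise ValueError("one size is required per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_params[0])
    return [
        sum(params[i] * size for params, size in zip(client_params, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical clients holding 1 and 3 (fake) samples respectively.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```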

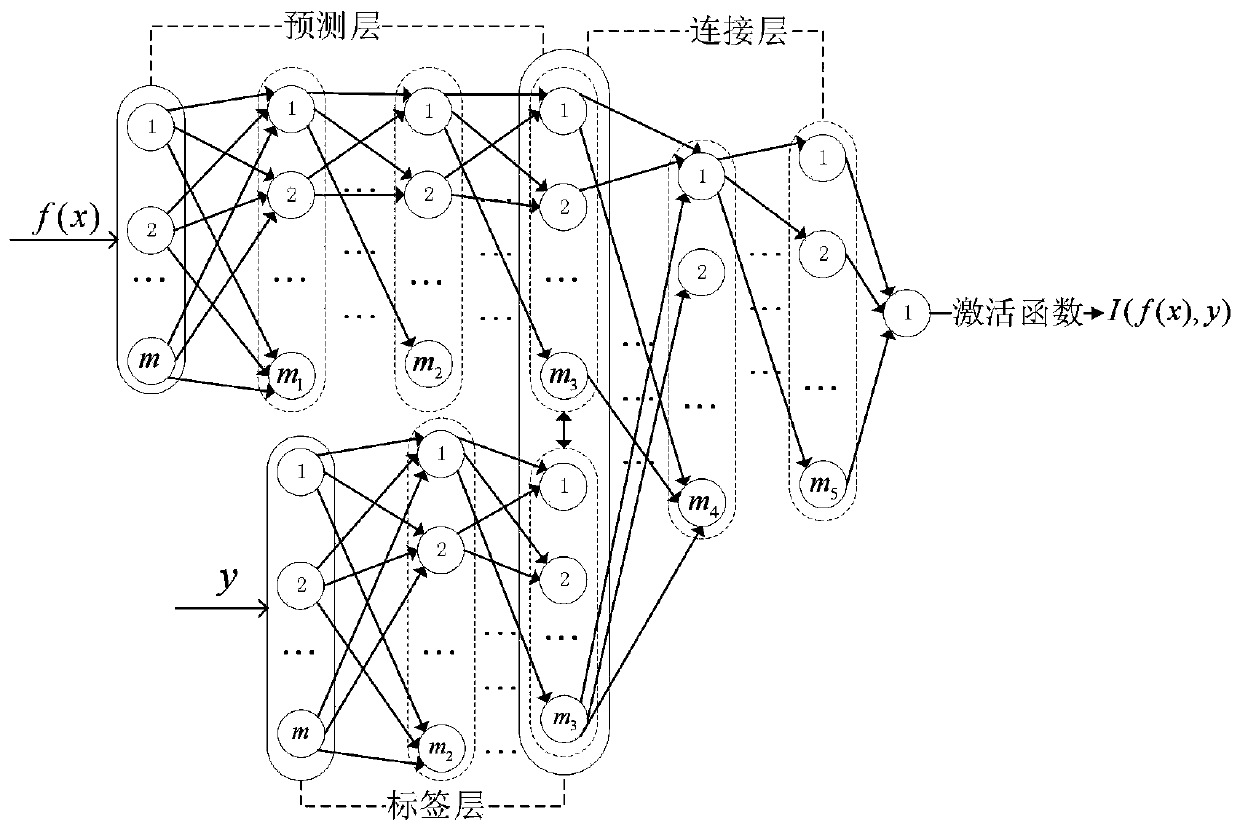

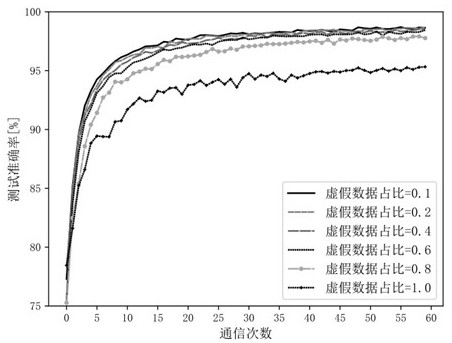

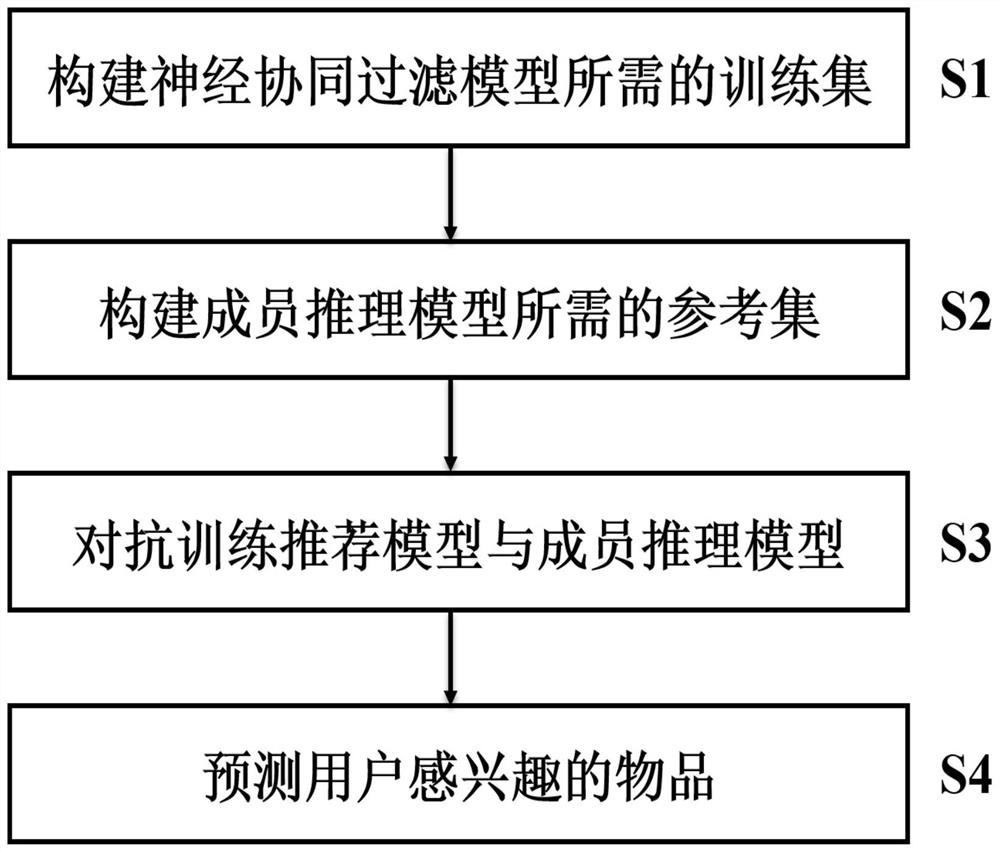

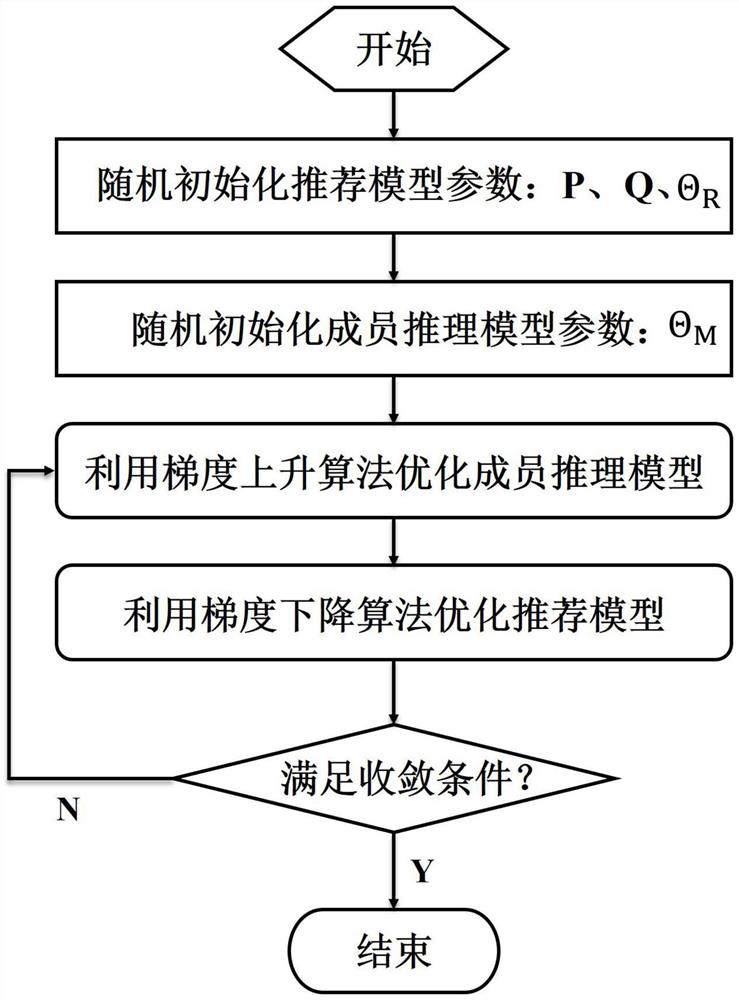

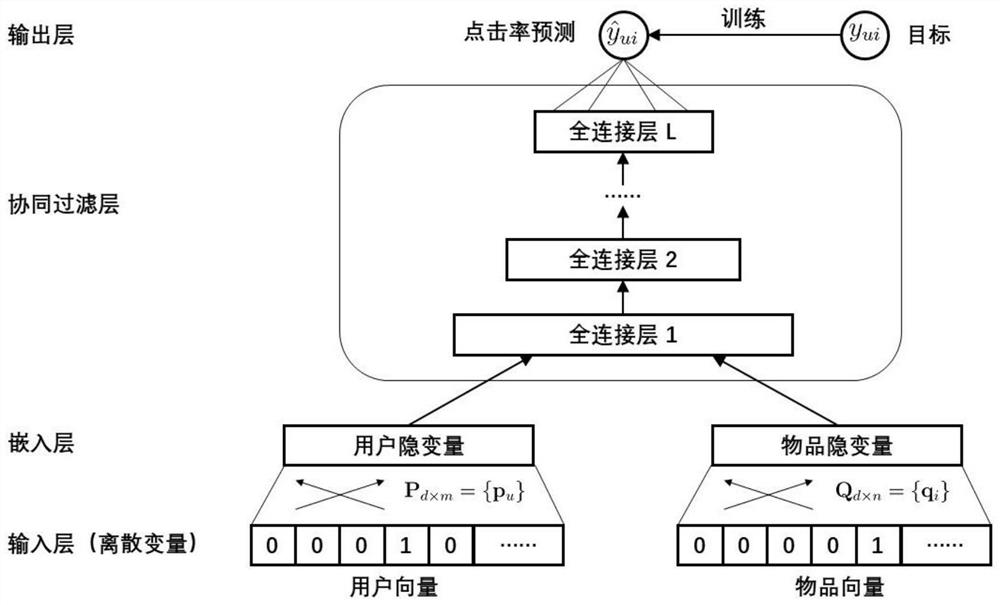

High-robustness privacy protection recommendation method based on adversarial learning

Pending | CN113918814A | Privacy protection | Two-way improvement in privacy protection | Data processing applications | Digital data information retrieval | Inference attack | Machine learning

The invention provides a high-robustness privacy protection recommendation method based on adversarial learning. The method comprises the following steps: constructing the training set needed to optimize a neural collaborative filtering model and the reference set needed to train a membership inference model; designing a neural collaborative filtering joint model with a membership inference regularization term, and iteratively optimizing the joint model by adversarial training on the training set and the reference set to obtain robust user and item feature representation matrices; predicting unobserved scores from the resulting user and item feature matrices; and recommending to each user the set of items with relatively high predicted scores with which the user has not yet interacted. The invention designs a unified min-max objective function in an adversarial training manner that explicitly gives the recommendation algorithm the ability to defend against membership inference attacks. It thus defends against such attacks, reduces overfitting of the recommendation model, and improves both the performance of the personalized recommendation algorithm and the privacy protection of the training data.

Owner:BEIJING JIAOTONG UNIV

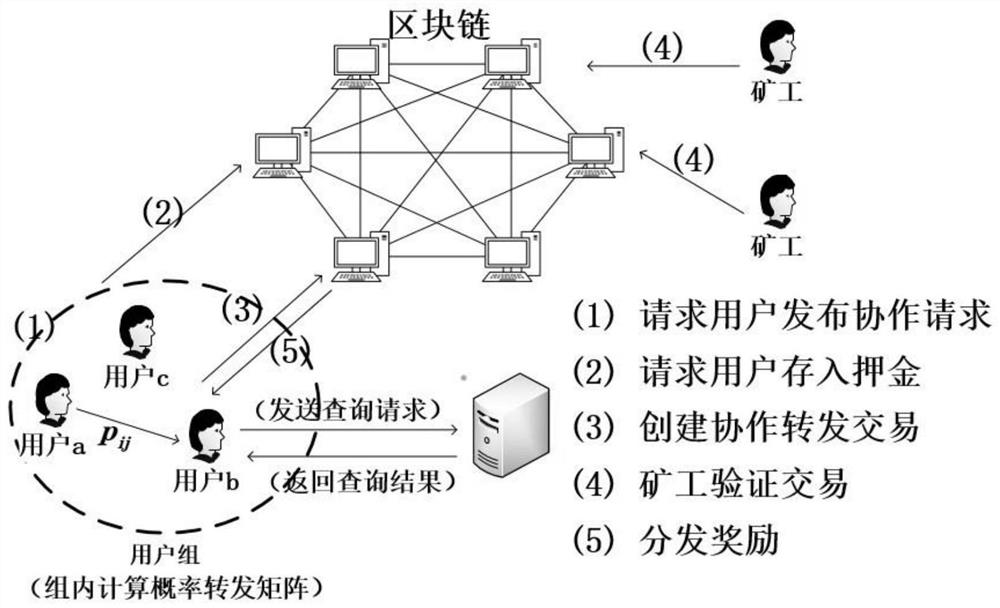

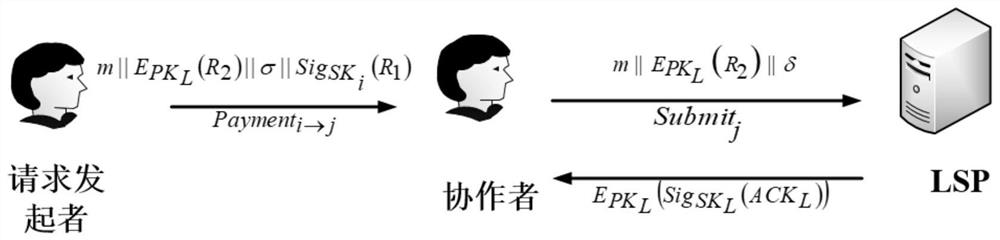

Collaborative location privacy protection method based on blockchain

Active | CN113595738A | Block Malicious Behavior | Promoting honest cooperation | User identity/authority verification | Computer network | Inference attack

The invention discloses a collaborative location privacy protection method based on blockchain. First, a reputation mechanism is designed: transaction behaviors are stored on the blockchain, and once dishonest behavior is found, the reputation score of the dishonest party is reduced. Second, security is analyzed using game theory. In addition, when the probability forwarding matrix is calculated, the utility cost and service quality of user forwarding and the expected distortion error of an attacker with background knowledge are taken into account, which raises the user's privacy level while maintaining service quality. Finally, simulation experiments and security analysis show that the method effectively prevents selfish behavior by users during cooperation and encourages users to take an active part in forwarding. The method improves the protection of user location privacy and prevents inference attacks.

Owner:NANJING UNIV OF TECH

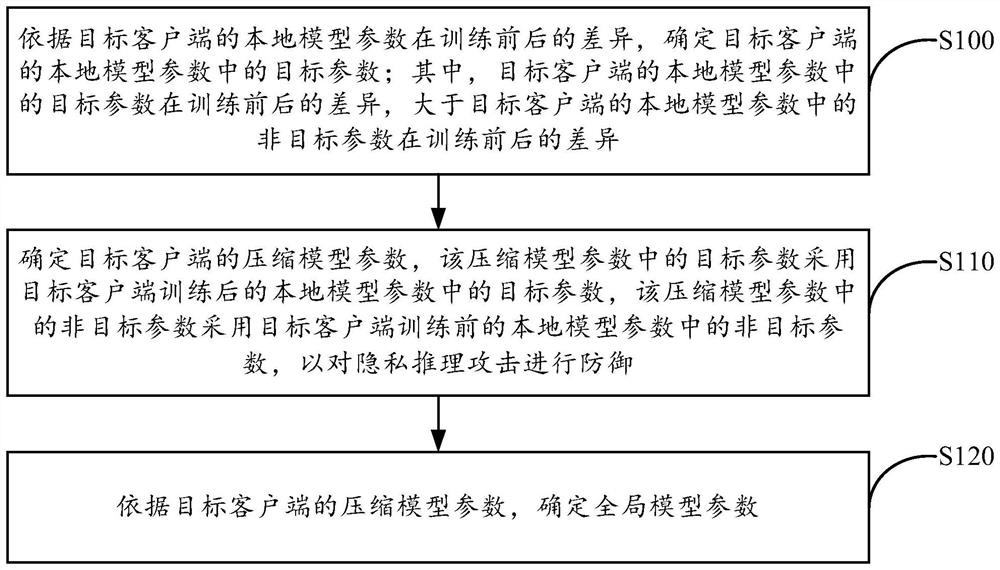

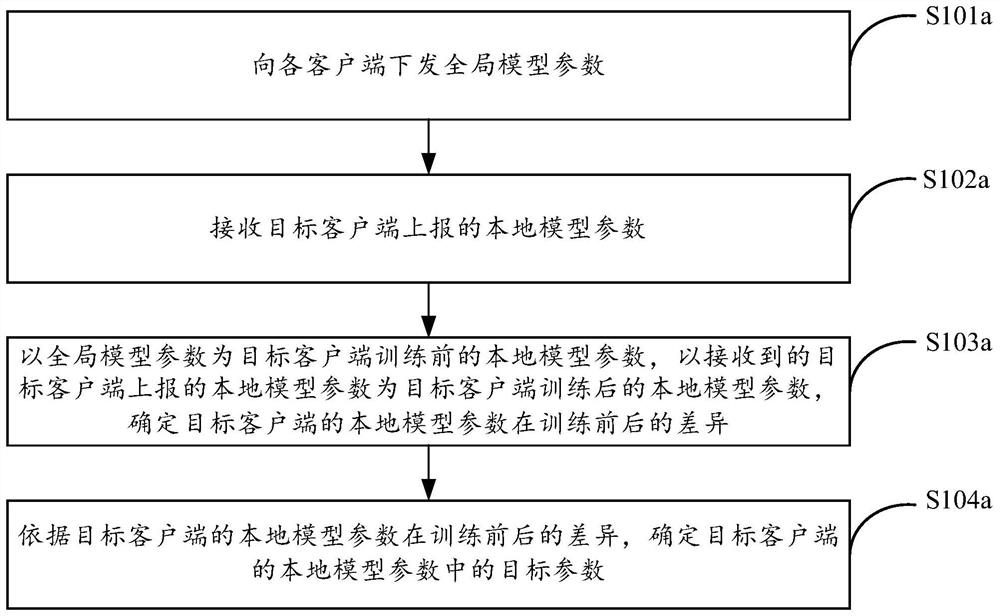

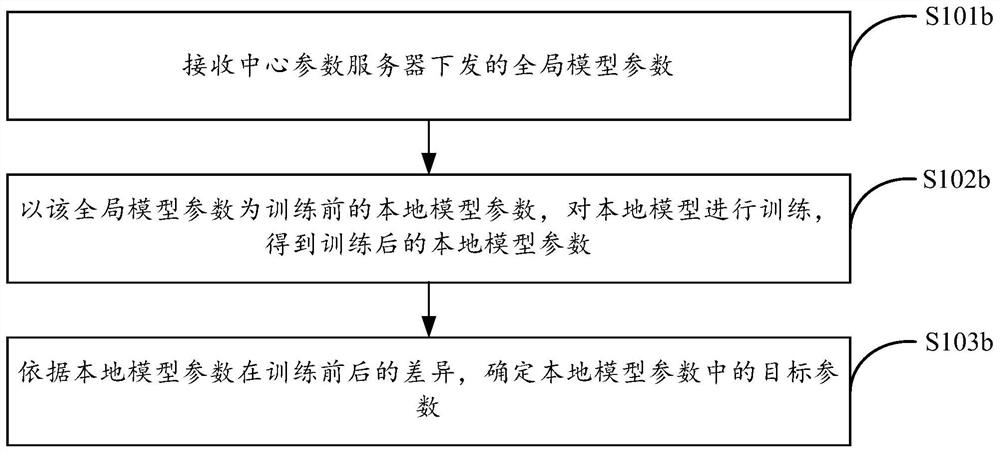

Federal learning privacy reasoning attack-oriented defense method based on parameter compression

Pending | CN114239049A | Protect local private data features | Achieve sparsification | Digital data protection | Machine learning | Inference attack | Attack

The invention provides a parameter compression-based defense method against privacy inference attacks in federated learning. The method comprises: determining target parameters among the local model parameters of a target client according to the difference between those parameters before and after training, so as to defend against privacy inference attacks; determining the compressed model parameters of the target client; and determining the global model parameters from the compressed model parameters of the target client. The method protects the features of a client's local private data while preserving the accuracy of the global model, and thereby defends against privacy inference attacks.

Owner:HANGZHOU HIKVISION DIGITAL TECH
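The compression step described in the abstract above can be illustrated with a minimal sketch. The abstract does not state how the target parameters are chosen from the before/after difference, so the top-k magnitude rule, the `keep_ratio` parameter, and the function names `compress_update` and `aggregate` are assumptions made for illustration only.

```python
def compress_update(before, after, keep_ratio=0.25):
    """Keep only the largest-magnitude parameter changes; zero the rest.

    Assumption: "target parameters" are the top-k entries of the
    before/after difference. The patent abstract does not specify this.
    """
    deltas = [a - b for a, b in zip(after, before)]
    k = max(1, int(len(deltas) * keep_ratio))
    # Indices of the k parameters that changed most during local training.
    top = sorted(range(len(deltas)), key=lambda i: abs(deltas[i]), reverse=True)[:k]
    keep = set(top)
    return [d if i in keep else 0.0 for i, d in enumerate(deltas)]


def aggregate(global_params, sparse_updates):
    """Add the average of the sparse client updates to the global parameters."""
    n = len(sparse_updates)
    return [g + sum(u[i] for u in sparse_updates) / n
            for i, g in enumerate(global_params)]


if __name__ == "__main__":
    update = compress_update([0.0, 0.0, 0.0, 0.0], [0.1, -0.9, 0.05, 0.3], keep_ratio=0.5)
    print(update)
    print(aggregate([1.0, 1.0, 1.0, 1.0], [update]))
```

Sending only a sparse subset of the changes means the server sees less of what each client's local data did to the model, which is the intuition behind the sparsification benefit listed for this patent.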

Privacy against inference attacks under mismatched prior

InactiveUS20160006700A1Digital data processing detailsRelational databasesInference attackMachine learning

Owner:THOMSON LICENSING SA

Deep model privacy protection method and device oriented to membership inference attack and based on parameter sharing

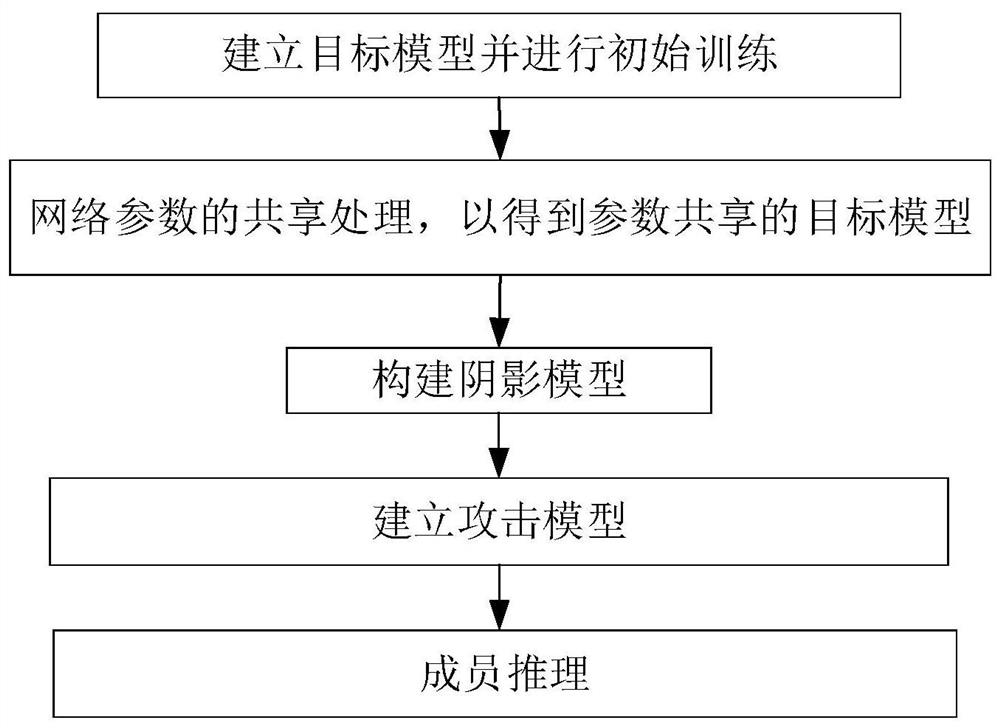

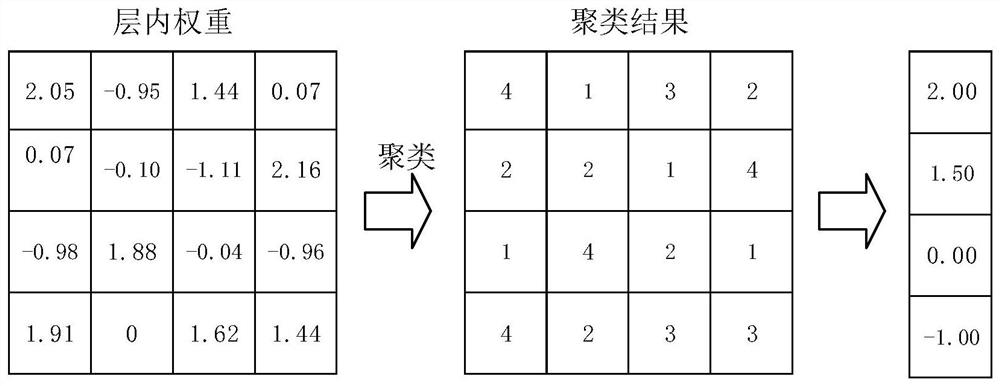

PendingCN113283537AAlleviate the problem of slow convergenceReduce overfittingCharacter and pattern recognitionDigital data protectionAttack modelAlgorithm

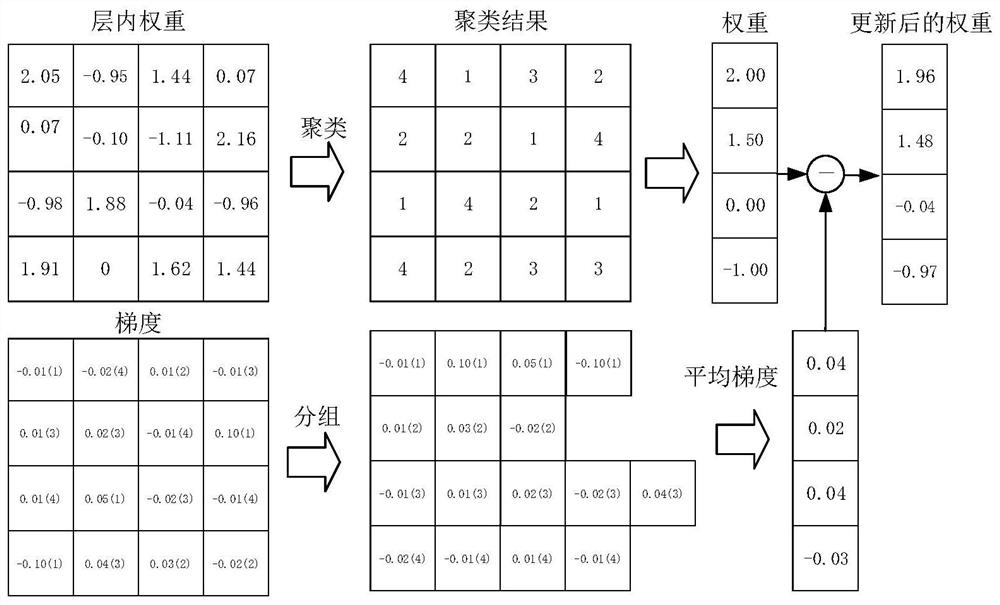

The invention discloses a deep model privacy protection method and device oriented to membership inference attacks and based on parameter sharing. The method comprises the following steps: constructing a target model, and optimizing the network parameters of the target model using image samples; after optimization is finished, clustering the network parameters of each layer of the target model, replacing the network parameters belonging to the same cluster with the average value of that cluster, and then optimizing the network parameters again; constructing a shadow model having the same structure as the target model, and optimizing the network parameters of the shadow model using the training image samples; constructing new image samples according to the shadow model; constructing an attack model, and optimizing the model parameters of the attack model using the new image samples; and obtaining the prediction confidence for an input test image using the target model enhanced by parameter sharing, inputting the prediction confidence into the optimized attack model, obtaining the prediction result of the attack model, and judging from that result whether the test image is a member sample of the target model.

Owner:ZHEJIANG UNIV OF TECH
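The parameter-sharing step in the abstract above (cluster each layer's weights, then replace every weight by its cluster mean) can be sketched as follows. The abstract does not name a clustering algorithm, so one-dimensional k-means with evenly spaced initial centroids is an assumption, as are the function name `share_weights` and its parameters.

```python
def share_weights(weights, n_clusters=2, n_iter=20):
    """Replace each weight of one layer with the mean of its cluster.

    Assumption: 1-D k-means is used for clustering; the patent abstract
    only says the parameters of each layer are clustered.
    """
    lo, hi = min(weights), max(weights)
    if n_clusters < 2 or lo == hi:
        mean = sum(weights) / len(weights)
        return [mean] * len(weights)

    # Initialise centroids evenly across the value range of the layer.
    centroids = [lo + (hi - lo) * i / (n_clusters - 1) for i in range(n_clusters)]
    labels = [0] * len(weights)
    for _ in range(n_iter):
        # Assign each weight to its nearest centroid.
        labels = [min(range(n_clusters), key=lambda c: abs(w - centroids[c]))
                  for w in weights]
        # Move each centroid to the mean of its assigned weights.
        for c in range(n_clusters):
            members = [w for w, lab in zip(weights, labels) if lab == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return [centroids[lab] for lab in labels]


if __name__ == "__main__":
    print(share_weights([0.10, 0.12, 0.90, 0.88, 0.11], n_clusters=2))
```

After sharing, the layer holds only `n_clusters` distinct values, which limits how closely the model can fit individual training samples.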

Membership inference attack-oriented deep model privacy protection method based on outlier detection

PendingCN113283536AReduced classification performanceImprove defenseCharacter and pattern recognitionDigital data protectionReference modelingFeature vector

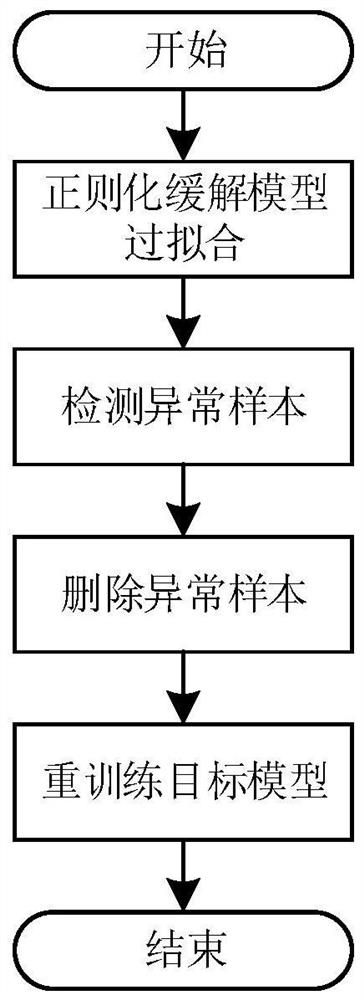

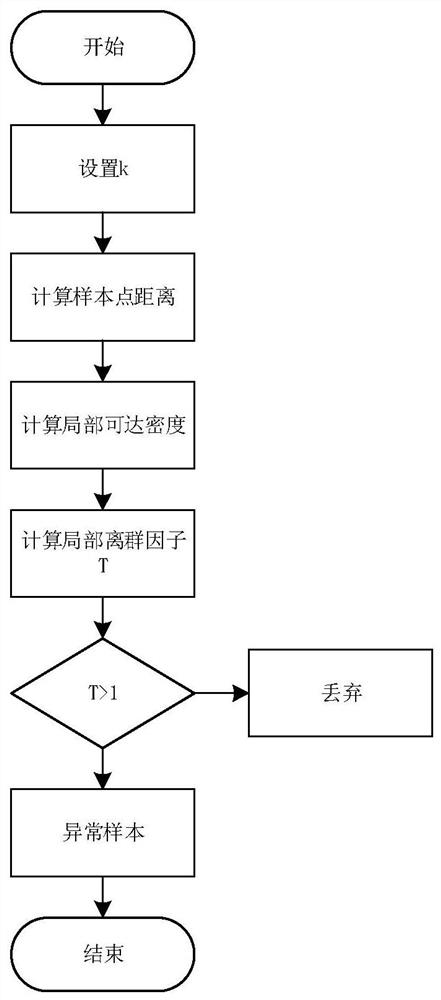

The invention discloses a membership inference attack-oriented deep model privacy protection method based on outlier detection. The method reduces the degree of overfitting of a target model through regularization, finds the abnormal samples that are most vulnerable to membership inference attacks on the model, and deletes those samples from the training set of the target model, so as to improve the privacy of the target model; finally, the target model is retrained to achieve the defense effect. To determine which samples are susceptible to membership inference attacks, the method comprises the following steps: establishing a reference model training set and a reference model, and training the reference model on that training set; inputting the samples to be tested into the reference model to obtain their feature vectors, and determining the distances between the feature vectors of different samples; and calculating the local outlier factor of each sample, wherein a sample with a local outlier factor greater than 1 is an abnormal sample. The method avoids the unstable gradients, non-convergent training, and slow convergence of traditional defense methods, and achieves relatively good defense performance.

Owner:ZHEJIANG UNIV OF TECH
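The outlier test in the abstract above (flag a sample when its local outlier factor exceeds 1) can be sketched in a few lines. This is the standard local outlier factor definition applied to feature vectors that are assumed to have already been extracted from a reference model; the neighbourhood size `k`, the Euclidean distance, and the function name `lof_scores` are assumptions, and the sketch assumes no duplicate points (duplicates would cause a division by zero).

```python
import math


def lof_scores(points, k=2):
    """Local outlier factor of every point, using Euclidean distance."""
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    # k nearest neighbours of each point, excluding the point itself.
    knn = [sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
           for i in range(n)]
    # Distance from each point to its k-th nearest neighbour.
    k_dist = [dist[i][knn[i][-1]] for i in range(n)]

    def local_reachability_density(i):
        reach = [max(k_dist[j], dist[i][j]) for j in knn[i]]
        return len(reach) / sum(reach)

    lrd = [local_reachability_density(i) for i in range(n)]
    # LOF: average density of the neighbours relative to the point's own density.
    return [sum(lrd[j] for j in knn[i]) / (len(knn[i]) * lrd[i]) for i in range(n)]


if __name__ == "__main__":
    features = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
    scores = lof_scores(features, k=2)
    abnormal = [i for i, s in enumerate(scores) if s > 1.0]
    print(scores, abnormal)
```

In the defense described above, the samples whose indices end up in `abnormal` would be removed from the training set before the target model is retrained.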

Patient medical privacy data protection method and device and computer storage medium

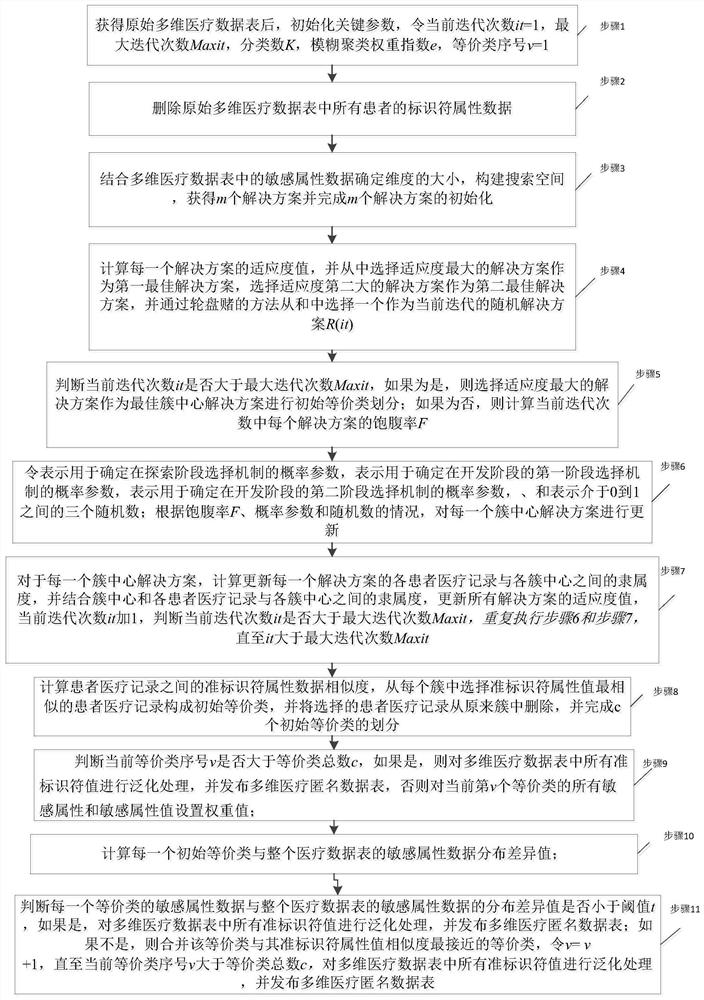

PendingCN114091097AImproved fitness calculation methodAvoid local optimaDigital data protectionArtificial lifeInference attackAttack

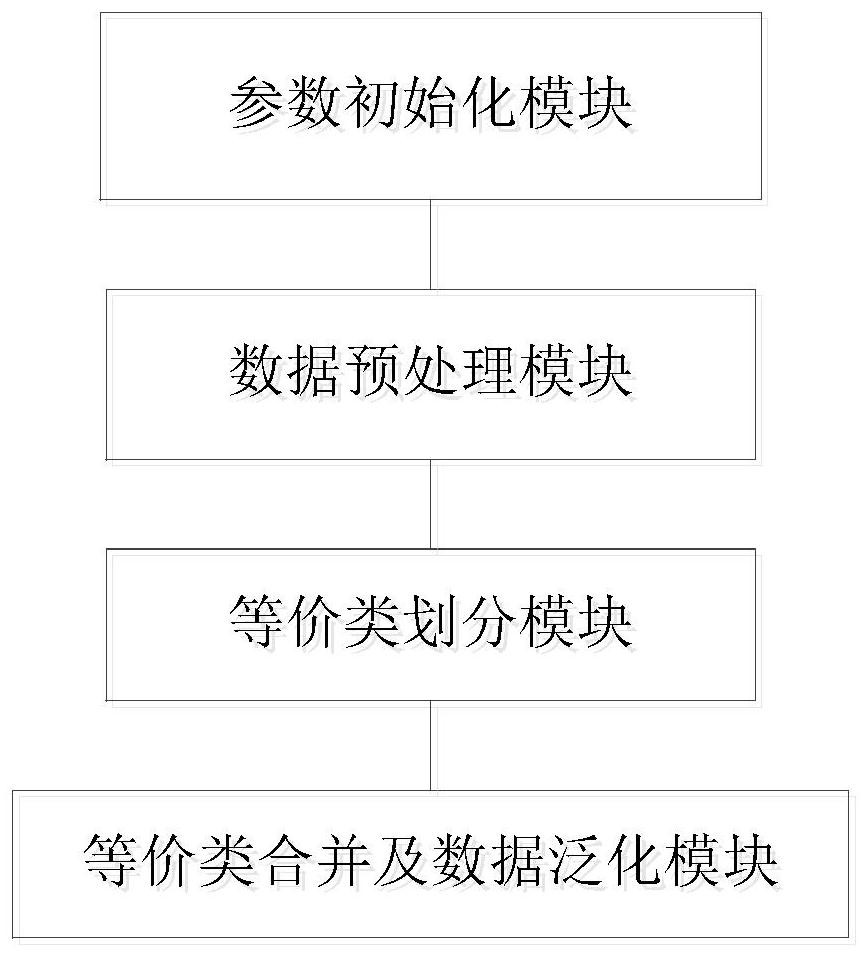

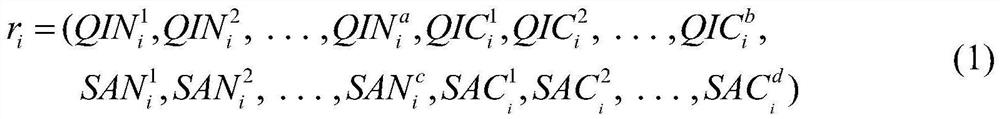

The invention provides a patient medical privacy data protection method and device and a computer storage medium, and relates to the field of privacy data protection. The method realizes multi-dimensional K-anonymous release of medical data through parameter initialization, data preprocessing, equivalence class division, equivalence class merging based on distribution distance measurement, and data generalization. The privacy of medical data is protected, and a multi-dimensional K-anonymity algorithm is applied to an intelligent medical scenario. In the prior art, mainstream protection algorithms against common inference attacks such as homogeneity attacks, similarity attacks, and skewness attacks consider privacy protection for only a single sensitive attribute. They do not consider that one medical data table may contain multiple sensitive attributes during actual anonymization, so they are difficult to apply directly to the release of medical information with multiple sensitive attributes and leave a risk of privacy disclosure. The invention solves these problems.

Owner:ZHEJIANG SHUREN UNIV
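The generalization and equivalence-class ideas in the abstract above can be illustrated with a basic K-anonymity check. This sketch covers only the classic single-table check, not the multi-dimensional algorithm or the distribution-distance merging described in the patent; the record layout (age, ZIP code), the bucket sizes, and the function names are hypothetical.

```python
from collections import Counter


def generalize(record, age_bucket=10, zip_digits=3):
    """Coarsen the quasi-identifiers (age, zipcode) of one record."""
    age, zipcode = record
    low = (age // age_bucket) * age_bucket
    age_range = f"{low}-{low + age_bucket - 1}"
    masked_zip = zipcode[:zip_digits] + "*" * (len(zipcode) - zip_digits)
    return (age_range, masked_zip)


def is_k_anonymous(records, k):
    """True if every equivalence class of generalized records has >= k members."""
    classes = Counter(generalize(r) for r in records)
    return all(size >= k for size in classes.values())


if __name__ == "__main__":
    patients = [(23, "31001"), (27, "31009"), (25, "31004"),
                (41, "31101"), (45, "31107"), (48, "31102")]
    print(generalize(patients[0]))
    print(is_k_anonymous(patients, 3), is_k_anonymous(patients, 4))
```

A table that passes this check can still leak sensitive values when everyone in an equivalence class shares the same diagnosis, which is the homogeneity attack the abstract mentions.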

Data set authentication method and system based on machine learning membership inference attack

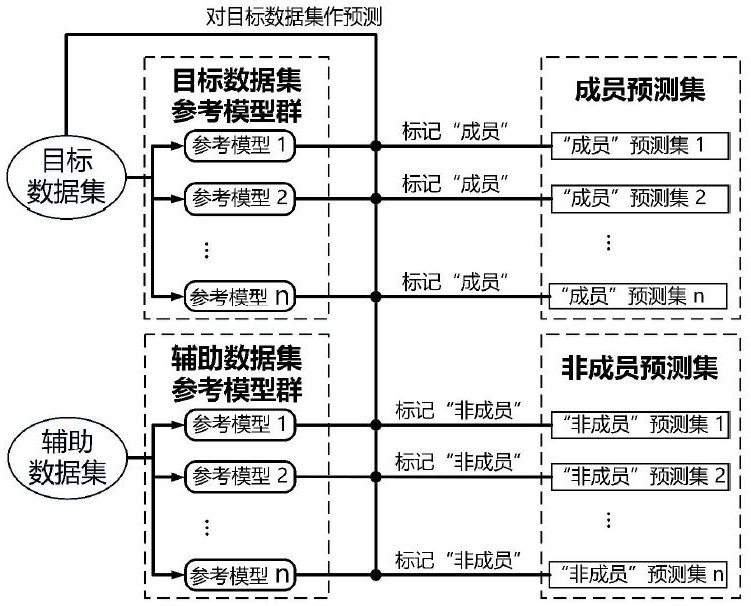

ActiveCN113259369AProtect interestsPrivacy protectionCharacter and pattern recognitionMachine learningInference attackMachine learning

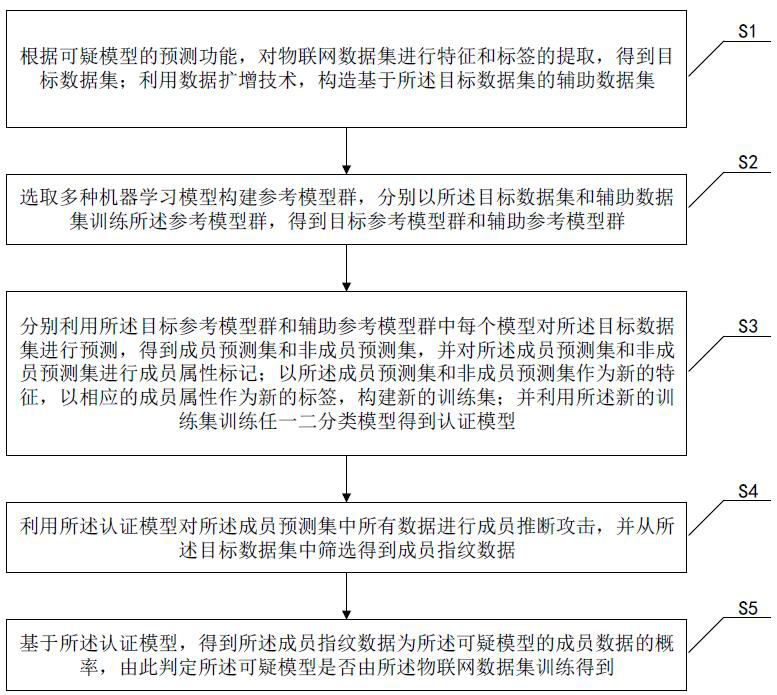

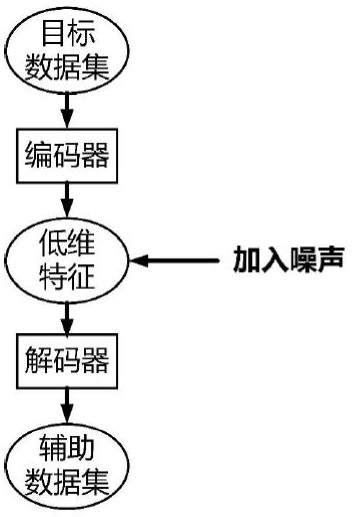

The invention discloses a data set authentication method and system based on a machine learning membership inference attack, and belongs to the field of data protection for the Internet of Things. The method comprises the steps of: after obtaining a target data set and an auxiliary data set, selecting a plurality of machine learning models and constructing a reference model group from each of the two data sets; predicting on the target data set with the two reference model groups to obtain a member prediction set and a non-member prediction set; training an authentication model using the member and non-member prediction sets as features and the corresponding membership attributes as labels; performing a membership inference attack on all data in the member prediction set using the authentication model, and screening member fingerprint data from the target data set; and, based on the authentication model, obtaining the probability that the member fingerprint data is member data of the suspicious model, thereby determining whether the suspicious model was trained on an Internet-of-Things data set. In this way, the interests and privacy of the data owner can be effectively protected.

Owner:HUAZHONG UNIV OF SCI & TECH

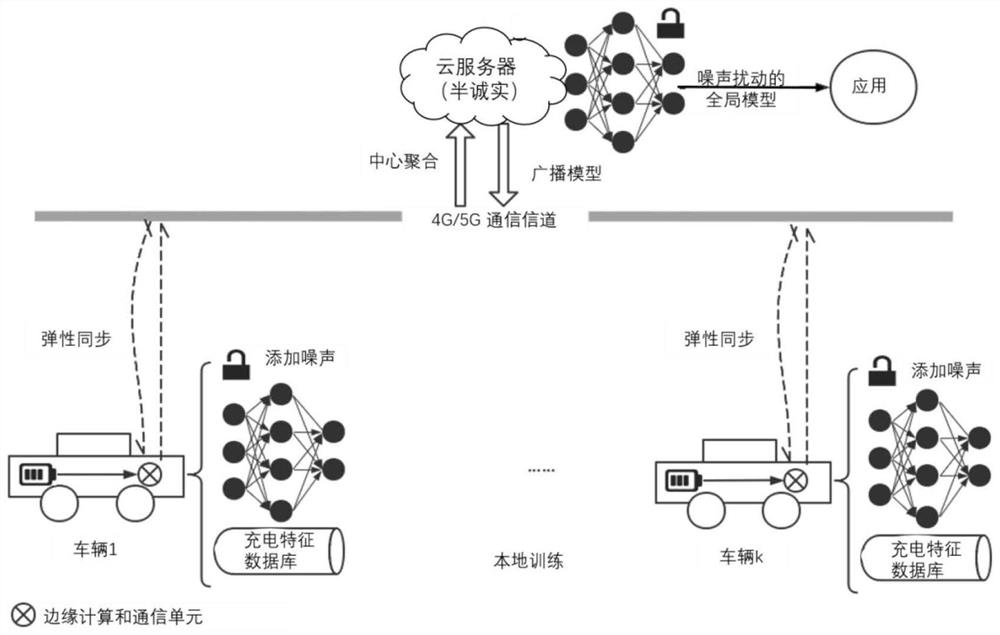

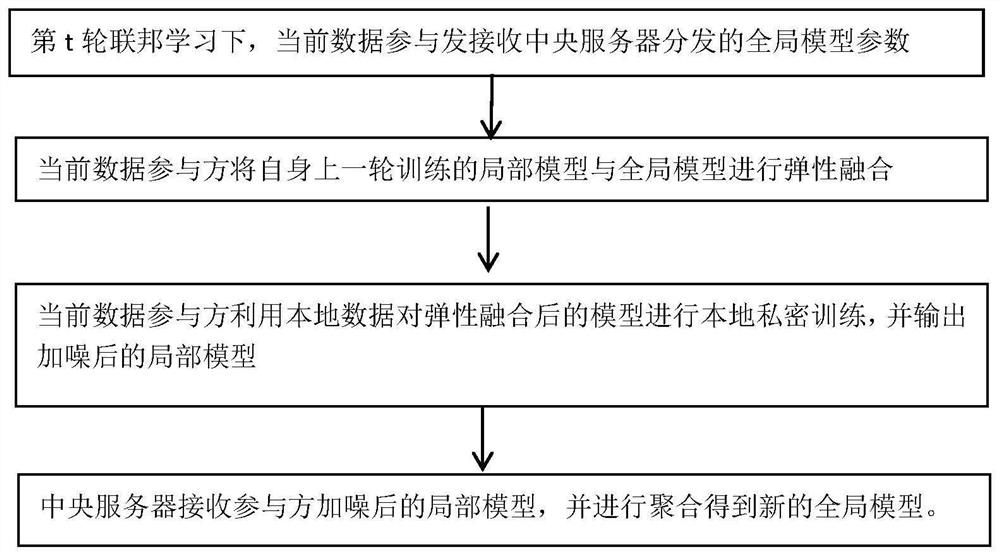

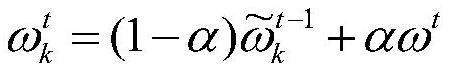

Differential privacy federated modeling method and device for resisting inference attack of semi-honest server

PendingCN114817922ALittle impact on accuracyHigh precisionCharacter and pattern recognitionPlatform integrity maintainanceInference attackDifferential privacy

The invention provides a differential privacy federated modeling method and device for resisting inference attacks by a semi-honest server. The method comprises the following steps: initializing a global model; the semi-honest central server selects a plurality of data participants to take part in the federated training according to a preset probability; each selected data participant downloads the global model and performs elastic fusion of the local model trained in the previous round with the global model to obtain an elastically fused local model; the selected data participant performs local private training on the elastically fused local model using local data to obtain a noise-perturbed local model, and then sends it to the semi-honest central server; and the semi-honest central server aggregates the noise-perturbed local models to obtain a perturbed global model. The method can improve the robustness of the joint modeling process and can also improve the precision of the model.

Owner:WUHAN UNIV OF TECH
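One round of the procedure in the abstract above can be sketched as follows. The abstract does not define the elastic fusion rule or the noise mechanism, so the convex combination with weight `alpha`, the L2 clipping, and the Gaussian noise are all assumptions, as are the function names. Local training is omitted, and no formal privacy guarantee is claimed for this sketch.

```python
import random


def elastic_fuse(local_prev, global_model, alpha=0.5):
    """Blend last round's local model with the downloaded global model.

    Assumption: elastic fusion is a convex combination with weight alpha.
    """
    return [alpha * l + (1 - alpha) * g for l, g in zip(local_prev, global_model)]


def perturb(params, clip=1.0, sigma=0.1, rng=random):
    """Clip the parameter vector to L2 norm `clip`, then add Gaussian noise."""
    norm = sum(p * p for p in params) ** 0.5
    scale = min(1.0, clip / norm) if norm > 0 else 1.0
    return [p * scale + rng.gauss(0.0, sigma * clip) for p in params]


def aggregate(models):
    """Server side: average the noise-perturbed local models."""
    n = len(models)
    return [sum(m[i] for m in models) / n for i in range(len(models[0]))]


if __name__ == "__main__":
    rng = random.Random(0)
    global_model = [0.2, -0.1]
    previous_locals = [[0.4, 0.0], [0.0, -0.3], [0.3, -0.2]]
    # The server samples participants with a preset probability (0.7 here).
    chosen = [m for m in previous_locals if rng.random() < 0.7]
    uploads = [perturb(elastic_fuse(m, global_model), rng=rng) for m in chosen]
    if uploads:
        print(aggregate(uploads))
```

Because noise is added on the participant side before upload, the server only ever sees perturbed models, which is what makes the scheme relevant to a semi-honest server.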

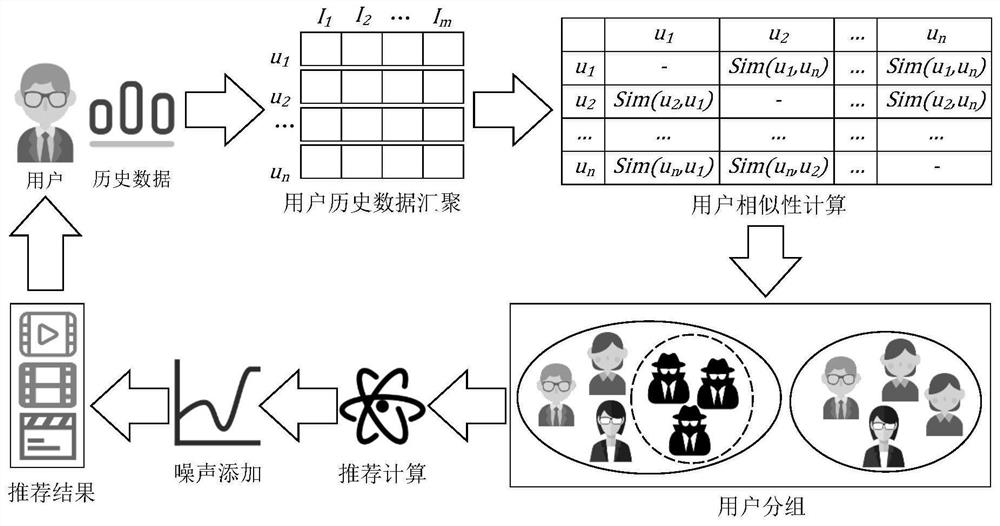

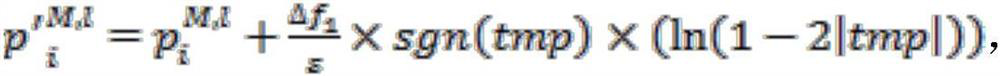

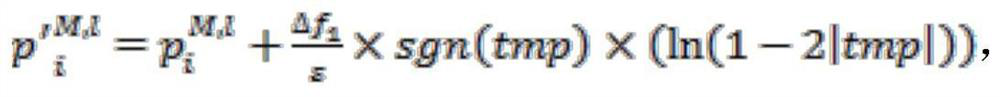

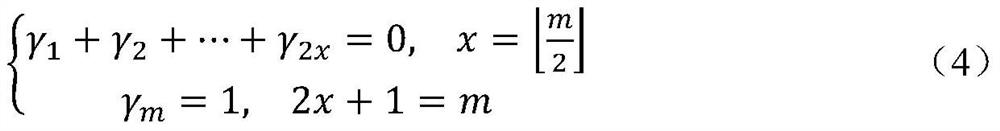

Differential privacy protection method for collusion inference attacks in collaborative filtering

PendingCN112487473AReduce differential privacy noise sizeDoes not change the calculation processDigital data information retrievalDigital data protectionInference attackAttack

The invention discloses a differential privacy protection method for collusion inference attacks in collaborative filtering, which comprises the following steps: grouping users according to user similarity and making recommendations according to the grouping result, on this basis associating strongly similar users into one integral user and returning the same recommendation result to the integral user; calculating the sensitivity before differential privacy noise is added, by analyzing the maximum possible influence of one user's data on any other recommendation result; and adding differential privacy noise to the recommendation function, and making recommendations to the user according to the perturbed function. Users who may be subjected to collusion attacks are associated and perturbed in a unified way, so inference attacks are prevented; on this basis the differential privacy noise is reduced and the recommendation accuracy is improved.

Owner:SOUTHEAST UNIV
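The noise-adding step in the abstract above can be illustrated with the Laplace mechanism, where the noise scale is the sensitivity divided by the privacy budget. The abstract does not name the noise distribution, so the choice of Laplace noise is an assumption, as are the function names and the `epsilon` parameter. The grouping of similar users is not shown.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_scores(scores, sensitivity, epsilon, rng=random):
    """Add Laplace noise with scale sensitivity / epsilon to each score.

    sensitivity: assumed bound on how much one user's data can change a score.
    epsilon: privacy budget; a smaller value means more noise.
    """
    scale = sensitivity / epsilon
    return [s + laplace_noise(scale, rng) for s in scores]


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical recommendation scores shared by one group of similar users.
    group_scores = [4.2, 3.1, 1.7]
    print(private_scores(group_scores, sensitivity=1.0, epsilon=0.5, rng=rng))
```

Returning one perturbed result to a whole group of strongly similar users, as the abstract describes, means colluding users in that group cannot average away independent noise draws.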

Federated learning membership inference attack defense method based on adversarial interference

PendingCN113792331AProtect local data privacyReduce accuracy lossDigital data protectionNeural architecturesData privacy protectionAttack

The invention relates to a federated learning membership inference attack defense method based on adversarial interference, and belongs to the technical field of federated learning privacy protection in machine learning. The method establishes a defense mechanism against membership inference attacks in federated learning: before each participant uploads the model parameters trained on its local data, carefully designed adversarial interference is added to the model parameters. As a result, the accuracy an attacker obtains when carrying out a membership inference attack on a model trained with the defense mechanism is as close to 50% as possible, while the influence on the performance of the target model is kept as small as possible. The requirements of user data privacy protection and cooperative training of a high-performance model in a federated learning scenario are thus met at the same time.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
