No-reference contrast distortion image quality evaluation method

A technology for evaluating the quality of contrast-distorted images, applicable to image enhancement, image analysis, image data processing, etc. It addresses problems such as poor performance and aims to ensure the accuracy and effectiveness of the evaluation.

Examples

Embodiment 1

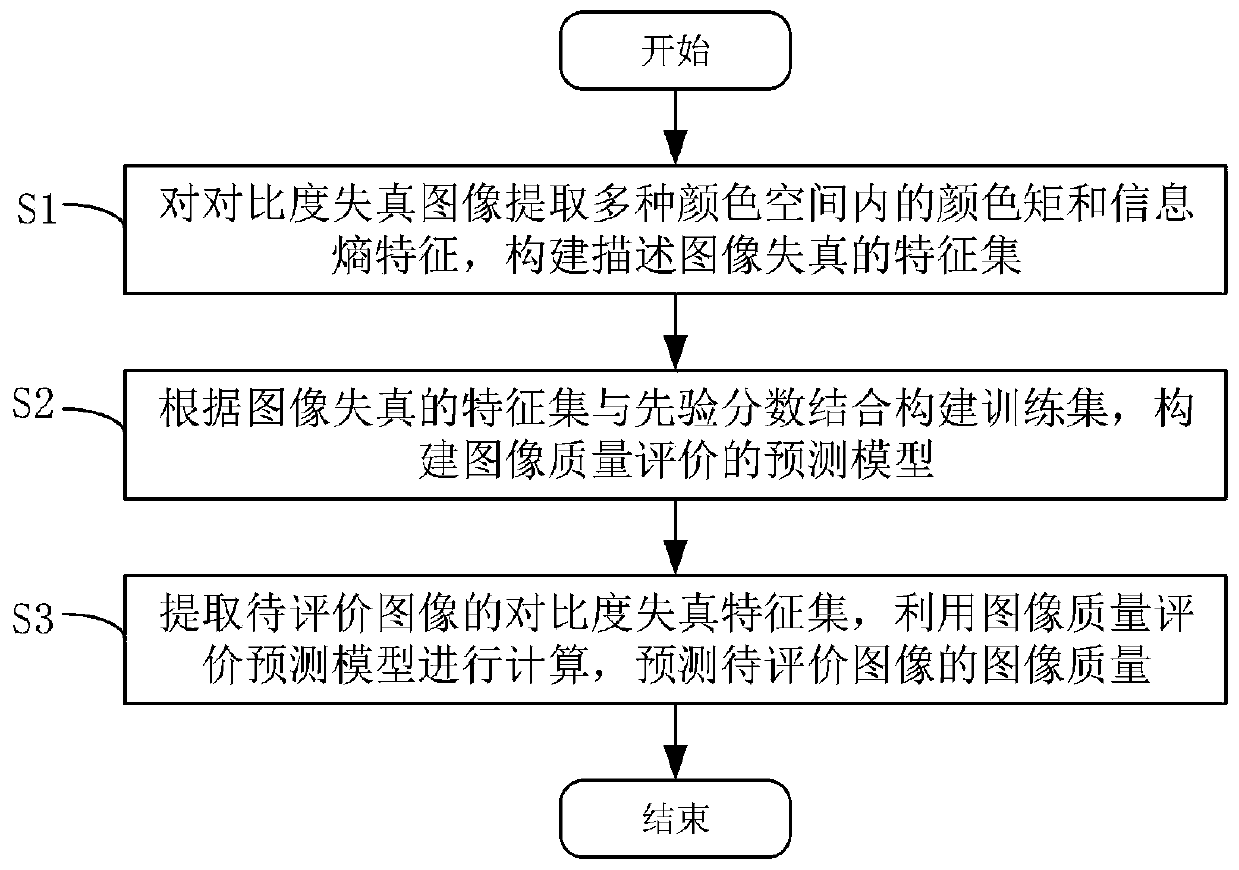

[0053] As shown in Figure 1, a no-reference contrast distortion image quality evaluation method includes the following steps:

[0054] S1: Extract color moment and information entropy features in multiple color spaces from contrast-distorted images, and construct a feature set describing the image distortion;

[0055] S2: Construct a training set from the image distortion feature sets and the prior scores, and build a prediction model for image quality evaluation;

[0056] S3: Extract the contrast distortion feature set of the image to be evaluated, and apply the image quality evaluation prediction model to it to predict the quality of the image to be evaluated.
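The train-then-predict flow of steps S2 and S3 can be illustrated with a minimal sketch. The visible text does not say which prediction model is used, so a k-nearest-neighbour regressor stands in here purely for brevity; the function names `train` and `predict`, the parameter `k`, and all numbers in the usage note are hypothetical and not taken from the source. Feature vectors are assumed to have been produced already by step S1.

```python
import math


def train(feature_sets, prior_scores):
    """S2 (stand-in): pair each training feature vector with its prior score.

    For a nearest-neighbour regressor the "model" is simply the stored
    training set. This is an assumption, not the model named by the source.
    """
    if len(feature_sets) != len(prior_scores):
        raise ValueError("each feature set needs exactly one prior score")
    if not feature_sets:
        raise ValueError("training set must not be empty")
    return list(zip(feature_sets, prior_scores))


def predict(model, features, k=3):
    """S3 (stand-in): average the prior scores of the k nearest training images."""
    # Rank training samples by Euclidean distance in feature space.
    ranked = sorted(model, key=lambda pair: math.dist(pair[0], features))
    nearest = ranked[:k]
    return sum(score for _, score in nearest) / len(nearest)
```

With made-up two-dimensional features, `model = train([[0.1, 0.2], [0.9, 0.8], [0.5, 0.5]], [2.0, 4.0, 3.0])` followed by `predict(model, [0.1, 0.2], k=1)` returns the score of the closest training sample. Any regressor that maps a feature vector to a score could replace the stand-in without changing the S1 to S3 structure.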

[0057] In a specific implementation, the no-reference contrast distortion image quality evaluation method provided by the present invention first converts the input image from the RGB color space to the CIELab color space, and uses color moments and information entropy as the representation...

Embodiment 2

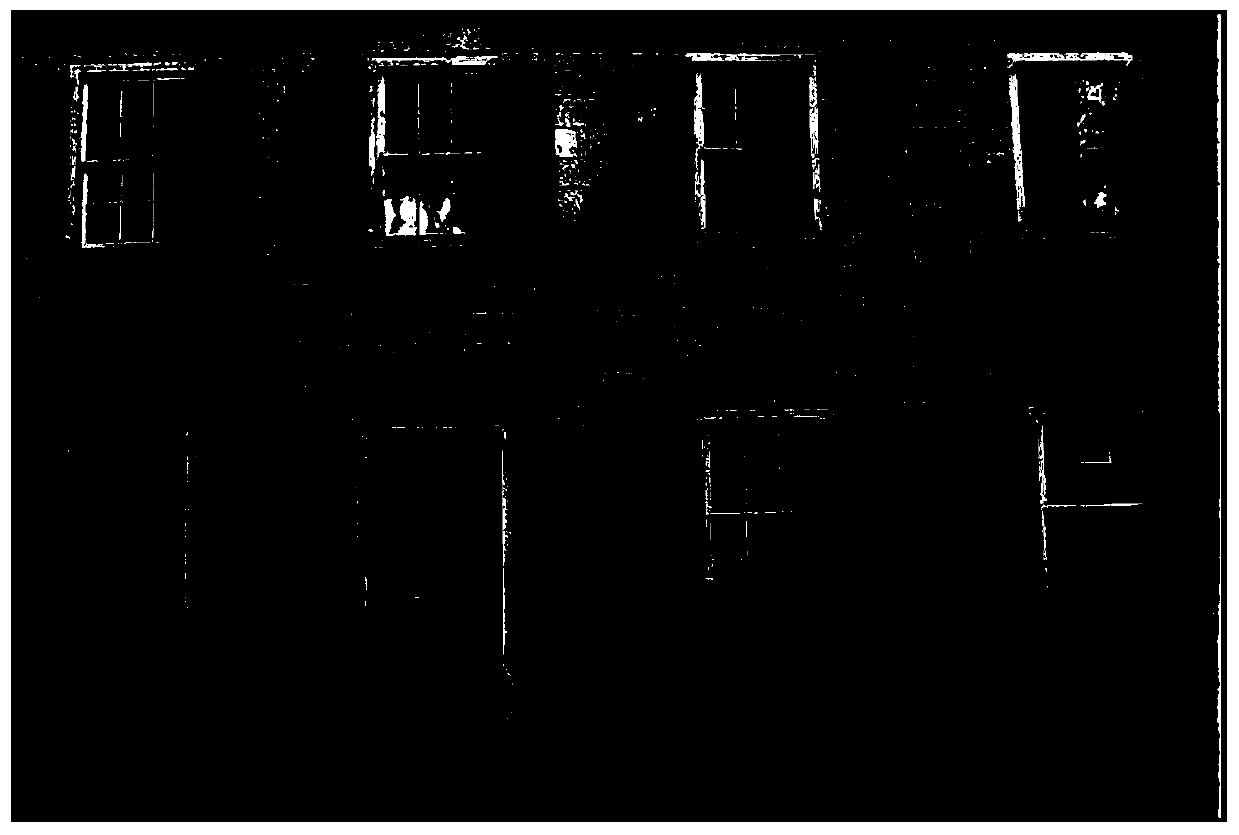

[0059] More specifically, on the basis of Embodiment 1, for the image to be evaluated shown in Figure 2, step S1 includes the following steps:

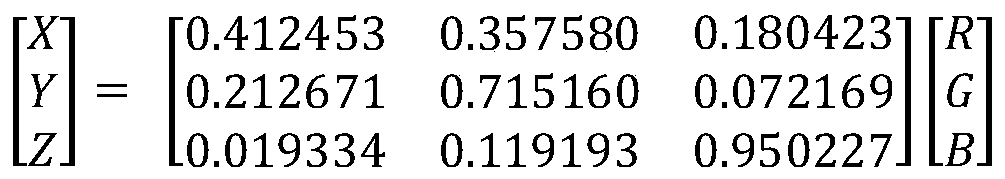

[0060] S11: Convert the image from the RGB color space to the XYZ color space, and then from the XYZ color space to the CIELab color space. The three channels of the RGB color space are denoted R, G, B; the three channels of the CIELab color space are denoted L, a, b;

[0061] S12: Extract the first- to third-order central color moment features from the six color channels obtained in step S11, where i = {1, 2, 3} denotes the order of the color moment and j = {R, G, B, L, a, b} denotes the color channel across the two color spaces;

[0062] S13: Extract information entropy features from the six color channels obtained in step S11, denoted H_j, where j = {R, G, B, L, a, b} denotes the color channel across the two color spaces.
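Steps S11 to S13 can be sketched in plain Python as follows. Several choices below are assumptions, since the visible text does not fix them: the input is treated as 8-bit sRGB with a D65 white point; the three moments follow the common color-moment convention (mean, standard deviation, and cube root of the third central moment); and entropy is computed from a 256-bin histogram over an assumed value range per channel. All function names are hypothetical.

```python
import math


def srgb_to_lab(r, g, b):
    """S11: 8-bit sRGB -> XYZ -> CIELab (assumes sRGB primaries, D65 white)."""
    def linearize(c):
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def color_moments(values):
    """S12: mean, standard deviation, and cube root of the third central moment."""
    n = len(values)
    mean = sum(values) / n
    second = sum((v - mean) ** 2 for v in values) / n
    third = sum((v - mean) ** 3 for v in values) / n
    # Signed cube root keeps the direction of the skew.
    return mean, math.sqrt(second), math.copysign(abs(third) ** (1 / 3), third)


def entropy(values, lo=0.0, hi=255.0, bins=256):
    """S13: Shannon entropy (bits) of a histogram over [lo, hi]."""
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / (hi - lo) * bins)
        counts[max(0, min(idx, bins - 1))] += 1
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def feature_set(pixels):
    """Build moments and entropy for channels R, G, B, L, a, b.

    pixels: list of (R, G, B) tuples with values in 0..255.
    """
    labs = [srgb_to_lab(*p) for p in pixels]
    channels = [
        ([p[0] for p in pixels], 0.0, 255.0),      # R
        ([p[1] for p in pixels], 0.0, 255.0),      # G
        ([p[2] for p in pixels], 0.0, 255.0),      # B
        ([c[0] for c in labs], 0.0, 100.0),        # L
        ([c[1] for c in labs], -128.0, 127.0),     # a (assumed range)
        ([c[2] for c in labs], -128.0, 127.0),     # b (assumed range)
    ]
    features = []
    for values, lo, hi in channels:
        features.extend(color_moments(values))
        features.append(entropy(values, lo=lo, hi=hi))
    return features
```

Under these assumptions each image yields 24 values: three moments plus one entropy for each of the six channels described in S12 and S13. The histogram ranges for the a and b channels are a convenience choice and would need adjusting for data outside that interval.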

[0063] More specifically...
