Method, device, and medium for generating a synonymous sentence generation model

A technology for generating a synonymous sentence generation model, with a corresponding device, applied in semantic analysis, instruments, electrical digital data processing, and related fields. It addresses the high cost of manual labeling and the scarcity of parallel data.


AI Technical Summary

Problems solved by technology

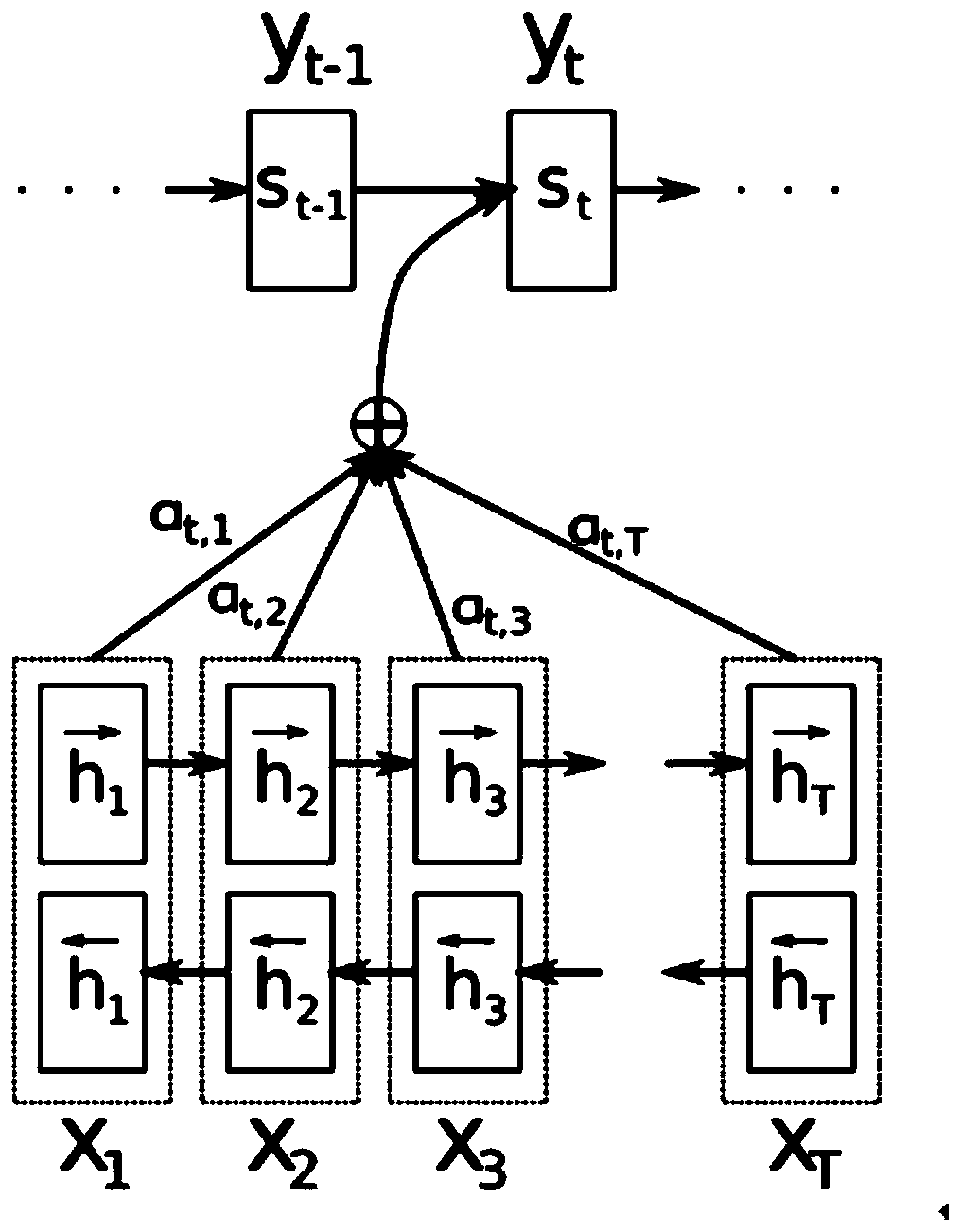


Examples

Example 1

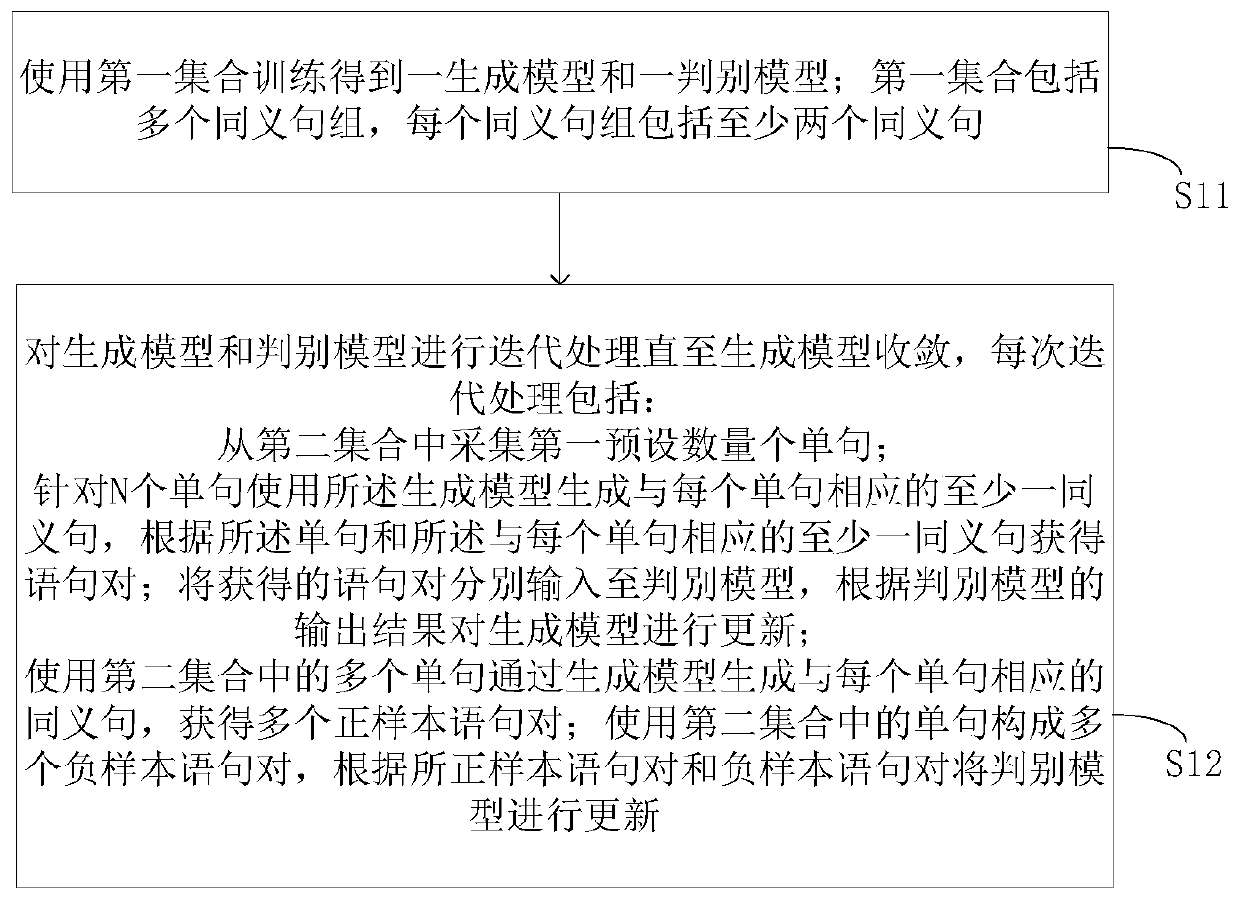

[0106] Collect N single sentences from the second set. For M of the N single sentences, use the generative model to generate a synonymous sentence for each, obtaining M positive sample sentence pairs. Use the M single sentences together with the remaining N-M single sentences to form M negative sample sentence pairs, where M is smaller than N. When N-M is greater than M, the M single sentences are paired with M single sentences selected from the N-M. When N-M is less than M, single sentences are reused, with different correspondences formed between the M single sentences and the N-M single sentences, so that M negative sample sentence pairs are still obtained. That is to say, the N-M single sentences can be reused to form M negative sample sentence pair...
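The pairing rule in this paragraph can be sketched in Python as follows. This is a minimal illustration, not the patent's implementation: the function name `build_sample_pairs`, the label values 1 and 0, and the `generate` callable standing in for the generative model are all hypothetical. The sketch also assumes the M sentences are simply the first M of the N, and that partners are taken from the remaining N-M in order, cycling when there are fewer than M of them.

```python
from itertools import cycle, islice
from typing import Callable, List, Tuple

# (sentence_a, sentence_b, label); label 1 = synonymous, 0 = not synonymous
Pair = Tuple[str, str, int]


def build_sample_pairs(
    sentences: List[str],
    m: int,
    generate: Callable[[str], str],
) -> Tuple[List[Pair], List[Pair]]:
    """Build M positive and M negative sample pairs from N single sentences."""
    n = len(sentences)
    if not 0 < m < n:
        raise ValueError("M must satisfy 0 < M < N")

    # Assumption: the M sentences are the first M of the N collected.
    chosen, rest = sentences[:m], sentences[m:]

    # Positive pairs: each chosen sentence with its generated synonym.
    positives = [(s, generate(s), 1) for s in chosen]

    # Negative pairs: each chosen sentence with one of the other N-M sentences.
    # cycle() reuses the N-M sentences when there are fewer than M of them;
    # when there are more, only the first M are taken.
    partners = list(islice(cycle(rest), m))
    negatives = [(s, t, 0) for s, t in zip(chosen, partners)]
    return positives, negatives
```

With N = 5 and M = 3, the two remaining sentences are reused so that three negative pairs are still produced. A random selection of partners would fit the description equally well; taking them in order just keeps the sketch deterministic.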

Example 2

[0108] Collect 2N single sentences from the second set. For the first N of the 2N single sentences, use the generative model to generate a synonymous sentence for each, obtaining N positive sample sentence pairs; use the last N of the 2N single sentences to form N negative sample sentence pairs.
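A short Python sketch of this 2N split is given below. The paragraph does not state how the last N sentences are matched to form negative pairs, so the sketch assumes the i-th sentence of the first half is paired with the i-th sentence of the last half. The name `build_balanced_pairs`, the labels, and the `generate` callable (a stand-in for the generative model) are hypothetical.

```python
from typing import Callable, List, Tuple

# (sentence_a, sentence_b, label); label 1 = synonymous, 0 = not synonymous
Pair = Tuple[str, str, int]


def build_balanced_pairs(
    sentences: List[str],
    generate: Callable[[str], str],
) -> Tuple[List[Pair], List[Pair]]:
    """Split 2N sentences into halves and build N positive and N negative pairs."""
    if not sentences or len(sentences) % 2 != 0:
        raise ValueError("expected a non-empty list of 2N sentences")
    n = len(sentences) // 2
    first, last = sentences[:n], sentences[n:]

    # Positive pairs come from the first N sentences and their generated synonyms.
    positives = [(s, generate(s), 1) for s in first]

    # Assumption: negatives pair the i-th sentence of the first half
    # with the i-th sentence of the last half.
    negatives = [(s, t, 0) for s, t in zip(first, last)]
    return positives, negatives
```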

[0109] Method 2: collect a fifth preset number of single sentences from the second set and use the generative model to generate a synonymous sentence for each of them, obtaining a fifth preset number of positive sample sentence pairs; then use these single sentences together with a sixth preset number of other single sentences in the second set to form a seventh preset number of negative sample sentence pairs.

[0110] For example:

[0111] Collect X single sentences from the second set, use the generative model ...

Specific Embodiment

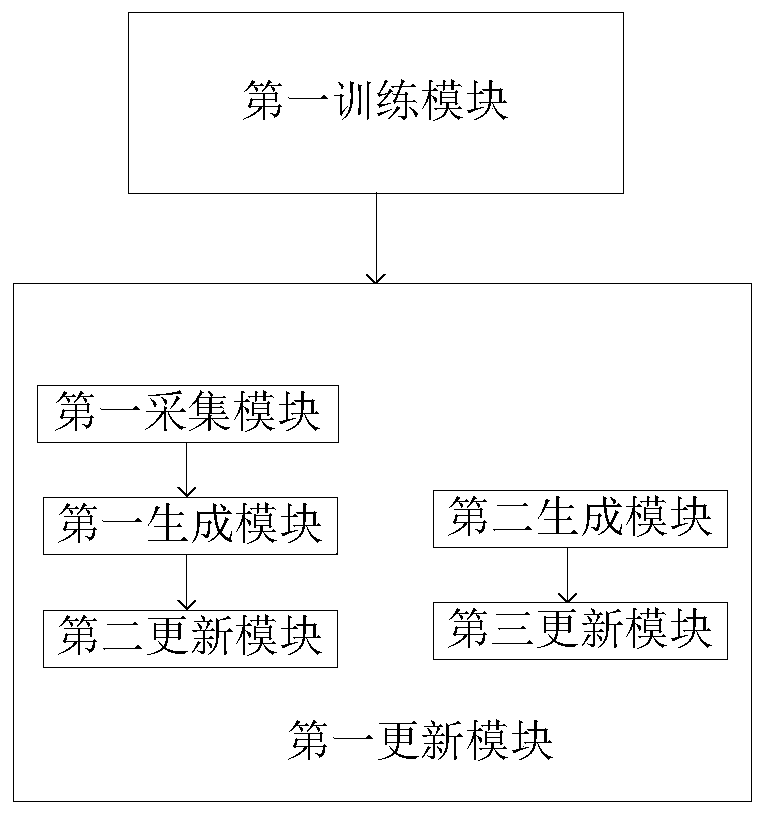

[0125] Step 1, data preparation process:

[0126] A large number of synonymous sentence groups are determined by manual labeling to form a first set S. The first set S includes multiple synonymous sentence groups, and each synonymous sentence group includes two or more synonymous sentences.

[0127] Single sentences on the order of millions are collected over the network from Chinese websites, either randomly or according to preset domain branches, and the collected single sentences form the second set C.

[0128] Step 2, pre-training process:

[0129] Step 2.1: the first set S is used for pre-training to obtain a generative model G, expressed as Y=G(X), where X and Y are synonymous sentences. The first set S comprises a plurality of synonymous sentence groups. When a synonymous sentence group comprises two synonymous sentences, those two sentences are used for training; when a synonymous sentence group comprises more than two synonymous sentences, any tw...
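As a rough illustration of how (X, Y) training pairs might be drawn from the first set S, the Python sketch below enumerates sentence pairs within each synonymous sentence group. The source text is truncated at this point, so the handling of groups with more than two sentences is an assumption: the sketch takes every unordered pair of sentences in the group. The function name `training_pairs` is hypothetical.

```python
from itertools import combinations
from typing import Iterable, List, Sequence, Tuple


def training_pairs(groups: Iterable[Sequence[str]]) -> List[Tuple[str, str]]:
    """Turn synonymous sentence groups into (X, Y) pairs for pre-training G."""
    pairs: List[Tuple[str, str]] = []
    for group in groups:
        if len(group) < 2:
            # A group needs at least two sentences to yield a pair.
            continue
        # A group of two yields exactly one pair.
        # Assumption: a larger group yields every unordered pair of its sentences.
        pairs.extend(combinations(group, 2))
    return pairs
```

A group of three sentences yields three pairs under this assumption. Whether each pair should also be used in the reverse direction (Y, X) is not stated in the visible text and is left out here.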
