Viewpoint invariant visual servoing of robot end effector using recurrent neural network

A technique involving a robot end effector and a neural network model, applied in fields such as biological neural network models, probabilistic networks, and neural architectures. It addresses problems such as task failure, long execution time, and robot wear and tear, and improves robustness and/or accuracy.

Embodiment Construction

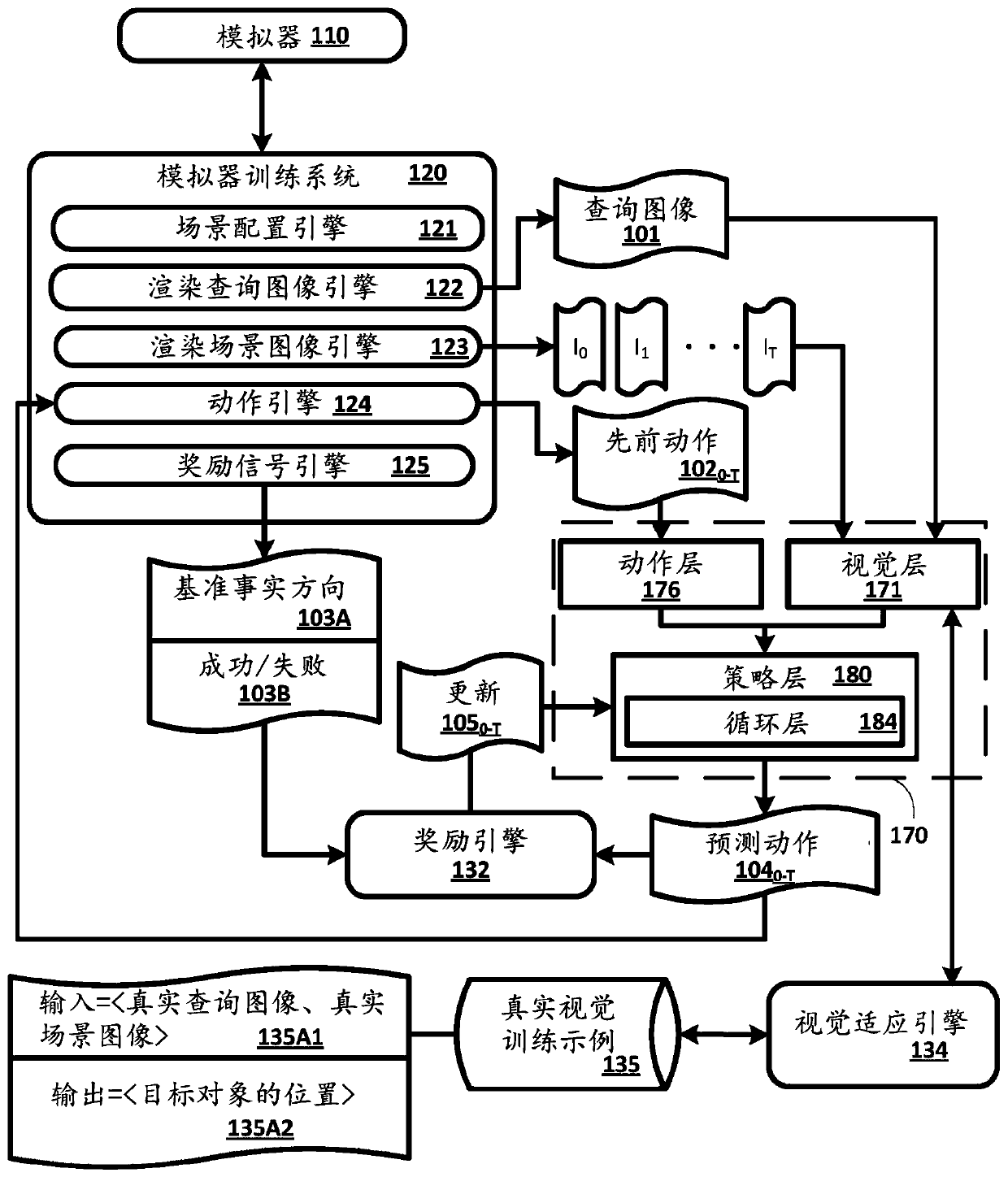

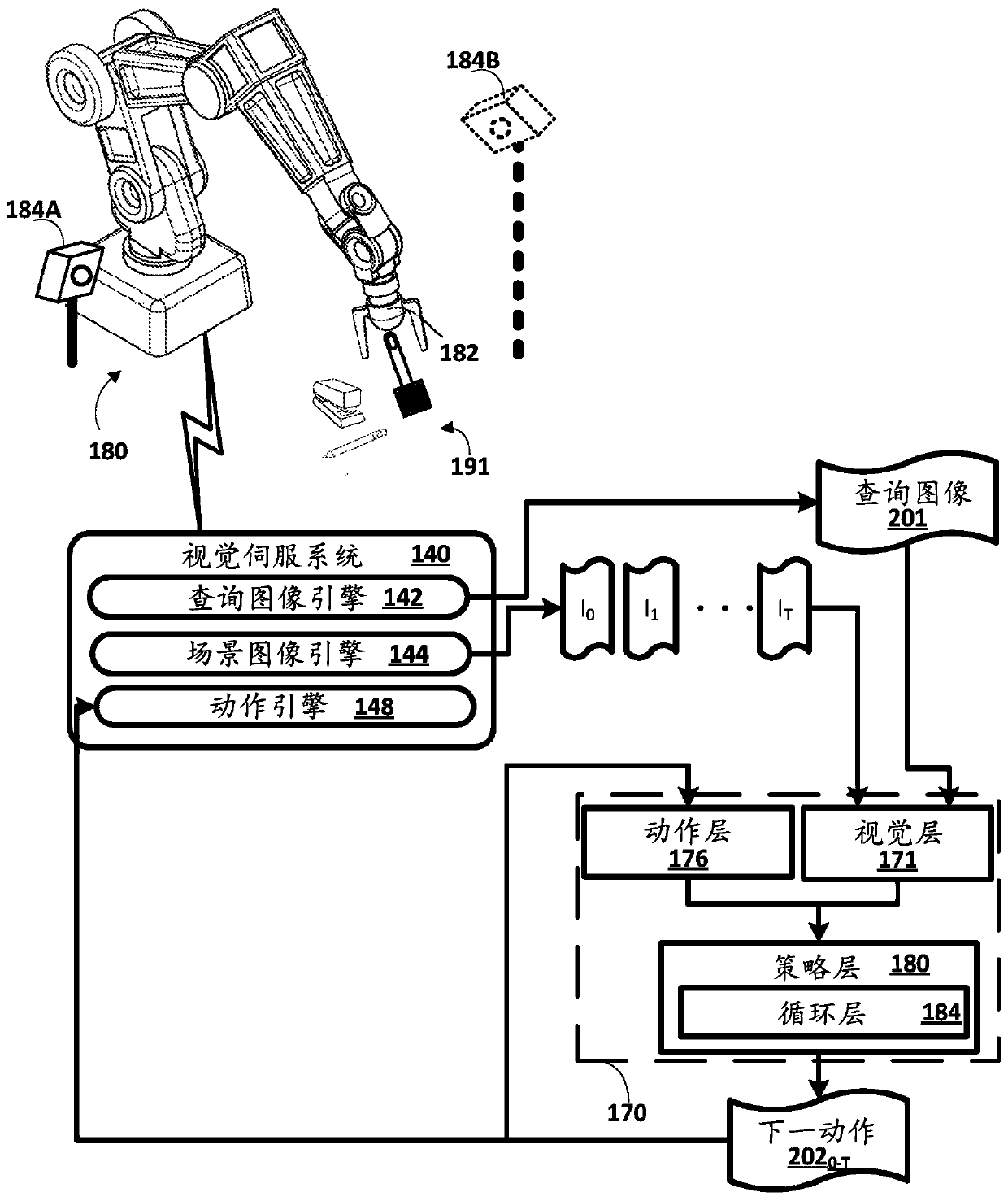

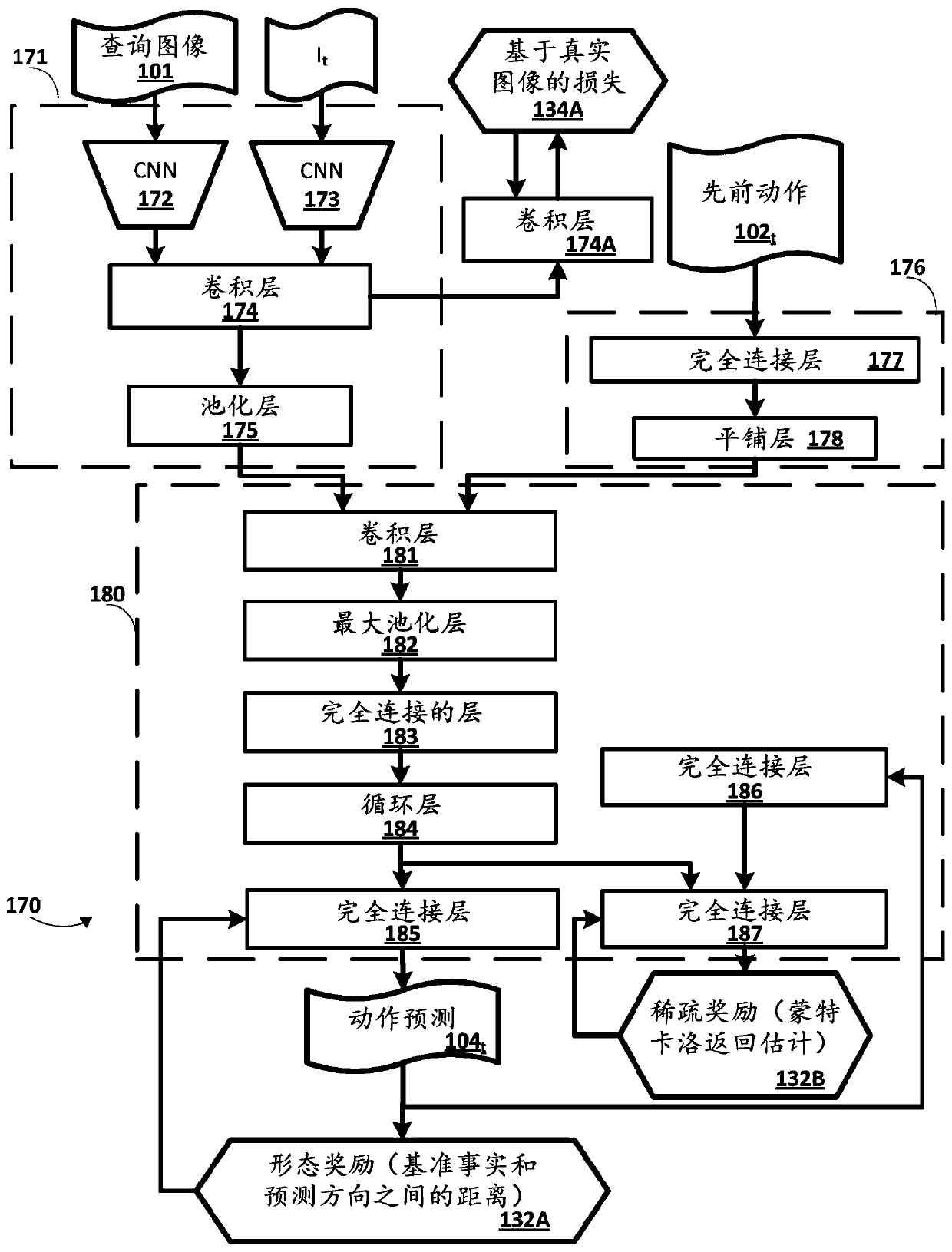

[0034] The implementations described herein train and utilize a recurrent neural network model that can be used at each time step to: process a query image of a target object, an image of the current scene that includes the target object and the robot's end effector, and a previous motion prediction; and generate a predicted motion based on the processing, the predicted motion indicating how to control the end effector to move it to the target object. The recurrent neural network model can be viewpoint-invariant in that it can be used across a variety of robots whose vision components have various viewpoints, and/or can be used on a single robot even if the viewpoint of that robot's vision components varies dramatically. Furthermore, the recurrent neural network model can be trained on a large amount of simulated data, generated by a simulator that performs simulated episodes in view of the recurrent neural network model. One or more portions of the recurrent neural n...
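The per-time-step loop described in this paragraph can be illustrated with a minimal sketch. It is not the patented architecture: `ToyRecurrentServoPolicy`, `run_episode`, the Elman-style recurrent cell, the random weights, and the assumption that the query and scene images have already been encoded into fixed-length feature vectors are all hypothetical choices made only to show how the previous motion prediction and a hidden state are fed back at each step.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Small random weight matrix (illustrative only, untrained)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Plain matrix-vector product on Python lists."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ToyRecurrentServoPolicy:
    """Hypothetical stand-in for the recurrent model (simple Elman-style cell)."""

    def __init__(self, feat_dim, action_dim=3, hidden_dim=8, seed=0):
        rng = random.Random(seed)
        # Input = query features + scene features + previous predicted motion.
        in_dim = 2 * feat_dim + action_dim
        self.w_in = make_matrix(hidden_dim, in_dim, rng)
        self.w_rec = make_matrix(hidden_dim, hidden_dim, rng)
        self.w_out = make_matrix(action_dim, hidden_dim, rng)
        self.hidden_dim = hidden_dim
        self.action_dim = action_dim

    def initial_state(self):
        return [0.0] * self.hidden_dim

    def step(self, query_feat, scene_feat, prev_action, hidden):
        """One time step: returns (predicted motion, new hidden state)."""
        x = list(query_feat) + list(scene_feat) + list(prev_action)
        pre = [a + b for a, b in zip(matvec(self.w_in, x),
                                     matvec(self.w_rec, hidden))]
        new_hidden = [math.tanh(p) for p in pre]
        action = matvec(self.w_out, new_hidden)
        return action, new_hidden


def run_episode(policy, query_feat, scene_feats):
    """Feed each scene observation plus the previous prediction back in."""
    hidden = policy.initial_state()
    prev_action = [0.0] * policy.action_dim  # assumed: no motion before step 0
    actions = []
    for scene_feat in scene_feats:
        prev_action, hidden = policy.step(query_feat, scene_feat,
                                          prev_action, hidden)
        actions.append(prev_action)
    return actions
```

In this sketch the query features stay fixed for the whole episode while the scene features change at every step, mirroring the inputs listed above. A real system would replace the random weights with trained parameters and the feature vectors with the output of an image encoder.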
