Garment image retrieval method fusing color feature and residual network depth feature

A technology based on color features and deep network features, applied in still image data retrieval, digital data information retrieval, still image data clustering/classification, and related fields. It addresses issues such as preserving spatial structure and the increased computation time and difficulty, and it achieves retrieved results with clearly similar style and color as well as improved retrieval efficiency.

Examples

Embodiment 1

[0065] 1. Multi-feature fusion clothing image retrieval framework based on a deep network

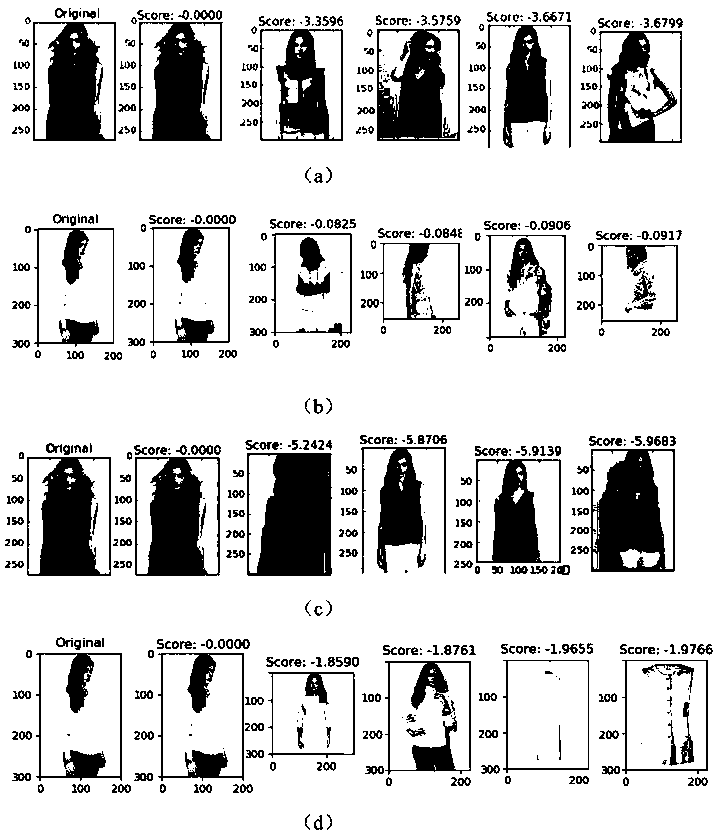

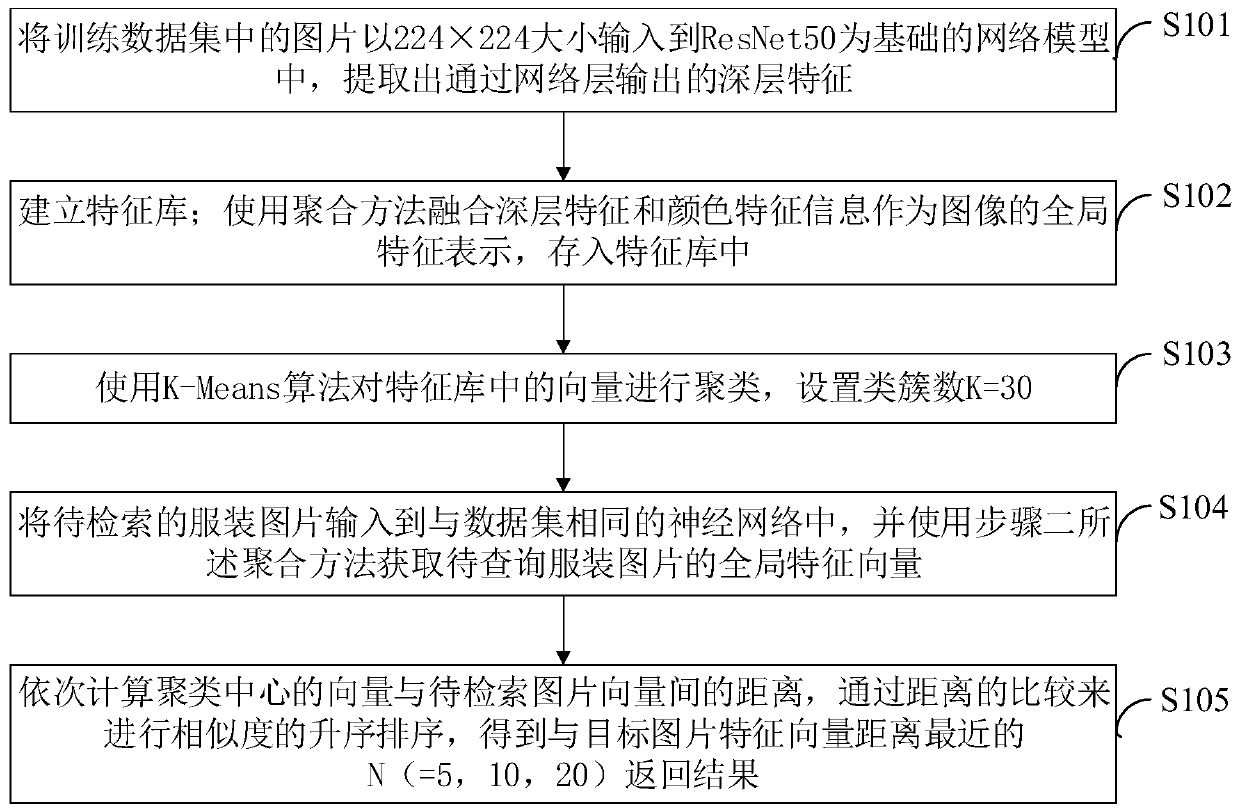

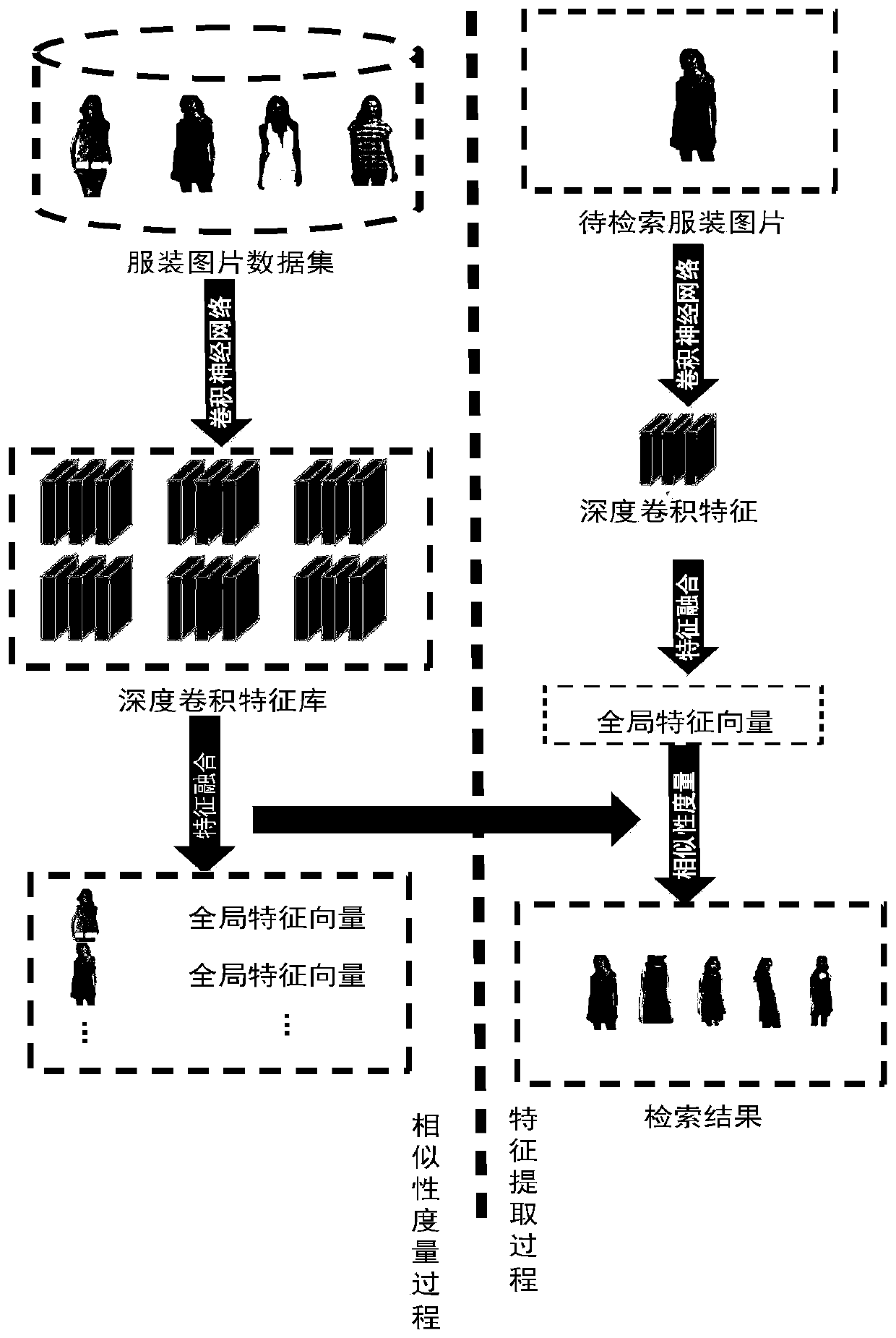

[0066] Multi-feature fusion clothing image retrieval based on a deep network consists of two processes: feature extraction and similarity measurement. As shown in Figure 2, in the feature extraction process the images in the data set are first fed into the pre-trained network model, the deep features output by the network layer are extracted, and an aggregation method fuses them with other feature information into a global feature representation of each image, which is stored in the feature library. In the similarity measurement process, the clothing image to be retrieved is fed into the same neural network used for the data set, and the same aggregation method produces the global feature vector of the query image. The distances between the query feature vector and the vectors in the feature library are computed to rank similarity, and the retrieval results are returned in ascending order o...
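The two processes in [0066] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation. The deep features are assumed to be precomputed (for example, the pooled output of a pre-trained residual network) and passed in as plain arrays. The color feature is a hypothetical quantized RGB histogram, because this excerpt does not specify the color descriptor. The aggregation is assumed to be a weighted concatenation of L2-normalized vectors controlled by a hypothetical `color_weight` parameter, and ranking uses Euclidean distance in ascending order. All function names (`color_histogram`, `fuse_features`, `build_feature_library`, `retrieve`) are illustrative.

```python
import numpy as np


def color_histogram(image: np.ndarray, bins_per_channel: int = 4) -> np.ndarray:
    """Hypothetical color feature: joint quantized RGB histogram of an H x W x 3 uint8 image."""
    # Map each 0..255 channel value to a bin index in [0, bins_per_channel)
    q = (image.astype(np.int64) * bins_per_channel) // 256
    # Combine the three per-channel bin indices into a single joint color index
    idx = (q[..., 0] * bins_per_channel + q[..., 1]) * bins_per_channel + q[..., 2]
    hist = np.bincount(idx.ravel(), minlength=bins_per_channel ** 3).astype(np.float64)
    return hist / hist.sum()  # normalize so the histogram sums to 1


def l2_normalize(v: np.ndarray) -> np.ndarray:
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v


def fuse_features(deep_feature, image: np.ndarray, color_weight: float = 0.5) -> np.ndarray:
    """Assumed aggregation: weighted concatenation of L2-normalized deep and color features."""
    deep = l2_normalize(np.asarray(deep_feature, dtype=np.float64))
    color = l2_normalize(color_histogram(image))
    return np.concatenate([(1.0 - color_weight) * deep, color_weight * color])


def build_feature_library(deep_features, images, color_weight: float = 0.5) -> np.ndarray:
    """Feature extraction process: one global vector per data-set image, stacked row-wise."""
    return np.stack([fuse_features(d, img, color_weight) for d, img in zip(deep_features, images)])


def retrieve(query_vector: np.ndarray, library: np.ndarray, top_k: int = 5):
    """Similarity measurement process: rank library vectors by ascending Euclidean distance."""
    distances = np.linalg.norm(library - query_vector, axis=1)
    order = np.argsort(distances, kind="stable")[:top_k]
    return order, distances[order]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    images = [rng.integers(0, 256, size=(32, 32, 3)).astype(np.uint8) for _ in range(10)]
    deep = rng.normal(size=(10, 2048))  # stand-in for pre-trained ResNet pooled features
    library = build_feature_library(deep, images)
    query = fuse_features(deep[3], images[3])  # query with an image already in the library
    idx, dist = retrieve(query, library, top_k=3)
    print(idx[0])  # 3
```

Because the query goes through the same fusion function as the library images, an image that is already stored is returned first at distance 0. The weighted concatenation is only one possible aggregation; the `color_weight` value trades off style similarity (deep features) against color similarity.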

Embodiment 2

[0089] 1. Data and parameter preparation

[0090] To verify the effectiveness of the method proposed by the present invention, this experiment uses the Category and Attribute Prediction Benchmark as the data set, which contains more than 200,000 clothing images in 50 categories. From this data set, the experiment extracts 60,000 training images, 20,000 test images, and 20,000 validation images covering 30 image categories. The experiment is implemented in Python.
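The data preparation in [0090] can be sketched as drawing disjoint training, test, and validation splits from the images of the selected categories. This is a hypothetical illustration: the source does not state how the 30 categories were chosen, whether the splits are class-balanced, or how sampling was done, so the uniform random sampling, the `(path, category)` sample format, and the `split_subset` function below are assumptions.

```python
import random
from typing import Hashable, List, Sequence, Tuple

Sample = Tuple[str, Hashable]  # hypothetical (image_path, category) pair


def split_subset(
    samples: Sequence[Sample],
    categories: Sequence[Hashable],
    sizes: Tuple[int, int, int] = (60_000, 20_000, 20_000),
    seed: int = 0,
) -> Tuple[List[Sample], List[Sample], List[Sample]]:
    """Draw disjoint train/test/validation splits from samples in the chosen categories."""
    keep = set(categories)
    pool = [s for s in samples if s[1] in keep]  # restrict to the selected categories
    total = sum(sizes)
    if len(pool) < total:
        raise ValueError(f"need {total} samples, only {len(pool)} available")
    rng = random.Random(seed)
    chosen = rng.sample(pool, total)  # sampling without replacement keeps splits disjoint
    n_train, n_test, _ = sizes
    train = chosen[:n_train]
    test = chosen[n_train:n_train + n_test]
    val = chosen[n_train + n_test:]
    return train, test, val


if __name__ == "__main__":
    toy = [(f"img_{i}.jpg", i % 5) for i in range(100)]
    tr, te, va = split_subset(toy, categories=[0, 1, 2], sizes=(6, 2, 2), seed=1)
    print(len(tr), len(te), len(va))  # 6 2 2
```

With the real benchmark, `categories` would hold the 30 selected category labels and `sizes` keeps its default of 60,000 / 20,000 / 20,000.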
