Deep learning-oriented data processing method for GPU parallel computing

A deep learning and parallel computing technique, applied to neural learning methods, electrical digital data processing, and computing. It addresses problems such as slow training speed, and it improves running speed, image batch processing, and training speed.


Embodiment Construction

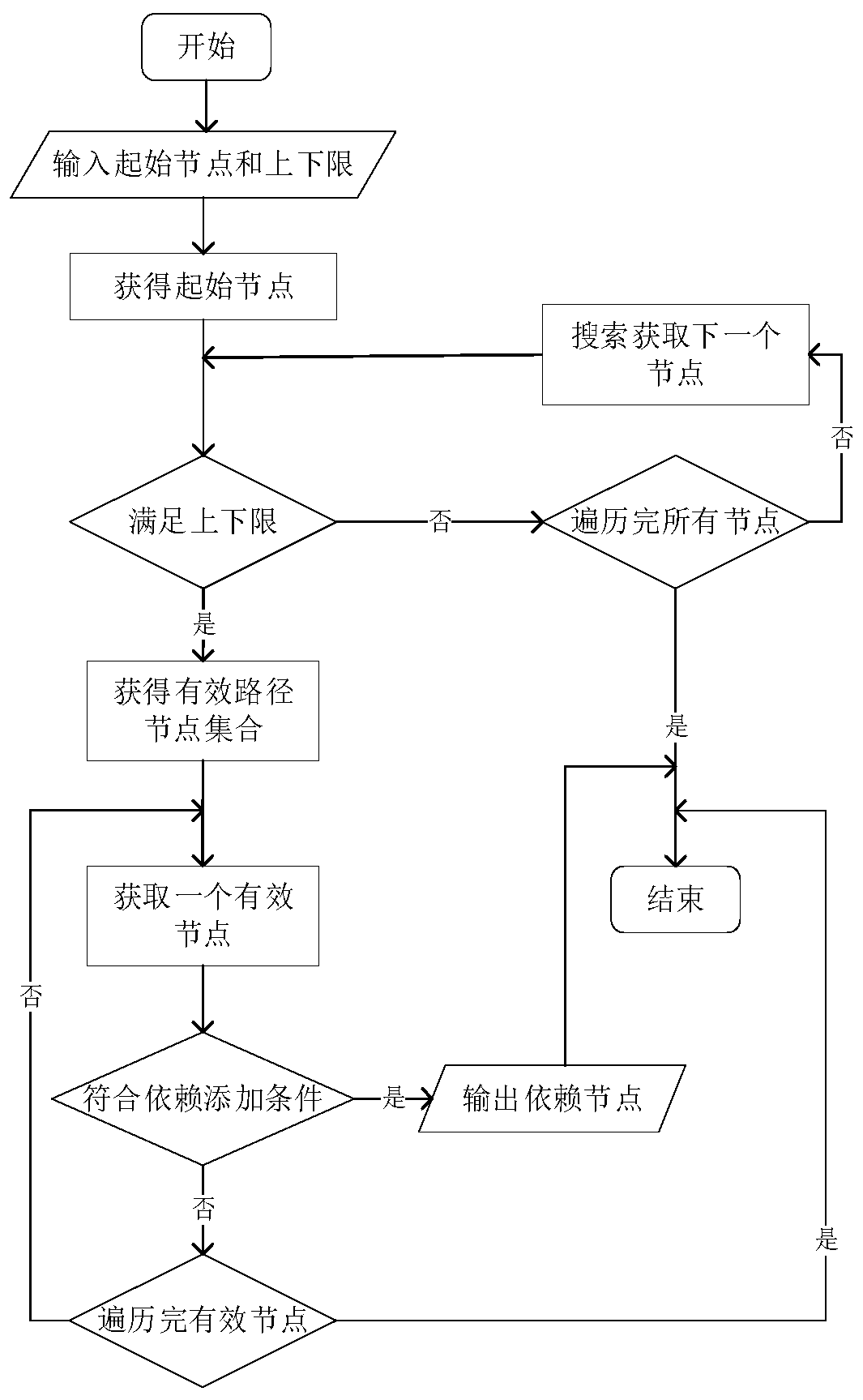

[0056] The present invention mainly comprises the following contents:

[0057] 1. Model the calculation graph

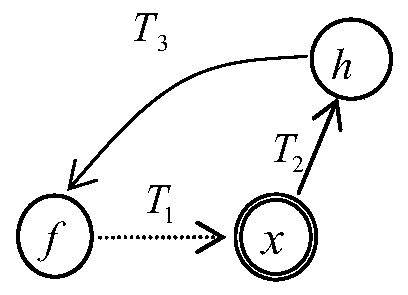

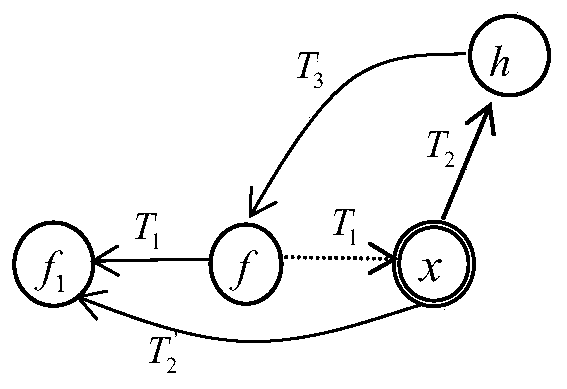

[0058] (1) Calculation graph

[0059] Construct a directed graph G=(V,E,λ,τ) for the input data, where V is the set of vertices in G, E⊆V×V is the set of edges in G, λ: V→(O,Bool) is a function that maps each vertex to an operation o∈O and a Boolean indicating whether the operation is parameterized, and τ: E→(D,ACT) is a function that maps each edge to a data type D and an action in ACT, where ACT = {"read", "update", "control"}.
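The graph definition in [0059] can be pictured as a small data structure. The following is a minimal sketch, not the patent's implementation: the class `ComputationGraph`, its methods, the helper `build_example`, and the vertex and operation names ("x", "w", "matmul", "placeholder", "variable", "float32") are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

# ACT from the definition: the only actions an edge may carry.
ACTIONS = {"read", "update", "control"}

Edge = Tuple[str, str]


@dataclass
class ComputationGraph:
    """Hypothetical container for G = (V, E, lambda, tau)."""
    vertices: Set[str] = field(default_factory=set)           # V
    edges: Set[Edge] = field(default_factory=set)             # E, a subset of V x V
    # lambda: vertex -> (operation, is_parameterized)
    vertex_label: Dict[str, Tuple[str, bool]] = field(default_factory=dict)
    # tau: edge -> (data type, action)
    edge_label: Dict[Edge, Tuple[str, str]] = field(default_factory=dict)

    def add_op(self, v: str, op: str, parameterized: bool = False) -> None:
        """Add vertex v and record lambda(v)."""
        self.vertices.add(v)
        self.vertex_label[v] = (op, parameterized)

    def add_edge(self, u: str, v: str, dtype: str, action: str) -> None:
        """Add edge (u, v) and record tau((u, v))."""
        if u not in self.vertices or v not in self.vertices:
            raise KeyError("both endpoints must already be vertices")
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        self.edges.add((u, v))
        self.edge_label[(u, v)] = (dtype, action)


def build_example() -> ComputationGraph:
    """Build a tiny illustrative graph: x and w both feed a matmul."""
    g = ComputationGraph()
    g.add_op("x", "placeholder")
    g.add_op("w", "variable", parameterized=True)
    g.add_op("matmul", "matmul")
    g.add_edge("x", "matmul", "float32", "read")
    g.add_edge("w", "matmul", "float32", "read")
    return g
```

Storing λ and τ as dictionaries keyed by vertex and edge keeps the sketch close to the mathematical definition; a real framework would likely attach these labels to node and tensor objects instead.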

[0060] (2) Topological sorting

[0061] Given a computational graph G=(V,E,λ,τ), let N be the number of vertices in the graph. A topological sort is a mapping from vertices to integers, γ: V→{0,1,...,N-1}, that satisfies:

[0062] ·

[0063] ·

[0064] Topological sorting represents the execution order of operations in the graph. Given two operations u and v, if γ(u) < γ(v), execute u before v. If γ(...
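One common way to compute such a mapping γ is Kahn's algorithm. The sketch below is an illustration rather than the method claimed in the patent, and it assumes the usual textbook conditions for a topological order: every vertex receives a distinct position in {0,...,N-1}, and for every edge (u, v) the position of u is smaller than that of v. The function name `topological_order` and the example vertex names are hypothetical.

```python
from collections import deque


def topological_order(vertices, edges):
    """Return gamma as a dict: vertex -> position in {0, ..., N-1}.

    Assumed conditions (standard definition): positions are distinct and
    gamma[u] < gamma[v] for every edge (u, v). Raises ValueError on a cycle.
    """
    successors = {v: [] for v in vertices}
    indegree = {v: 0 for v in vertices}
    for u, v in edges:
        successors[u].append(v)
        indegree[v] += 1

    # Vertices with no unprocessed predecessors are ready to execute.
    ready = deque(v for v in vertices if indegree[v] == 0)
    gamma = {}
    while ready:
        u = ready.popleft()
        gamma[u] = len(gamma)  # next free position
        for v in successors[u]:
            indegree[v] -= 1
            if indegree[v] == 0:
                ready.append(v)

    if len(gamma) != len(indegree):
        raise ValueError("graph contains a cycle; no topological order exists")
    return gamma


# Hypothetical four-operation graph: x and w feed matmul, which feeds relu.
example_vertices = ["x", "w", "matmul", "relu"]
example_edges = [("x", "matmul"), ("w", "matmul"), ("matmul", "relu")]
example_gamma = topological_order(example_vertices, example_edges)
```

Executing operations in increasing order of `example_gamma` runs every producer before its consumers, which matches the reading of γ(u) < γ(v) as "execute u before v".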
