Federated learning method based on trusted execution environment

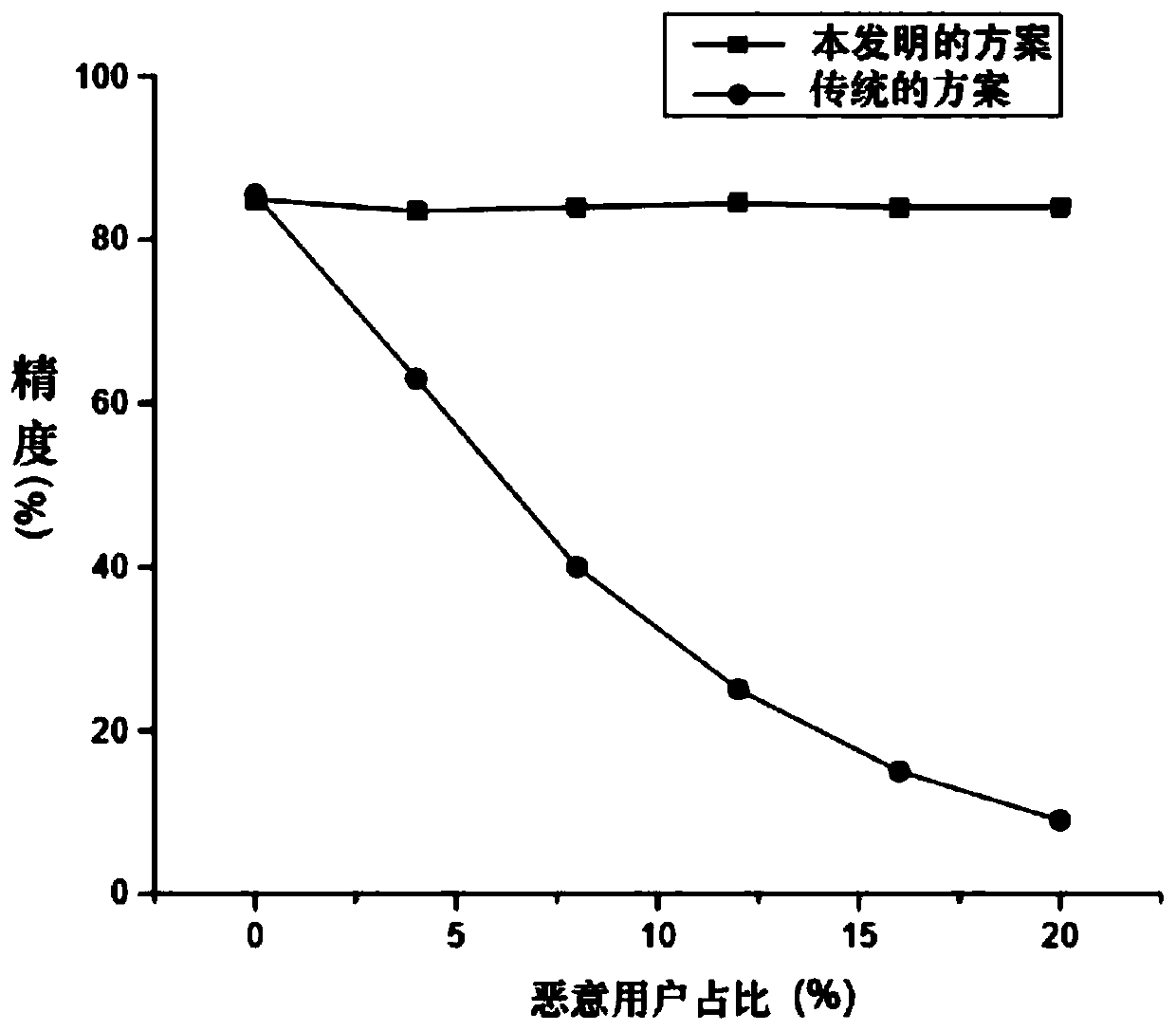

A federated learning method based on a trusted execution environment, applied in the field of data security. It addresses problems such as the inability to predict confidential data from patterns, the unverified authenticity of training results, and misreported data volume, thereby ensuring training integrity and privacy security across a wide range of application scenarios.


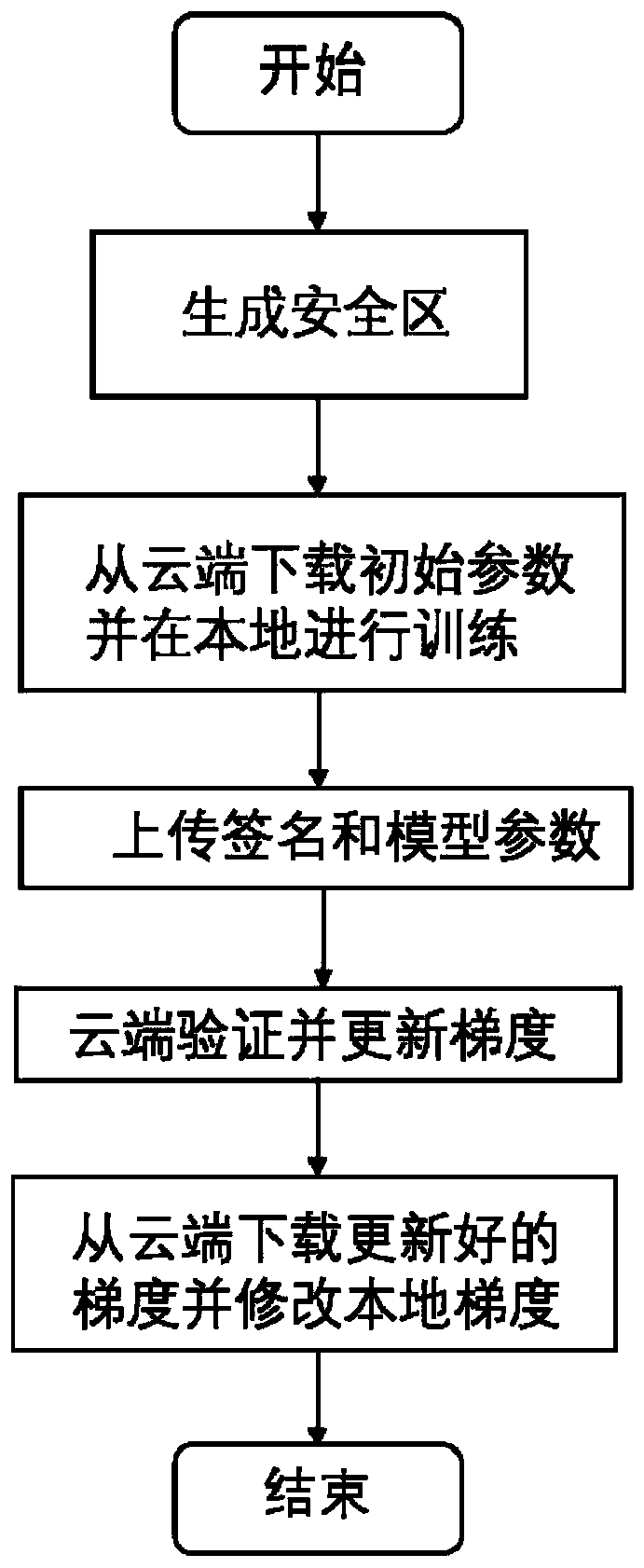

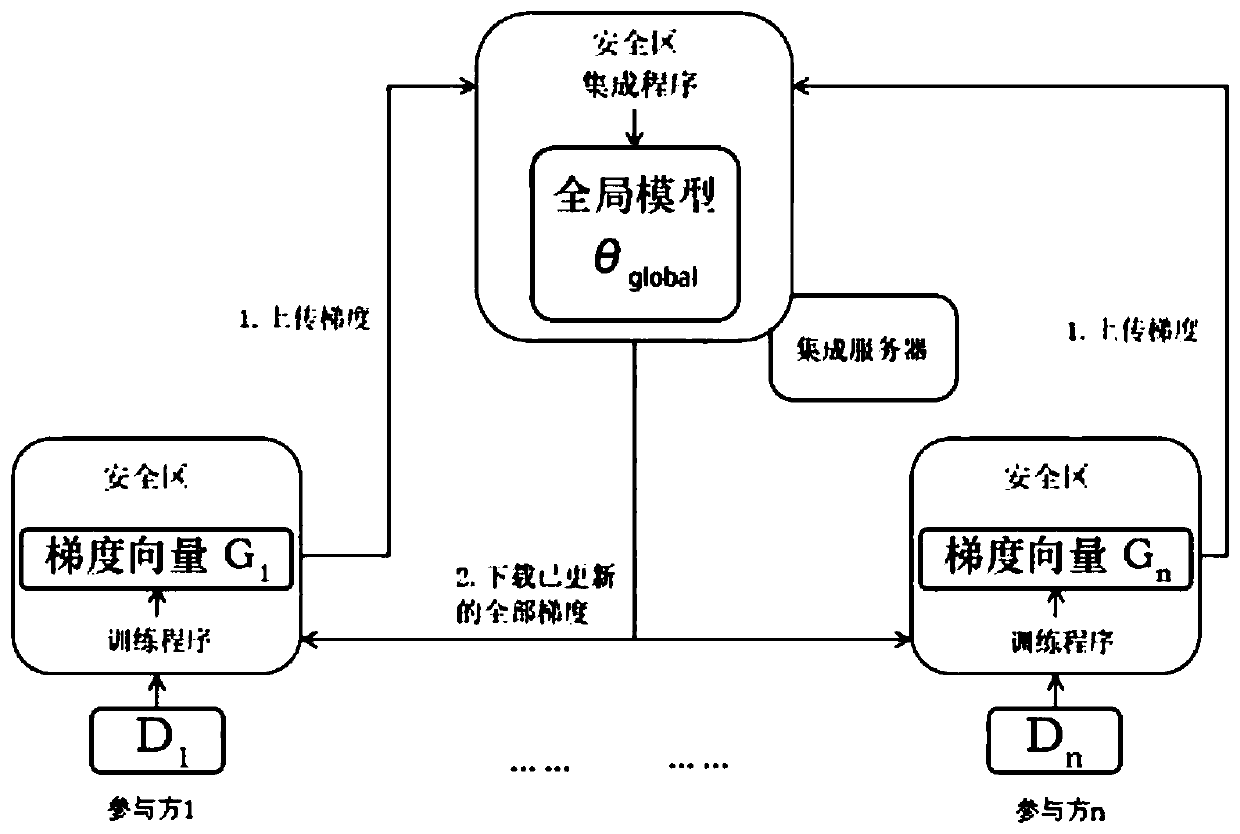


Embodiment

[0022] Training deep learning models on large-scale data is a common approach. The present invention addresses two key problems: how to use data without leaking user privacy, and how to prevent users from falsifying data. For example, if a user wants to obtain a model for handwritten digit recognition, the user can train in a local secure area and then send the generated model to the cloud; other users perform the same operation, and the end user can then download the model from the cloud. Some basic concepts related to the present invention are:

[0023] (1) Deep learning: Deep learning focuses on extracting features from high-dimensional data and using them to build a model that maps inputs to outputs. The multilayer perceptron is the most common neural network model. In a multilayer perceptron, the input of each hidden-layer node is the output of the previous layer (plus a bias), each hidden layer node ...
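The layer-by-layer flow described in (1) can be illustrated with a minimal sketch. The text is truncated before it states what each hidden-layer node computes, so this example assumes the conventional choice: a weighted sum of the previous layer's outputs plus a bias, passed through a sigmoid activation. The function names `dense_layer` and `mlp_forward` and the weight layout are hypothetical, chosen only for illustration.

```python
import math


def sigmoid(x):
    """Logistic activation (an assumed choice; the source does not name one)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation=sigmoid):
    """Compute the outputs of one fully connected layer.

    weights[j][i] is the weight from input i to node j;
    biases[j] is the bias added to node j.
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        # Weighted sum of the previous layer's outputs, plus the bias.
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def mlp_forward(inputs, layers):
    """Feed inputs through each (weights, biases) pair in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node.
example_layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
prediction = mlp_forward([1.0, 2.0], example_layers)
```

Each layer consumes only the previous layer's outputs, which is the dependency the paragraph describes; the weights here are arbitrary placeholders rather than trained values.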
