Node storage method and system based on neural network, server and storage medium

A neural network-based node storage technology, applied in the field of node storage. It can solve problems such as the rearrangement of the node storage order, and achieves the effects of improving storage space utilization and accelerating the graph inference process.


Examples

Embodiment 1

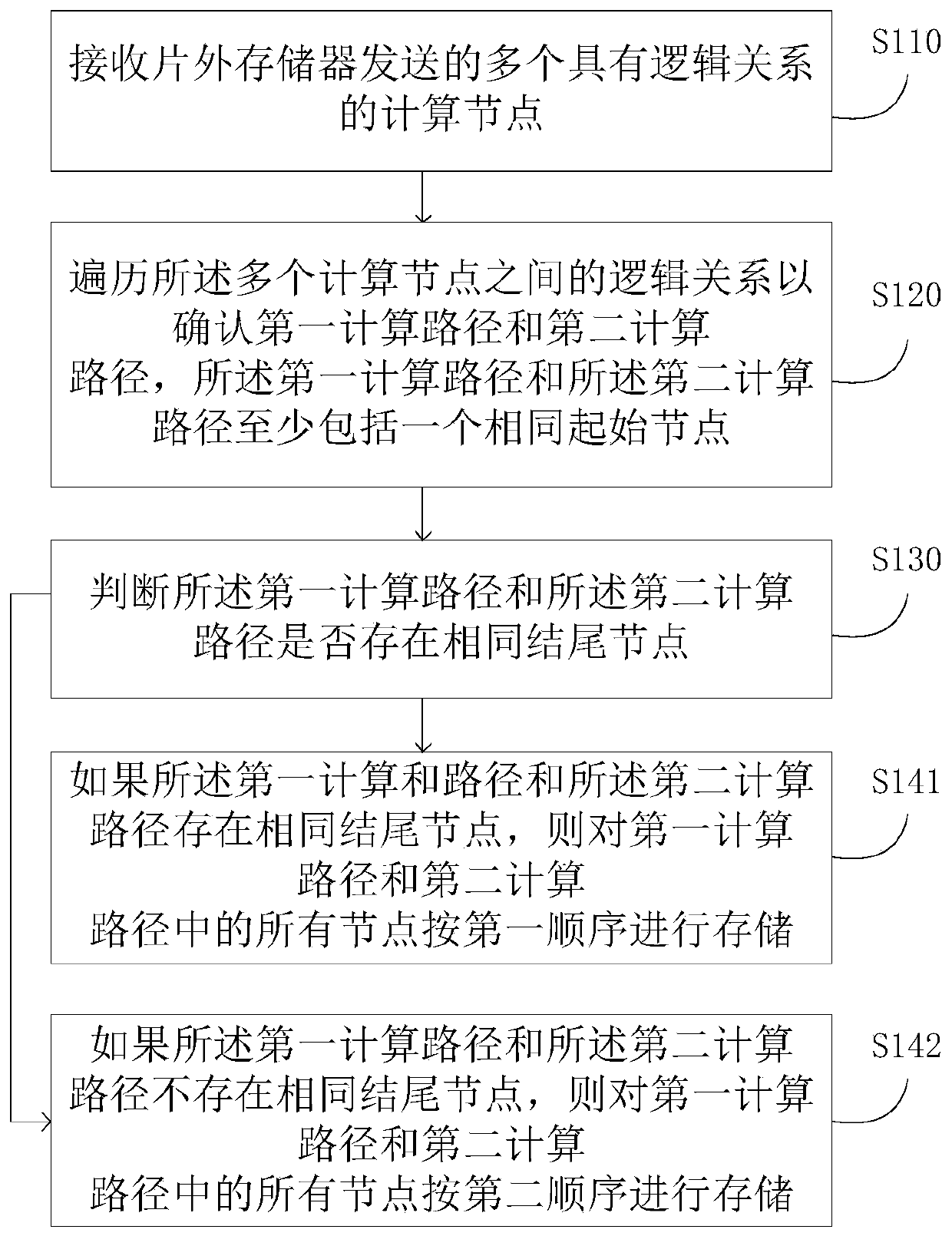

[0049] Figure 1 is a flow chart of a neural network-based node storage method provided by Embodiment 1 of the present invention. This embodiment is applicable to the situation where the ordering of nodes is optimized before neural network calculations are performed on the nodes. The method can be executed by a processor. As shown in Figure 1, a neural network-based node storage method includes:

[0050] Step S110, receiving a plurality of computing nodes with logical relationships sent by the off-chip memory;

[0051] Specifically, a memory is a device used to store information in modern information technology. The concept is broad and has many levels. In a digital system, anything that can store binary data can be a memory; in an integrated circuit, a circuit with a storage function but no physical form is also called a memory, such as RAM or a FIFO; in a system, storage devices in physical form are also called memories, such as m...

Embodiment 2

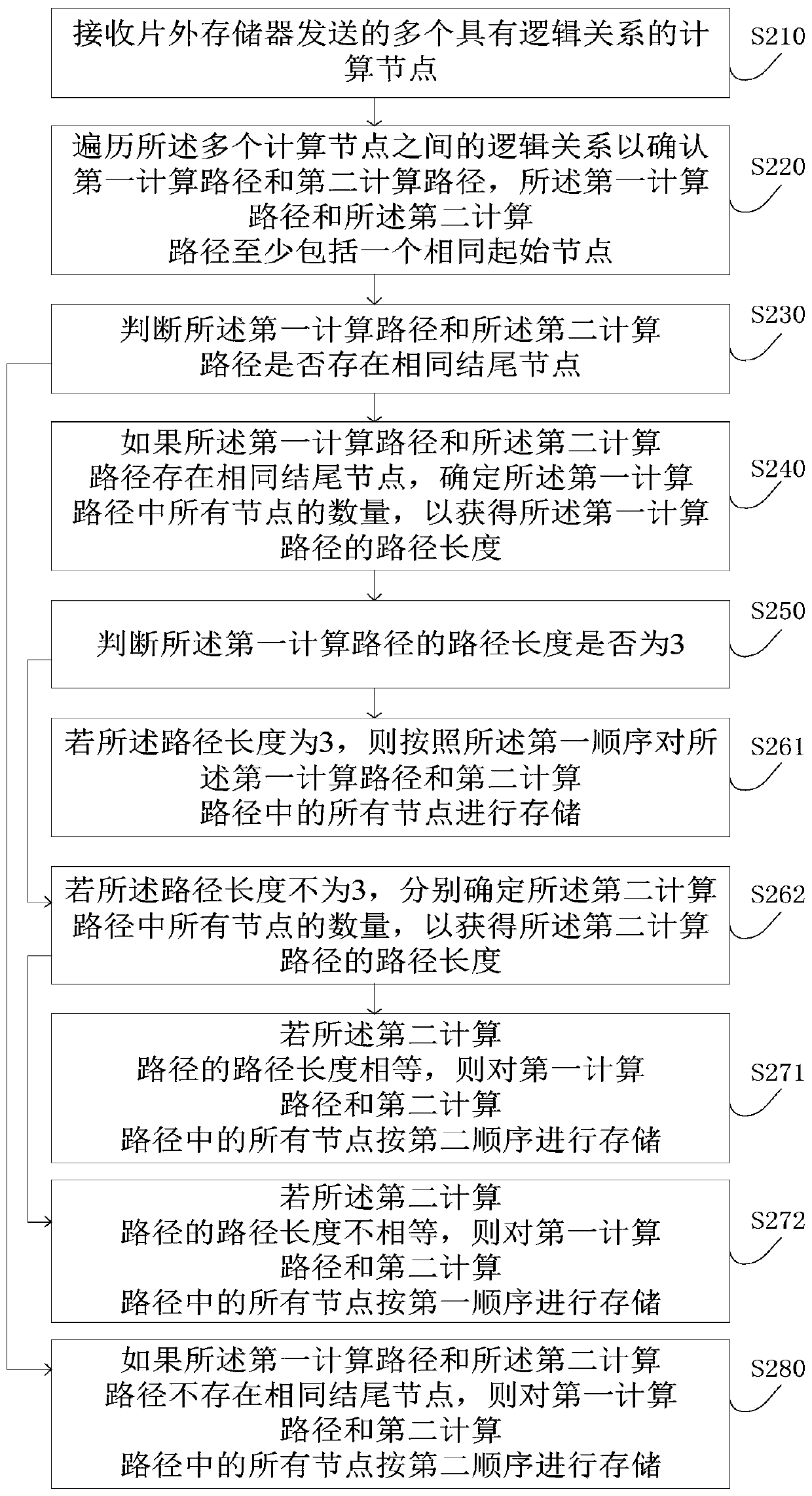

[0064] Embodiment 2 of the present invention is a further optimization based on Embodiment 1. Figure 2 is a flowchart of a neural network-based node storage method provided by Embodiment 2 of the present invention. As shown in Figure 2, the neural network-based node storage method of this embodiment includes:

[0065] Step S210, receiving a plurality of computing nodes with logical relationships sent by the off-chip memory;

[0066] Specifically, a memory is a device used to store information in modern information technology. The concept is broad and has many levels. In a digital system, anything that can store binary data can be a memory; in an integrated circuit, a circuit with a storage function but no physical form is also called a memory, such as RAM or a FIFO; in a system, storage devices in physical form are also called memories, such as memory sticks and TF cards. All information in the computer, including input raw data, c...

Embodiment 3

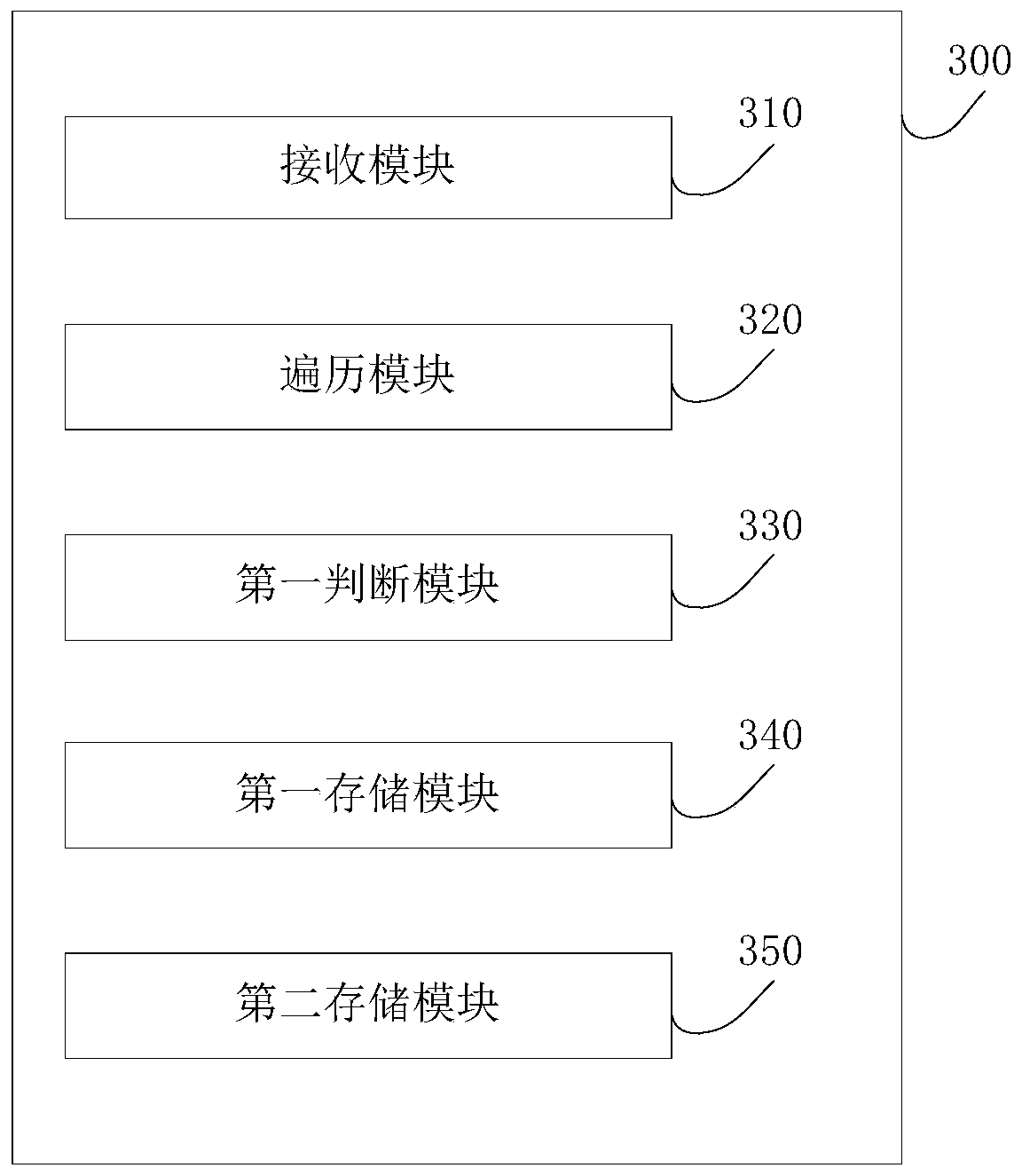

[0088] Figure 3 is a schematic structural diagram of a neural network-based node storage system provided by Embodiment 3 of the present invention. As shown in Figure 3, a neural network-based node storage system 300 includes:

[0089] The receiving module 310 is configured to receive a plurality of computing nodes with logical relationships sent by the off-chip memory;

[0090] A traversing module 320, configured to traverse the logical relationships among the plurality of computing nodes to determine a first calculation path and a second calculation path, where the first calculation path and the second calculation path include at least one same starting node;

[0091] A first judging module 330, configured to judge whether the first calculation path and the second calculation path have the same end node;

[0092] The first storage module 340 is configured to store all nodes in the first calculation path and the second calculation path in a first order if the same end node exists i...
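The traversing, judging and storing modules above can be illustrated with a minimal Python sketch. It is not the patented implementation. The graph representation (a dictionary mapping each node to its successors), the function names, and above all the concrete "first order" used here (shared start, first branch, second branch, then the shared tail) are assumptions made for illustration, since the excerpt does not define that order.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical representation of the "logical relationships":
# each computing node maps to the list of nodes that consume its output.
Graph = Dict[str, List[str]]


def follow_branch(graph: Graph, start: str, first_hop: str) -> List[str]:
    """Walk from `start` through `first_hop`, always taking the first
    successor, until a node with no successors is reached."""
    path = [start, first_hop]
    seen = {start, first_hop}
    while graph.get(path[-1]):
        nxt = graph[path[-1]][0]
        if nxt in seen:  # guard against cycles in malformed input
            break
        path.append(nxt)
        seen.add(nxt)
    return path


def find_two_paths(graph: Graph) -> Optional[Tuple[List[str], List[str]]]:
    """Return two calculation paths that share a starting node, if any."""
    for node, successors in graph.items():
        if len(successors) >= 2:
            return (follow_branch(graph, node, successors[0]),
                    follow_branch(graph, node, successors[1]))
    return None


def have_same_end(p1: List[str], p2: List[str]) -> bool:
    """Judge whether both paths terminate at the same end node."""
    return p1[-1] == p2[-1]


def storage_order(p1: List[str], p2: List[str]) -> List[str]:
    """Assumed 'first order': shared start, first branch, second branch,
    then the tail that both paths have in common."""
    k = 0
    limit = min(len(p1), len(p2)) - 1  # never absorb the shared start
    while k < limit and p1[-1 - k] == p2[-1 - k]:
        k += 1
    suffix = p1[len(p1) - k:]
    head1 = p1[:len(p1) - k]
    head2 = p2[1:len(p2) - k]  # skip the shared start, already in head1
    return head1 + head2 + suffix


# Diamond-shaped example: A feeds B and C, both feed D, D feeds E.
graph: Graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
paths = find_two_paths(graph)
if paths is not None and have_same_end(*paths):
    order = storage_order(*paths)
```

In this sketch, nodes that are computed close together end up adjacent in the storage order, which is one plausible way to read the stated goal of improving storage space utilization; the actual ordering rule would come from the full description and claims.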
