Micro-expression detection method based on a deep network with adaptive transition-frame removal

The technology concerns a deep network and a detection method, applied in neural learning methods, biological neural network models, instruments, etc. It addresses the narrow scope of application and low accuracy of existing micro-expression detection, and achieves wide applicability and high detection accuracy.


Embodiment Construction

[0042] The present invention will be described in detail below in conjunction with the accompanying drawings. It should be noted that the described embodiments are only intended to facilitate the understanding of the present invention, rather than limiting it in any way.

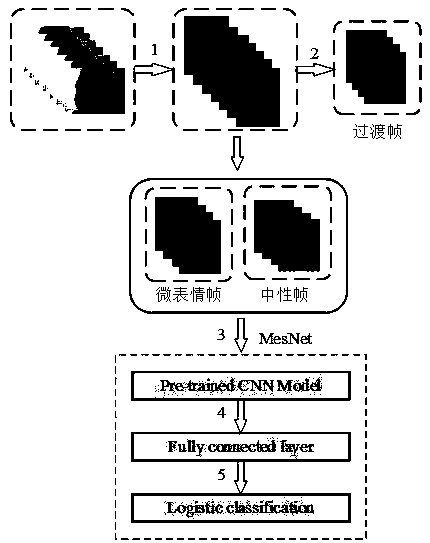

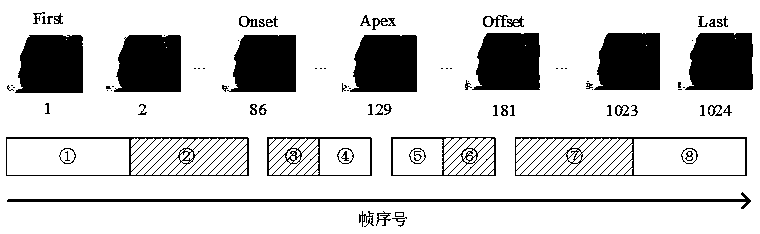

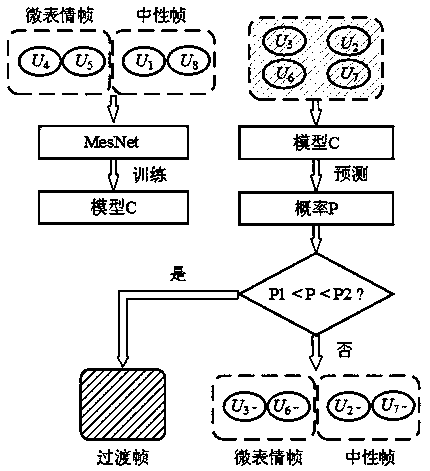

[0043] Figure 1 takes the video numbered 20_EP15_03f in the CASME II database as an example to describe the MesNet training process. As shown in formula (1), Input denotes the micro-expression frame and neutral frame samples fed into the MesNet network, and f(Input) denotes the shape and texture features extracted from the images by the pre-trained model:

[0044] Features=f(Input). (1)

[0045] To further extract micro-expression features, as shown in formula (2), the function f_1(Features, N) takes Features as input and appends a fully connected layer containing N neurons after the pre-trained model:

[0046] FC = f_1(Features, N). (2)
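Formulas (1) and (2) can be illustrated with a minimal pure-Python sketch. It is not the MesNet implementation: `f` here is a hypothetical stand-in that merely flattens a toy image (a real pre-trained model would return learned shape and texture features), `make_fc_params` and its random initialisation are assumptions, and no activation function is applied because the text above does not specify one.

```python
import random


def f(image):
    """Hypothetical stand-in for the pre-trained model in formula (1).

    Flattens a 2-D grayscale image (a list of rows) into a feature vector.
    """
    return [float(pixel) for row in image for pixel in row]


def make_fc_params(in_dim, n, seed=0):
    """Assumed initialisation: small random weights (n x in_dim), zero biases."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(n)]
    biases = [0.0] * n
    return weights, biases


def f1(features, n, params=None):
    """Formula (2): a fully connected layer with n neurons applied to features."""
    if params is None:
        params = make_fc_params(len(features), n)
    weights, biases = params
    # Each neuron computes a weighted sum of all input features plus a bias.
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]


frame = [[0, 1], [2, 3]]   # toy 2x2 "frame", for illustration only
features = f(frame)        # formula (1): Features = f(Input)
fc = f1(features, n=3)     # formula (2): FC = f_1(Features, N) with N = 3
```

The output `fc` has one value per neuron, so its length equals N regardless of the size of the feature vector.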

[0047] Then take FC as input...
