Palm image recognition method, system and device

A palm image recognition technology, applied in the field of image recognition, that addresses the problem of low accuracy.


Examples

Embodiment 1

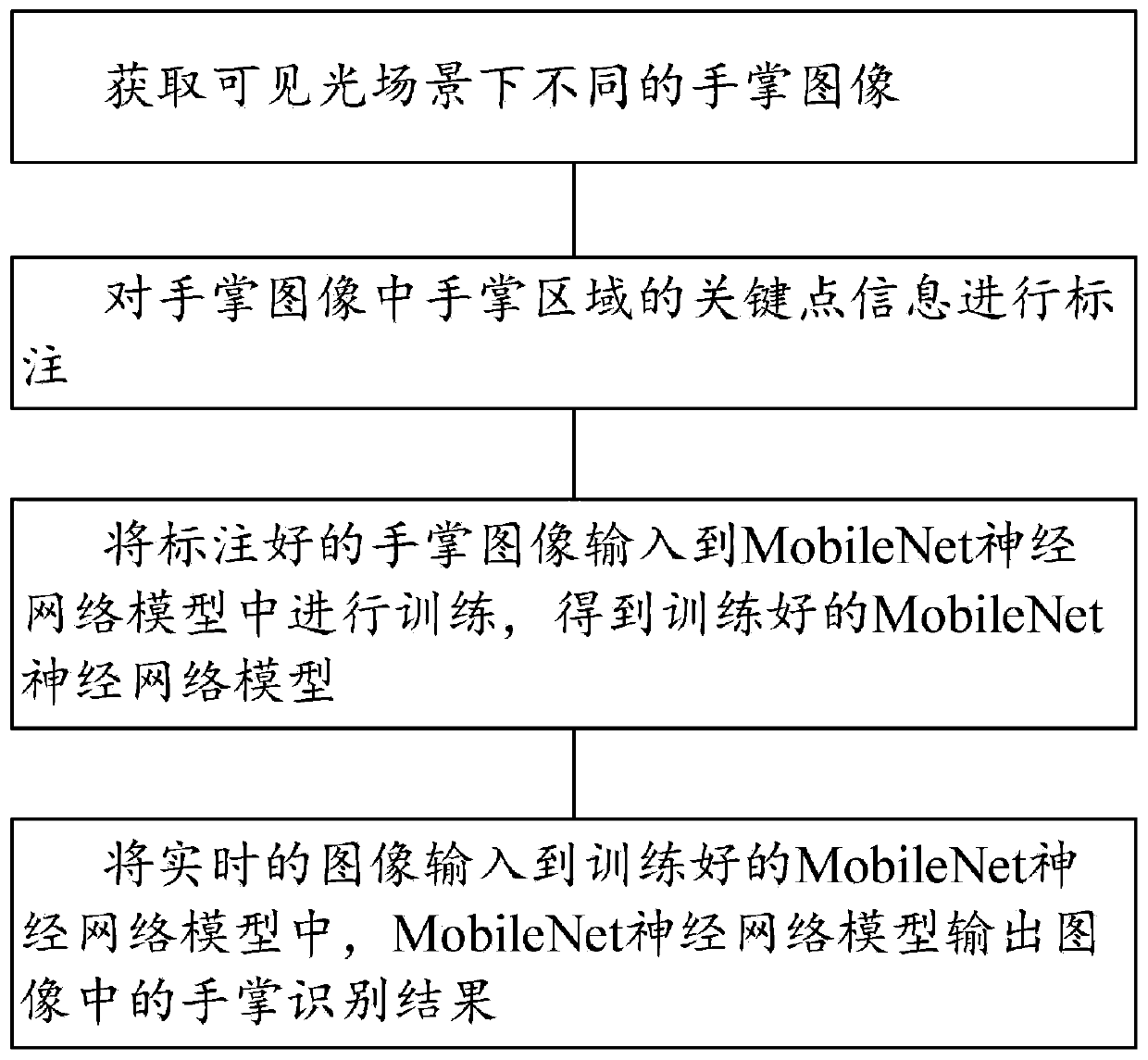

[0050] See Figure 1. Figure 1 is a flowchart of the palm image recognition method provided by the embodiments of the present invention.

[0051] The palm image recognition method provided by the present invention is applied to a pre-established MobileNet neural network model that uses the MaxMin function as its activation function. The method includes the following steps:
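The text does not define the MaxMin function. As a minimal sketch, assuming one common formulation in which channels are grouped in pairs and each pair is replaced by its maximum and minimum, the activation can be compared with ReLU as follows. The function names and the sample values are illustrative only and are not taken from the patent.

```python
from typing import List


def maxmin(channels: List[float]) -> List[float]:
    """Pairwise MaxMin (assumed form): each pair (a, b) becomes (max(a, b), min(a, b))."""
    if len(channels) % 2 != 0:
        raise ValueError("MaxMin pairs channels, so the channel count must be even")
    out: List[float] = []
    for i in range(0, len(channels), 2):
        a, b = channels[i], channels[i + 1]
        out.extend([max(a, b), min(a, b)])
    return out


def relu(channels: List[float]) -> List[float]:
    """Standard ReLU, shown only for comparison."""
    return [max(0.0, c) for c in channels]


features = [-1.5, 0.5, 2.0, -3.0]  # hypothetical channel values at one pixel
print(maxmin(features))  # [0.5, -1.5, 2.0, -3.0]
print(relu(features))    # [0.0, 0.5, 2.0, 0.0]
```

Under this assumption MaxMin only reorders values within each pair, so negative responses survive the activation, whereas ReLU replaces them with zero.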

[0052] Obtain different palm images in a visible-light scene. The palm images cover different people, different lighting, different angles, and different ages. Selecting such varied palm images for subsequent training of the MobileNet neural network model allows the model to recognize the palm in palm images under different conditions, which broadens its scope of application;

[0053] Annotate the key point information of the palm area in the palm image, and annotate the key point information of the palm in the palm image, ...

Embodiment 2

[0070] In this embodiment, training the MobileNet neural network model can be divided into the following three parts: (1) Model input: the palm image and the 9 annotated palm key point pairs; (2) Model structure: a palm detection CNN framework is built for the palm detection task, in which MobileNet is selected as the backbone, the multi-scale sampling fusion operation of the SSD model serves as the detection head, and the activation function in the convolution modules of the MobileNet and SSD structures is changed from the ReLU function to the MaxMin function; (3) Model training: according to the backpropagation rules, during forward propagation of the image feature information, the output of each convolutional linear operation passes through the non-linear MaxMin activation function, so its feature values are not easily lost; consequently, when the parameters are updated by backpropagation, the gradient values do not easily vanish, and there is less overfitti...
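The claim that gradients do not easily vanish can be illustrated with a small backward-pass sketch. It assumes a pairwise MaxMin (each pair of channels is replaced by its maximum and minimum), which the text does not spell out. The function names and the numbers are hypothetical.

```python
from typing import List


def relu_backward(x: List[float], grad_out: List[float]) -> List[float]:
    """ReLU passes the gradient only where the input was positive."""
    return [g if xi > 0 else 0.0 for xi, g in zip(x, grad_out)]


def maxmin_backward(x: List[float], grad_out: List[float]) -> List[float]:
    """Pairwise MaxMin (assumed form) routes each output gradient to the input that produced it."""
    if len(x) % 2 != 0:
        raise ValueError("MaxMin pairs channels, so the channel count must be even")
    grad_in = [0.0] * len(x)
    for i in range(0, len(x), 2):
        a, b = x[i], x[i + 1]
        hi, lo = (i, i + 1) if a >= b else (i + 1, i)
        grad_in[hi] = grad_out[i]      # gradient of the max output
        grad_in[lo] = grad_out[i + 1]  # gradient of the min output
    return grad_in


x = [-1.5, -0.5, -2.0, -3.0]     # every pre-activation is negative
grad_out = [1.0, 2.0, 3.0, 4.0]  # hypothetical upstream gradient

print(relu_backward(x, grad_out))    # [0.0, 0.0, 0.0, 0.0]
print(maxmin_backward(x, grad_out))  # [2.0, 1.0, 3.0, 4.0]
```

In this example ReLU blocks the gradient entirely because all inputs are negative, while the assumed MaxMin passes every upstream gradient value through to exactly one input.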

Embodiment 3

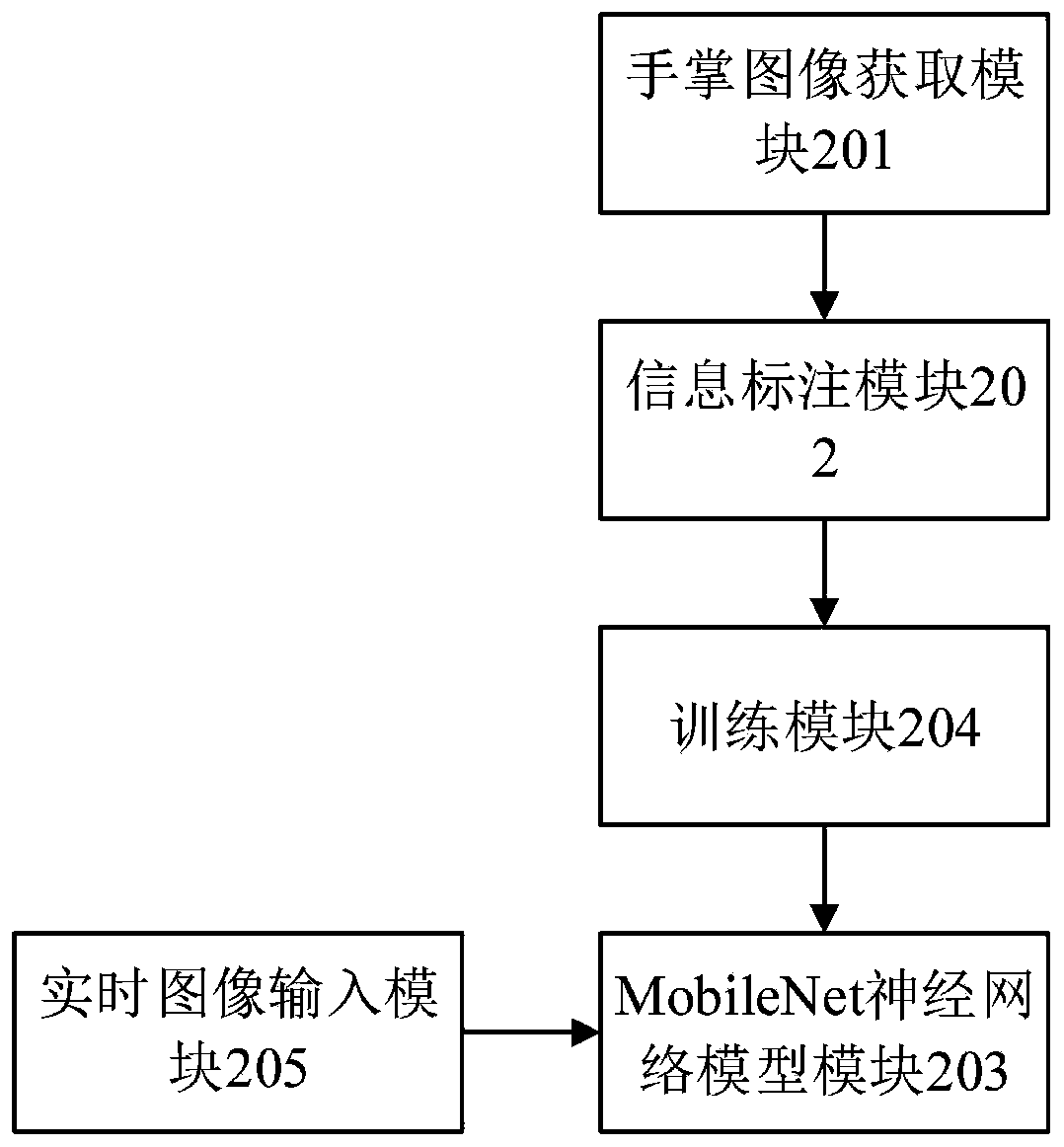

[0074] As shown in Figure 2, a palm image recognition system includes a palm image acquisition module 201, an information labeling module 202, a MobileNet neural network model module 203, a training module 204, and a real-time image input module 205;

[0075] The palm image acquisition module 201 is used to acquire different palm images in a visible light scene;

[0076] The information labeling module 202 is used for labeling key point information of the palm area in the palm image;

[0077] The MobileNet neural network model module 203 is used to provide a MobileNet neural network model, and the MobileNet neural network model uses the MaxMin function as the activation function;

[0078] The training module 204 is used to input the labeled palm image into the MobileNet neural network model for training;

[0079] The real-time image input module 205 is used to input real-time images into the trained MobileNet neural network model.
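The data flow between modules 201 to 205 can be sketched as follows. This is only an illustration of how the modules connect: every class, field, and stub below is hypothetical, the stubs do no real image processing, and treating a key point as an (x, y) pair is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Keypoint = Tuple[float, float]  # (x, y); the coordinate convention is an assumption


@dataclass
class LabeledPalmImage:
    image_id: str
    keypoints: List[Keypoint]


@dataclass
class PalmRecognitionSystem:
    acquire: Callable[[], List[str]]                       # module 201
    label: Callable[[str], LabeledPalmImage]               # module 202
    build_model: Callable[[], dict]                        # module 203
    train: Callable[[dict, List[LabeledPalmImage]], dict]  # module 204
    infer: Callable[[dict, str], List[Keypoint]]           # module 205

    def fit(self) -> dict:
        """Run modules 201 to 204 in order and return the trained model."""
        labeled = [self.label(image_id) for image_id in self.acquire()]
        return self.train(self.build_model(), labeled)


# Stub modules that only demonstrate the data flow.
system = PalmRecognitionSystem(
    acquire=lambda: ["palm_001", "palm_002"],
    label=lambda image_id: LabeledPalmImage(image_id, [(0.5, 0.5)] * 9),
    build_model=lambda: {"activation": "MaxMin", "trained_on": 0},
    train=lambda model, data: {**model, "trained_on": len(data)},
    infer=lambda model, frame: [(0.5, 0.5)] * 9,
)

trained = system.fit()
print(trained["trained_on"])                     # 2
print(len(system.infer(trained, "live_frame")))  # 9
```

Passing each module in as a callable keeps the sketch independent of any particular deep learning framework; a real system would replace the stubs with image capture, annotation tooling, and an actual MobileNet model.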
