Target detection method based on global convolution and local deep convolution fusion

A target detection technology that uses local depth information, applied in the field of computer vision, which addresses problems such as poor detection performance.


Examples

Embodiment 1

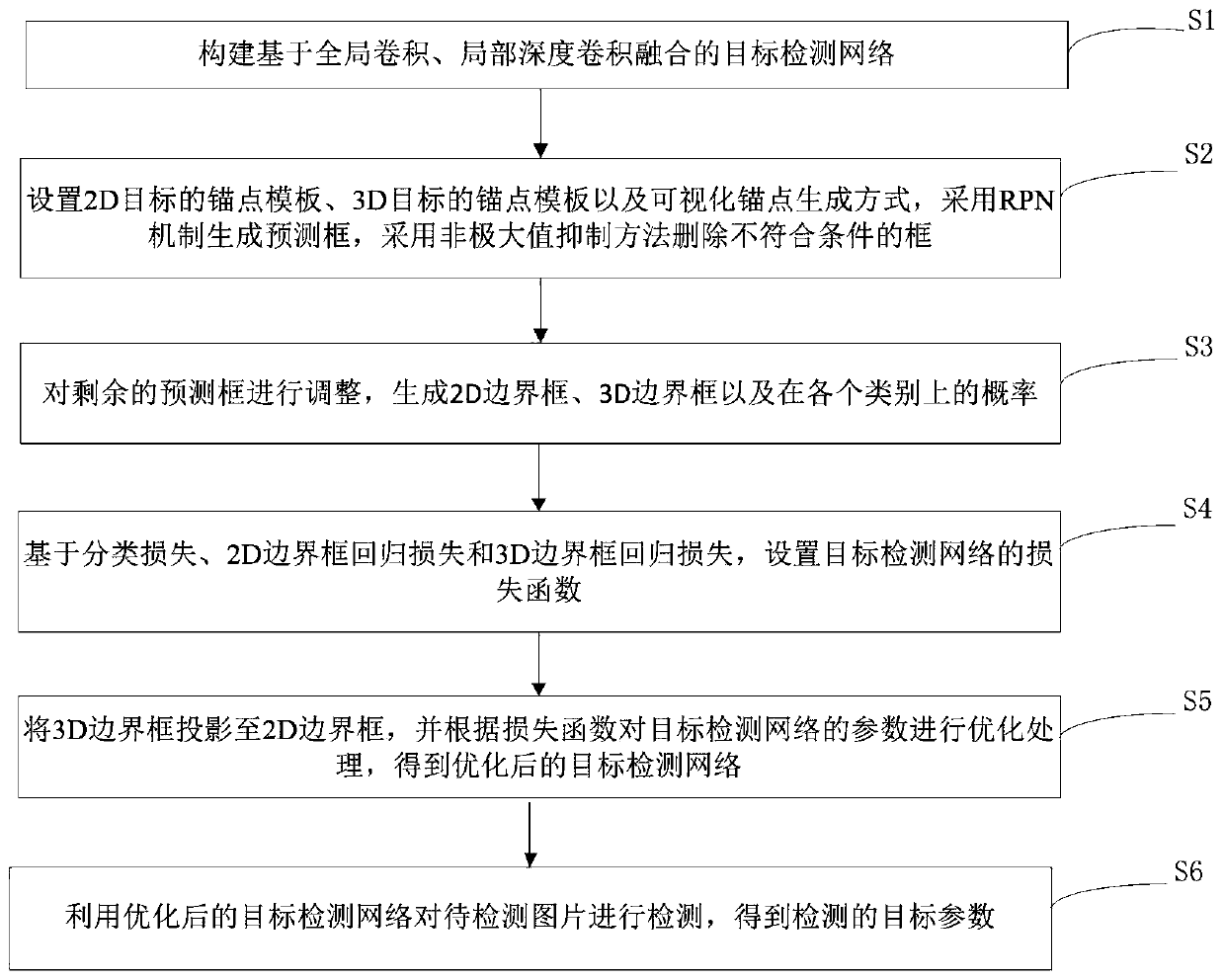

[0084] This embodiment provides a target detection method based on the fusion of global convolution and local deep convolution. Referring to Figure 1, the method includes the following steps:

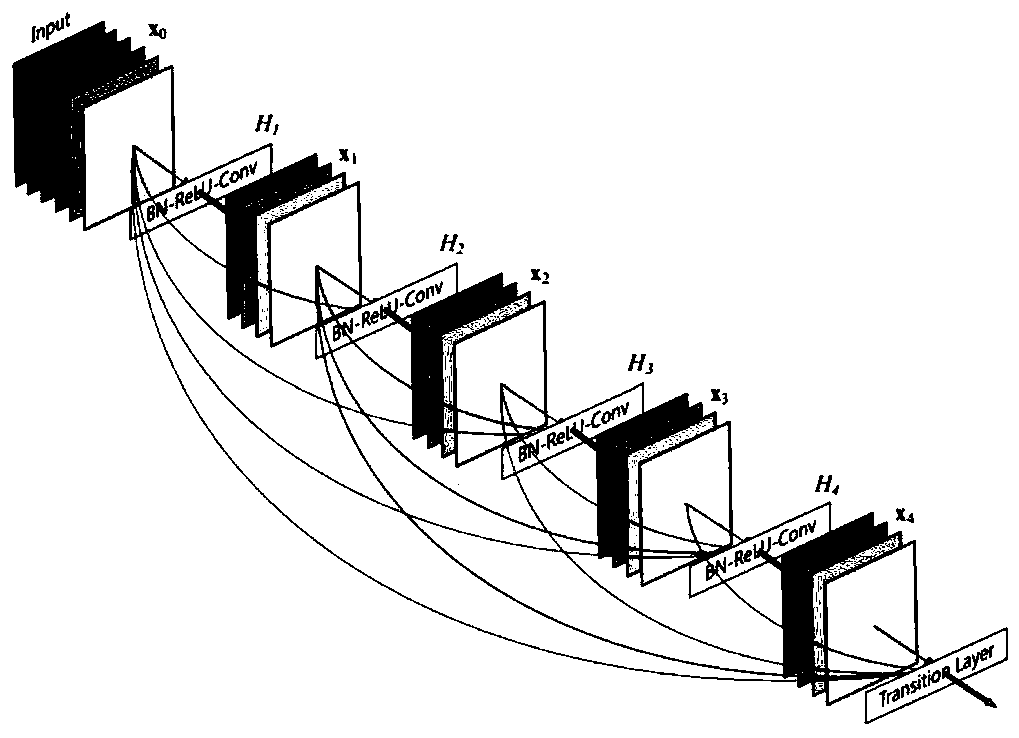

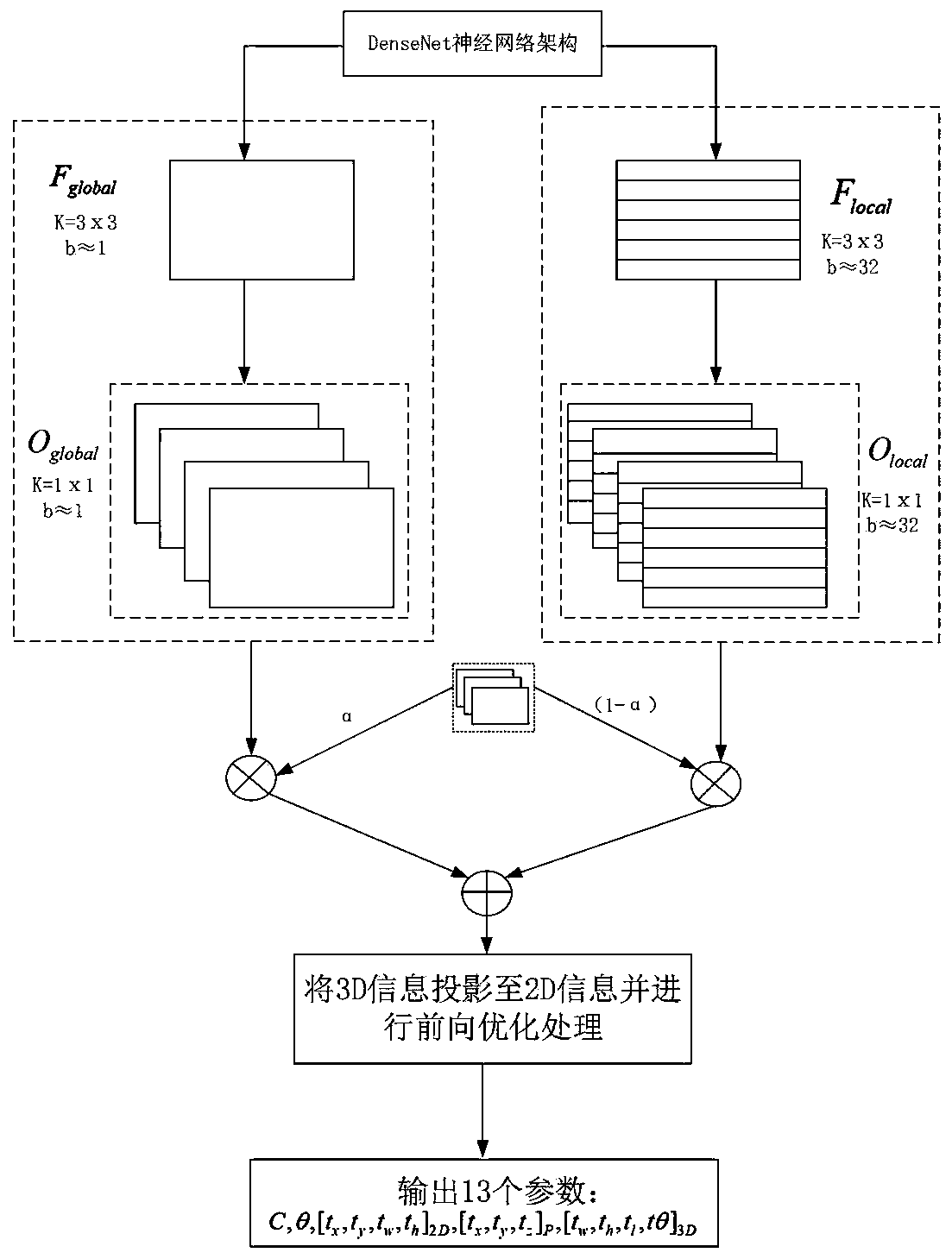

[0085] S1: Build a target detection network based on the fusion of global convolution and local deep convolution. The target detection network includes a backbone network, a global network, and a depth-aware convolutional region proposal network. The backbone network extracts features from the input image. The global network extracts global features from the backbone output, and the depth-aware convolutional region proposal network extracts local features from the same backbone output.
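The excerpt does not say how the depth-aware convolution or the fusion of the two branches is implemented. The sketch below is a minimal, single-channel illustration under stated assumptions: the global branch applies one kernel shared across the whole feature map, while the local branch assumes a row-binned scheme similar to the depth-aware convolution in M3D-RPN, where the map is split into horizontal bins and each bin gets its own kernel. The fusion is assumed to be a weighted sum with a weight `alpha` that a real network would learn. All function names (`conv2d_same`, `global_branch`, `depth_aware_branch`, `fuse`) are hypothetical, and the backbone is omitted; the input is taken to be an already-extracted feature map.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_same(x: Matrix, k: Matrix) -> Matrix:
    """Single-channel 2D cross-correlation, stride 1, zero padding ("same" size).

    Assumes an odd-sized square kernel, as is typical in CNNs.
    """
    h, w = len(x), len(x[0])
    r = len(k) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    # Positions outside the map contribute zero (zero padding).
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += x[ii][jj] * k[di + r][dj + r]
            out[i][j] = acc
    return out


def global_branch(x: Matrix, kernel: Matrix) -> Matrix:
    # Global convolution: a single kernel shared across the entire feature map.
    return conv2d_same(x, kernel)


def depth_aware_branch(x: Matrix, kernels: List[Matrix]) -> Matrix:
    # Assumed local (depth-aware) convolution: rows are split into
    # len(kernels) horizontal bins, and each bin uses its own kernel.
    # Image rows loosely correlate with depth in driving scenes, which is
    # the usual motivation for row-wise kernels.
    h, b = len(x), len(kernels)
    out: Matrix = []
    for bin_idx, k in enumerate(kernels):
        start = bin_idx * h // b
        stop = (bin_idx + 1) * h // b
        # Convolve the full map so that padding near bin edges sees the
        # neighbouring rows, then keep only this bin's rows.
        full = conv2d_same(x, k)
        out.extend(full[start:stop])
    return out


def fuse(g: Matrix, l: Matrix, alpha: float) -> Matrix:
    # Assumed fusion: alpha * global + (1 - alpha) * local.
    # In a trained network alpha would be a learned parameter.
    return [
        [alpha * gv + (1 - alpha) * lv for gv, lv in zip(g_row, l_row)]
        for g_row, l_row in zip(g, l)
    ]


if __name__ == "__main__":
    feat = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
    identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
    zero = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
    g = global_branch(feat, identity)
    l = depth_aware_branch(feat, [identity, zero])  # top bin kept, bottom bin zeroed
    for row in fuse(g, l, 0.5):
        print(row)
```

With an identity kernel in the top bin and a zero kernel in the bottom bin, the fused output equals the input in the top two rows and half the input in the bottom two rows, which makes the per-bin behaviour of the local branch easy to see. A real implementation would operate on multi-channel tensors in a deep learning framework rather than nested lists.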

[0086] Specifically, 3D target visual analysis plays an important role in the visual perception system of autonomous driving vehicles. Object detection in 3D space using lidar and image data to achieve highly accurate target localization and recognition of obje...
