Concurrent graph data preprocessing method based on FPGA

A graph data preprocessing technology, applied in the field of embedded system data processing, that addresses problems such as performance bottlenecks, large performance differences, and inapplicability to concurrent graph processing. Its effects are an improved memory access hit rate, reduced overhead, and more efficient concurrent graph computation.


Embodiment Construction

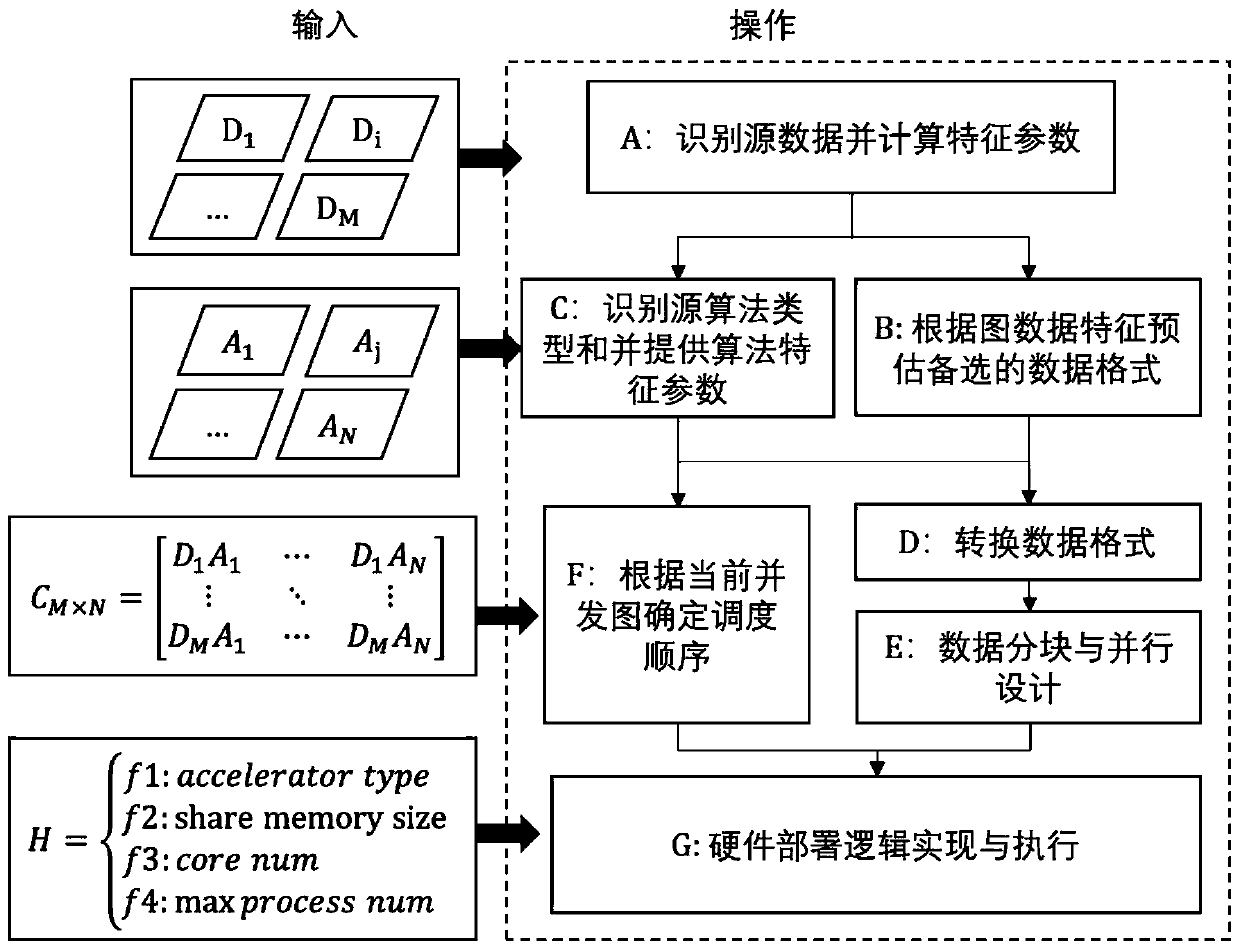

[0029] As shown in Figure 1, the FPGA-based concurrent graph data preprocessing method of this embodiment includes the following steps:

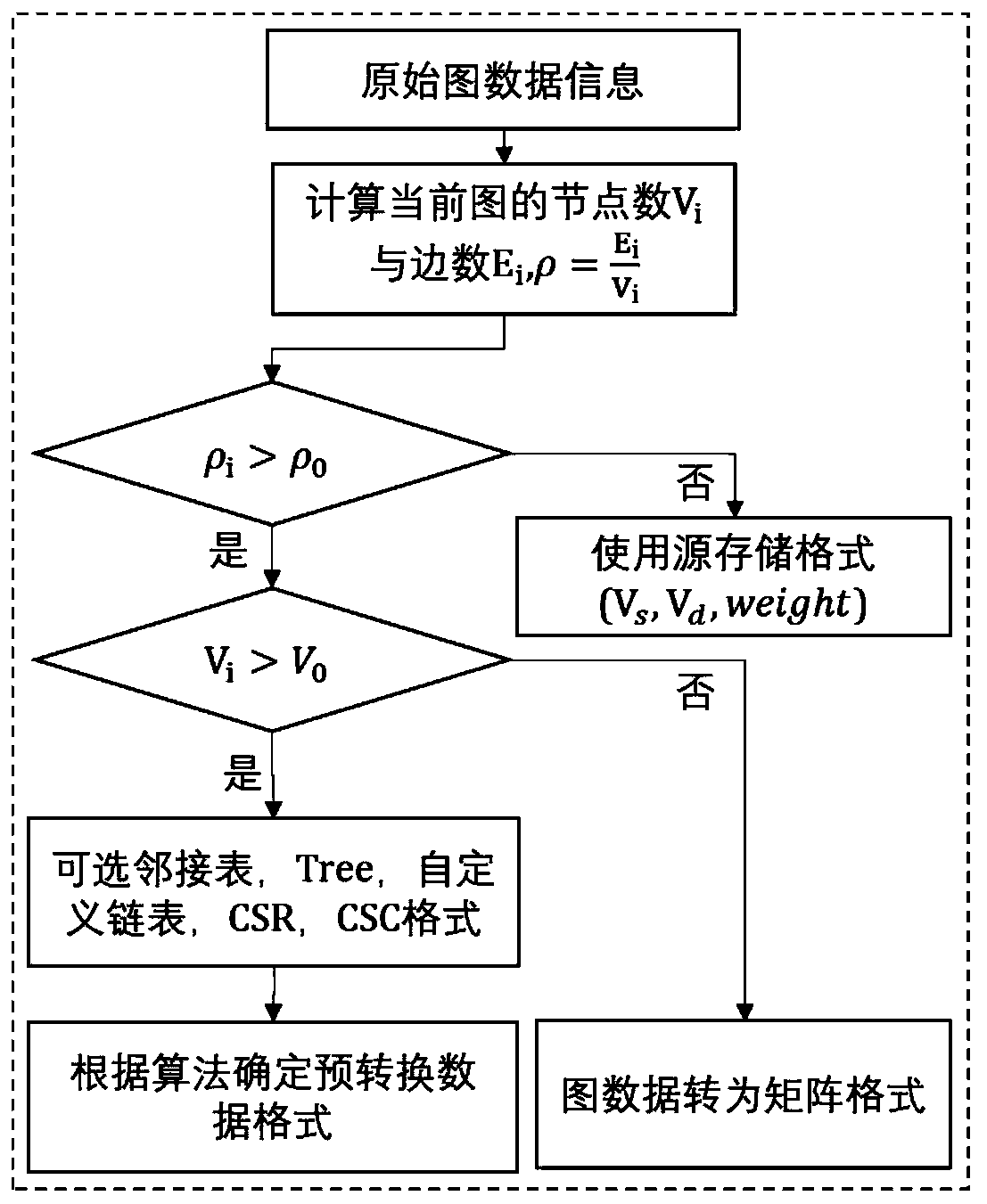

[0030] Step 1) Based on the graph data information D, identify the source data format, which defaults to a triplet (V_s, V_d, weight), and calculate the relevant characteristic parameters of D, including the number of nodes V_i, the number of edges E_i, and the density ρ_i.

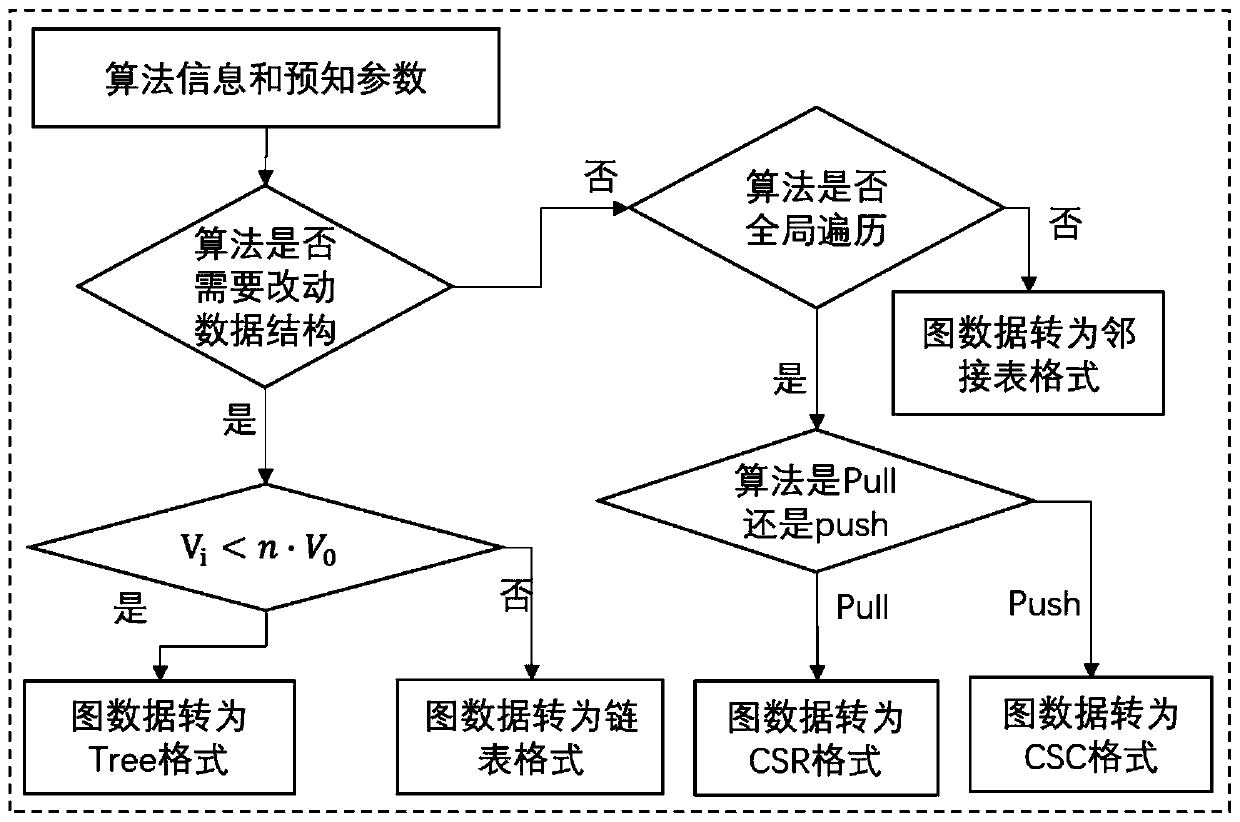
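The feature calculation in Step 1 can be sketched in Python as follows. This is only an illustration: the function name `graph_features` is hypothetical, and the density formula used here (edges divided by the maximum number of directed edges, E / (V(V - 1))) is an assumption, since the source's own formula appears only in a figure.

```python
from typing import Dict, Iterable, Tuple

# One edge in the default source format: (V_s, V_d, weight)
Edge = Tuple[int, int, float]


def graph_features(edges: Iterable[Edge]) -> Dict[str, float]:
    """Return node count, edge count, and density for a triplet edge list."""
    nodes = set()
    num_edges = 0
    for src, dst, _weight in edges:
        nodes.add(src)
        nodes.add(dst)
        num_edges += 1
    num_nodes = len(nodes)
    # ASSUMPTION: directed-graph density E / (V * (V - 1)).
    # The patent's actual density formula is not reproduced in the text.
    max_edges = num_nodes * (num_nodes - 1)
    density = num_edges / max_edges if max_edges > 0 else 0.0
    return {"nodes": num_nodes, "edges": num_edges, "density": density}


features = graph_features([(0, 1, 1.0), (1, 2, 2.0), (2, 0, 0.5)])
print(features)  # {'nodes': 3, 'edges': 3, 'density': 0.5}
```

A single pass over the triplets is enough to gather all three parameters, which suits streaming input where the edge list is read only once.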

[0031] Step 2) Based on the graph data features obtained in Step 1), a candidate data format is estimated. The candidate formats include matrix, adjacency list, tree, linked list, CSR, and CSC.
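As an illustration of one of the listed candidate formats, the following Python sketch converts a triplet edge list into CSR (compressed sparse row) arrays. It is a generic counting-sort style construction, not the patent's own conversion procedure; the function name `triplets_to_csr` is hypothetical, and node IDs are assumed to be integers in the range 0 to `num_nodes - 1`.

```python
from typing import List, Sequence, Tuple

Edge = Tuple[int, int, float]  # (V_s, V_d, weight)


def triplets_to_csr(
    edges: Sequence[Edge], num_nodes: int
) -> Tuple[List[int], List[int], List[float]]:
    """Build CSR arrays (row_ptr, col_idx, values) from triplets.

    ASSUMPTION: node IDs are integers in [0, num_nodes).
    """
    # Count outgoing edges per source node, shifted by one slot.
    row_ptr = [0] * (num_nodes + 1)
    for src, _dst, _weight in edges:
        row_ptr[src + 1] += 1
    # Prefix sum turns the counts into row start offsets.
    for i in range(num_nodes):
        row_ptr[i + 1] += row_ptr[i]

    col_idx = [0] * len(edges)
    values = [0.0] * len(edges)
    next_slot = row_ptr[:-1].copy()  # next free position in each row
    for src, dst, weight in edges:
        pos = next_slot[src]
        col_idx[pos] = dst
        values[pos] = weight
        next_slot[src] += 1
    return row_ptr, col_idx, values


row_ptr, col_idx, values = triplets_to_csr(
    [(0, 1, 1.0), (0, 2, 2.0), (2, 0, 0.5)], num_nodes=3
)
print(row_ptr)  # [0, 2, 2, 3]
print(col_idx)  # [1, 2, 0]
print(values)   # [1.0, 2.0, 0.5]
```

In this layout the neighbors of node `v` occupy `col_idx[row_ptr[v]:row_ptr[v + 1]]`, so they sit contiguously in memory. CSC is the same construction keyed on the destination node instead of the source.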

[0032] The candidate format estimation specifically includes the following steps:

[0033] 2.1) Calculate the relevant characteristic parameters of the graph data information D, including the number of nodes V_i, the number of edges E_i, and the density ρ_i.

[0034] 2.2) The calculated density ρ_i is compared with the preset ρ_0 ...
