Deep learning model compression method and device

A deep learning model compression technology, applied in the field of artificial intelligence, that addresses problems such as neural network redundancy and enhances usability while achieving good performance.

Embodiment Construction

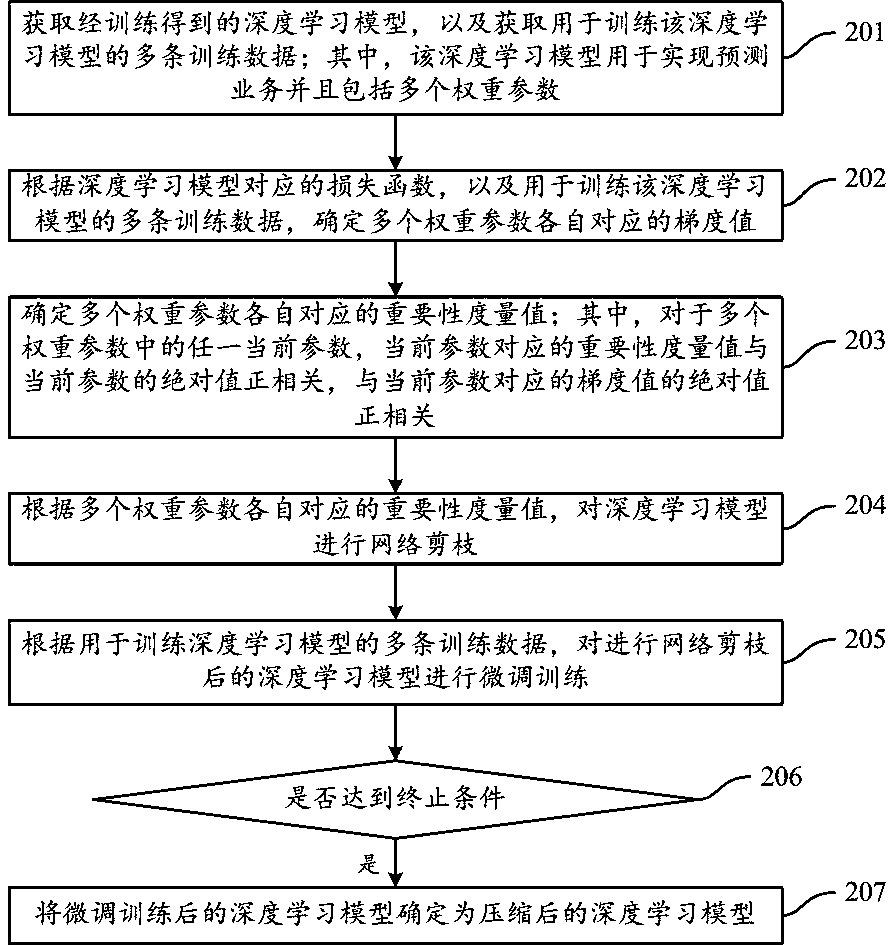

[0036] Various non-limiting embodiments provided in this specification will be described in detail below with reference to the accompanying drawings.

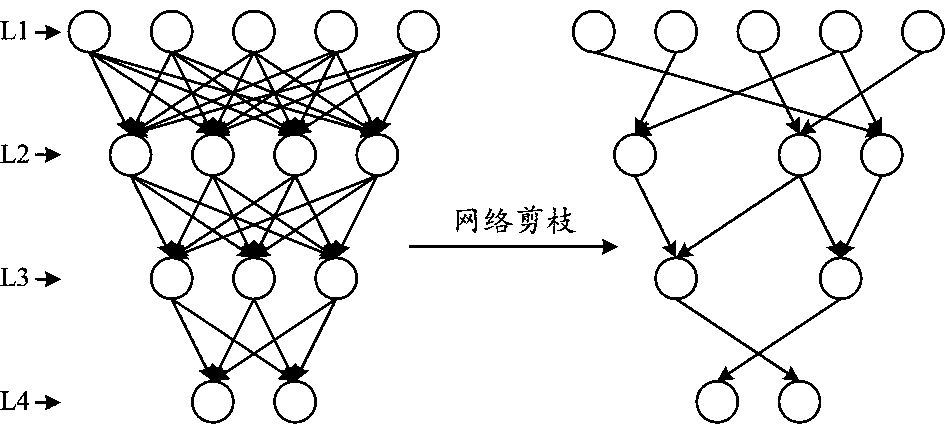

[0037] Methods for compressing deep learning models include, but are not limited to, low-rank approximation, network pruning, network quantization, knowledge distillation, and compact network design. Among them, network pruning is one of the most commonly used model compression methods.

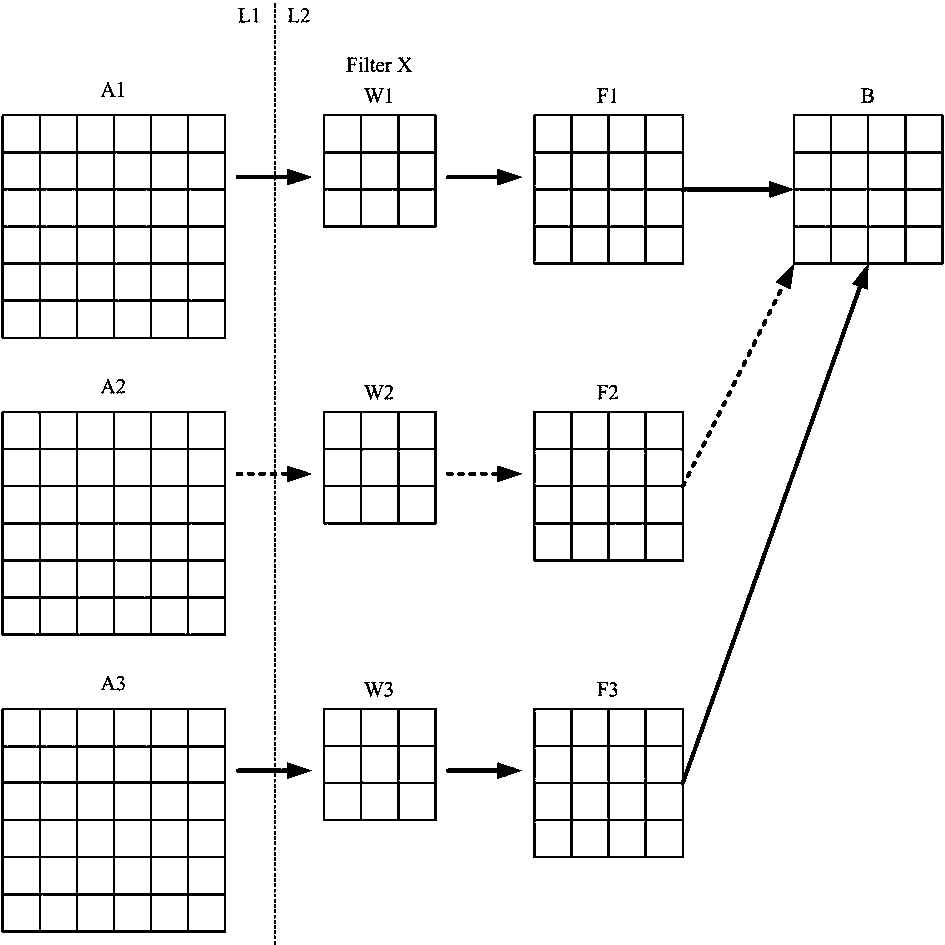

[0038] The core idea of network pruning is as follows: once the weight matrices of the deep learning model have been trained, that is, once a deep learning model that meets the accuracy requirements has been obtained, relatively "unimportant" weight parameters are identified in the model and deleted. The pruned model is then fine-tuned to obtain a compressed deep learning model. Specifically, the trained deep learning model may include multiple network layers, and each network layer may include multiple neurons;...
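The "find and delete unimportant weights" step can be illustrated with a minimal sketch. The text here does not define how importance is measured, so the sketch assumes a common stand-in, the absolute value of each weight, and is not necessarily the criterion used by this method. The function name `prune_by_magnitude`, the `sparsity` parameter, and the sample `layer` values are hypothetical, and the fine-tuning step that follows pruning is not shown.

```python
from typing import List

Matrix = List[List[float]]


def prune_by_magnitude(weights: Matrix, sparsity: float) -> Matrix:
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    ASSUMPTION: "unimportant" is approximated by small absolute value.
    `sparsity` is the fraction of weights to remove, in [0, 1].
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")

    magnitudes = sorted(abs(w) for row in weights for w in row)
    k = int(len(magnitudes) * sparsity)  # number of weights to delete
    if k == 0:
        return [row[:] for row in weights]

    threshold = magnitudes[k - 1]  # largest magnitude that still gets deleted
    removed = 0
    pruned: Matrix = []
    for row in weights:
        new_row = []
        for w in row:
            # The `removed < k` guard keeps the count exact when values tie.
            if abs(w) <= threshold and removed < k:
                new_row.append(0.0)
                removed += 1
            else:
                new_row.append(w)
        pruned.append(new_row)
    return pruned


# Hypothetical weight matrix of one network layer (illustrative values only).
layer = [[0.8, -0.05, 0.3],
         [0.01, -0.6, 0.1]]
pruned_layer = prune_by_magnitude(layer, sparsity=0.5)
print(pruned_layer)
```

In a full pipeline the zeroed positions would typically be held fixed while the remaining weights are fine-tuned, which corresponds to the fine-tuning step described above.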
