Remote sensing image matching method and device, electronic equipment and readable storage medium

A remote sensing image matching technology, applied in fields such as instruments, character and pattern recognition, and scene recognition. It addresses problems such as poor matching results, and achieves the effects of reducing time overhead, improving matching efficiency, and reducing the number of...

Examples

Embodiment 1

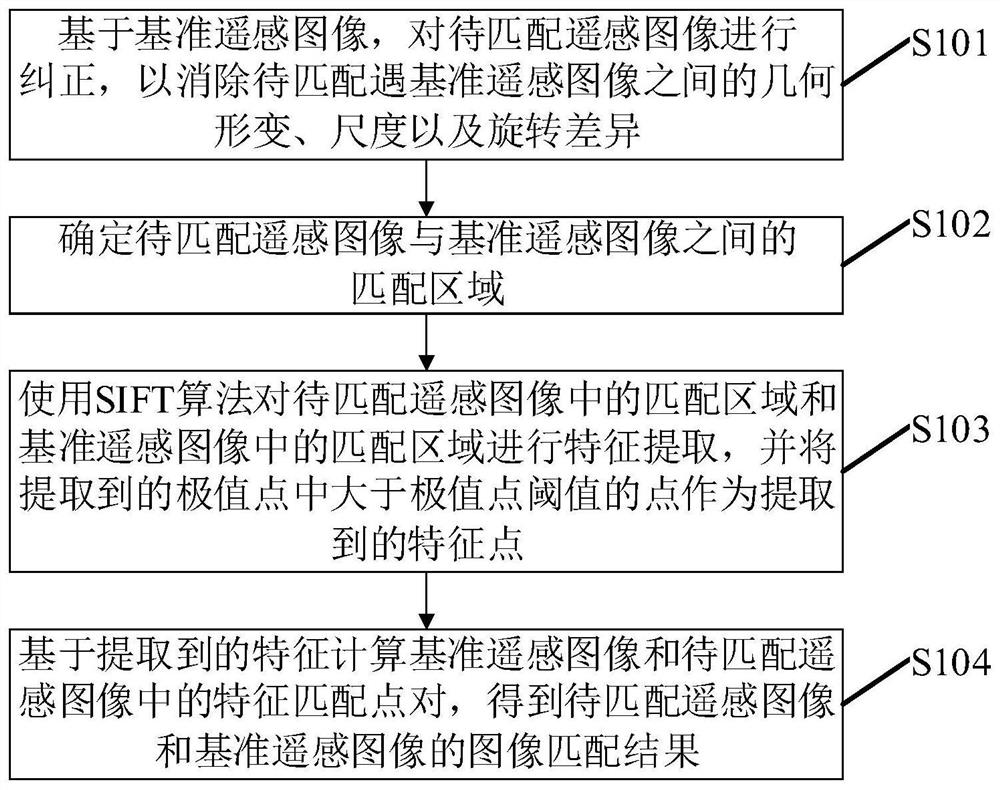

[0027] Figure 1 shows a flowchart of the remote sensing image matching method according to an embodiment of the present invention. As shown in Figure 1, the method may include the following steps:

[0028] S101: Correct the remote sensing image to be matched based on the reference remote sensing image, so as to eliminate the geometric deformation and the scale and rotation differences between the remote sensing image to be matched and the reference remote sensing image.

[0029] In the embodiment of the present invention, the reference image is the one, among the two or more images to be matched, whose shooting angle of view is closest to perpendicular to the ground being photographed.
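The selection rule in [0029] can be illustrated with a minimal sketch. It assumes each image carries a hypothetical `off_nadir_deg` value (the angle between the viewing direction and the vertical); the `ImageMeta` type, the field name, and the file names are illustrative and do not come from the patent text.

```python
from dataclasses import dataclass


@dataclass
class ImageMeta:
    name: str
    # Hypothetical field: angle in degrees between the viewing
    # direction and the vertical (0 means looking straight down).
    off_nadir_deg: float


def pick_reference(images):
    """Return the image whose view is closest to perpendicular to the ground."""
    if len(images) < 2:
        raise ValueError("need at least two images to match")
    return min(images, key=lambda im: abs(im.off_nadir_deg))


# Illustrative data only.
images = [
    ImageMeta("a.tif", 12.5),
    ImageMeta("b.tif", 3.0),
    ImageMeta("c.tif", 27.1),
]
reference = pick_reference(images)
to_match = [im for im in images if im is not reference]
```

Every remaining image in `to_match` would then be corrected against `reference` in step S101.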

[0030] In the embodiment of the present invention, the remote sensing image may be captured by a low-altitude aircraft such as an unmanned aerial vehicle. Correspondingly, according to the exterior orientation elements of the image recorded in...
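To make the scale and rotation part of S101 concrete, here is a simplified sketch. It assumes the relative scale and rotation between the image to be matched and the reference image are already known (for example, derived from orientation metadata) and models the correction as the inverse similarity transform on pixel coordinates. This is an illustration only, not the correction procedure of the patent, and it does not address general geometric deformation. All function names are hypothetical.

```python
import math


def similarity_matrix(scale, rotation_deg, tx=0.0, ty=0.0):
    """3x3 homogeneous matrix: rotate by rotation_deg, scale uniformly, translate."""
    t = math.radians(rotation_deg)
    c = math.cos(t) * scale
    s = math.sin(t) * scale
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]


def apply(m, x, y):
    """Apply a 3x3 homogeneous matrix to the 2D point (x, y)."""
    return (
        m[0][0] * x + m[0][1] * y + m[0][2],
        m[1][0] * x + m[1][1] * y + m[1][2],
    )


def correction_matrix(rel_scale, rel_rotation_deg):
    """Inverse of the relative scale/rotation (assumed known, no translation)."""
    return similarity_matrix(1.0 / rel_scale, -rel_rotation_deg)


# A point as seen in the reference image, and the same point as seen in
# an image that is 2x larger and rotated by 30 degrees.
distorted = apply(similarity_matrix(2.0, 30.0), 10.0, 5.0)
restored = apply(correction_matrix(2.0, 30.0), *distorted)
```

Because uniform scaling and rotation commute, inverting each factor separately undoes the combined transform, so `restored` returns to the original coordinates up to floating-point error.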

Embodiment 2

[0058] Figure 5 shows a schematic block diagram of a remote sensing image matching apparatus according to an embodiment of the present invention. The apparatus can be used to implement the remote sensing image matching method described in Embodiment 1 or any optional implementation thereof. As shown in Figure 5, the apparatus includes an image correction module 10, a region determination module 20, a feature extraction module 30 and an image matching module 40, wherein:

[0059] The image correction module 10 is configured to correct the remote sensing image to be matched based on the reference remote sensing image, so as to eliminate the geometric deformation and the scale and rotation differences between the remote sensing image to be matched and the reference remote sensing image.

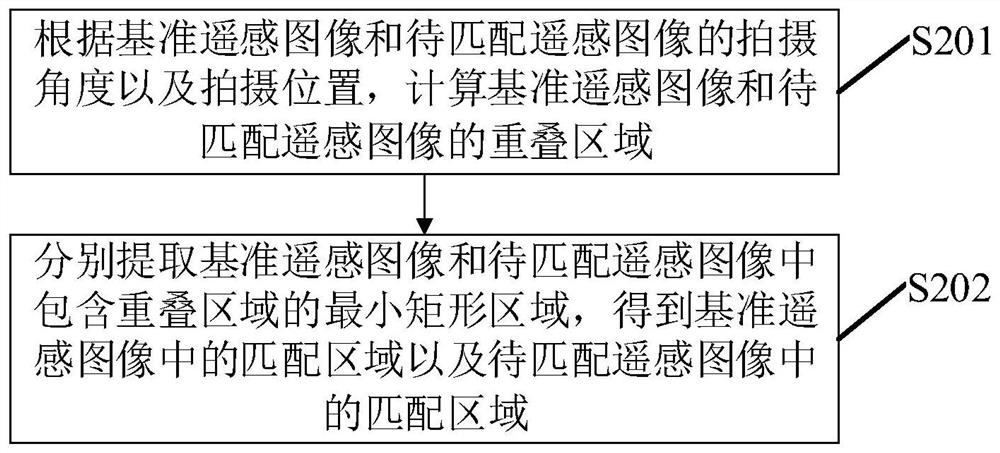

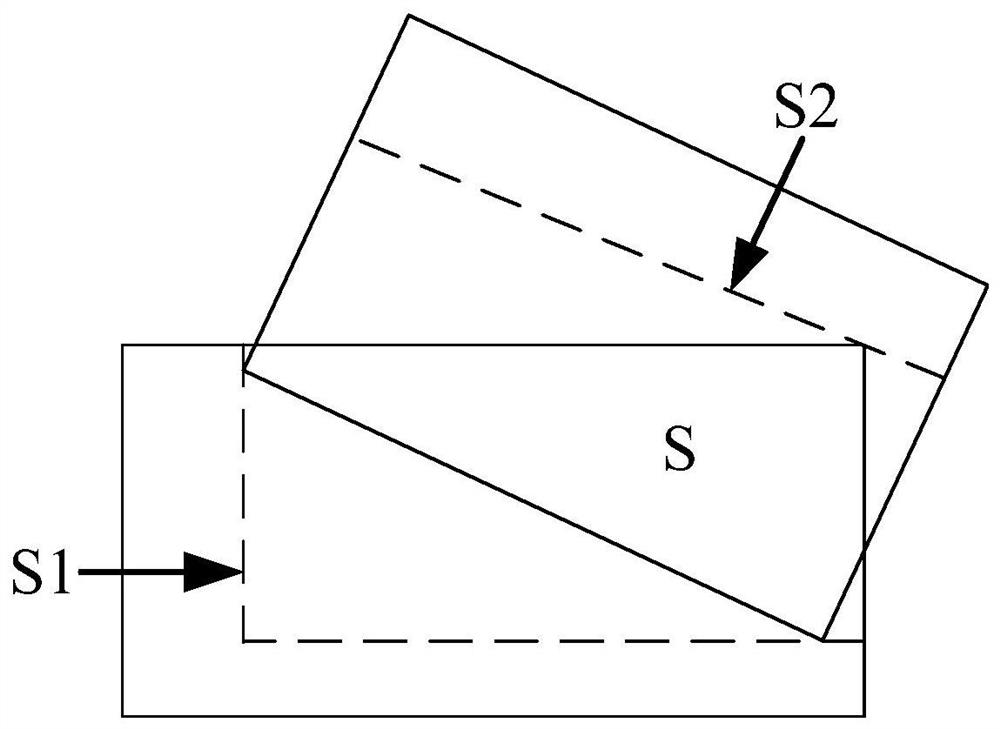

[0060] The region determination module 20 is configured to determine the matching region between the remote sensing image to be matched and the reference remote sensing image. In the embodim...
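The four-module structure of Embodiment 2 can be sketched as a simple pipeline. The excerpt does not specify the interfaces of the feature extraction module 30 or the image matching module 40, so the signatures below are assumptions, and the stand-in stages in the usage part operate on toy sets of integers purely to show the data flow. The class name `RemoteSensingMatcher` is hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class RemoteSensingMatcher:
    # Each field stands for one module; the signatures are assumptions.
    correct: Callable[[Any, Any], Any]      # module 10: (to_match, reference) -> corrected
    find_region: Callable[[Any, Any], Any]  # module 20: (corrected, reference) -> region
    extract: Callable[[Any, Any], Any]      # module 30: (image, region) -> features
    match: Callable[[Any, Any], Any]        # module 40: (features_a, features_b) -> matches

    def run(self, to_match, reference):
        """Chain the four modules in the order listed in Embodiment 2."""
        corrected = self.correct(to_match, reference)
        region = self.find_region(corrected, reference)
        feats_a = self.extract(corrected, region)
        feats_b = self.extract(reference, region)
        return self.match(feats_a, feats_b)


# Toy stand-ins: "images" are sets of integers, the matching region is
# their intersection, and features are the sorted members of that region.
matcher = RemoteSensingMatcher(
    correct=lambda img, ref: img,
    find_region=lambda a, b: a & b,
    extract=lambda img, region: sorted(img & region),
    match=lambda fa, fb: [x for x in fa if x in fb],
)
result = matcher.run({1, 2, 3, 4}, {3, 4, 5})
```

Restricting feature extraction to the region found by module 20 means both images contribute features only from the area where they can plausibly match.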

Embodiment 3

[0065] The embodiment of the present invention provides an electronic device. As shown in Figure 6, the electronic device may include a processor 61 and a memory 62, wherein the processor 61 and the memory 62 may be connected by a bus or in other ways. Figure 6 takes connection through a bus as an example.

[0066] The processor 61 may be a central processing unit (CPU). The processor 61 may also be another general-purpose processor, a digital signal processor (DSP), an application-specific integrated circuit (ASIC), a field-programmable gate array (FPGA) or other programmable logic device, a discrete gate or transistor logic device, a discrete hardware component or other chip, or a combination of the above types of chips.

[0067] The memory 62, as a non-transitory computer-readable storage medium, can be used to store non-transitory software program...
