Video target detection method, system and device based on class external memory

A video target detection technique based on class external memory, applied in the field of computer vision and pattern recognition. It addresses the problem of performance degradation associated with auxiliary frames, enhancing robustness and discrimination and improving detection accuracy.

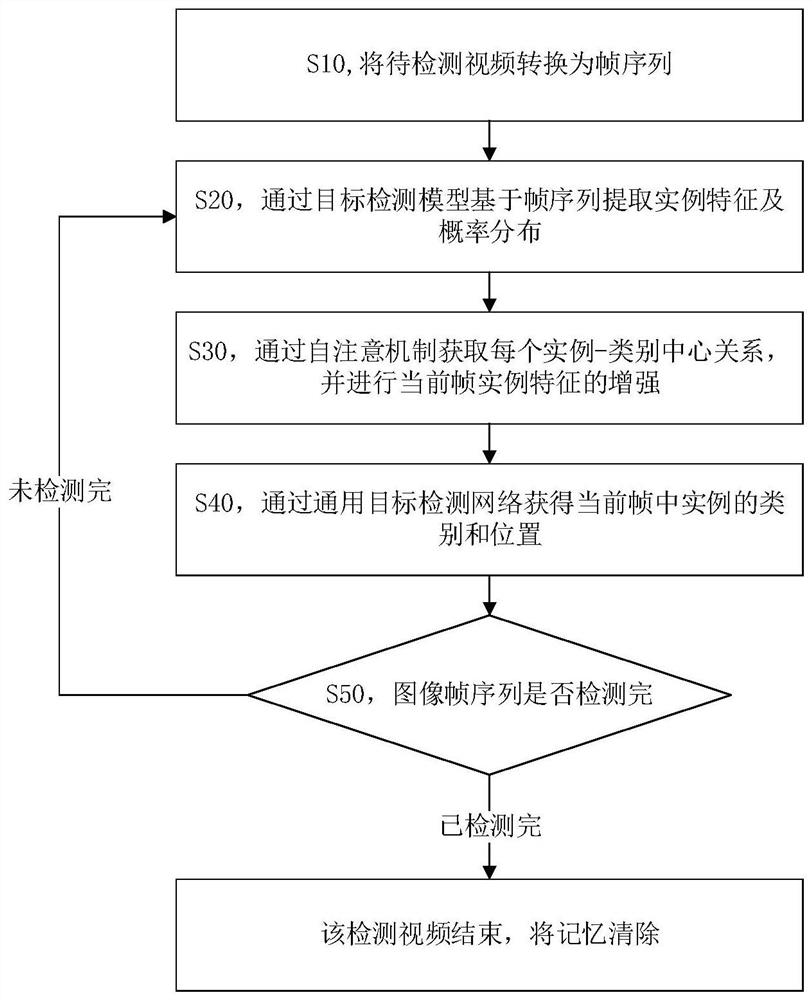

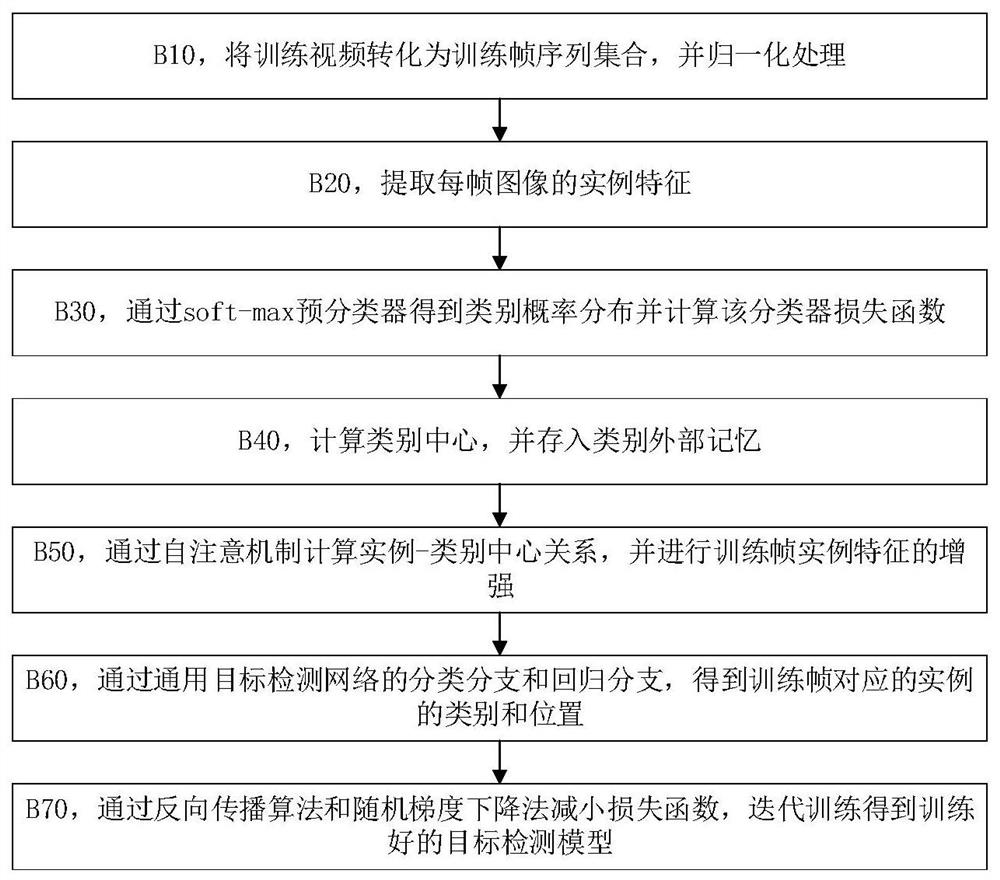

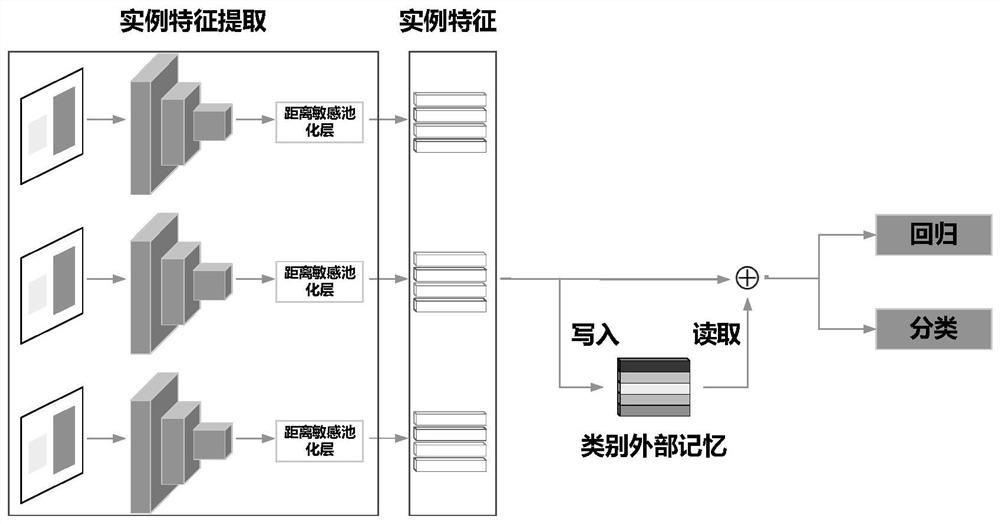

Examples

Example 1

[0076] Step B20: for each normalized first frame sequence in the normalized first frame sequence set, randomly select one frame as the training frame and m frames as auxiliary frames, where m is a natural number. Preferably, to balance training speed and model performance, two frames are selected as the auxiliary frames corresponding to the training frame; other numbers of frames achieve similar effects, and no specific limitation is imposed here. The first instance features corresponding to each frame image are then extracted by a general-purpose target detection network based on deep learning;

[0077] In one embodiment of the present invention, Faster R-CNN is used as the general-purpose target detection network; in other embodiments, another suitable network may be selected as needed, which is not described in detail here;
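The frame selection in Step B20 can be illustrated with a minimal Python sketch. The function name `sample_training_and_auxiliary` and its parameters are hypothetical and do not come from the patent. The sketch also assumes that the auxiliary frames are drawn without replacement from the frames other than the training frame, which the text does not state explicitly. Feature extraction by the detection network is not shown.

```python
import random
from typing import List, Optional, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def sample_training_and_auxiliary(
    sequence: Sequence[Frame],
    m: int = 2,
    rng: Optional[random.Random] = None,
) -> Tuple[Frame, List[Frame]]:
    """Pick one training frame and m auxiliary frames from one sequence.

    Assumption (not stated in the source): the auxiliary frames are
    distinct from each other and from the training frame.
    """
    if m < 0:
        raise ValueError("m must be non-negative")
    if len(sequence) < m + 1:
        raise ValueError("sequence needs at least m + 1 frames")
    rng = rng or random.Random()
    # Draw m + 1 distinct positions; the first is the training frame,
    # the remaining m are the auxiliary frames.
    positions = rng.sample(range(len(sequence)), m + 1)
    training_frame = sequence[positions[0]]
    auxiliary_frames = [sequence[i] for i in positions[1:]]
    return training_frame, auxiliary_frames


if __name__ == "__main__":
    # Frames are represented by placeholder names for illustration only.
    frames = [f"frame_{i:03d}" for i in range(10)]
    train, aux = sample_training_and_auxiliary(frames, m=2)
    print("training frame:", train)
    print("auxiliary frames:", aux)
```

The default `m=2` mirrors the preferred setting in the text; passing a different `m` corresponds to selecting another number of auxiliary frames.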

[0078] Step B30: input the first instance features into the soft-max pre-classifier to ...
