Interactive music generation method and device, smart speaker and storage medium

The technology relates to smart speakers and music generation, with applications in neural learning methods, biological neural network models, and special data processing. It addresses problems such as declining user interest, and aims to enrich the available interaction methods and improve interactive performance.


Examples

Embodiment 1

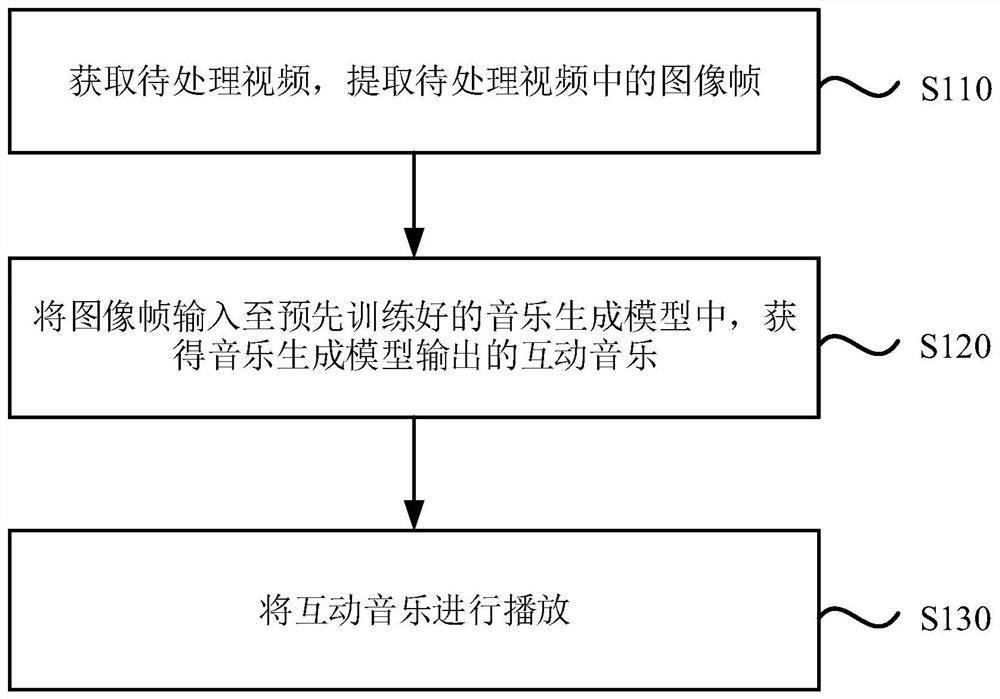

[0027] Figure 1a is a flowchart of the interactive music generation method provided by Embodiment 1 of the present invention. This embodiment applies to generating interactive music that corresponds to a video. The method can be executed by an interactive music generating device, which can be implemented in software and/or hardware and can, for example, be configured in a smart speaker. As shown in Figure 1a, the method includes:

[0028] S110. Acquire a video to be processed, and extract image frames in the video to be processed.
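As a rough illustration of the frame-extraction part of S110, the sketch below picks which frame indices to keep from a video. The text does not say how frames are selected, so the fixed-interval sampling policy, the function name `sample_frame_indices`, and its parameters are all assumptions made for this example. Decoding the video itself is left out.

```python
def sample_frame_indices(total_frames: int, fps: float, every_seconds: float = 1.0) -> list:
    """Return the indices of frames to keep, roughly one every `every_seconds`.

    Hypothetical helper: the fixed-interval policy is an assumption,
    not something the patent text specifies.
    """
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0:
        return []
    # Number of frames to skip between two kept frames (at least 1).
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


# A 10-second clip at 30 frames per second, sampled every 2 seconds.
indices = sample_frame_indices(total_frames=300, fps=30.0, every_seconds=2.0)
print(indices)
```

In a real device the selected indices would be passed to a video decoder to obtain the image frames that feed the music generation model.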

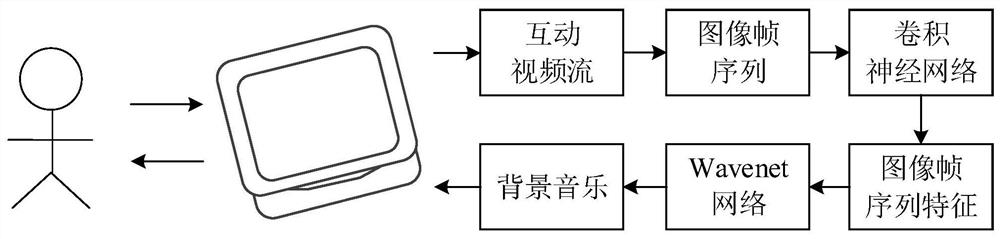

[0029] In this embodiment, the interactive performance of the smart speaker is improved by enriching the human-computer interaction functions of a smart speaker with a screen. Optionally, a video specified by the user can be used as the video to be processed, and the corresponding interactive music can be generated as the background...

Embodiment 2

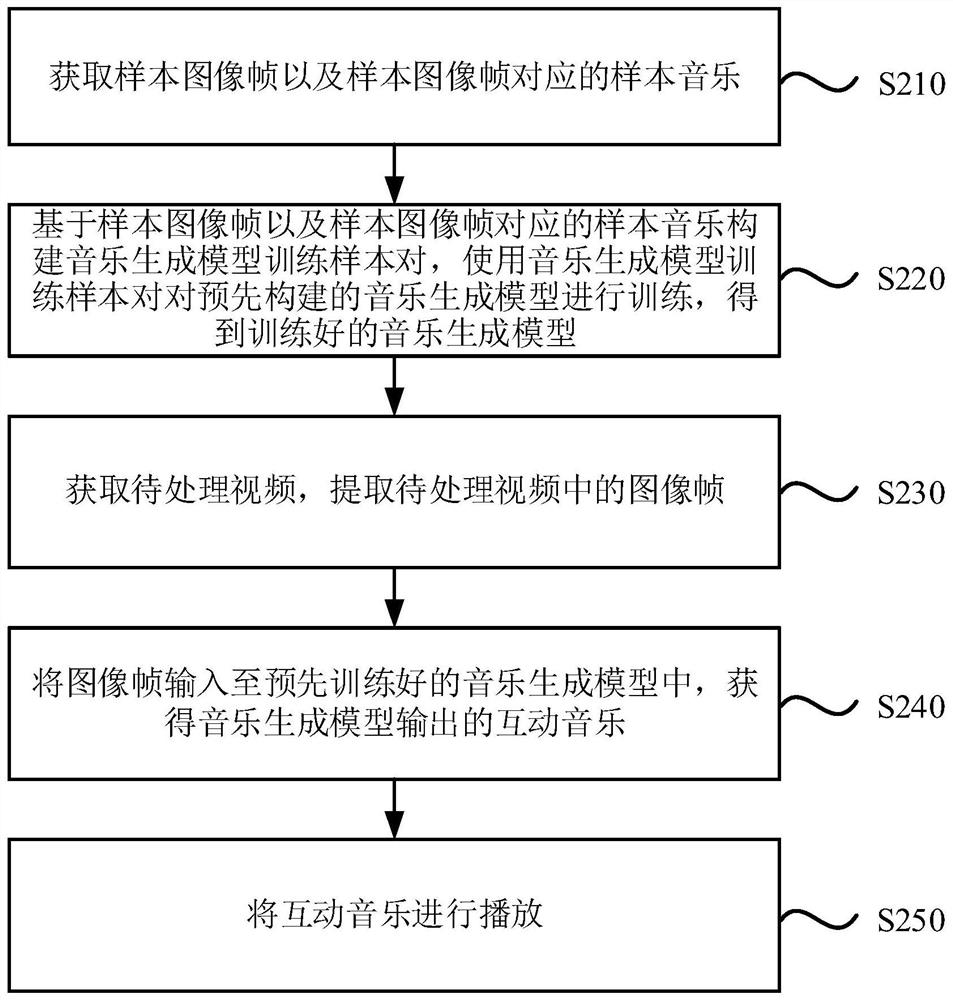

[0043] Figure 2 is a flowchart of the interactive music generation method provided by Embodiment 2 of the present invention. This embodiment provides a method for training a music generation model. As shown in Figure 2, the method includes:

[0044] S210. Acquire sample image frames and sample music corresponding to the sample image frames.

[0045] In this embodiment, the feature extraction network module and the music generation network module in the music generation model can be trained as a whole. That is, the feature extraction network module and the music generation network module are constructed first, the music generation model is assembled from the two constructed modules, the training sample set is used to train the assembled music generation model, and ...
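The idea of training both modules as a whole can be sketched with a deliberately tiny toy model. Everything here is an assumption for illustration: each module is reduced to a single scalar weight, image frames and music are reduced to single numbers, and the loss is squared error. The patent text does not specify network architectures, losses, or optimizers.

```python
def train_jointly(samples, lr=0.01, epochs=500):
    """Train both toy modules end to end on (frame_value, music_value) pairs."""
    # Hypothetical scalar weights standing in for the two network modules.
    w_feat, w_gen = 0.5, 0.5
    for _ in range(epochs):
        for x, y in samples:
            feature = w_feat * x      # feature extraction network module
            pred = w_gen * feature    # music generation network module
            err = pred - y
            # Gradients of 0.5 * err**2 with respect to each weight.
            # The error signal reaches the feature extractor through the generator.
            grad_gen = err * feature
            grad_feat = err * w_gen * x
            w_gen -= lr * grad_gen
            w_feat -= lr * grad_feat
    return w_feat, w_gen


# Toy sample set in which the "music" value is twice the "frame" value.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w_feat, w_gen = train_jointly(samples)
print(w_feat * w_gen)
```

Only the composed mapping from frame to music is supervised here, so the individual weights are constrained only through their product.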

Embodiment 3

[0056] Figure 3 is a flowchart of the interactive music generation method provided by Embodiment 3 of the present invention. Building on the above embodiments, this embodiment provides another method for training a music generation model. As shown in Figure 3, the method includes:

[0057] S310. Acquire sample image frames and sample music corresponding to the sample image frames.

[0058] In this embodiment, the feature extraction network module and the music generation network module in the music generation model can be trained separately to obtain a trained feature extraction network module and a trained music generation network module. The trained feature extraction network module and the trained music generation network module then compose the trained music generation model.
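A minimal sketch of separate training followed by composition is shown below. It is a toy under stated assumptions: each module is a single scalar weight, the feature extractor is fitted on hypothetical (frame, feature) pairs, and the generator is then fitted on the features produced by the already trained extractor. The sample values, the helper `fit_scalar`, and the squared-error loss are all invented for this example.

```python
def fit_scalar(pairs, lr=0.01, epochs=500):
    """Fit y = w * x by gradient descent on squared error and return w."""
    w = 0.0
    for _ in range(epochs):
        for x, y in pairs:
            w -= lr * (w * x - y) * x
    return w


# Hypothetical training sets (values invented for illustration).
frame_to_feature = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]   # frames with feature labels
frame_to_music = [(1.0, 1.5), (2.0, 3.0), (3.0, 4.5)]     # frames with sample music

# Step 1: train the feature extraction module on its own sample set.
w_feat = fit_scalar(frame_to_feature)

# Step 2: train the music generation module on features from the trained extractor.
feature_to_music = [(w_feat * x, y) for x, y in frame_to_music]
w_gen = fit_scalar(feature_to_music)


# Step 3: compose the two trained modules into the music generation model.
def generate(frame_value):
    return w_gen * (w_feat * frame_value)


print(generate(2.0))
```

Unlike end-to-end training, each weight here is pinned down by its own sample set, so the intermediate feature has a fixed meaning.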

[0059] The training sample set required for feature extraction network module training needs to include sample image frames, and the training sample set required for music ge...
