Visual SLAM method based on optical flow and semantic segmentation

A semantic segmentation and vision technology, applied in character and pattern recognition, instruments, biological neural network models, and related fields, that addresses problems such as the poor tracking and positioning performance of SLAM.

Embodiment Construction

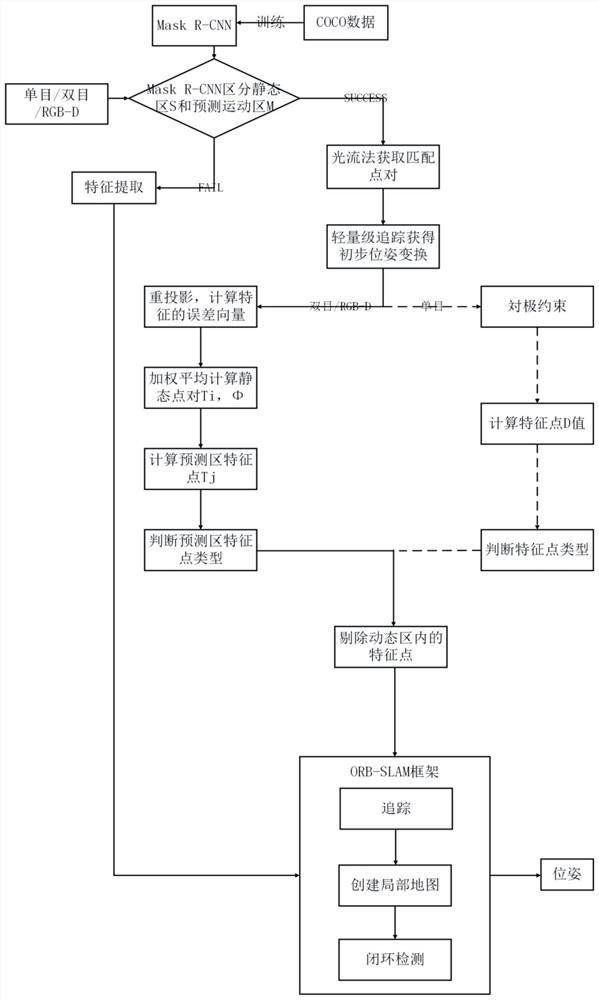

[0064] This embodiment provides a visual SLAM method based on optical flow and semantic segmentation, which mainly includes the following steps:

[0065] Step 1. Use a semantic segmentation network to segment the input image into static regions and predicted dynamic regions.

[0066] Step 2. Track feature points in both the static regions and the predicted dynamic regions using a sparse optical flow method.

[0067] Step 3. Determine the type (static or dynamic) of each feature point in the input image, and remove the dynamic feature points.

[0068] Step 4. Use the set of feature points that remains after the dynamic feature points are removed as tracking data, input it into ORB-SLAM for processing, and output the pose result.
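The four steps can be illustrated with a minimal Python/NumPy sketch. It is not the patented implementation, and several parts are assumptions. The source does not say how Step 3 decides a point's type, so the sketch substitutes a common geometric check. First, a fundamental matrix is estimated from static-region tracks with the normalized eight-point algorithm. Then a point is flagged as dynamic only if it lies in a predicted dynamic region and its optical-flow match is farther than a threshold from its epipolar line. Other assumptions: the segmentation network of Step 1 is replaced by a given boolean mask `dynamic_mask`, and Step 2 is assumed to have already produced matched point pairs `p1`, `p2`, for example from OpenCV's pyramidal Lucas-Kanade tracker `cv2.calcOpticalFlowPyrLK`. The 1-pixel threshold `thresh_px` and all function names (`fundamental_8point`, `epipolar_distance`, `remove_dynamic_points`, `make_demo`) are hypothetical. The ORB-SLAM stage of Step 4 is not shown.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: centroid to origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    scale = np.sqrt(2) / np.linalg.norm(pts - centroid, axis=1).mean()
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return pts_h @ T.T, T


def fundamental_8point(p1, p2):
    """Normalized eight-point estimate of F with x2^T F x1 = 0 (needs >= 8 pairs)."""
    x1, T1 = _normalize(p1)
    x2, T2 = _normalize(p2)
    # Each correspondence contributes one row of the linear system A f = 0.
    A = np.column_stack([
        x2[:, 0] * x1[:, 0], x2[:, 0] * x1[:, 1], x2[:, 0],
        x2[:, 1] * x1[:, 0], x2[:, 1] * x1[:, 1], x2[:, 1],
        x1[:, 0], x1[:, 1], np.ones(len(x1)),
    ])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)  # right singular vector, smallest sigma
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt    # enforce rank 2
    return T2.T @ F @ T1                       # undo the normalization


def epipolar_distance(F, p1, p2):
    """Pixel distance from each p2 to its epipolar line F @ p1."""
    x1 = np.column_stack([p1, np.ones(len(p1))])
    x2 = np.column_stack([p2, np.ones(len(p2))])
    lines = x1 @ F.T                           # row k = (a, b, c) = F @ x1[k]
    return np.abs(np.sum(lines * x2, axis=1)) / np.linalg.norm(lines[:, :2], axis=1)


def remove_dynamic_points(p1, p2, dynamic_mask, thresh_px=1.0):
    """Return (kept_p1, kept_p2, is_dynamic) for tracked pairs p1 -> p2.

    ASSUMPTION: dynamic_mask is an HxW bool array standing in for the
    segmentation output (True = predicted dynamic region).
    """
    cols = np.clip(np.round(p1[:, 0]).astype(int), 0, dynamic_mask.shape[1] - 1)
    rows = np.clip(np.round(p1[:, 1]).astype(int), 0, dynamic_mask.shape[0] - 1)
    in_pred_dynamic = dynamic_mask[rows, cols]
    # Camera-induced geometry is estimated from static-region tracks only.
    F = fundamental_8point(p1[~in_pred_dynamic], p2[~in_pred_dynamic])
    dist = epipolar_distance(F, p1, p2)
    # ASSUMPTION: dynamic = in a predicted dynamic region AND violates the
    # epipolar constraint, so stationary "movable" objects are kept.
    is_dynamic = in_pred_dynamic & (dist > thresh_px)
    keep = ~is_dynamic
    return p1[keep], p2[keep], is_dynamic  # kept pairs would go to ORB-SLAM


def make_demo(seed=0):
    """Synthetic frames: camera translates along x; points 0-4 also move."""
    rng = np.random.default_rng(seed)
    f, cx, cy, t = 500.0, 320.0, 240.0, 0.3
    u1 = np.concatenate([rng.uniform(20, 180, 8), rng.uniform(260, 620, 30)])
    v1 = rng.uniform(20, 460, 38)
    Z = rng.uniform(4, 8, 38)
    dy = np.zeros(38)
    dy[:5] = 0.5                     # points 0-4: object moving 0.5 m vertically
    u2 = u1 - f * t / Z              # camera moved t metres along x
    v2 = v1 + f * dy / Z
    mask = np.zeros((480, 640), dtype=bool)
    mask[:, :200] = True             # hypothetical "person/car" segment
    return np.column_stack([u1, v1]), np.column_stack([u2, v2]), mask


if __name__ == "__main__":
    p1, p2, mask = make_demo()
    kept1, kept2, is_dyn = remove_dynamic_points(p1, p2, mask)
    print(is_dyn[:8], len(kept1))
```

In the synthetic demo, points 0 to 4 lie in the predicted dynamic region and also move, so they are removed. Points 5 to 7 lie in the same region but are stationary, like a parked car, so they are kept. Requiring both conditions is a design choice in this sketch: it avoids discarding usable features on movable objects that happen to be still.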

[0069] To make the technical solution above easier to understand, it is described in detail below with reference to the accompanying drawings and specific embodiments.

[0070] This embodiment provides a visual S...
