146 results about "Visual methods" patented technology

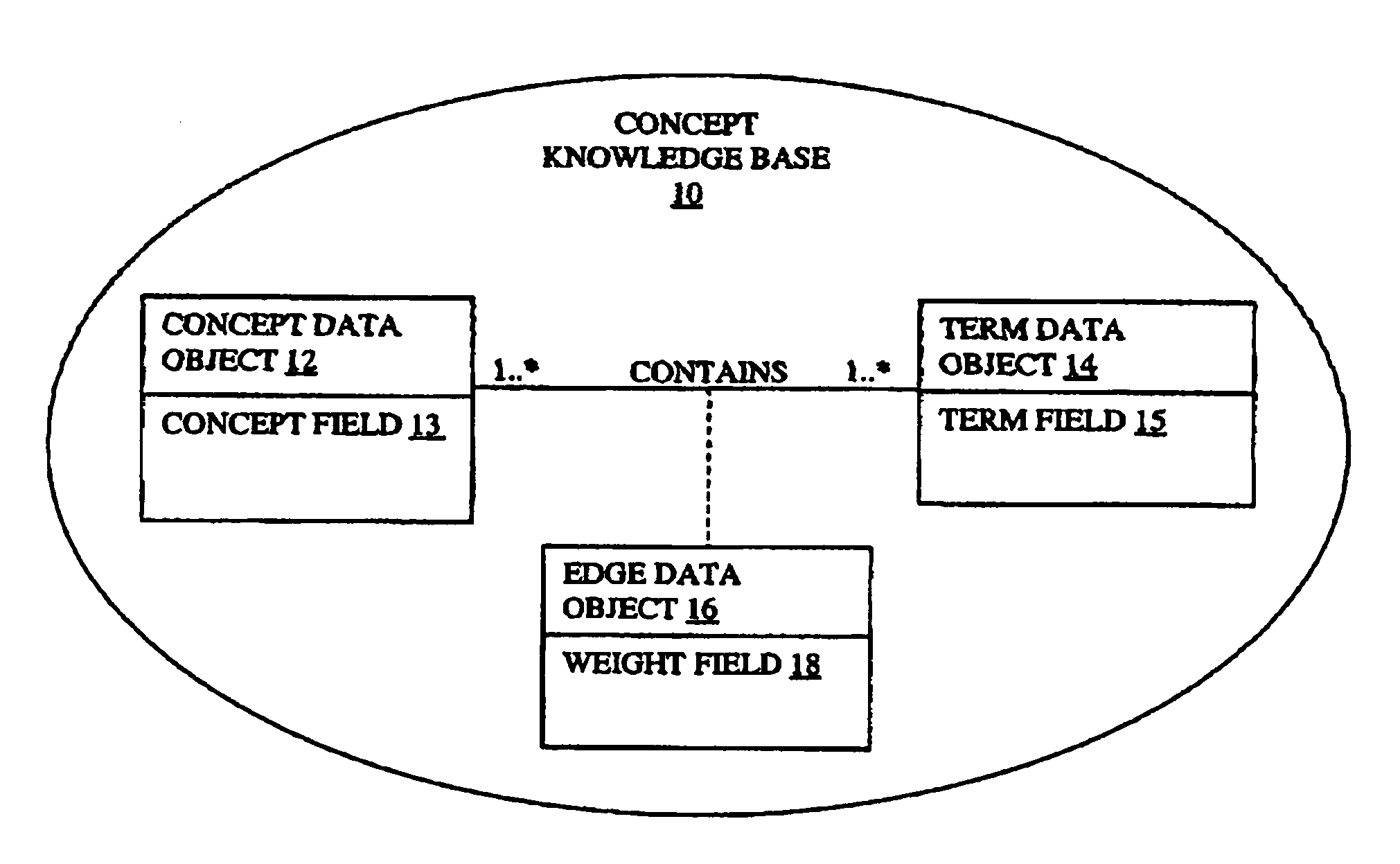

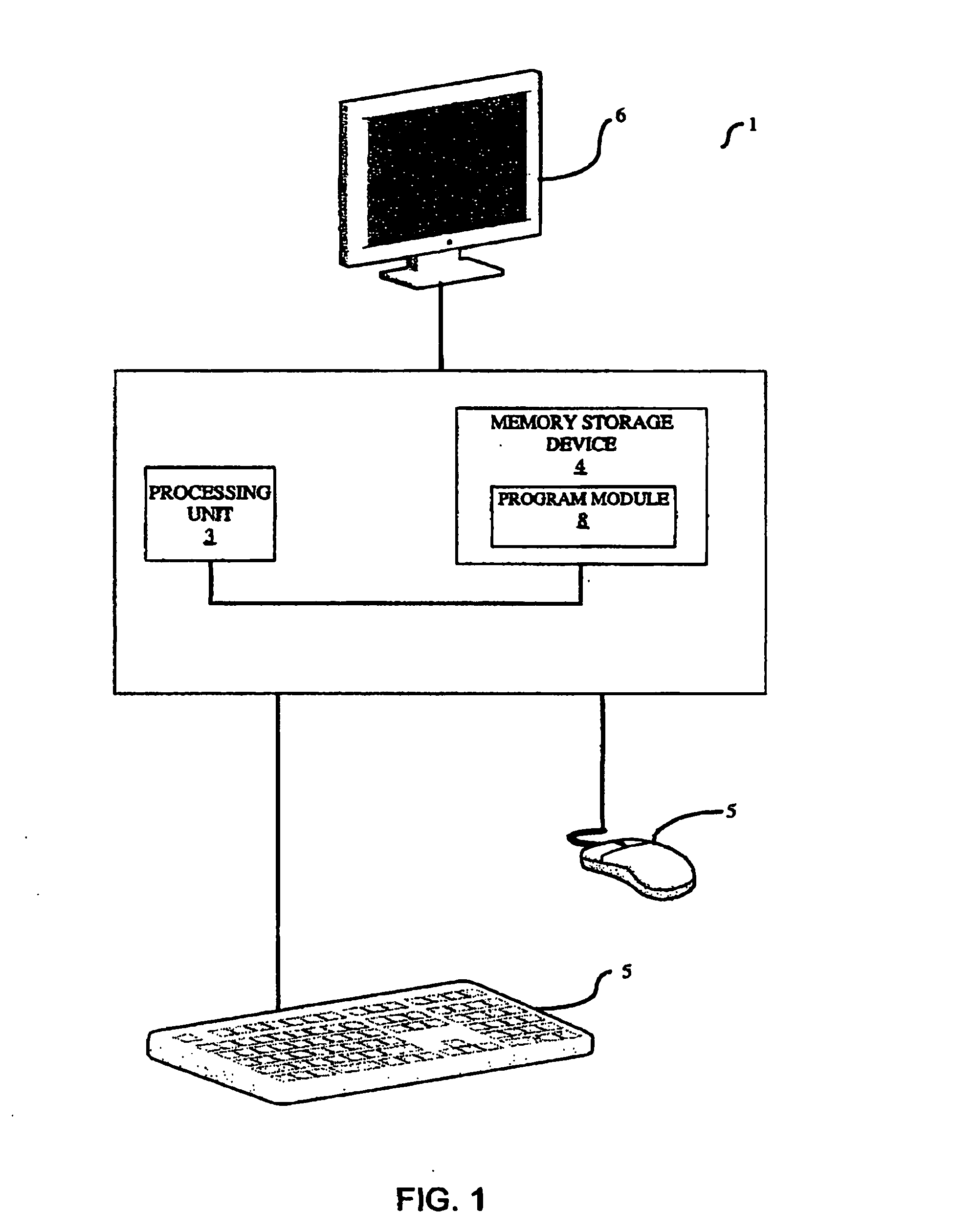

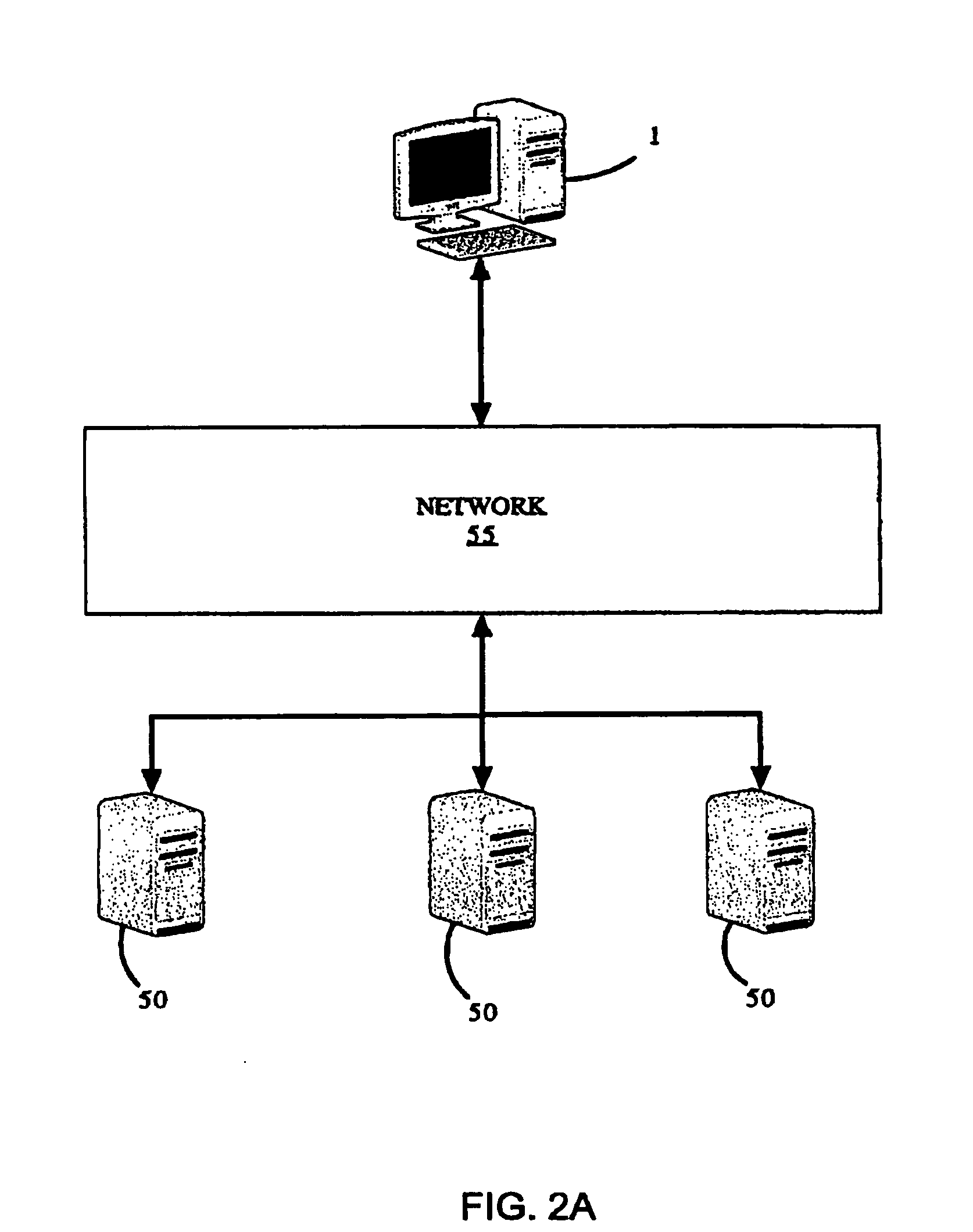

Method and apparatus for concept-based visual

A method and apparatus are provided for visually coding or sorting search results based on the similarity of the search results to one or more concepts. A search query containing search terms is used to conduct a web search and obtain search results comprising a number of document surrogates describing the located web pages. Concepts are obtained using the search terms, and the similarities between the obtained concepts and the search results are evaluated. The search results are then displayed in a manner that indicates the relative similarity of search results to one or more of the determined concepts, such as by sorting the search results based on their level of similarity to one or more concepts, or by providing an accordance indicator with each displayed search result, the accordance indicators indicating the similarity of the corresponding search result to one or more of the concepts.

Owner:BLACKBIRD TECH
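
The sorting step described in the abstract above can be illustrated with a minimal sketch. It assumes a bag-of-words cosine similarity as the similarity measure, which the abstract does not specify; the function names `term_vector`, `cosine_similarity` and `rank_by_concept` are hypothetical.

```python
import math
from collections import Counter

def term_vector(text):
    """Lowercased bag-of-words term counts for a piece of text."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_by_concept(surrogates, concept):
    """Return (score, surrogate) pairs sorted by similarity to the concept.

    The score could double as the 'accordance indicator' shown next to
    each result (an assumption made for illustration only).
    """
    concept_vec = term_vector(concept)
    scored = [(cosine_similarity(term_vector(s), concept_vec), s)
              for s in surrogates]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)
```

Calling `rank_by_concept(["jaguar car dealer", "jaguar big cat habitat"], "cat animal habitat")` places the second surrogate first, because it shares terms with the concept while the first does not.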

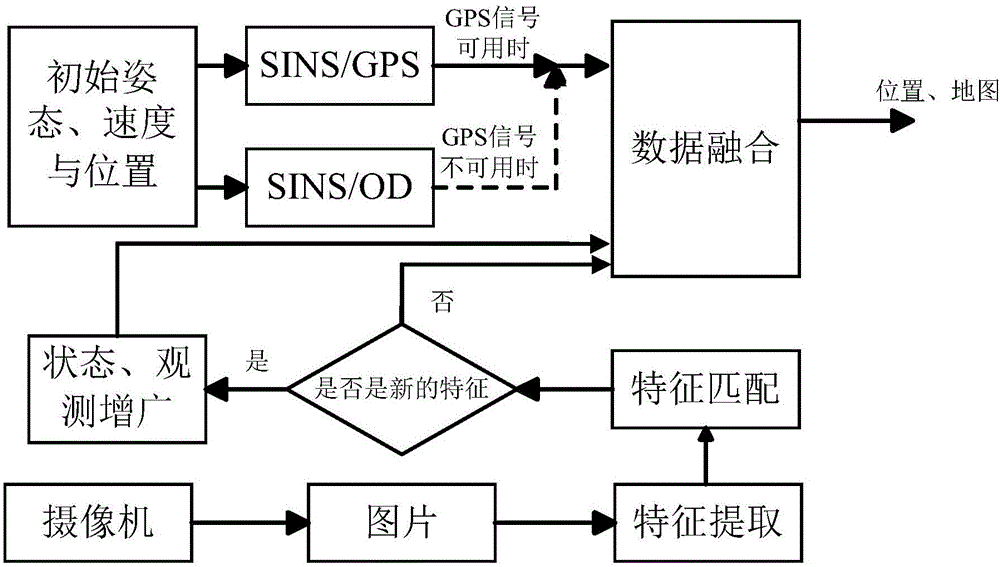

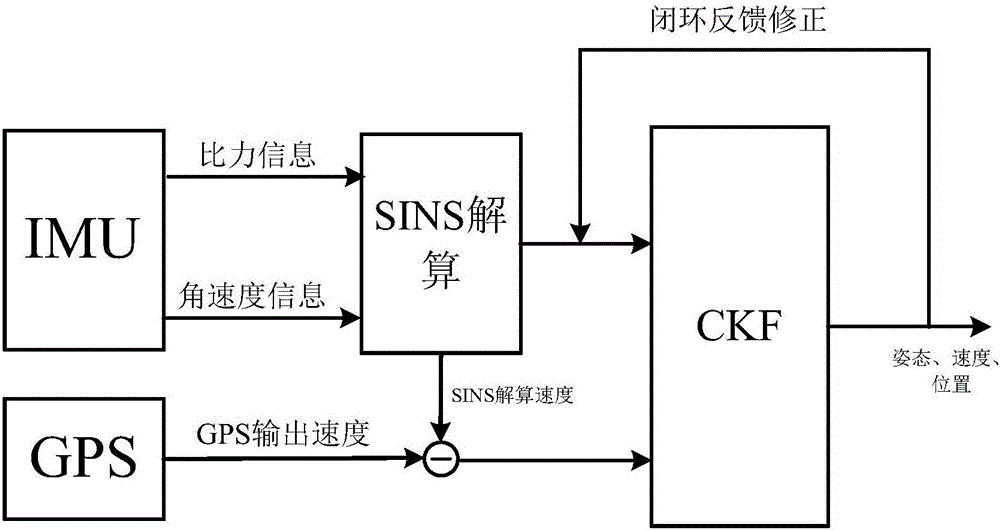

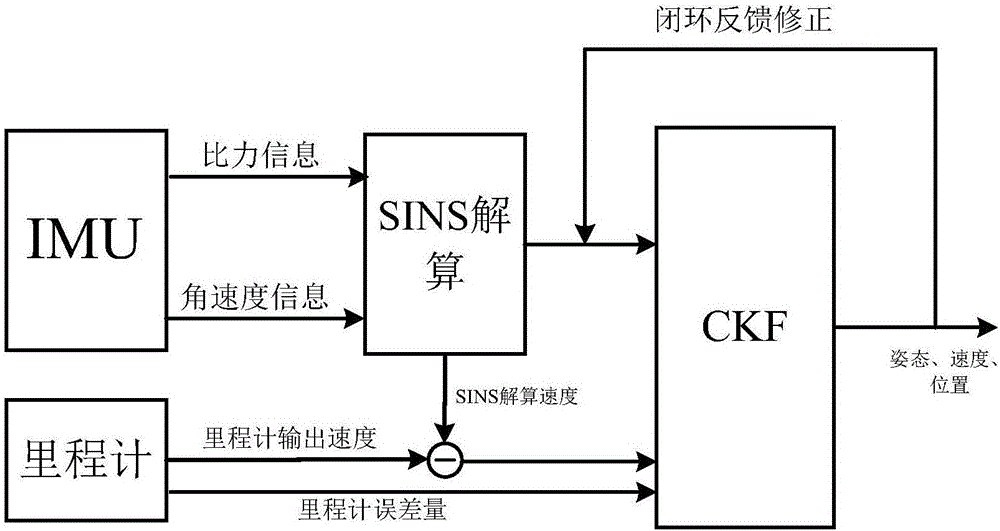

Visual SLAM (simultaneous localization and mapping) method based on SINS (strapdown inertial navigation system)/GPS (global positioning system) and speedometer assistance

Active | CN106780699A | Overcome the problem of a single application environment | High precision | Instruments for road network navigation | Geographical information databases | Simultaneous localization and mapping | Global Positioning System

The invention discloses a visual SLAM (simultaneous localization and mapping) method based on SINS (strapdown inertial navigation system) / GPS (global positioning system) and speedometer assistance. The method comprises the following steps: when GPS signals are available, performing data fusion on output information of the GPS and the SINS to obtain information including attitudes, speeds, positions and the like; when the GPS signals are unavailable, performing data fusion on output information of a speedometer and the SINS to obtain information including attitudes, speeds, positions and the like; shooting environmental images by using a binocular camera, and performing feature extraction and feature matching on the environmental images; and achieving positioning and map building by using the obtained prior attitude, speed and position information together with the environmental features, thereby completing a visual SLAM algorithm. According to the visual SLAM method disclosed by the invention, visual SLAM is assisted by the SINS, the GPS and the speedometer, so that positioning and map building can be achieved in both indoor and outdoor environments, the application range is wide, and the precision and robustness of positioning can be improved.

Owner:SOUTHEAST UNIV
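
The switching logic in the abstract above (GPS aiding when a signal is available, speedometer aiding otherwise) can be sketched as follows. This is a simplified illustration only: the fixed blending gain stands in for whatever fusion filter the patent uses, which the abstract does not name, and `select_aiding_source`, `fuse` and `prior_position` are hypothetical names.

```python
def select_aiding_source(gps_available):
    """Pick which sensor aids the SINS for the current epoch."""
    return "gps" if gps_available else "speedometer"

def fuse(sins_value, aiding_value, gain=0.5):
    """Blend one SINS coordinate with the aiding coordinate.

    A fixed gain is an assumption for illustration; a real system would
    typically derive the gain from a filter's covariance estimates.
    """
    return sins_value + gain * (aiding_value - sins_value)

def prior_position(sins_pos, gps_pos, dead_reckoned_pos, gps_available, gain=0.5):
    """Return the chosen source and the fused prior position for visual SLAM."""
    source = select_aiding_source(gps_available)
    aiding = gps_pos if source == "gps" else dead_reckoned_pos
    fused = tuple(fuse(s, a, gain) for s, a in zip(sins_pos, aiding))
    return source, fused
```

Here `dead_reckoned_pos` denotes a position derived from speedometer readings; how that position is computed is outside the scope of this sketch.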

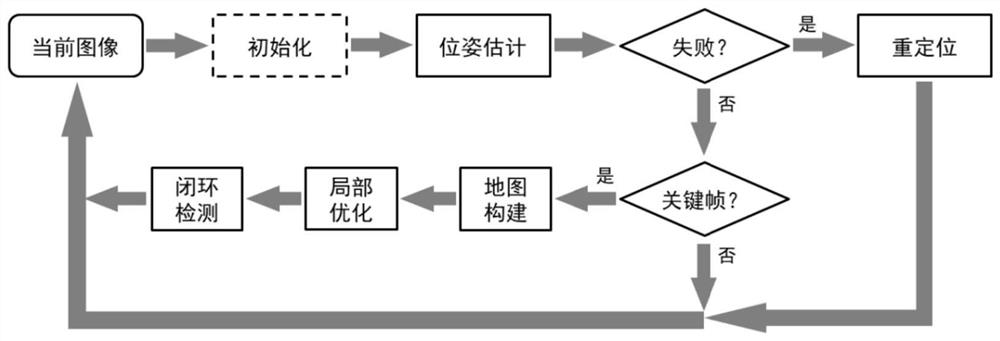

Collaborative visual SLAM method based on multiple cameras

Inactive | CN105869136A | Quick update | Reconstruct 3D trajectory | Image analysis | Simultaneous localization and mapping | Temporal change

The present invention provides a multi-camera-based collaborative visual SLAM method, in particular a collaborative visual SLAM method using multiple cameras in a dynamic environment. The method allows the relative positions and orientations of the cameras to change over time, so that multiple cameras can move independently and be installed on different platforms. It addresses problems related to camera pose estimation, map point classification, and camera group management, so that the method works robustly in dynamic scenes and can reconstruct the 3D trajectories of moving objects. Compared with existing single-camera SLAM methods, the method of the present invention is more accurate and robust, and can be applied to micro-robots and wearable augmented reality.

Owner:BEIJING ROBOTLEO INTELLIGENT TECH

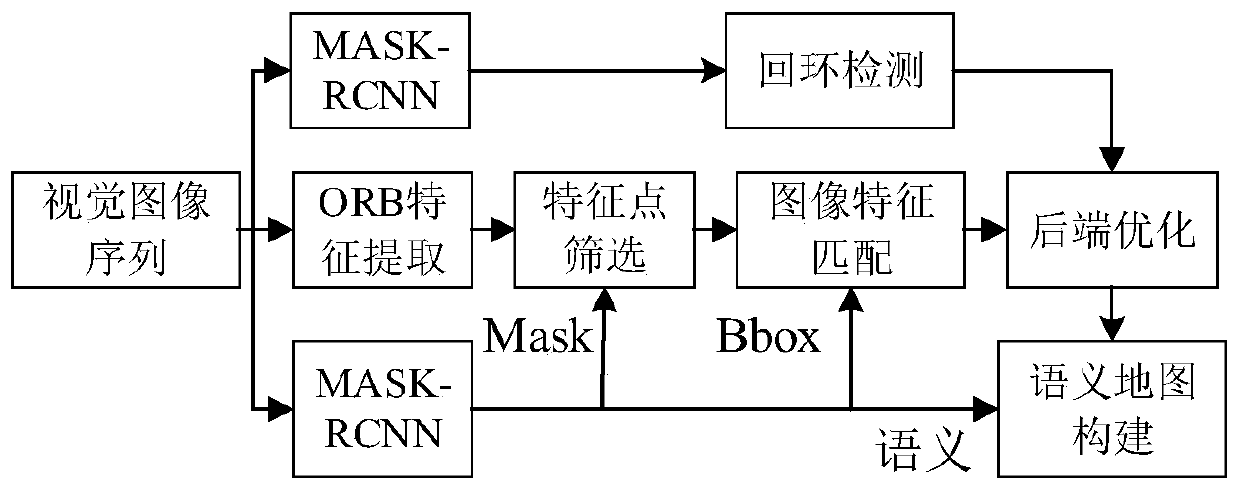

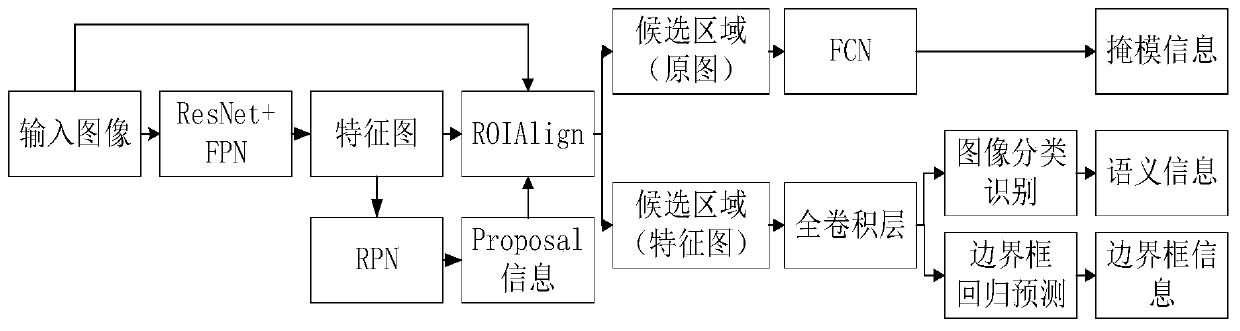

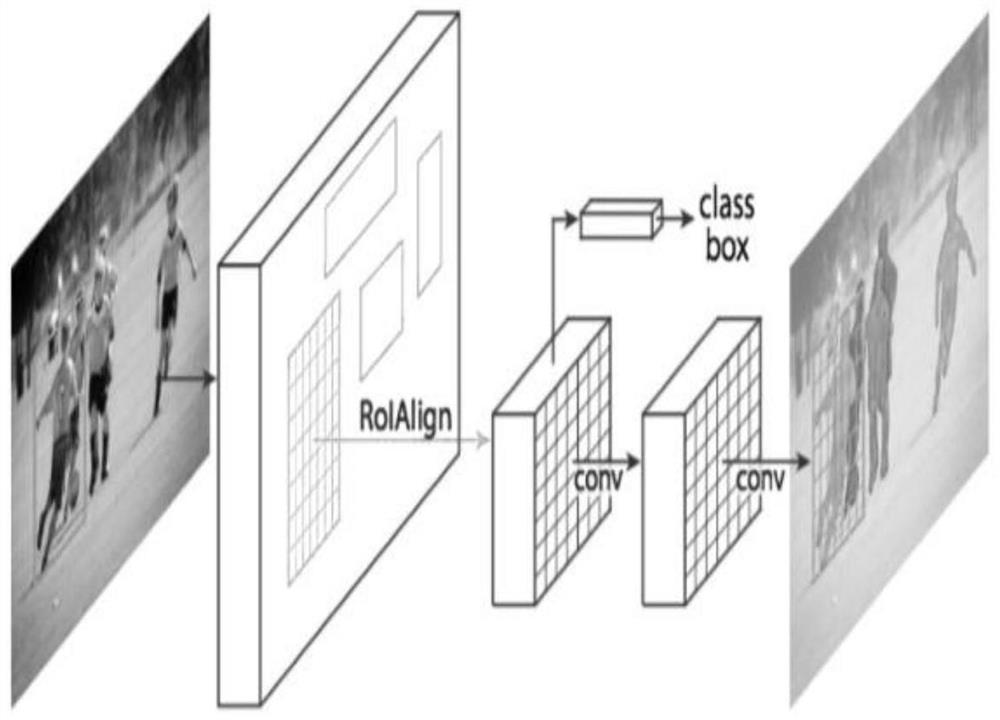

Visual SLAM method based on instance segmentation

Inactive | CN110738673A | Realize autonomous positioning and navigation | High precision | Image enhancement | Image analysis | Data set | Vision algorithms

The invention provides a visual SLAM algorithm based on instance segmentation, and the algorithm comprises the steps: firstly, extracting feature points of an input image, and carrying out instance segmentation of the image by employing a convolutional neural network; secondly, using the instance segmentation information to assist positioning, removing feature points prone to causing mismatches, and reducing the feature matching area; and finally, constructing a semantic map by using the semantic information obtained from instance segmentation, thereby realizing reuse of the established map by the robot and man-machine interaction. Experimental verification is carried out on image instance segmentation, visual positioning and semantic map construction by using a TUM data set. Experimental results show that combining image instance segmentation with visual SLAM improves the robustness of image feature matching, increases the feature matching speed, and improves the positioning accuracy of the mobile robot; the algorithm can also generate an accurate semantic map, so that the requirement of the robot for executing advanced tasks is met.

Owner:HARBIN UNIV OF SCI & TECH

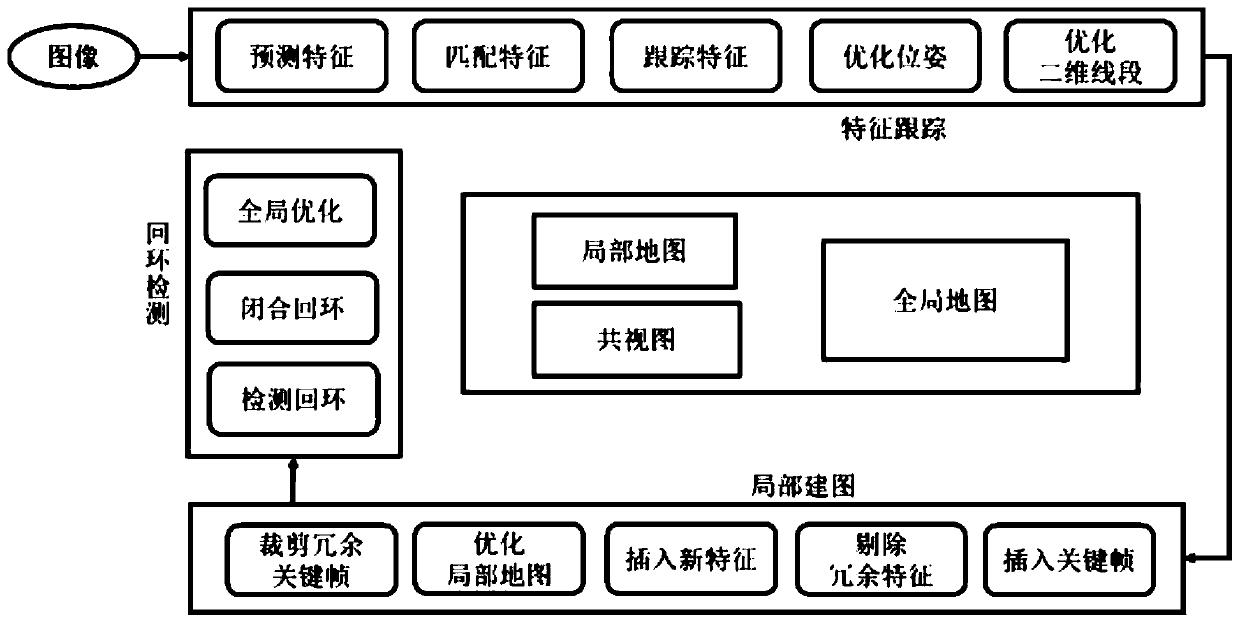

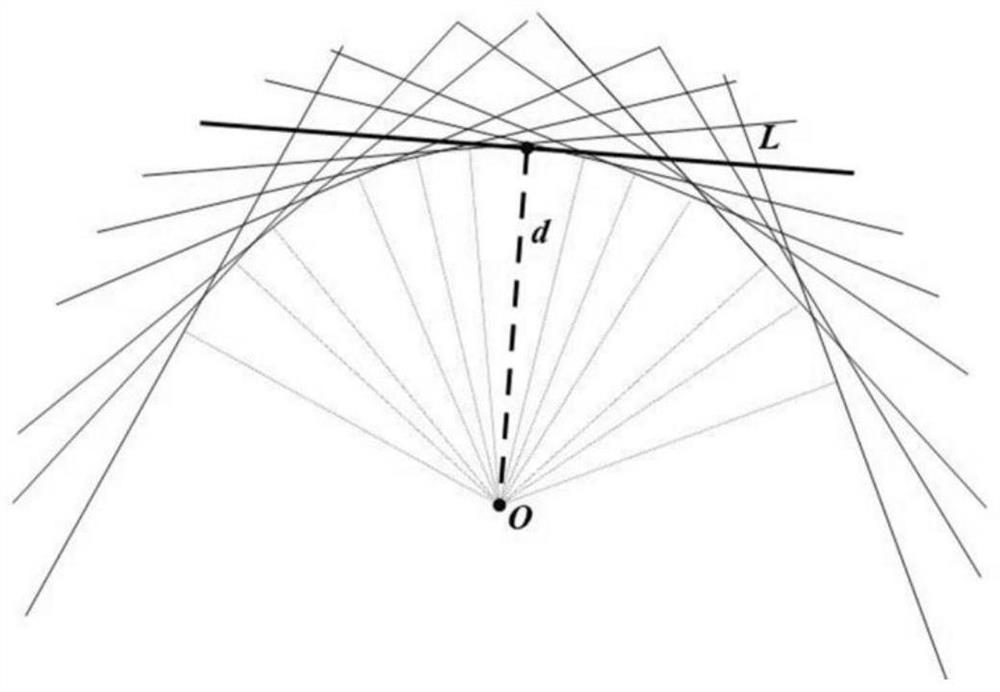

Visual SLAM method based on point-line fusion

Pending | CN110782494A | Extract complete | Fast extraction | Image analysis | 3D modelling | Image extraction | Radiology

The invention discloses a visual SLAM method based on point-line fusion, and the method comprises the steps: firstly inputting an image, predicting the pose of a camera, extracting feature points of the image, and estimating and extracting feature lines through the time sequence information among a plurality of visual angles; matching the feature points and the feature lines, tracking the features in preceding and following frames, establishing inter-frame association, optimizing the pose of the current frame, and optimizing the two-dimensional feature lines to improve the integrity of the feature lines; judging whether the current frame is a key frame, and if so, adding the key frame into the map, updating three-dimensional points and lines in the map, performing joint optimization on the current key frame and the adjacent key frames, and optimizing the pose and three-dimensional features of the camera; removing a part of the outlier points and redundant key frames; and finally, performing loop closure detection on the key frame, and if the current key frame and a previous frame show similar scenes, closing the loop and performing global optimization once to eliminate accumulated errors. Under a SLAM system framework based on points and lines, the line extraction speed and the feature line integrity are improved by utilizing the sequential relationship of multiple view angle images, so that the pose precision and the map reconstruction effect are improved.

Owner:BEIJING UNIV OF TECH

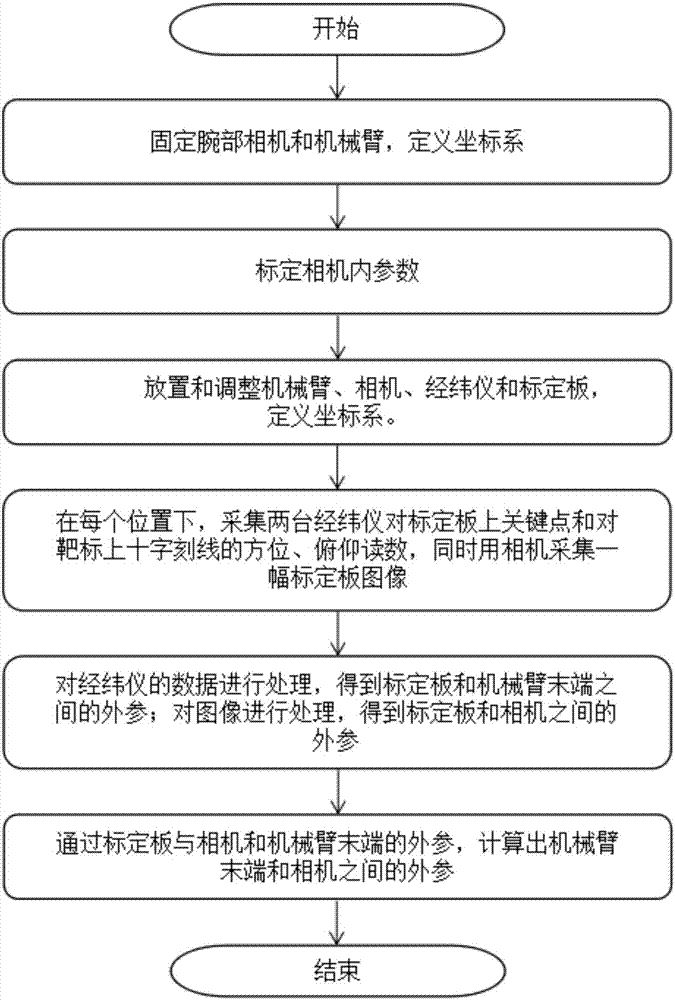

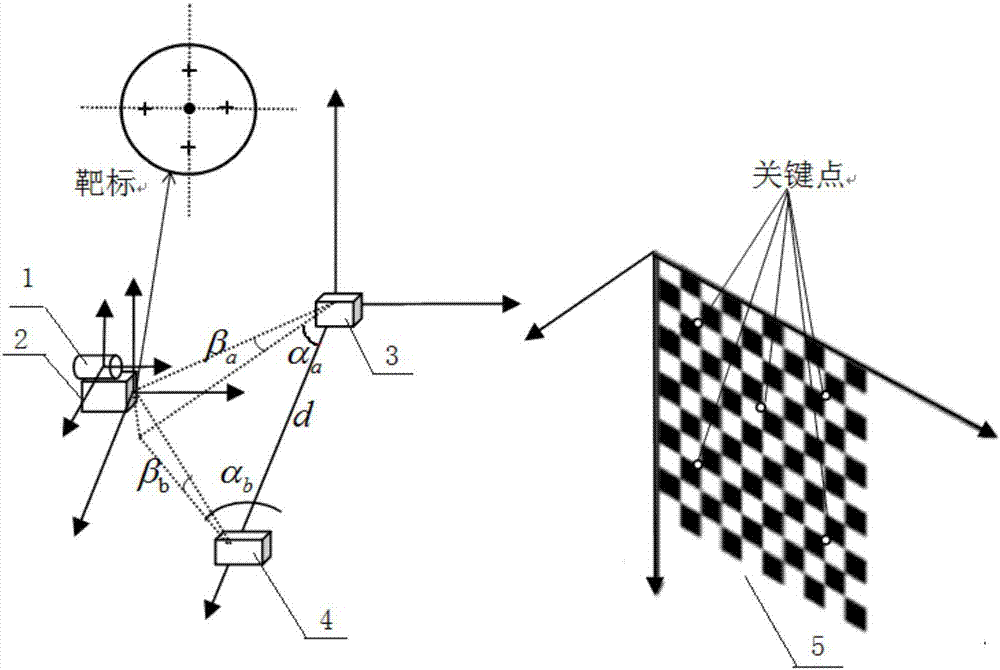

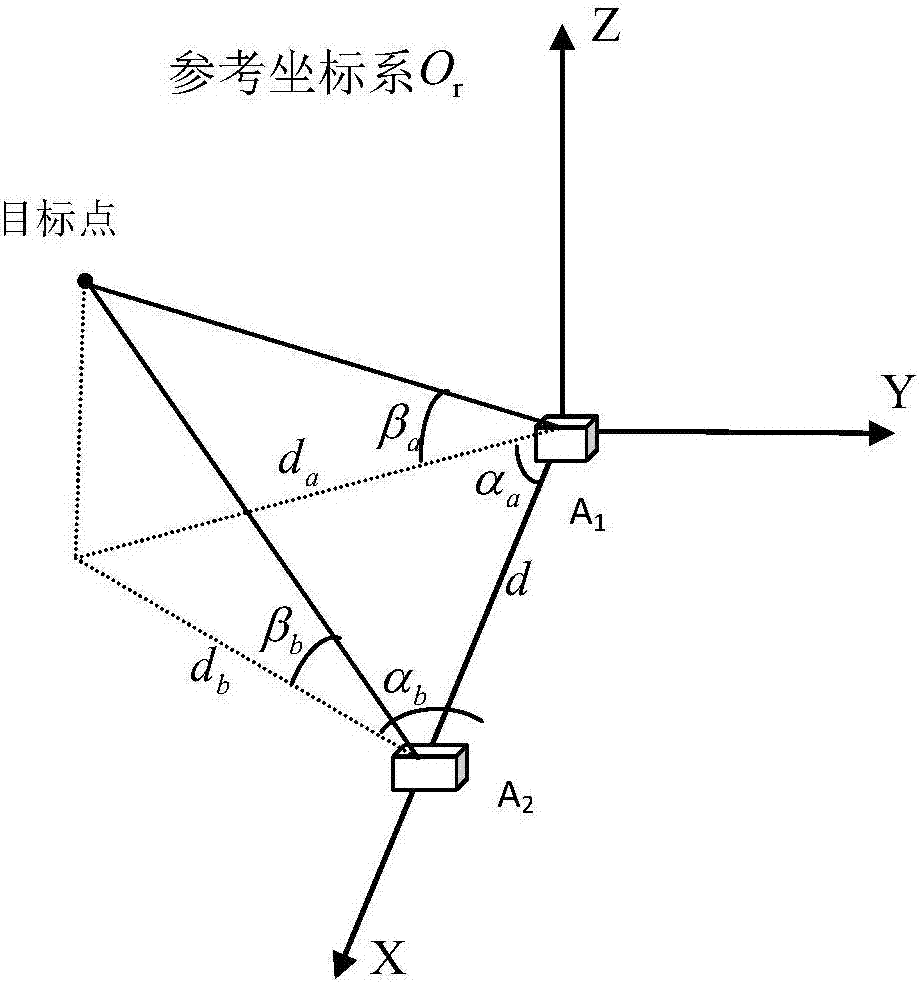

High-precision mechanical arm hand-eye camera calibration method and system

The invention discloses a high-precision mechanical arm hand-eye camera calibration method and system, and the method comprises the steps: collecting images of a calibration plate at different positions, and measuring the internal parameters of a camera and the external parameters between a wrist camera and the calibration plate through a visual method; measuring the external parameters between a mechanical arm target and the calibration plate through a high-precision self-collimating theodolite, a mechanical arm target design and key points selected from the calibration plate; and finally completing the calibration of the mechanical arm wrist camera through a visual computing method. According to the invention, the system employs two high-precision measurement instruments, takes a calibration plate coordinate system as the central coordinate system, achieves the high-precision coordinate system parameter conversion, and finally obtains the external parameters between the tail end of the mechanical arm and the camera. The method can achieve the high-precision calibration of the external parameters between the mechanical arm and the camera.

Owner:XI AN JIAOTONG UNIV +1
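
The coordinate chain in the abstract above, with the calibration plate as the central frame, can be sketched with homogeneous transforms. This is an illustration under assumptions: `T_a_b` is taken to map coordinates in frame b into frame a, the numeric poses are made up, and the helper names are hypothetical.

```python
def matmul(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[0] + [p[0]], r_t[1] + [p[1]], r_t[2] + [p[2]],
            [0.0, 0.0, 0.0, 1.0]]

def translation(x, y, z):
    """Pure-translation transform, used here only to build example poses."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]

def target_to_camera(T_target_board, T_cam_board):
    """Chain target->board (theodolite) with board->camera (vision)."""
    return matmul(T_target_board, invert_rigid(T_cam_board))
```

With `T_target_board = translation(1, 0, 0)` and `T_cam_board = translation(0, -2, 0)`, the result places the camera origin at (1, 2, 0) in the target frame. Relating the target frame to the arm's end frame would need one more known transform from the target design.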

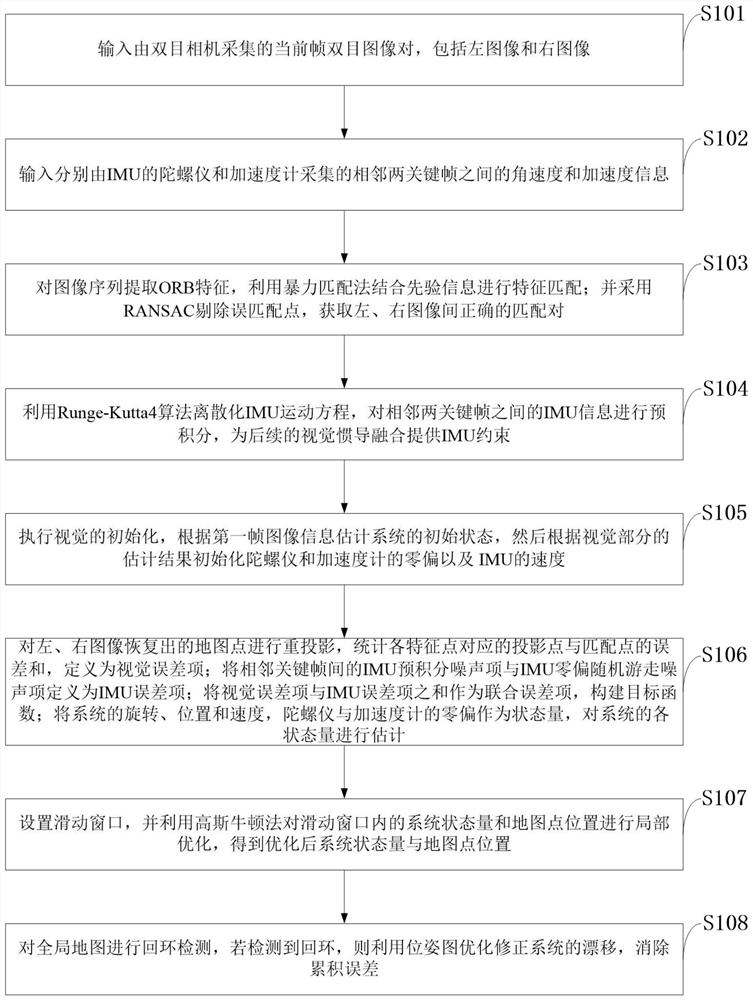

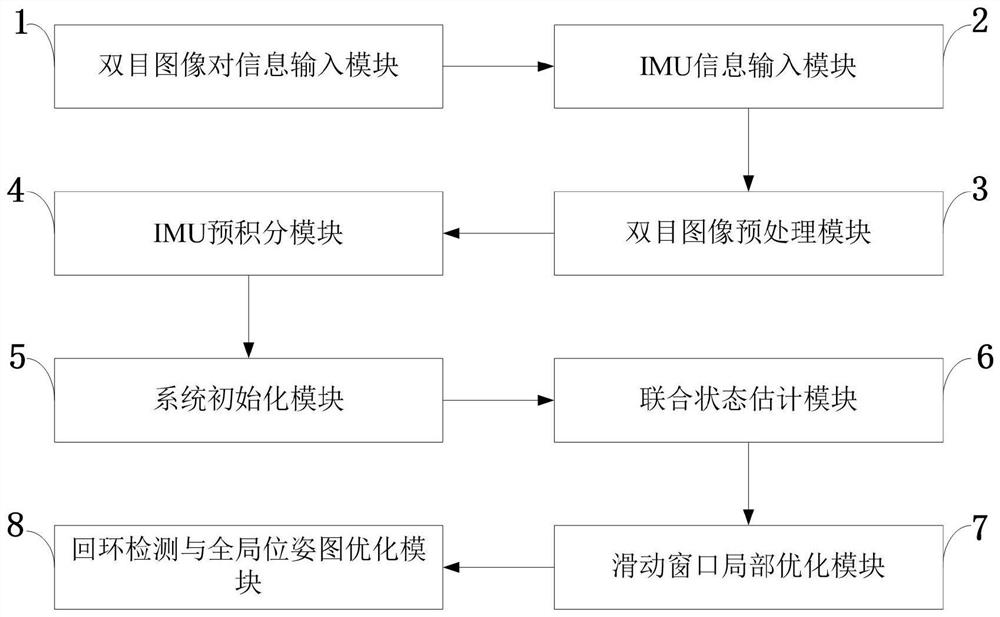

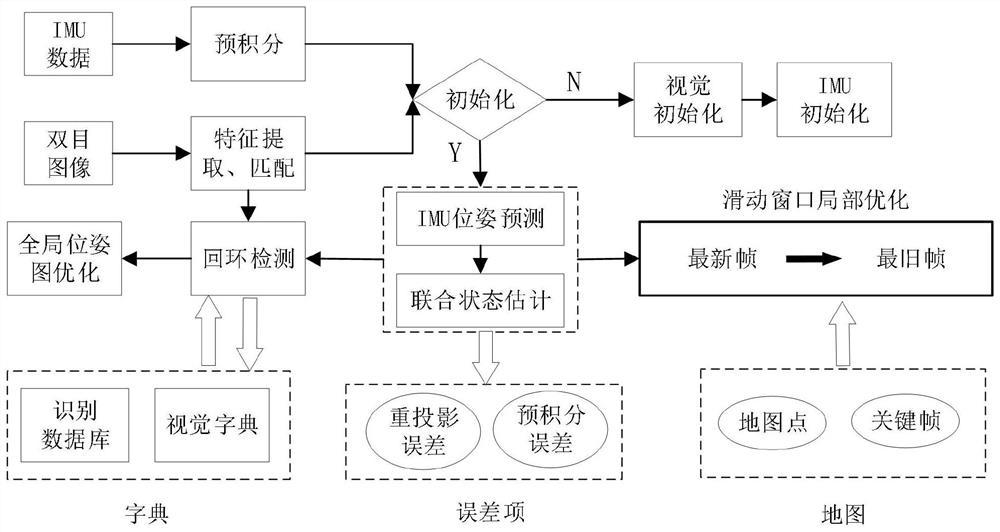

Visual inertial navigation fusion SLAM method based on Runge-Kutta4 improved pre-integration

Pending | CN112240768A | Avoid errors | Guaranteed accuracy | Image analysis | Navigational calculation instruments | Computer graphics (images) | Engineering

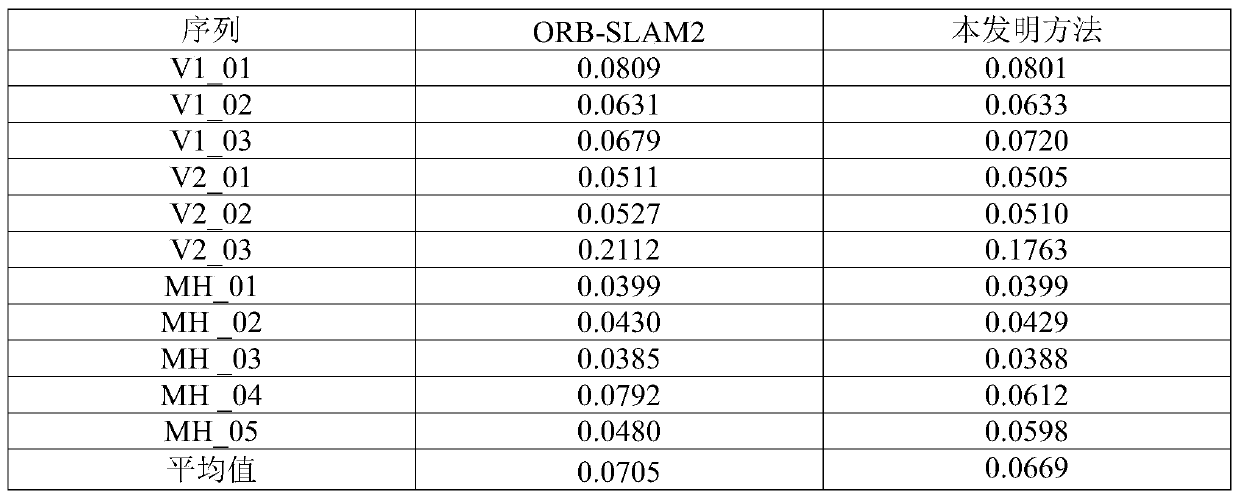

The invention belongs to the technical field of unmanned driving, discloses a visual inertial navigation fusion SLAM method based on Runge-Kutta4 improved pre-integration, and is used for solving the technical problems of low positioning precision, poor robustness and the like of an existing visual ORB-SLAM2 method in occasions of rapid movement, sparse environmental characteristics and the like. The method comprises the following steps: inputting binocular image pair information; inputting IMU information; preprocessing the binocular image; carrying out pre-integration on the IMU by utilizing a Runge-Kutta4 algorithm; initializing a system; estimating joint state; locally optimizing a sliding window; and carrying out loop detection and global pose graph optimization. According to the method, positioning estimation and map creation can be effectively carried out in scenes with different difficulty levels, and compared with an original visual ORB-SLAM2 method, the method has higher positioning precision and can be applied to the technical fields of unmanned system navigation, virtual reality and the like.

Owner:XIDIAN UNIV +1
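
For readers unfamiliar with the fourth-order Runge-Kutta scheme named in the abstract above, here is a generic sketch of one RK4 step on a scalar state. It is not the patent's pre-integration: a real IMU pre-integration would carry rotation, velocity and position states, and the names `rk4_step` and `integrate` are hypothetical.

```python
def rk4_step(f, t, y, h):
    """Advance y' = f(t, y) by one step of size h using classical RK4."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

def integrate(f, t0, y0, t1, steps):
    """Integrate from t0 to t1 in equal RK4 steps and return the final state."""
    h = (t1 - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        y = rk4_step(f, t, y, h)
        t += h
    return y
```

As a toy example, `integrate(lambda t, v: 2.0, 0.0, 0.0, 3.0, 6)` integrates a constant acceleration of 2 over 3 seconds and yields a velocity of about 6.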

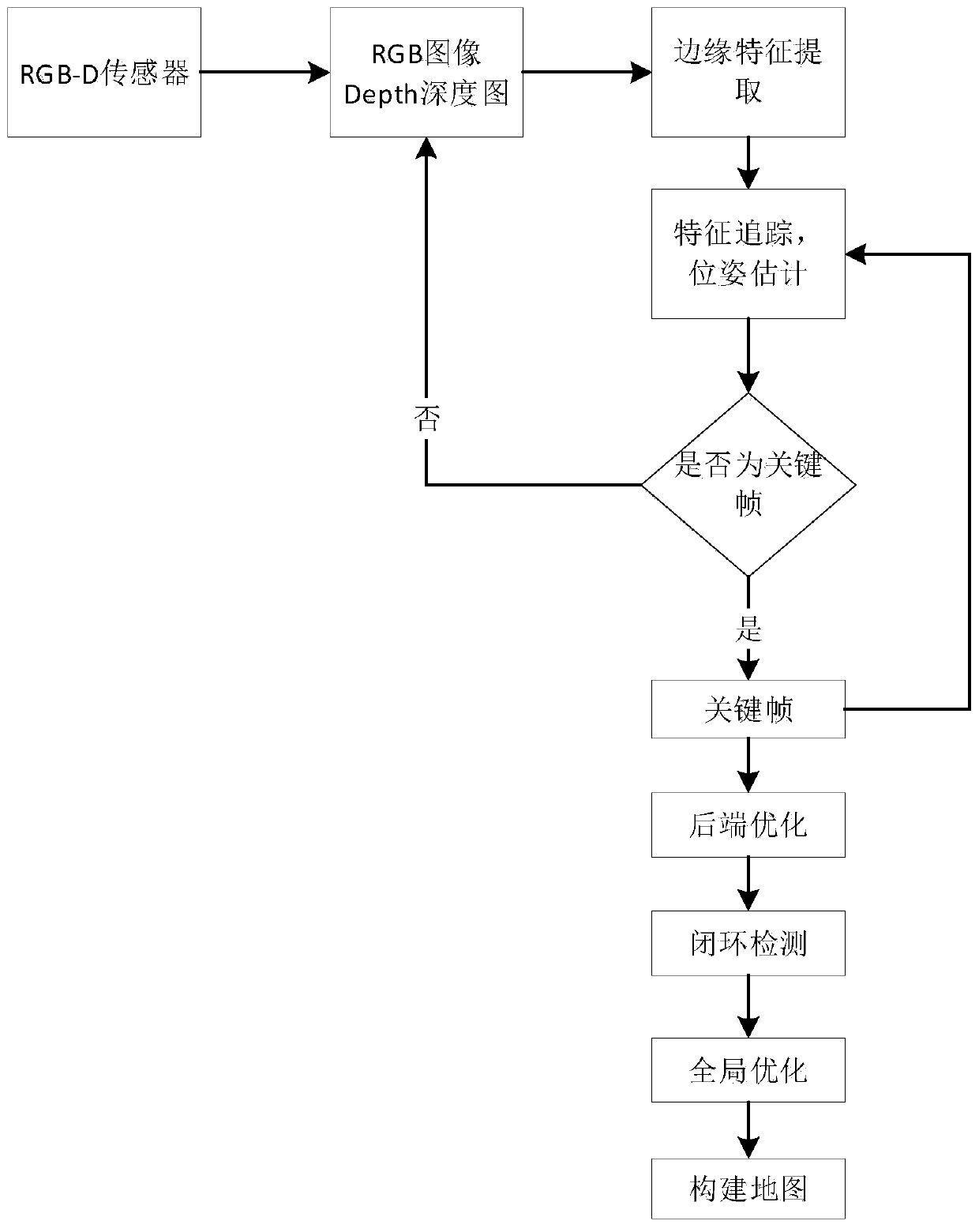

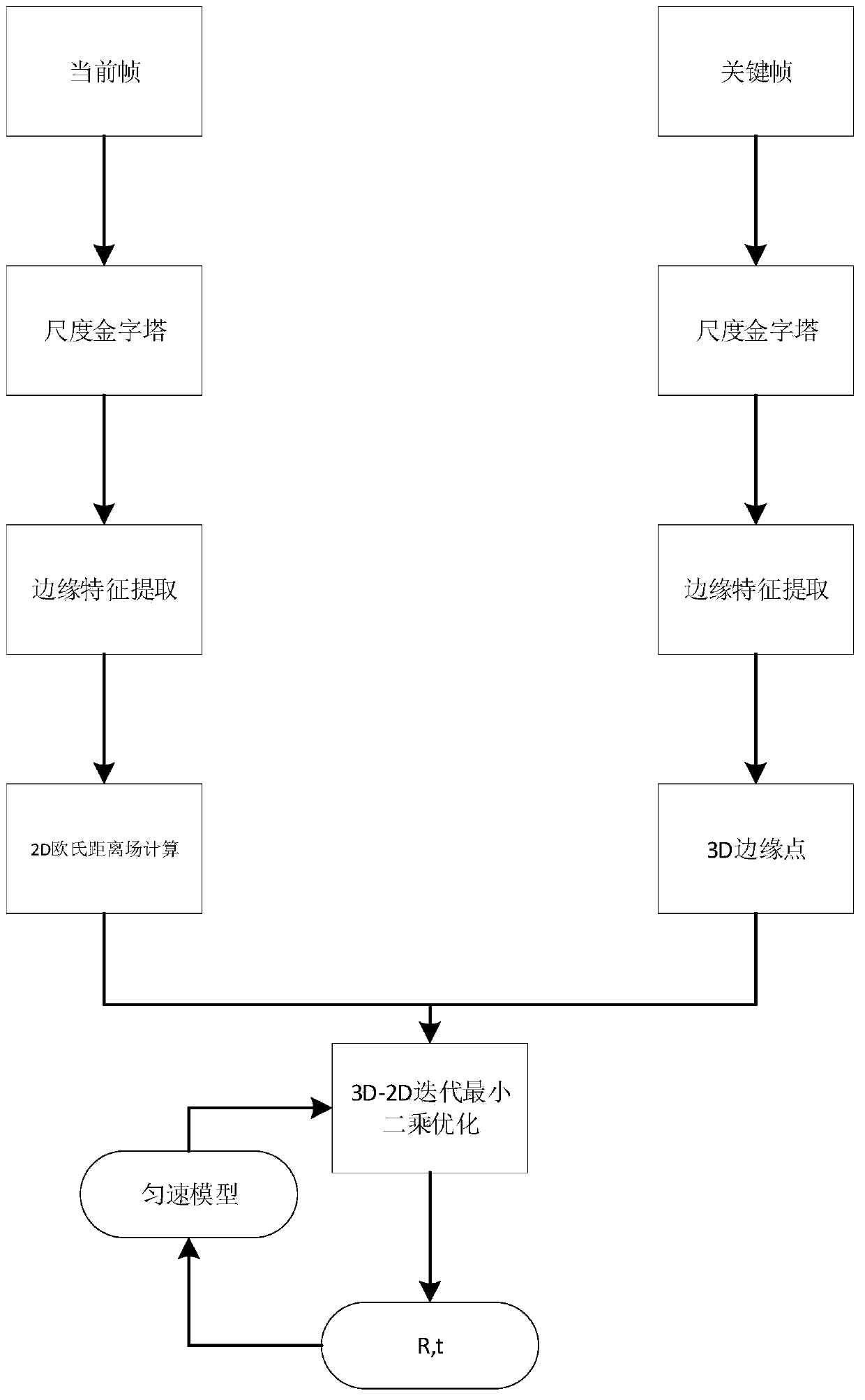

Visual SLAM method and system based on image edge features

Active | CN111060115A | Improve anti-interference ability | Promote reduction | Instruments for road network navigation | Image extraction | Radiology

The invention discloses a visual SLAM method based on image edge features. The visual SLAM method comprises the following steps: acquiring an image through a visual sensor; extracting edge features of the acquired image to perform pose estimation; carrying out nonlinear optimization according to a pose estimation result; performing closed-loop detection according to a nonlinear optimization result; carrying out global optimization according to a closed-loop detection result; and constructing a global map. Compared with the prior art, the method has the advantages that firstly, the image edge is an important component in the whole image and can always represent the whole image, so that the method has higher overall precision and signal-to-noise ratio and is more robust; secondly, the edge features can also operate in a robust mode under sparse textures, and the anti-interference capacity to illumination changes is high; and finally, the image edge features are the simplest expressions of the real scene, and the established map can well restore the real scene.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

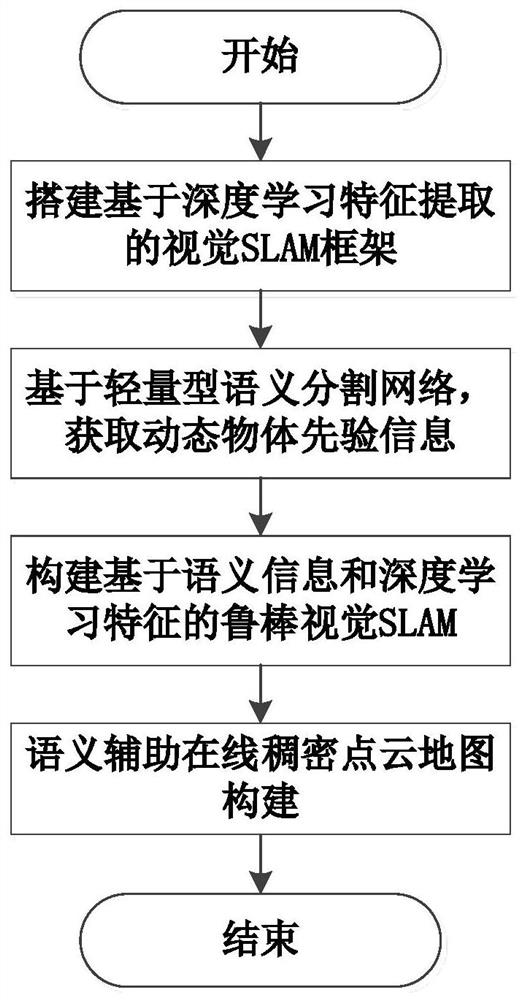

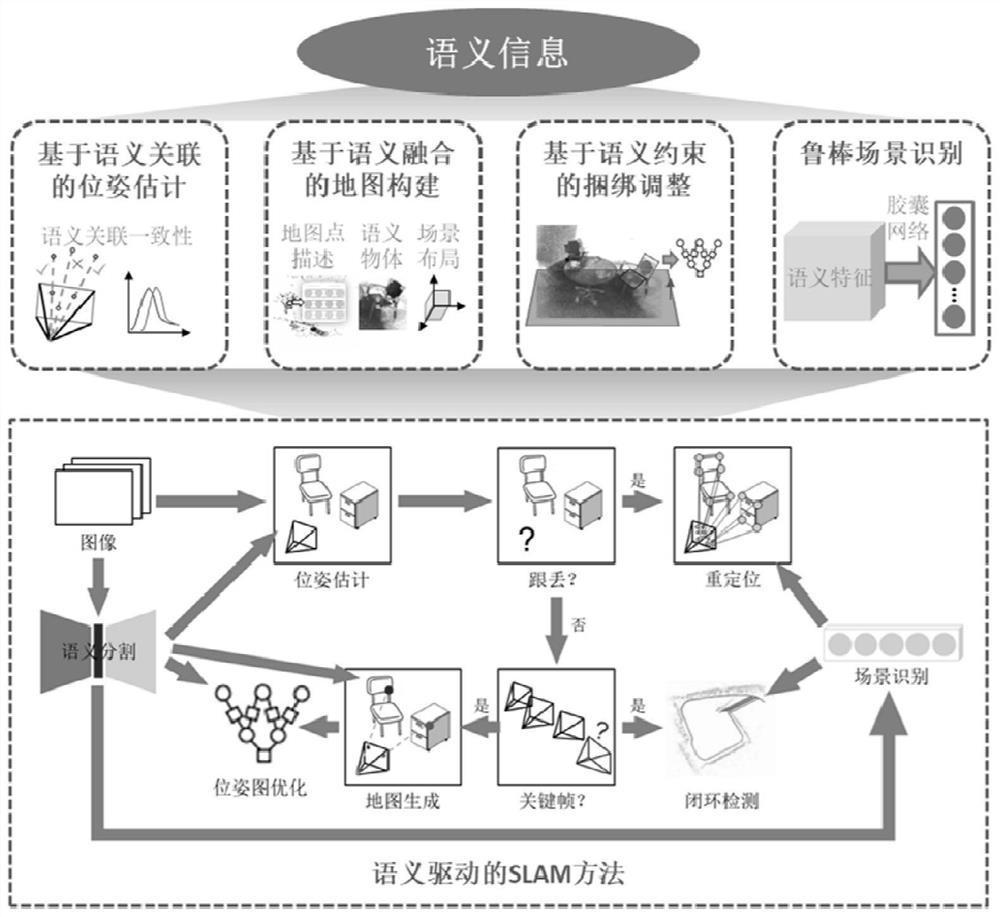

Robust vision SLAM method based on semantic prior and deep learning features

Active | CN111814683A | Robust Visual SLAM | Improve binding | Programme-controlled manipulator | Character and pattern recognition | Feature extraction | Point cloud

The invention relates to a robust vision SLAM method based on semantic prior and deep learning features. The method comprises the following steps: (1) building a vision SLAM framework based on deep learning feature extraction, enabling a tracking thread of the framework to input an image obtained by a camera sensor into a deep neural network, and extracting depth feature points; (2) based on a lightweight semantic segmentation network model, performing semantic segmentation on the input video sequence to obtain a semantic segmentation result, and obtaining semantic prior information of dynamic objects in the scene; (3) filtering the depth feature points extracted in step (1) according to the semantic prior information from step (2), removing the feature points on dynamic objects, and thereby improving the positioning precision in the dynamic scene; and (4) obtaining a static point cloud corresponding to each key frame selected by the tracking thread according to the semantic segmentation result from step (2), performing static point cloud splicing according to the key frame poses obtained in step (3), and constructing a dense global point cloud map in real time.

Owner:BEIHANG UNIV
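
Step (3) of the abstract above, discarding feature points that lie on dynamic objects, can be sketched as a lookup into a per-pixel label mask. The mask layout (rows of integer class labels), the choice of which labels count as dynamic, and the name `remove_dynamic_keypoints` are assumptions made for illustration.

```python
def remove_dynamic_keypoints(keypoints, label_mask, dynamic_labels):
    """Keep only keypoints whose pixel is not labelled as a dynamic class.

    keypoints      -- iterable of (x, y) image coordinates
    label_mask     -- 2D list indexed as label_mask[row][col]
    dynamic_labels -- set of class labels treated as dynamic (assumed)
    """
    height = len(label_mask)
    width = len(label_mask[0]) if height else 0
    kept = []
    for x, y in keypoints:
        row, col = int(round(y)), int(round(x))
        inside = 0 <= row < height and 0 <= col < width
        if inside and label_mask[row][col] in dynamic_labels:
            continue  # the point lies on a dynamic object, so drop it
        kept.append((x, y))
    return kept
```

Points that fall outside the mask are kept in this sketch; a stricter pipeline might drop them instead.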

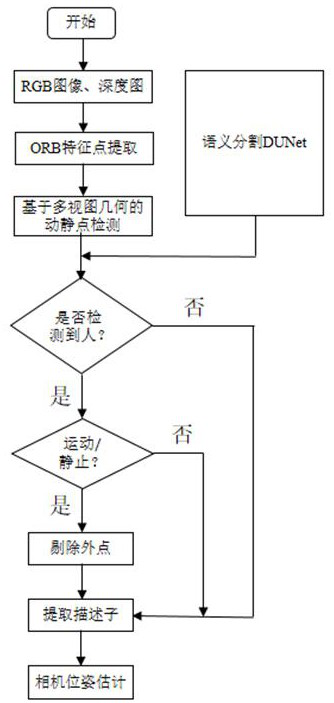

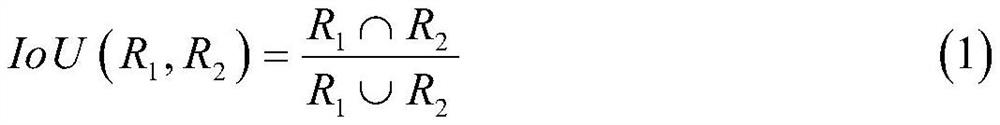

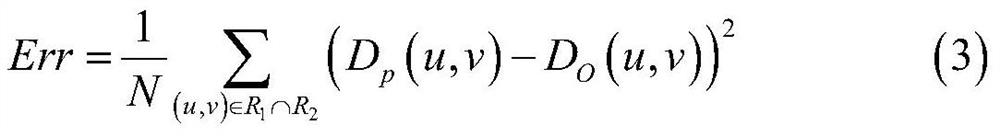

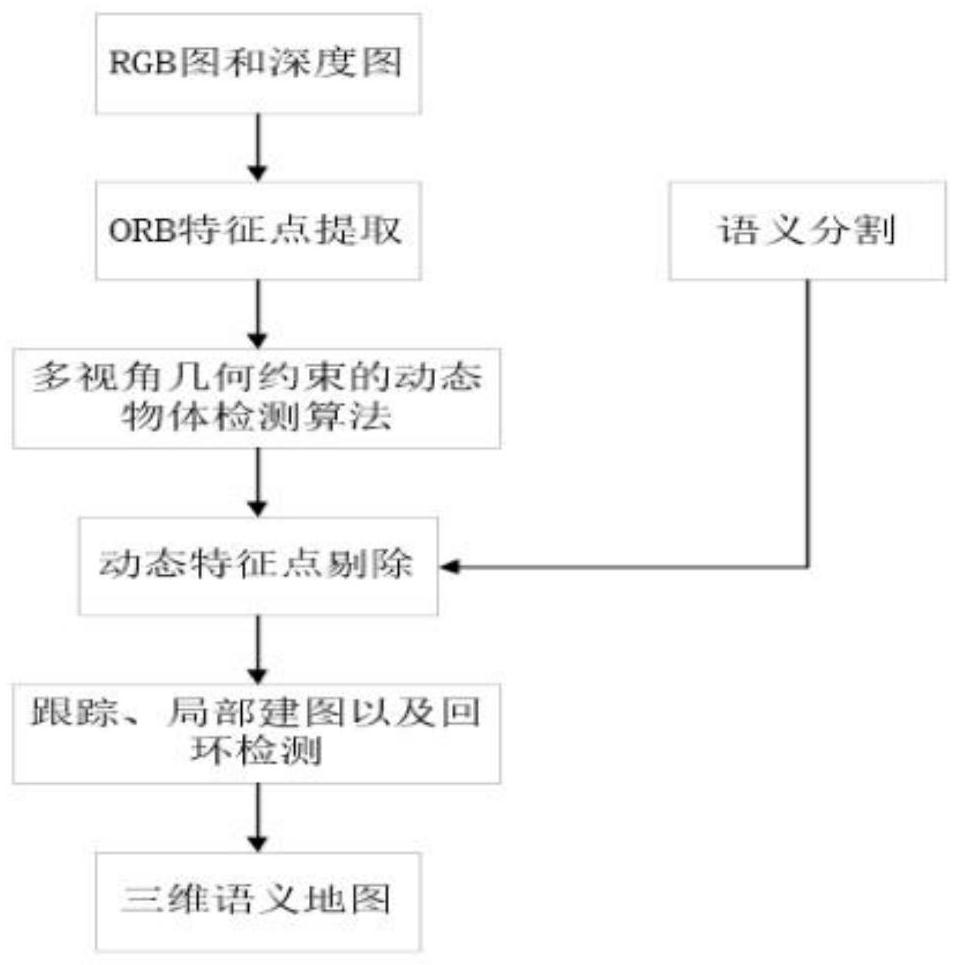

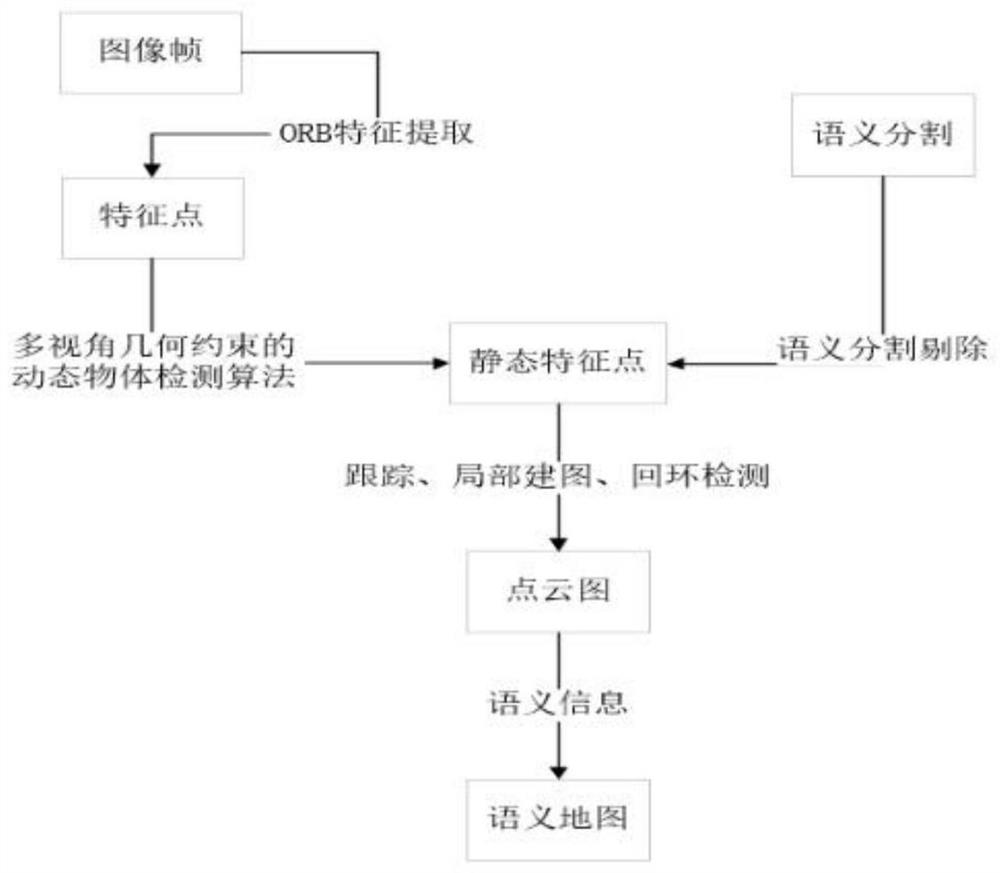

Visual SLAM method based on semantic segmentation of deep learning

Pending | CN112132897A | High precision | Good precision | Image enhancement | Image analysis | Object based | Feature extraction

The invention discloses a visual SLAM method based on semantic segmentation of deep learning, and relates to the technical field of computer vision sensing. The method comprises the following steps: acquiring an image through an RGBD depth camera, and performing feature extraction and semantic segmentation to obtain extracted ORB feature points and a pixel-level semantic segmentation result; detecting a moving object based on a multi-view geometric dynamic and static point detection algorithm, and deleting ORB feature points; and executing initialization mapping: sequentially executing tracking, local mapping and loopback detection threads, constructing an octree three-dimensional point cloud map of a static scene according to the key frame pose and a synthetic image obtained by a static background restoration technology, and finally realizing a dynamic scene-oriented visual SLAM method based on deep learning semantic segmentation. The precision of camera pose estimation and trajectory evaluation of the visual SLAM in the dynamic scene is improved, and the robustness, stability and accuracy of the performance of a traditional visual SLAM system in the dynamic scene are enhanced.

Owner:ARMY ENG UNIV OF PLA

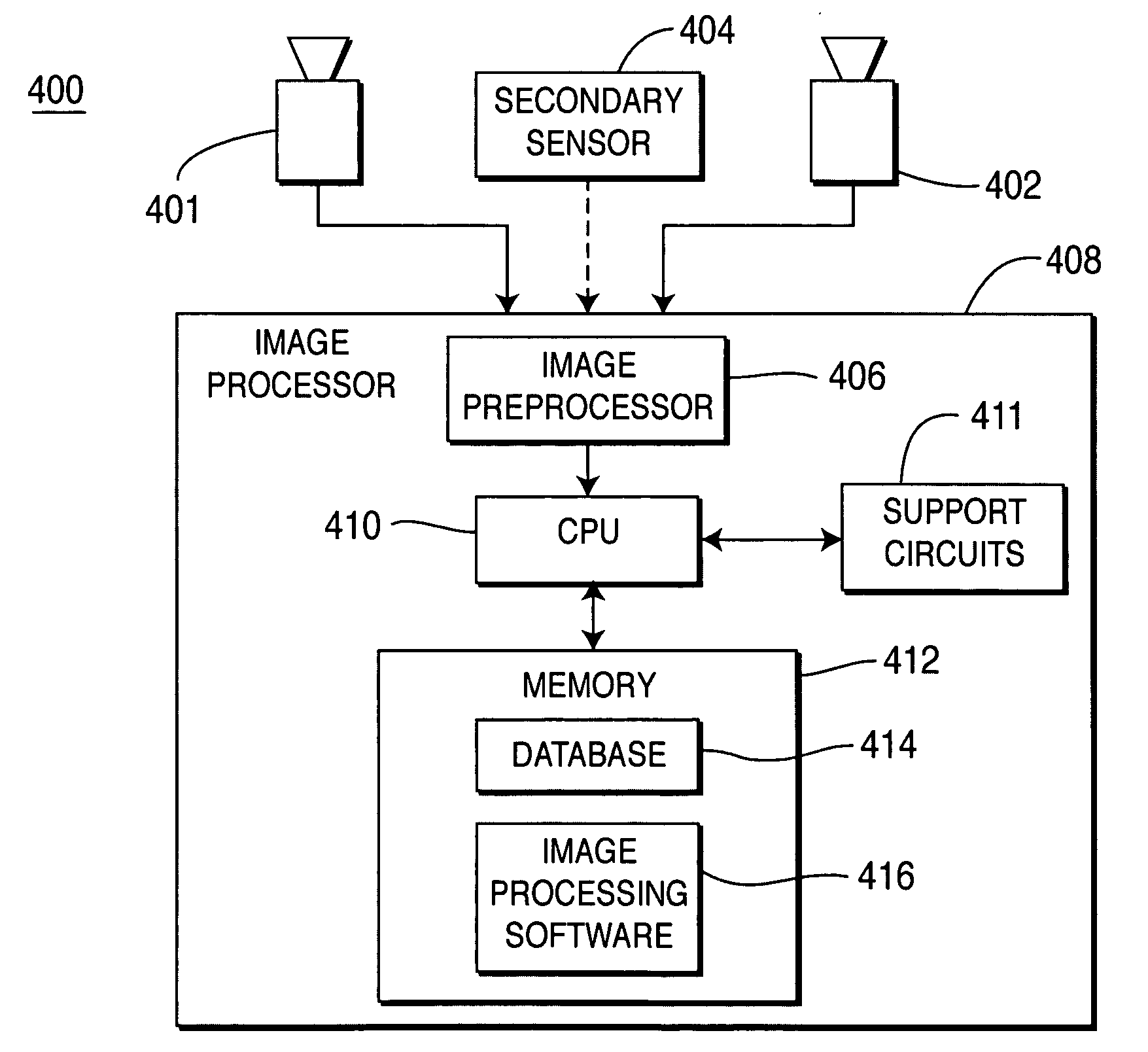

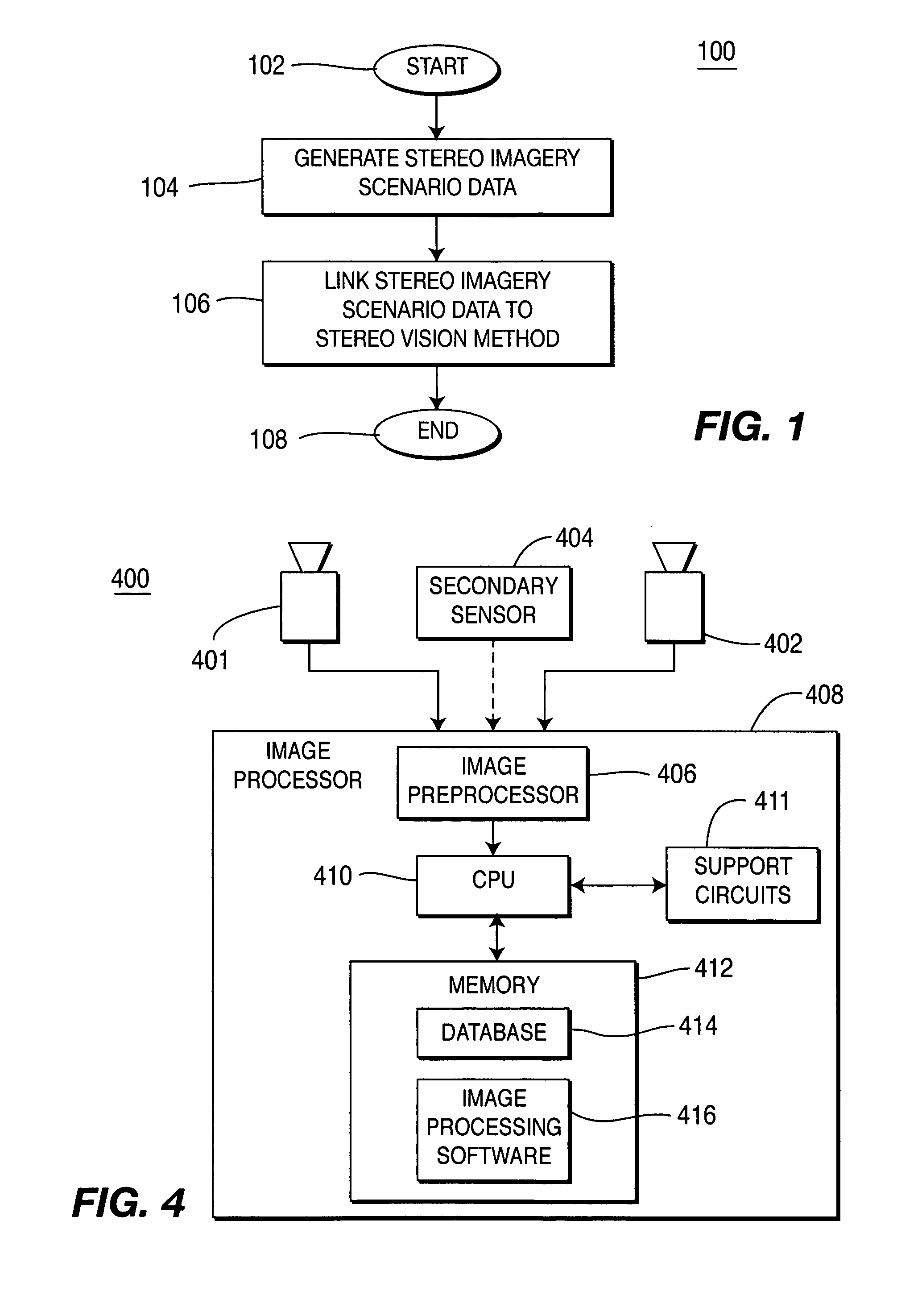

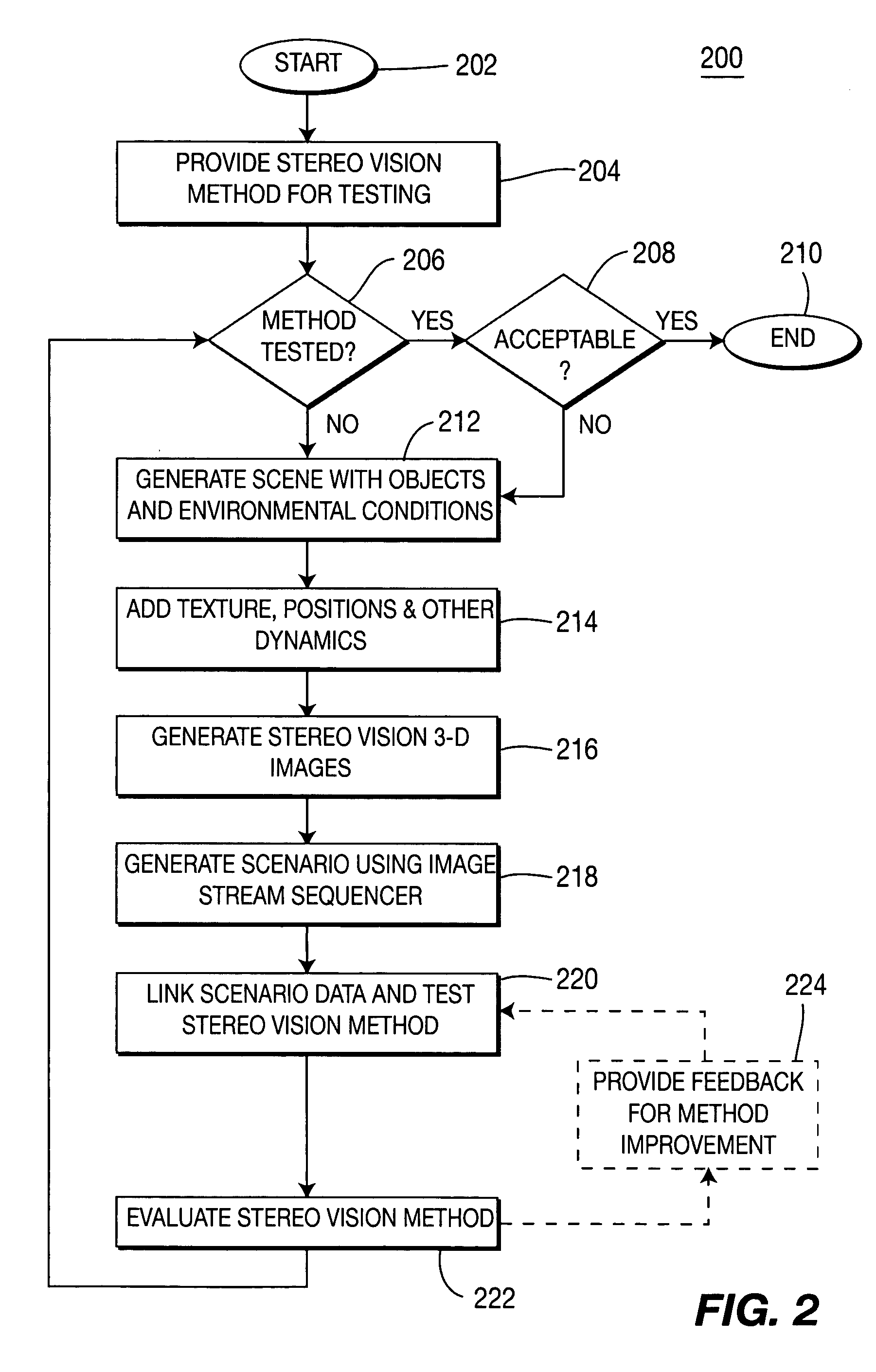

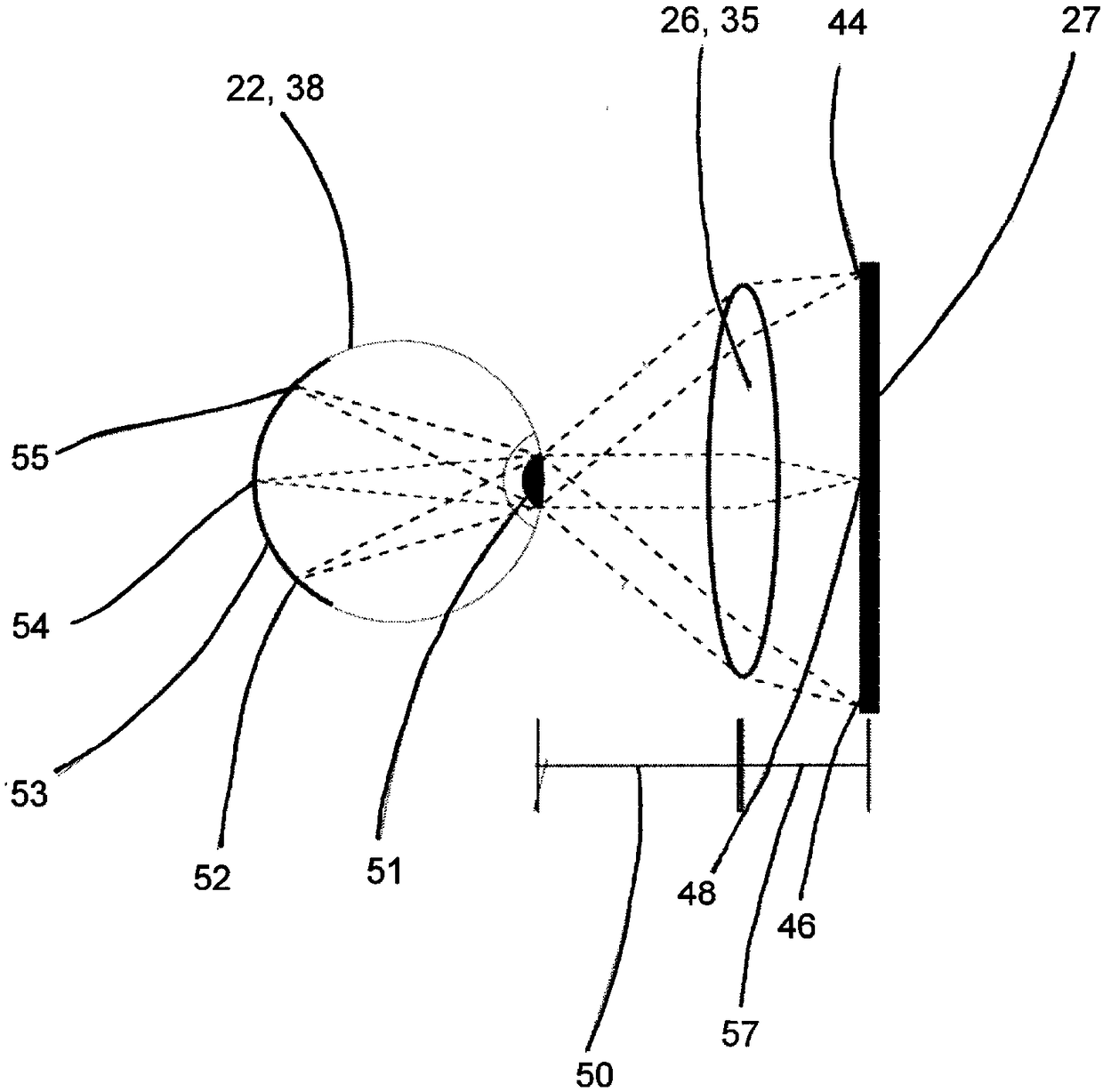

Method and apparatus for testing stereo vision methods using stereo imagery data

A method and apparatus for generating stereo imagery scenario data to be used to test stereo vision methods such as detection, tracking, classification, steering, collision detection and avoidance methods is provided.

Owner:SRI INTERNATIONAL

Finger tip point extraction method based on pixel classifier and ellipse fitting

Inactive | CN105046199A | Realize detection | Accurate detection | Character and pattern recognition | Pattern recognition | Skin colour

The invention relates to a finger tip point extraction method based on a pixel classifier and ellipse fitting. The coordinate of a palm point provided by OpenNI (open natural interaction) is employed to segment a hand area, a Bayes skin color model is employed to remove non-skin-color areas, and the hand area is accurately extracted. A pixel classifier is defined for clustering the finger areas, the contour of each finger area is extracted, and the least squares method is employed for ellipse fitting; of the two endpoints of the major axis of the ellipse, the one farther from the palm point is taken as the finger tip point. The method is advantageous in that finger tip points are accurately detected in real time based on a visual-only method, and compared with data glove and color glove methods, the method is more natural and comfortable.

Owner:JILIN JIYUAN SPACE TIME CARTOON GAME SCI & TECH GRP CO LTD
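
The final selection rule in the abstract above (take the major-axis endpoint farther from the palm point) can be sketched as follows. Note the substitution: instead of the least-squares ellipse fit named in the abstract, this sketch estimates the major axis from second-order moments (a PCA-style approximation), and the names `major_axis_endpoints` and `fingertip` are hypothetical.

```python
import math

def major_axis_endpoints(points):
    """Approximate the major-axis endpoints of a 2D point set.

    The axis direction comes from the covariance of the points; the
    endpoints are the extreme projections onto that direction.
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points) / n
    syy = sum((y - cy) ** 2 for _, y in points) / n
    sxy = sum((x - cx) * (y - cy) for x, y in points) / n
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)  # principal axis angle
    dx, dy = math.cos(theta), math.sin(theta)
    proj = [(x - cx) * dx + (y - cy) * dy for x, y in points]
    lo, hi = min(proj), max(proj)
    return (cx + lo * dx, cy + lo * dy), (cx + hi * dx, cy + hi * dy)

def fingertip(points, palm):
    """Pick the major-axis endpoint that lies farther from the palm point."""
    a, b = major_axis_endpoints(points)
    return max((a, b), key=lambda p: math.dist(p, palm))
```

For a finger region that extends upward from the palm, the function returns the upper endpoint, since it is the one farther from `palm`.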

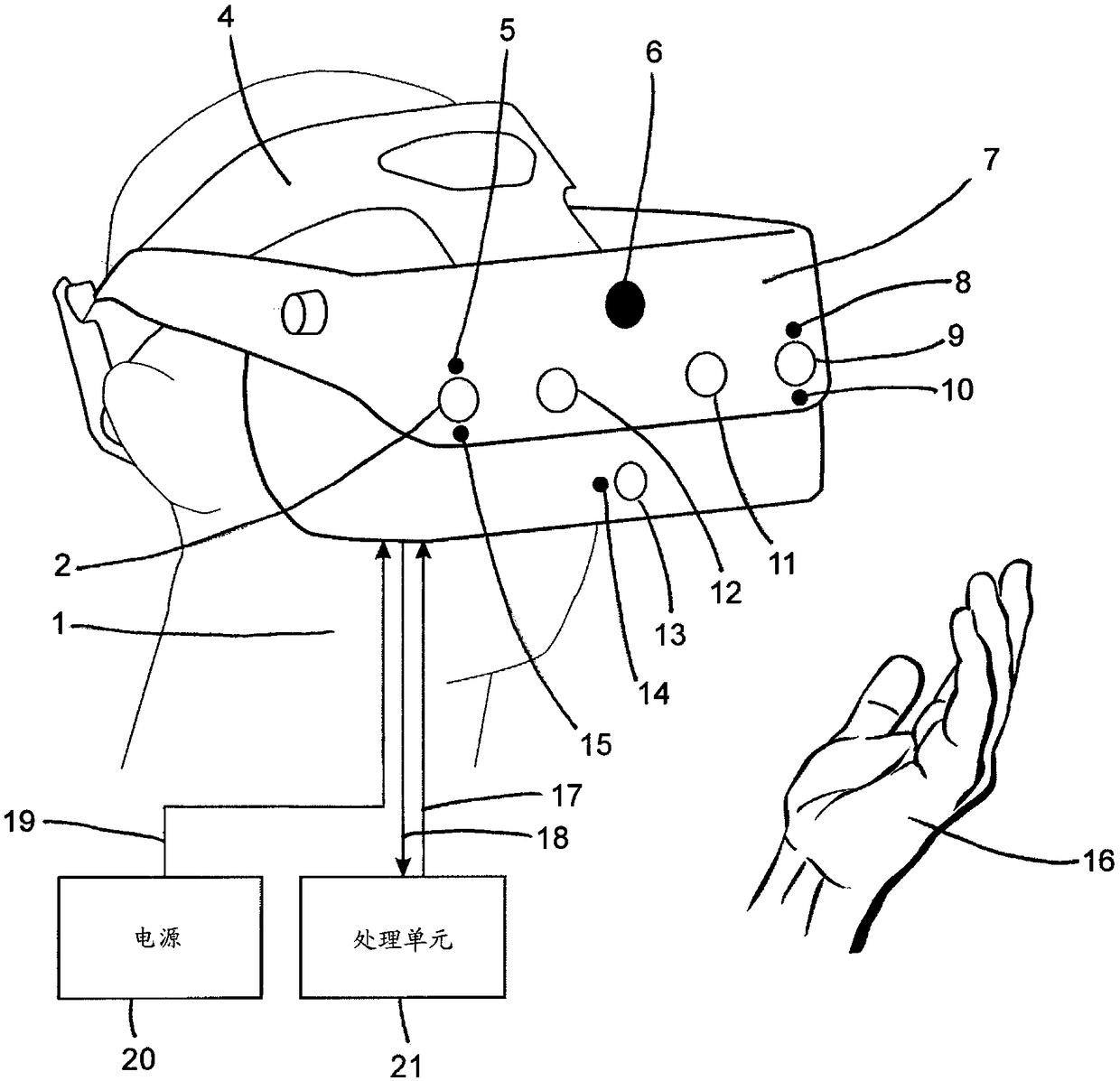

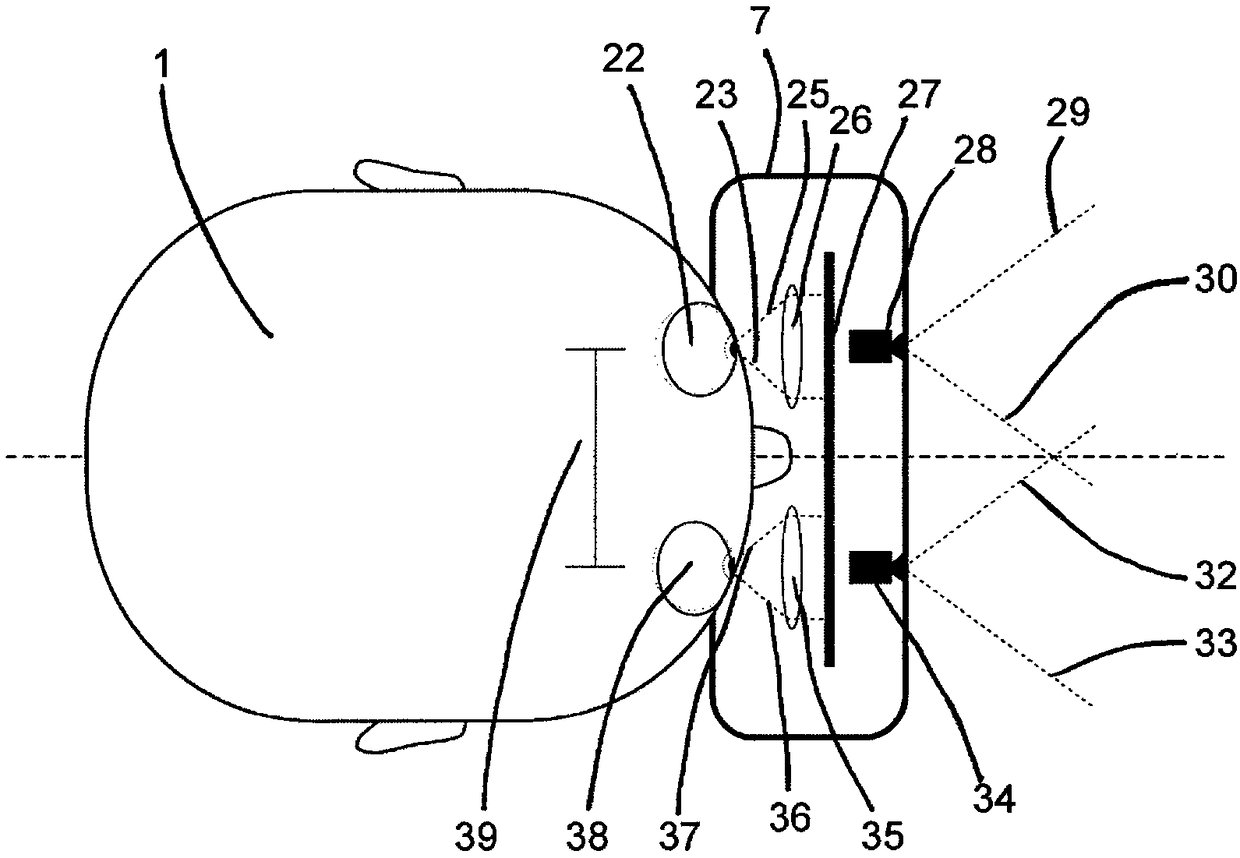

Head-mounted display for virtual and mixed reality with inside-out positional, user body and environment tracking

Active | CN109477966A | Image data processing | Input/output processes for data processing | Mixed reality | High frame rate

A Head-Mounted Display (HMD) system, together with associated techniques for performing accurate and automatic inside-out positional, user body and environment tracking for virtual or mixed reality, is disclosed. The system uses computer vision methods and data fusion from multiple sensors to achieve real-time tracking. High frame rate and low latency are achieved by performing part of the processing on the HMD itself.

Owner:APPLE INC

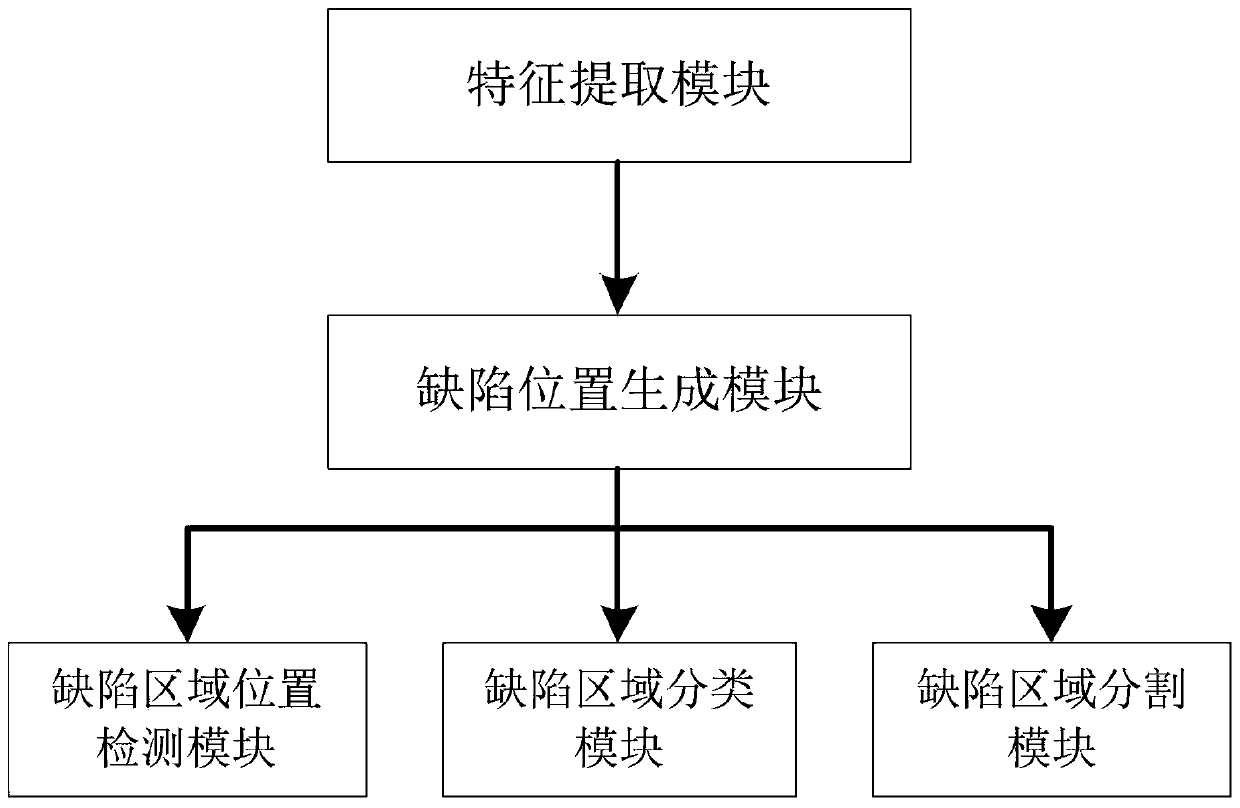

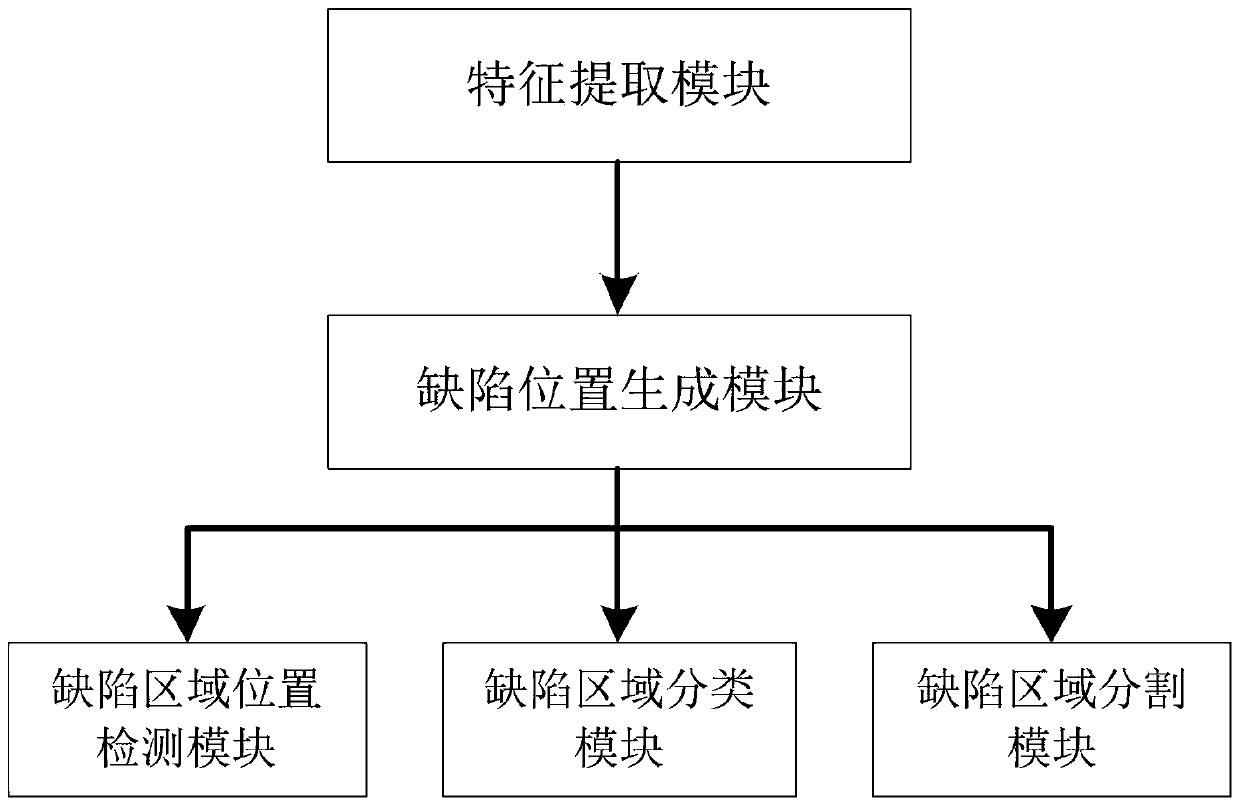

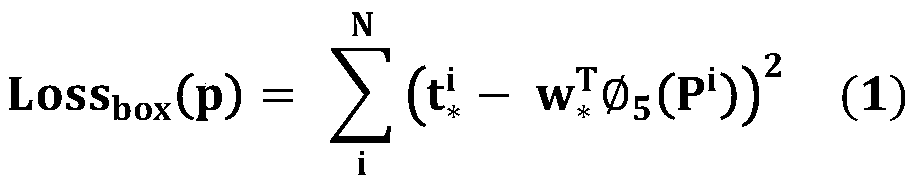

Concrete structure surface defect automatic detection method based on computer vision

Pending | CN111127416A | Solve the high false positive rate | Solve practical problems such as poor versatility | Image enhancement | Image analysis | Visual technology | Engineering

The invention belongs to the technical field of computer vision, and particularly relates to a concrete structure surface defect automatic detection method based on computer vision, which comprises the following steps: performing time axis up-sampling on video data to obtain an image, and inputting the image into a deep convolutional neural network model to obtain a defect position, a defect category and a defect segmentation effect. In actual concrete structure detection, the method provided by the invention is based on a video image recognition technology under a deep learning framework, and the actual problems of high false detection rate, poor universality and the like in the conventional computer vision method are fundamentally solved; from video image data processing and final result output, the method has the advantages of being high in automation degree, good in real-time performance, high in accuracy, good in universality, convenient to upgrade and maintain in the later period and the like.

Owner:WUHAN UNIV

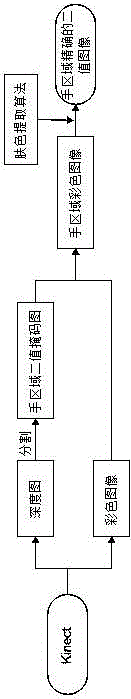

Wrist point and arm point extraction method based on depth camera

Active | CN104899591A | Stable extraction | Simple method | Character and pattern recognition | Color image | Hand parts

The invention relates to a wrist point and arm point extraction method based on a depth camera, applied to the fields of virtual reality and augmented reality. The method comprises: segmenting hand areas according to color images and depth maps collected by a Kinect depth camera and the three-dimensional palm point positions provided by OpenNI / NITE (an open natural interaction API); extracting accurate hand areas with a Bayesian skin color model, and extracting wrist points and arm points with a vision method; and designing a plurality of man-machine interaction gestures based on the extracted wrist points and arm points and the palm points provided by the NITE library. Since the Kinect depth camera was released, many researchers have used it for research on gesture recognition; however, research based on wrists and arms is scarce, and vision-based methods for extracting wrist points and arm points are rare. The wrist point and arm point extraction method of the invention has a low computational cost, is simple to operate, and can extract the wrist points and arm points in a timely, stable and accurate manner.

Owner:JILIN JIYUAN SPACE TIME CARTOON GAME SCI & TECH GRP CO LTD

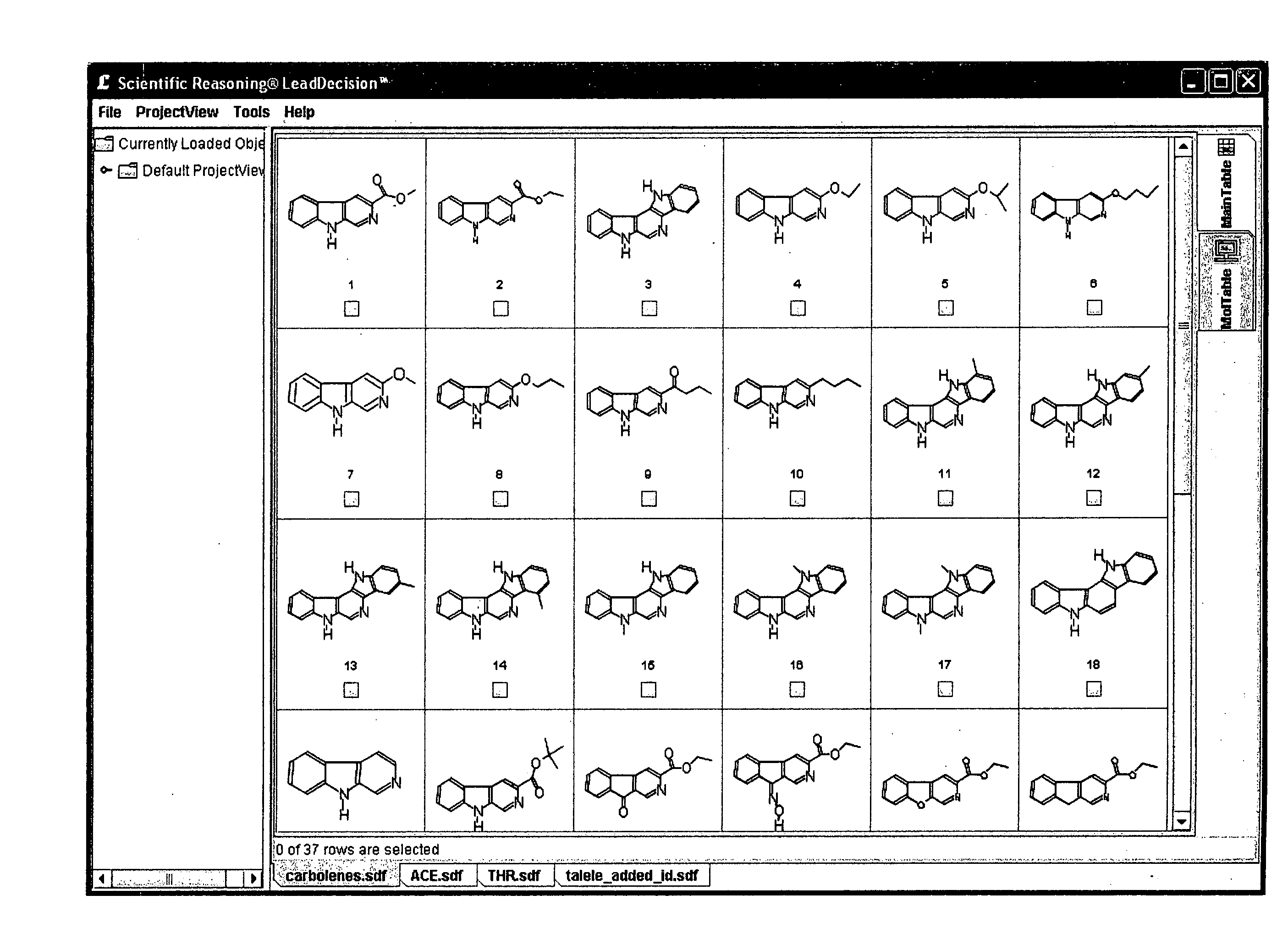

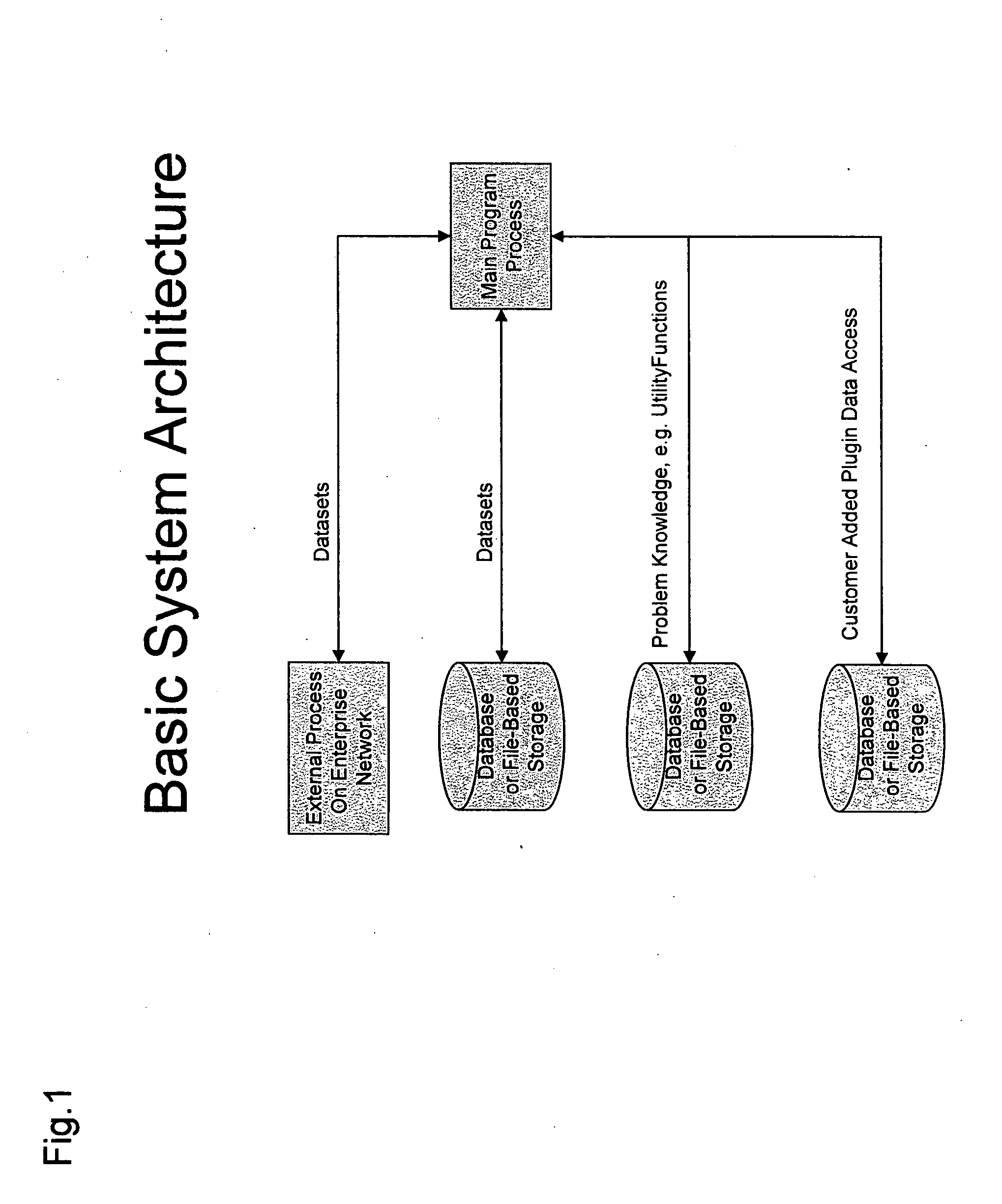

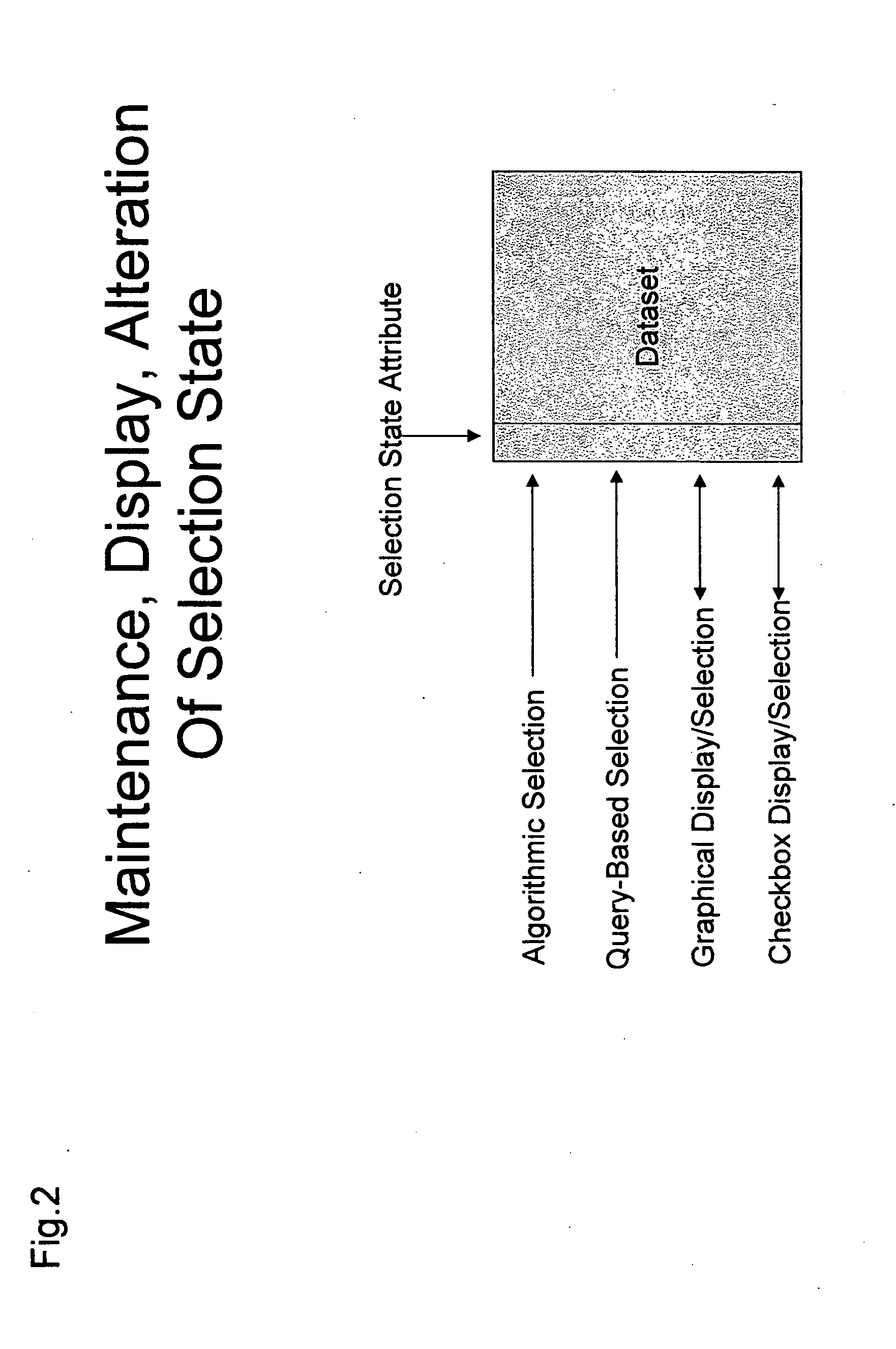

System for visualization and analysis of numerical and chemical information

Inactive | US20070276636A1 | Easy to handle | Easy to process | Computation using non-denominational number representation | Chemical data visualisation | Data set | Cognitive capability

The invention contains methods for identifying, parameterizing, saving and utilizing Multicriterion Decision Making (MCDM) functions to analyze data sets. The invention contains methods for performing selections with individual interaction with checkboxes, plots, algorithms, and queries, all linked to a single selection state attribute that is automatically added to each dataset. Successive selection steps may be combined with boolean operators: SET / AND / OR. Selection state is sortable to allow selected objects to be visually collected. Together, this provides a powerful suite for MCDM, which is true Decision Support. The invention contains methods for visualizing molecular structures which allow greater cognitive power to be brought to bear on crucial aspects of many types of structural comparison and analysis, using visual topological cueing. The invention contains visual methods for enhancing an analyst's ability to identify and robustly recognize relationships between molecular structures and the properties they give rise to.

Owner:WYTHOFF BARRY JOHN
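
The selection mechanism in the abstract above (a single selection state attribute per row, combined across steps with SET / AND / OR, then sorted so that selected objects collect together) can be sketched as below. The data layout and the names `apply_selection` and `collect_selected` are hypothetical.

```python
def apply_selection(state, matches, op):
    """Combine the current selection state with a new selection step.

    state   -- list of booleans, one per dataset row
    matches -- list of booleans produced by the new selection step
    op      -- "SET" replaces the state, "AND" narrows it, "OR" widens it
    """
    if op == "SET":
        return list(matches)
    if op == "AND":
        return [s and m for s, m in zip(state, matches)]
    if op == "OR":
        return [s or m for s, m in zip(state, matches)]
    raise ValueError(f"unknown operator: {op}")

def collect_selected(rows, state):
    """Sort rows so selected ones come first, preserving original order."""
    order = sorted(range(len(rows)), key=lambda i: not state[i])
    return [rows[i] for i in order]
```

Because Python's sort is stable, rows keep their relative order within the selected and unselected groups.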

Ultrasonic or CT medical image three-dimensional reconstruction method based on transfer learning

Active | CN112767532A | Realize 3D reconstruction | Improve the efficiency of auxiliary diagnosis | Neural architectures | Machine learning | Nuclear medicine | Unsupervised learning

The invention discloses an ultrasonic or CT medical image three-dimensional reconstruction method based on transfer learning, which is characterized in that an unsupervised learning mechanism is adopted, and a three-dimensional reconstruction function of an ultrasonic image is achieved through transfer learning by utilizing a visual method according to the characteristics of ultrasonic or CT image acquisition. By means of the method, three-dimensional reconstruction of ultrasonic or CT images can be effectively achieved, in auxiliary diagnosis of artificial intelligence, the effect of auxiliary diagnosis is fully played, and the efficiency of auxiliary diagnosis can be improved through the 3D visual reconstruction result.

Owner:EAST CHINA NORMAL UNIVERSITY

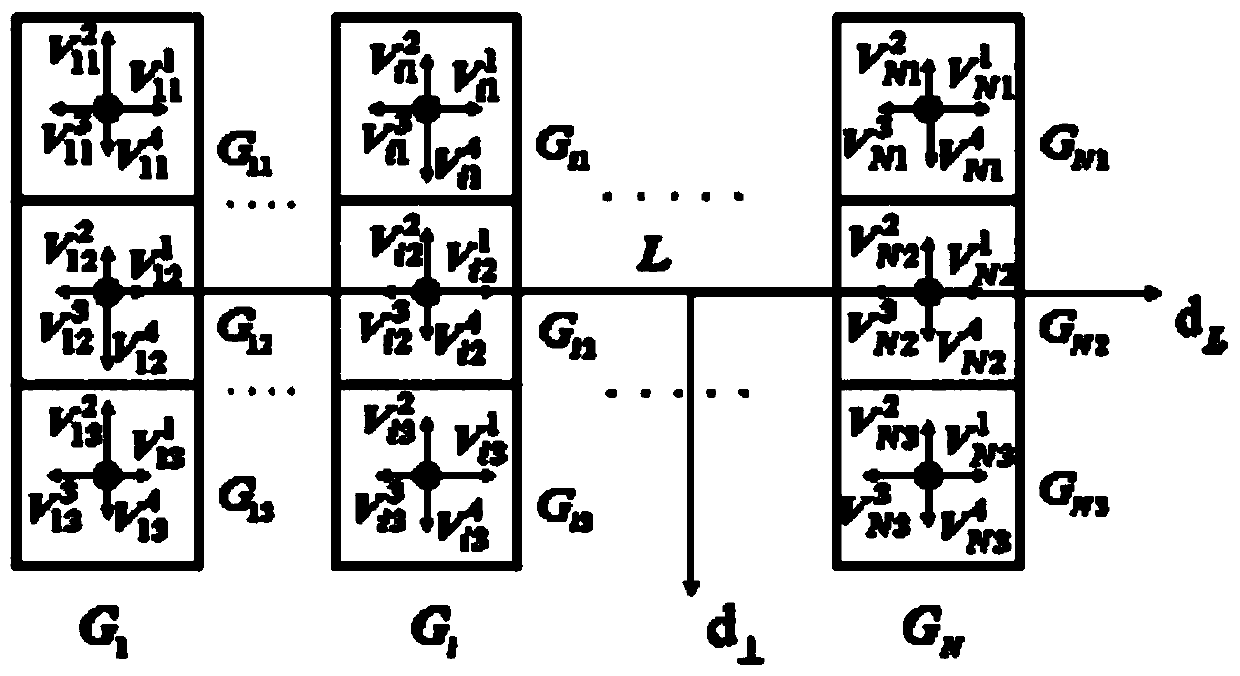

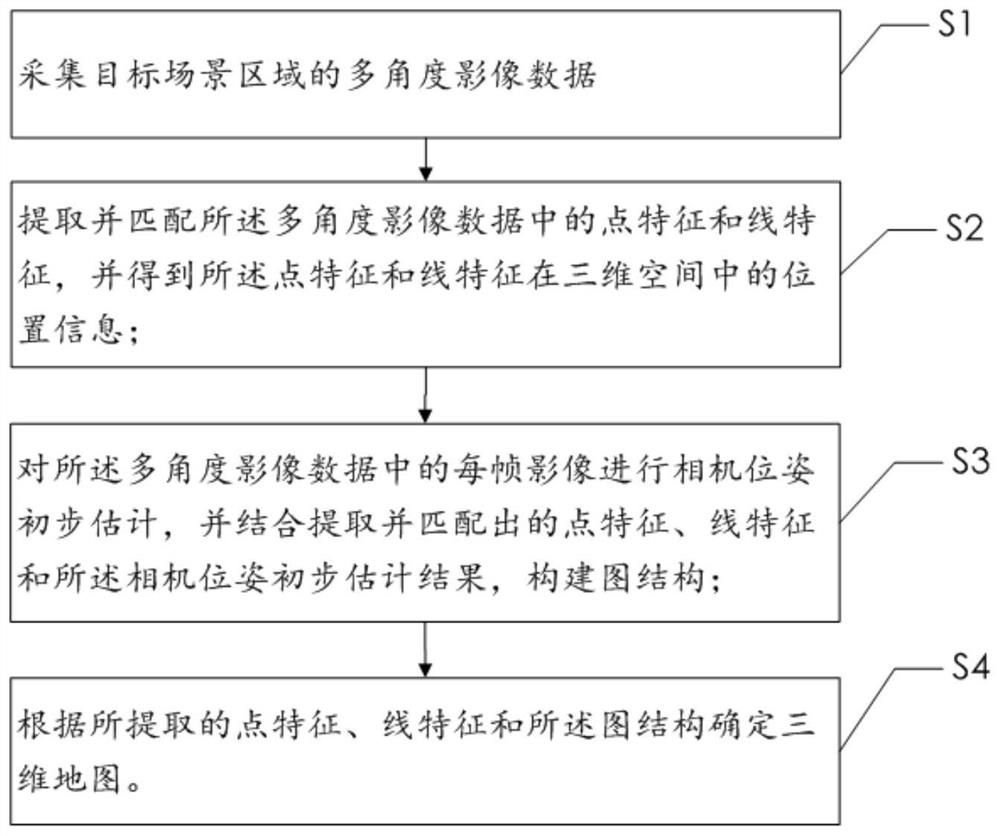

Point-line combined multi-camera vision SLAM method and device and storage medium

Inactive | CN112085790A | Clear and abstract description | Intuitive abstract description | Details involving processing steps | Image enhancement | Data ingestion | Three-dimensional space

The invention provides a point-line combined multi-camera vision SLAM method and device, and a storage medium, and the method comprises the steps: collecting multi-angle image data of a target scene, extracting and matching point features and line features in the multi-angle image data, and obtaining the position information of the point features and the line features in a three-dimensional space; and performing camera pose preliminary estimation on each frame of image in the multi-angle image data, constructing a graph structure in combination with the extracted and matched point features, line features and camera pose preliminary estimation results, and determining a three-dimensional map according to the extracted point features, line features and the graph structure. According to the embodiment of the invention, a point and line feature joint calculation method is adopted, and the line features contain more information, so that the tracking stability and accuracy can be improved, and a sparse feature map constructed by using the line features can be used for carrying out abstract description on a scene more clearly and intuitively.

Owner:THE HONG KONG POLYTECHNIC UNIV SHENZHEN RES INST

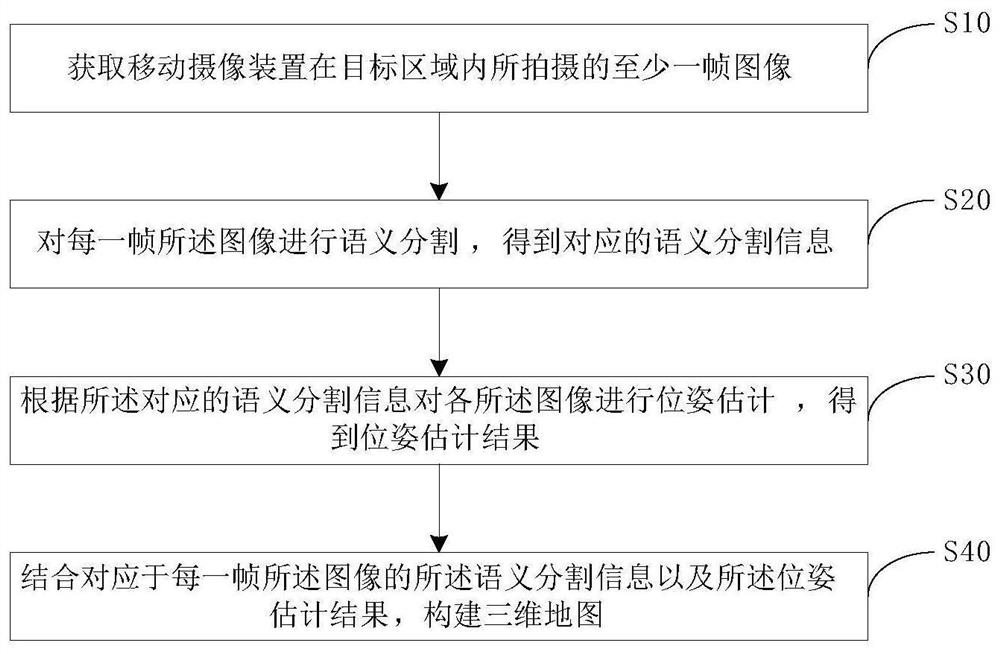

Three-dimensional map construction method and device, electronic equipment and storage medium

Pending | CN113674416A | Improve active cognitive ability | Image enhancement | Details involving processing steps | Mobile camera | Robotic systems

The invention discloses a three-dimensional map construction method and device, electronic equipment and a storage medium. The three-dimensional map construction method comprises the following steps: acquiring at least one frame of image shot by a mobile camera device in a target area; performing semantic segmentation on each frame of image to obtain corresponding semantic segmentation information; performing pose estimation on each image according to the corresponding semantic segmentation information to obtain a pose estimation result; and constructing a three-dimensional map in combination with the semantic segmentation information and the pose estimation result corresponding to each frame of the image. According to the three-dimensional map construction method provided by the embodiment of the invention, a long-term vision SLAM method capable of effectively coping with the extreme appearance change of a scene is provided on the basis of the high-level cognitive information obtained by scene semantic segmentation; self-adaptive high-level cognition for a dynamic environment is formed through map representation fused with semantic information and updating and association of the map representation, and the active cognition ability of a robot system is improved.

Owner:INFORMATION SCI RES INST OF CETC

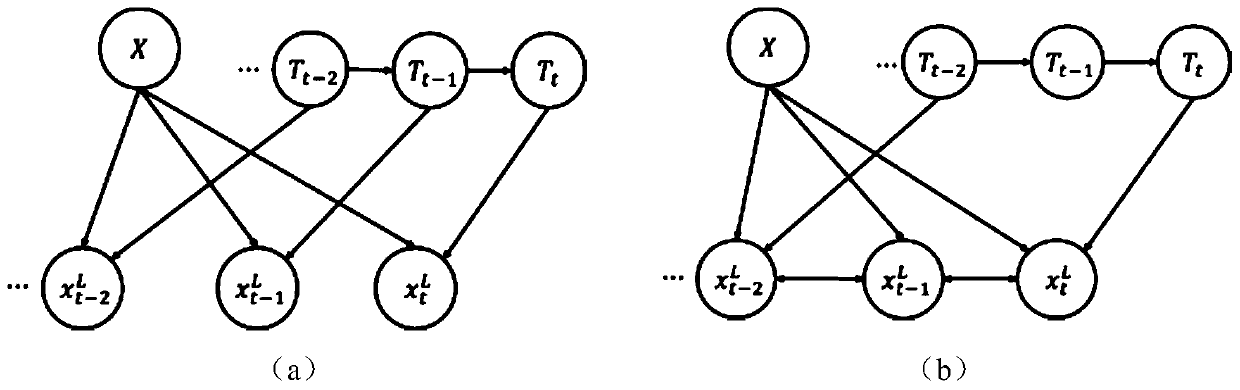

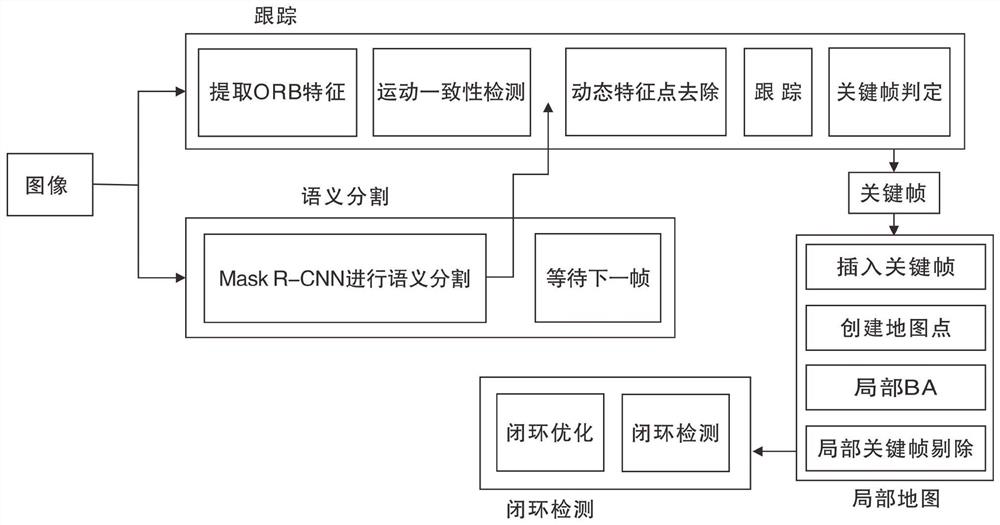

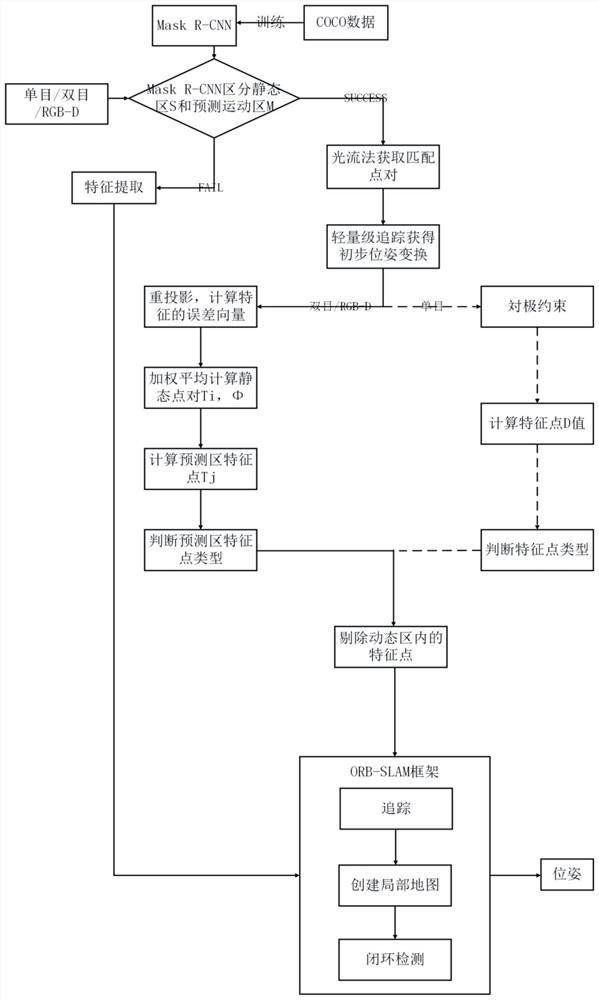

Robust vision SLAM method based on deep learning in dynamic scene

Pending | CN112446882A | Reduce mistakes | Improve accuracy | Image enhancement | Image analysis | Machine learning | Visual perception

The invention discloses a robust vision SLAM method based on deep learning in a dynamic scene, and belongs to the field of artificial intelligence, robotics and computer vision. A camera is used as an image acquisition device. The method comprises the following steps: firstly, dividing the objects in an image sequence acquired by the camera into static objects and dynamic objects by utilizing a Mask R-CNN semantic segmentation network based on deep learning, taking the pixel-level semantic segmentation of the dynamic objects as semantic prior knowledge, and removing feature points on the dynamic objects; further checking whether the remaining features are dynamic features by utilizing epipolar geometry constraints; and forming a complete robust vision SLAM system by combining local mapping and a loop detection module. According to the method, the absolute trajectory error and the relative pose error of the SLAM system can be well reduced, and the accuracy and robustness of pose estimation of the SLAM system are improved.

Owner:BEIJING UNIV OF TECH
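
The geometric check mentioned in the abstract above can be illustrated with the standard epipolar constraint: a static point matched across two frames should lie close to its epipolar line. This is a generic sketch, not the patent's procedure; the fundamental matrix `F` is assumed to be known, and the one-pixel threshold and function names are illustrative assumptions.

```python
import math

def epipolar_distance(F, p1, p2):
    """Distance in pixels from p2 to the epipolar line F * p1.

    F  -- 3x3 fundamental matrix as nested lists (assumed given)
    p1 -- (x, y) in the first image
    p2 -- matched (x, y) in the second image
    """
    x1 = (p1[0], p1[1], 1.0)
    x2 = (p2[0], p2[1], 1.0)
    line = [sum(F[i][j] * x1[j] for j in range(3)) for i in range(3)]
    norm = math.hypot(line[0], line[1])
    if norm == 0.0:
        return math.inf  # degenerate line, treat as inconsistent
    return abs(sum(line[i] * x2[i] for i in range(3))) / norm

def is_dynamic(F, p1, p2, threshold=1.0):
    """Flag a match as dynamic when it violates the epipolar constraint."""
    return epipolar_distance(F, p1, p2) > threshold
```

For a camera that translates purely along its x axis, `F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]` makes every epipolar line horizontal, so a match that shifts vertically by several pixels is flagged as dynamic.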

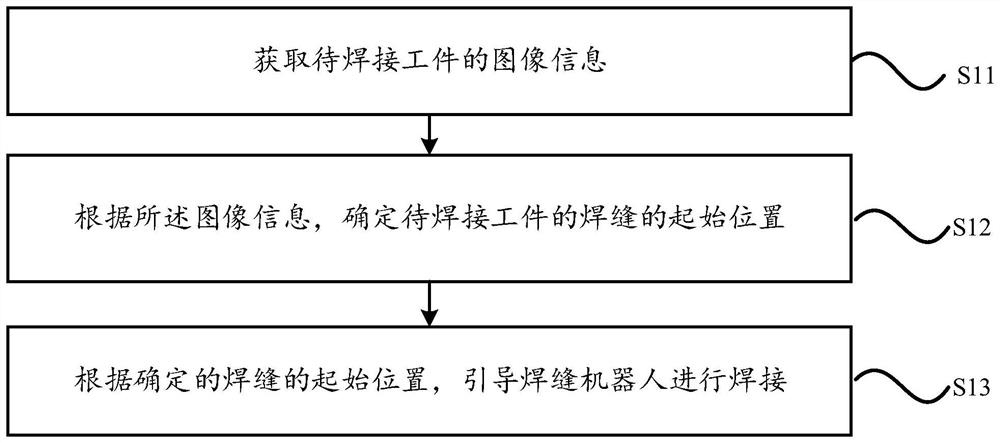

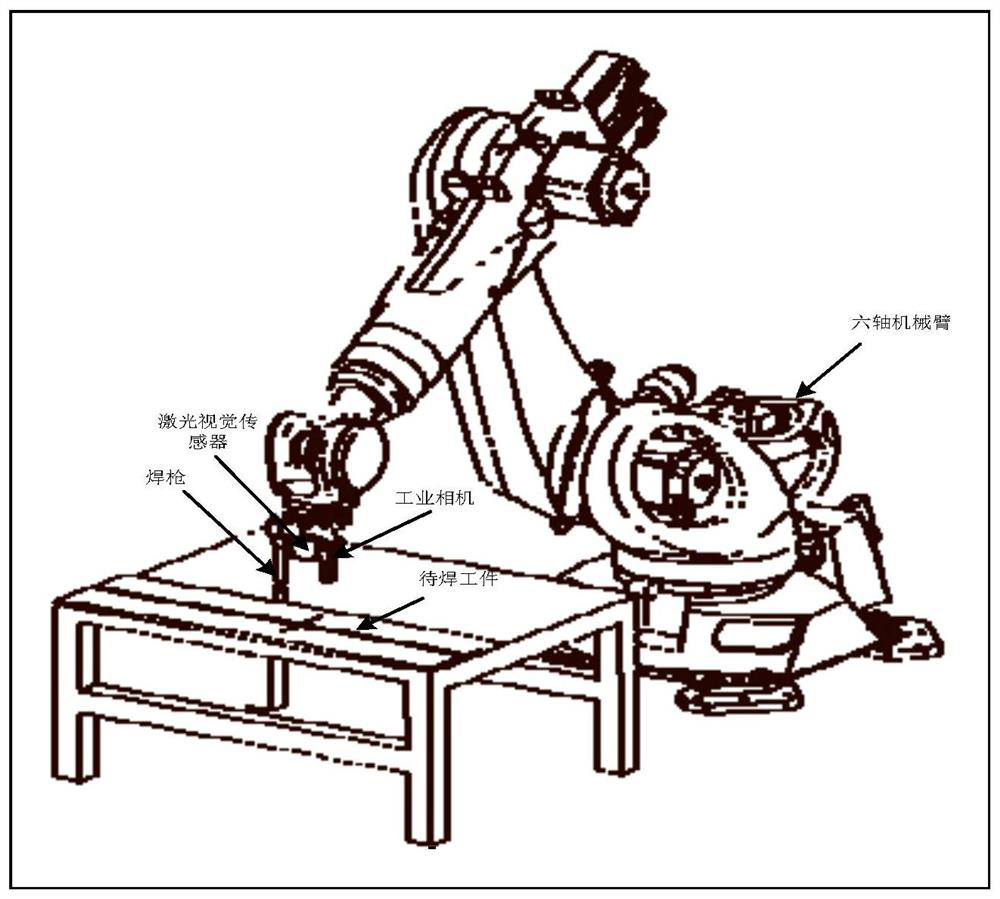

Welding seam positioning method and system based on visual guidance robot

Active | CN113369761A | Improve experience | No human intervention required | Welding/cutting auxiliary devices | Auxiliary welding devices | Imaging processing | Engineering

The invention relates to a welding seam positioning method and system based on a visual guidance robot. The method comprises the following steps: acquiring image information of a to-be-welded workpiece; according to the image information, determining the initial position of a welding seam of the to-be-welded workpiece; and according to the determined initial position of the welding seam, guiding a welding seam robot to weld. According to the technical scheme, the image information of the to-be-welded workpiece is obtained by the visual method, the initial position of the welding seam of the to-be-welded workpiece is determined through image processing, and the welding seam robot is guided to move to the initial position of the welding seam for welding. In the whole welding operation process, manual intervention is not needed, the welding efficiency is high, and the user experience degree is good.

Owner:BEIJING INSTITUTE OF PETROCHEMICAL TECHNOLOGY

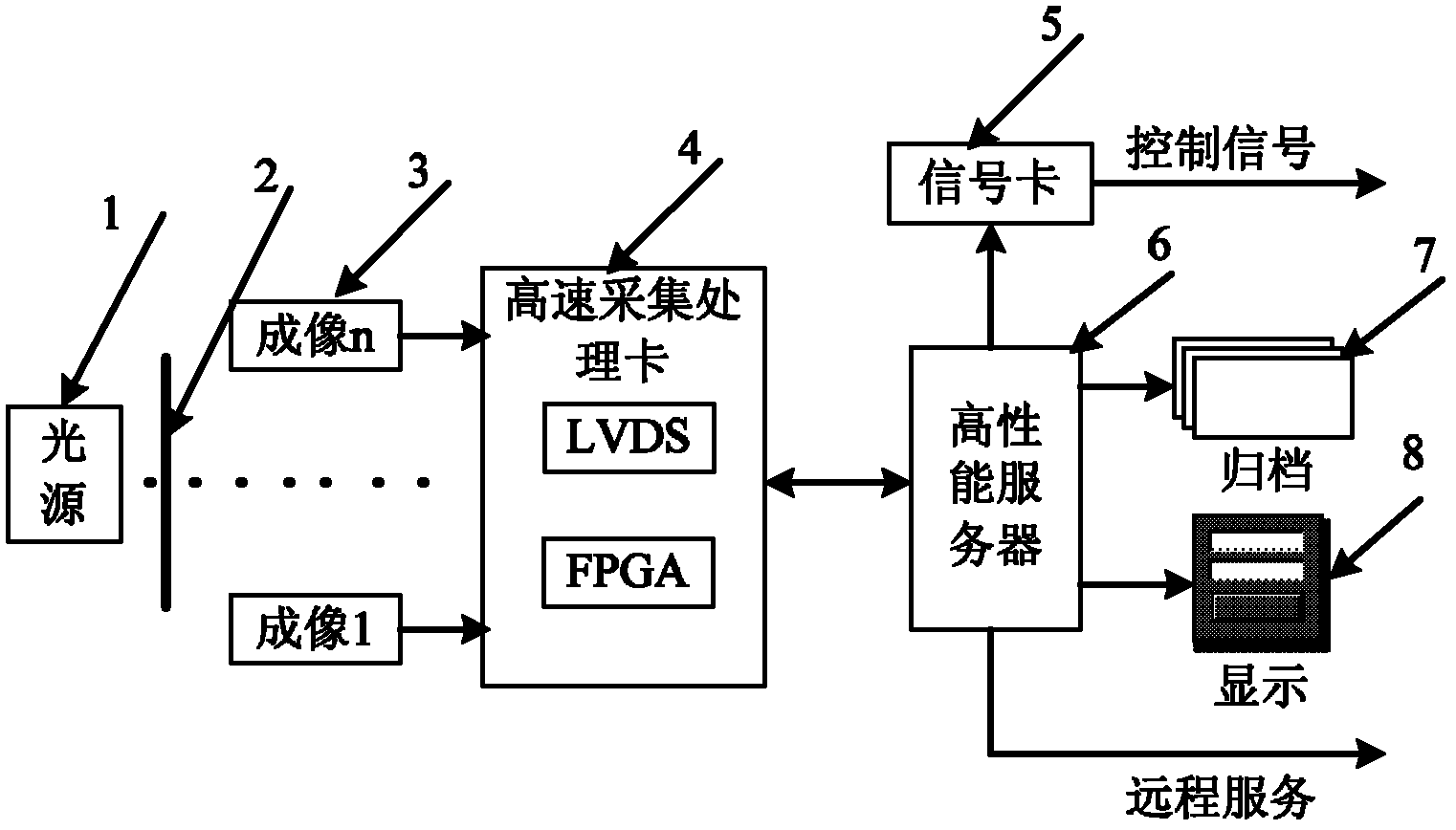

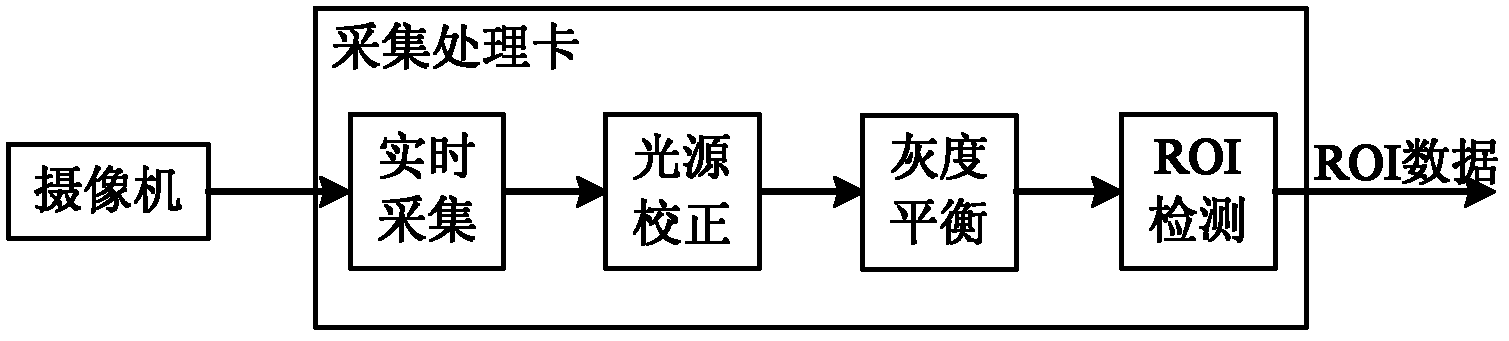

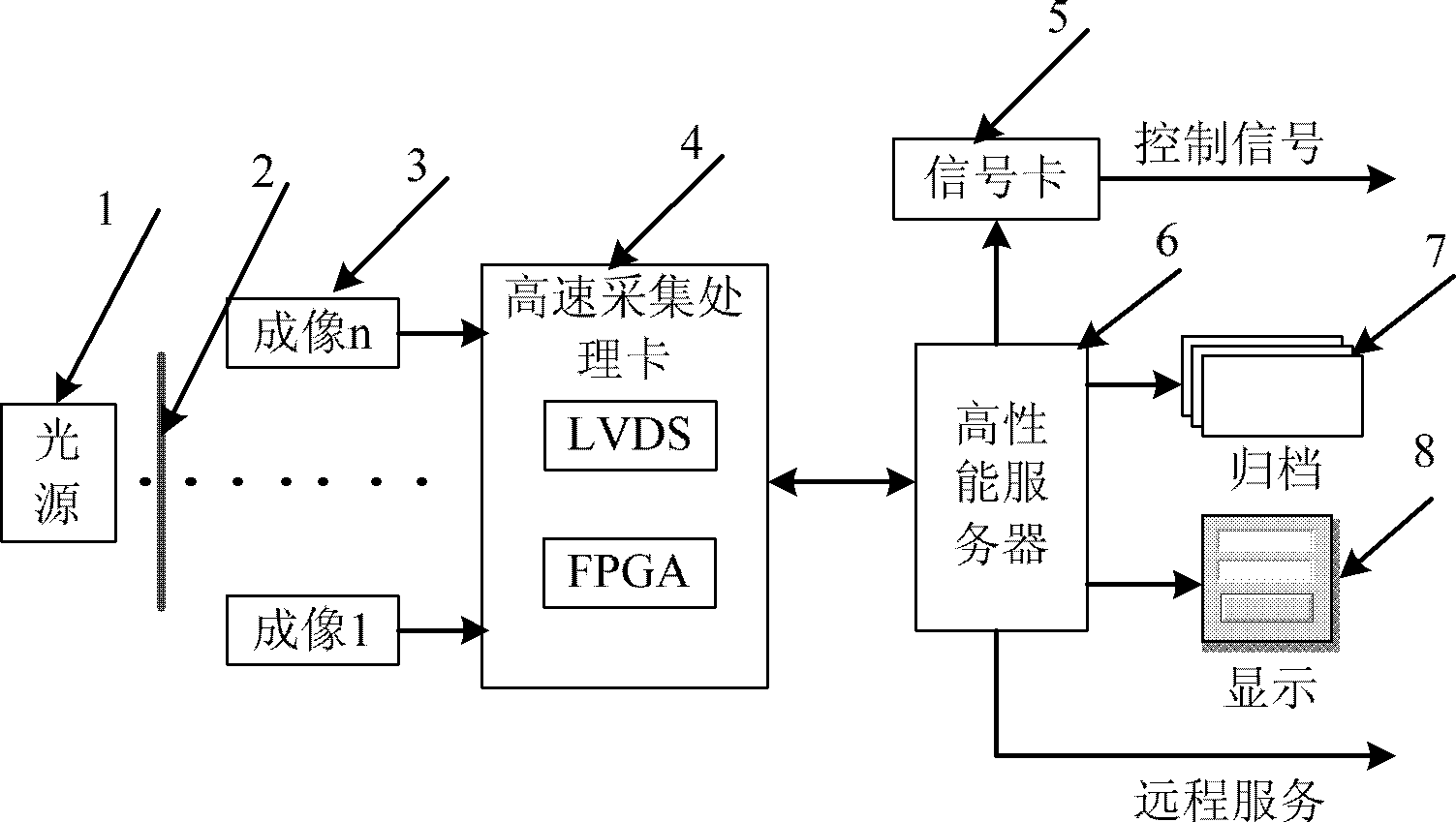

System for detecting quality of carpet threads based on visual method

Inactive | CN102680479A | Improve data processing efficiency | Real-time disconnection detection | Optically investigating flaws/contamination | Region of interest | Oblique illumination

The invention discloses a system for detecting quality of carpet threads based on a visual method, which comprises a light source, a camera set, a high-speed acquisition and processing card and a server, wherein the light source is a linear light source and adopts an oblique illumination way; the camera is a linear camera and the camera set adopts a breadth splicing way; the output cable of the linear camera is connected with the high-speed image acquisition and processing card which is integrated with a field programmable gate array (FPGA) module, and the FPGA module comprises a brightness correction unit, a gray balancing unit, a target segmentation unit, and a region of interest (ROI) unit; the high-speed image acquisition and processing card is in signal connection with the server; and the server comprises a defect judgment module for analyzing an ROI image, and is communicated with a carpet thread controller by a signal card.

Owner:ZHEJIANG UNIV OF TECH

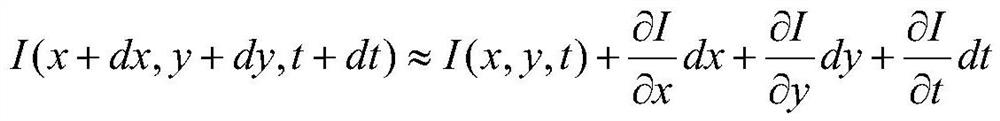

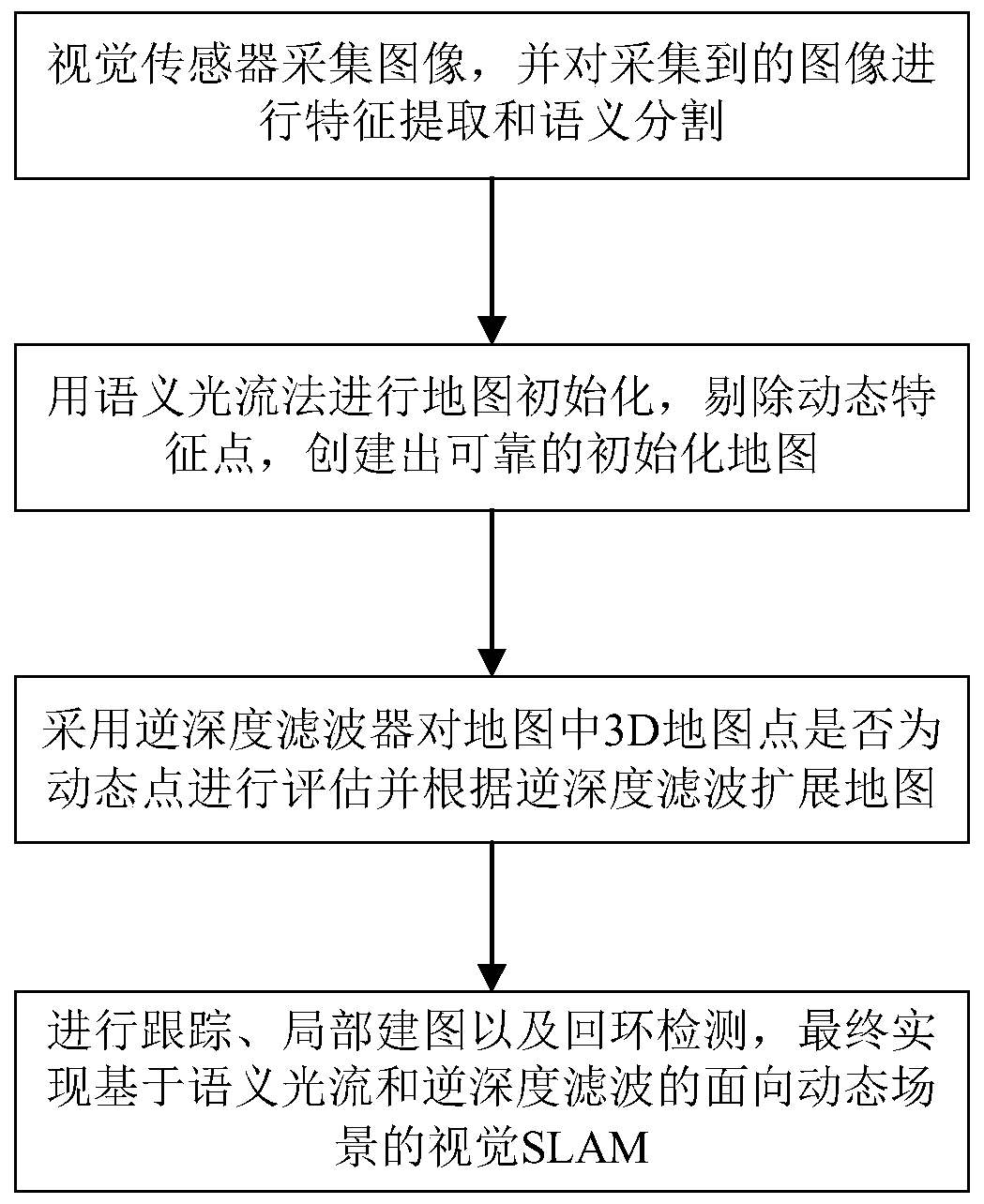

Visual SLAM method based on semantic optical flow and inverse depth filtering

Active | CN111311708A | Solve problems such as inability to understand scene information and inability to cope with dynamic scenes | Improve the accuracy of pose calculation | Drawing from basic elements | Character and pattern recognition | Evaluation result | Feature extraction

The invention relates to a visual SLAM method based on semantic optical flow and inverse depth filtering, and the method comprises the following steps: (1) a visual sensor collects an image, feature extraction and semantic segmentation are carried out on the collected image, and extracted feature points and a semantic segmentation result are obtained; (2) according to the feature points and the segmentation result, performing map initialization by using a semantic optical flow method, removing dynamic feature points, and creating a reliable initialization map; (3) evaluating whether 3D map points in the initialized map are dynamic points by adopting an inverse depth filter, and expanding the map according to the evaluation result of the inverse depth filter; and (4) continuing to perform tracking, local mapping and loopback detection in sequence for the map expanded by the inverse depth filter, and finally realizing dynamic scene-oriented visual SLAM based on semantic optical flow and inverse depth filtering.

Owner:BEIHANG UNIV
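
The abstract above does not describe how its inverse depth filter works internally. As a rough illustration of the general idea, the sketch below keeps a Gaussian estimate of a map point's inverse depth, fuses each new observation into it, and treats observations far from the estimate as evidence of a dynamic point. The Gaussian model, the 3-sigma gate and all names are assumptions.

```python
import math

def fuse_inverse_depth(mu, var, obs, obs_var):
    """Fuse a new inverse-depth observation into a Gaussian estimate.

    This is the standard product of two Gaussians: the result is weighted
    toward whichever of the estimate and observation has lower variance.
    """
    new_mu = (obs_var * mu + var * obs) / (var + obs_var)
    new_var = var * obs_var / (var + obs_var)
    return new_mu, new_var

def is_inconsistent(mu, var, obs, obs_var, k=3.0):
    """True when the observation lies more than k sigma from the estimate."""
    return abs(obs - mu) > k * math.sqrt(var + obs_var)
```

A point whose observations are repeatedly inconsistent could be marked dynamic and excluded from map expansion, while consistent observations shrink the variance until the point is accepted.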

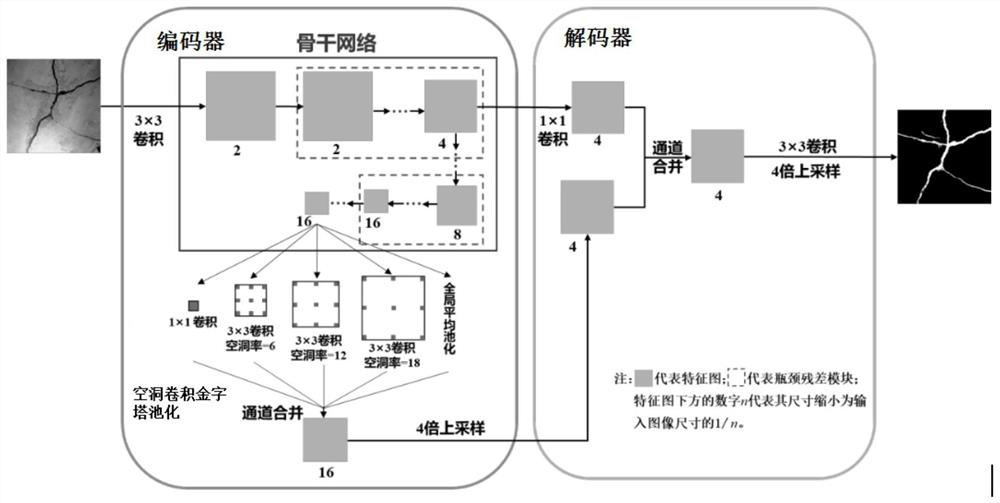

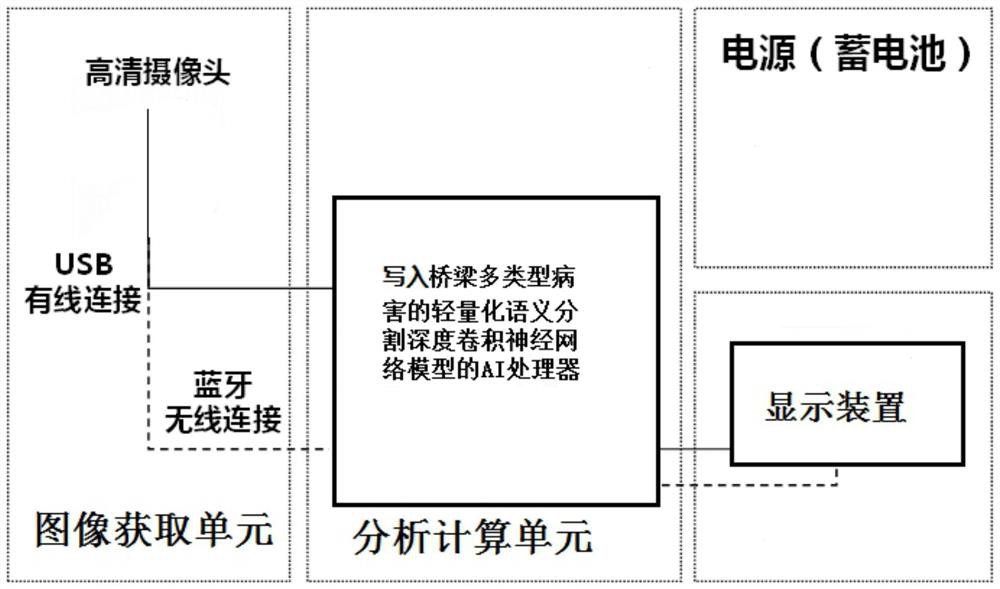

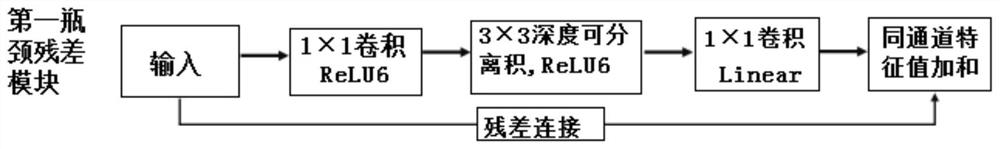

Computer vision method and intelligent camera system for bridge health diagnosis

The invention discloses a computer vision method and an intelligent camera system for bridge health diagnosis, belongs to the technical field of bridge health monitoring, and solves the problems of low image recognition precision, poor real-time performance and low efficiency of bridge health diagnosis in the prior art. The method comprises the following steps: establishing a lightweight semantic segmentation deep convolutional neural network model for bridge multi-type diseases; establishing an image data set of the multi-type diseases of the bridge, and obtaining a trained lightweight semantic segmentation deep convolutional neural network model of the multi-type diseases of the bridge; and collecting a bridge image in real time, and obtaining a semantic segmentation result graph of the bridge image. The method and the system are suitable for monitoring and detecting the health conditions of multiple types of bridge diseases online in real time, and can directly carry or integrate an integrated part of image acquisition, analysis and calculation and result display on inspection equipment such as an unmanned aerial vehicle, a robot, a detection vehicle and the like, so that automatic acquisition and intelligent identification of bridge disease images are realized.

Owner:HARBIN INST OF TECH

Visual SLAM method based on semantic segmentation dynamic points

Pending | CN113516664A | High precision | Improve accuracy | Image enhancement | Image analysis | Visual technology | Feature extraction

The invention discloses a visual SLAM method based on semantic segmentation dynamic points, and relates to the technical field of computer vision. The method comprises the following steps: acquiring environment image information through an RGB-D camera, and performing feature extraction and semantic segmentation on the obtained image to obtain extracted ORB feature points and a semantic segmentation result; using a dynamic object detection algorithm based on multi-view geometric constraints to detect residual dynamic objects and reject dynamic feature points; and executing the tracking, local mapping and loop closure detection threads in sequence, so that an accurate octree-based three-dimensional semantic map of the static scene is constructed in a dynamic scene, finally realizing the dynamic-scene-oriented visual SLAM method based on semantic segmentation dynamic points.

Owner:CHANGCHUN UNIV OF TECH
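
The abstract above mentions multi-view geometric constraints without naming a specific test. One common form of such a constraint is the epipolar check: a static point matched across two views should lie close to its epipolar line. The Python sketch below assumes that check. The function names, the threshold, and the availability of a fundamental matrix `F` are assumptions for illustration, not details from the patent.

```python
import math


def epipolar_distance(F, p1, p2):
    """Distance from p2 to the epipolar line of p1 in the second image.

    F is a 3x3 fundamental matrix given as nested lists; p1 and p2 are
    (x, y) image coordinates of one matched feature in the two views.
    """
    x1 = (p1[0], p1[1], 1.0)
    # Epipolar line l = F * x1, written as a*x + b*y + c = 0.
    a, b, c = (sum(F[r][k] * x1[k] for k in range(3)) for r in range(3))
    norm = math.hypot(a, b)
    if norm == 0.0:
        raise ValueError("degenerate epipolar line")
    return abs(a * p2[0] + b * p2[1] + c) / norm


def reject_dynamic(F, matches, threshold=1.0):
    """Keep only matches that satisfy the epipolar constraint."""
    return [(p1, p2) for p1, p2 in matches
            if epipolar_distance(F, p1, p2) <= threshold]


# Hypothetical example: pure sideways camera translation, so static
# points must keep the same y coordinate in both views.
F = [[0.0, 0.0, 0.0],
     [0.0, 0.0, -1.0],
     [0.0, 1.0, 0.0]]
matches = [((10.0, 20.0), (15.0, 20.0)),   # consistent with camera motion
           ((10.0, 20.0), (15.0, 26.0))]   # moved independently
static_matches = reject_dynamic(F, matches)
```

A check like this cannot catch objects that move along their own epipolar line, which is one reason such methods pair it with semantic segmentation.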

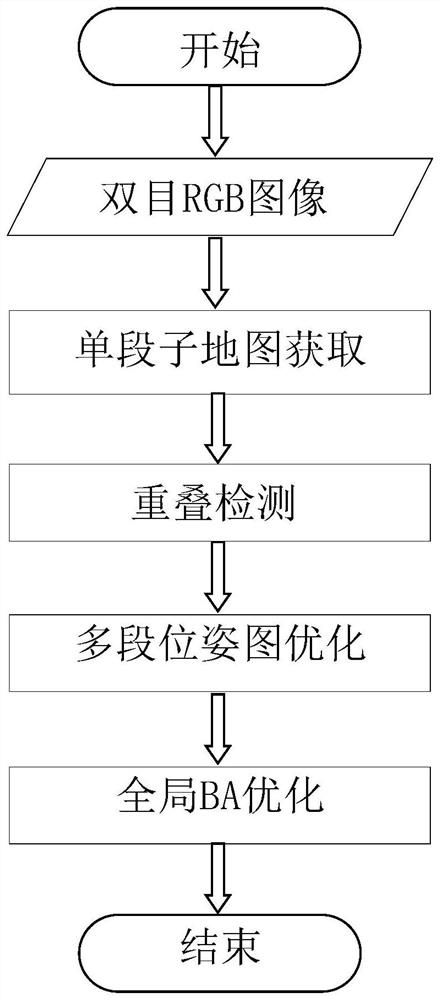

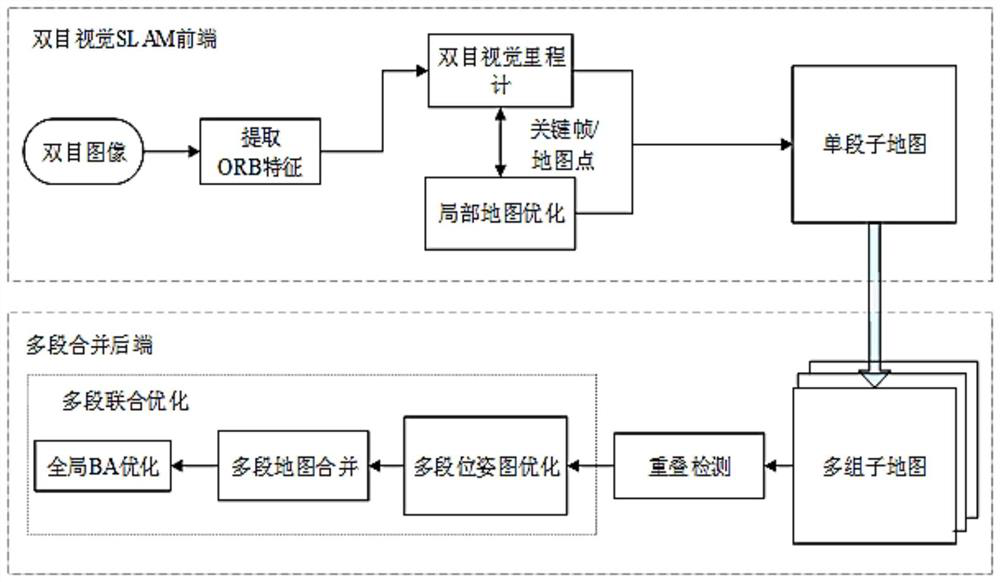

Map construction method for accurate positioning based on multi-segment joint optimization

Pending | CN114088081A | Instruments for road network navigation | Character and pattern recognition | Simultaneous localization and mapping | Word model

The invention discloses a map construction method for accurate positioning based on multi-segment joint optimization, and belongs to the field of accurate positioning of robots or autonomous vehicles based on prior maps. The method comprises the following steps: (1) for a scene in which a positioning map needs to be constructed, using a feature-point-based visual SLAM (Simultaneous Localization and Mapping) method to obtain a plurality of single-segment sub-maps through visual odometry and local map optimization, with the visual odometry and the local map optimization running in parallel in two independent threads; (2) using the ORB descriptors in the key frames and a scene recognition strategy based on a bag-of-words model to carry out rapid overlap detection between the sub-maps; (3) executing multi-segment pose graph optimization in a global coordinate system by using the anchor points assigned to each sub-map; and (4) merging all the sub-maps into one integral map, and then performing global BA optimization on the integral map so as to obtain a more accurate offline map that can be used for accurate positioning.

Owner:BEIJING UNIV OF TECH
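
Step (2) of the abstract above can be pictured with a small sketch. The Python example below assumes that each key frame's ORB descriptors have already been quantized into visual word IDs, and it scores key frame pairs by cosine similarity of their word histograms. The function names, the threshold, and the plain cosine score are assumptions for illustration. Practical systems typically use a trained vocabulary with TF-IDF weighting, which this sketch leaves out.

```python
import math
from collections import Counter


def bow_vector(word_ids):
    """L2-normalised histogram of visual word IDs for one key frame."""
    counts = Counter(word_ids)
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}


def similarity(a, b):
    """Cosine similarity between two normalised bag-of-words vectors."""
    return sum(v * b.get(w, 0.0) for w, v in a.items())


def find_overlaps(submap_a, submap_b, threshold=0.8):
    """Return (i, j) index pairs of key frames that look like the same place."""
    vecs_a = [bow_vector(k) for k in submap_a]
    vecs_b = [bow_vector(k) for k in submap_b]
    return [(i, j)
            for i, va in enumerate(vecs_a)
            for j, vb in enumerate(vecs_b)
            if similarity(va, vb) >= threshold]


# Hypothetical key frames, each given as a list of visual word IDs.
submap_a = [[1, 1, 2, 3], [7, 8, 9]]
submap_b = [[4, 5, 6], [1, 2, 3, 1]]
overlaps = find_overlaps(submap_a, submap_b)
```

Each detected pair would then serve as a candidate constraint between two sub-maps, to be verified geometrically before it enters the pose graph.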

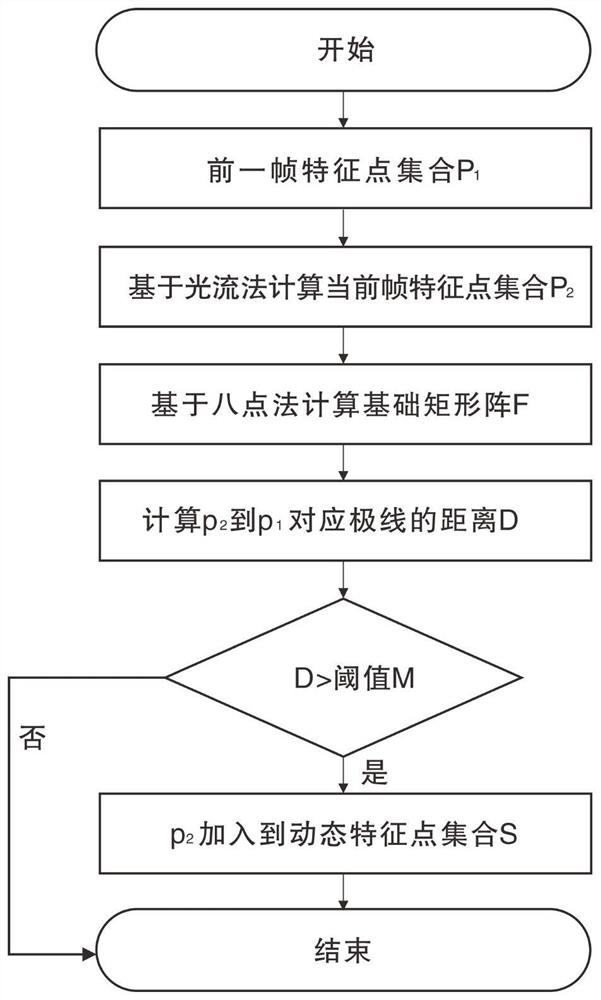

Visual SLAM method based on optical flow and semantic segmentation

Inactive | CN111797688A | Eliminate precision issues | Picture taking arrangements | Character and pattern recognition | Visual space | Radiology

The invention belongs to the technical field of visual space positioning, and discloses a visual SLAM method based on optical flow and semantic segmentation. The method comprises the steps of: segmenting the input image information with a semantic segmentation network to obtain a static region and a predicted dynamic region; performing feature tracking on the static region and the predicted dynamic region with a sparse optical flow method; determining the types of the feature points in the input image information, and removing the dynamic feature points; and taking the set with the dynamic feature points removed as tracking data, inputting the tracking data into ORB-SLAM for processing, and outputting a pose result. The method addresses the problem of poor SLAM tracking and positioning in dynamic environments, and can obtain trajectory information with high pose precision in a dynamic environment.

Owner:WUHAN UNIV
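
The abstract above does not spell out how feature point types are determined. The Python sketch below shows one possible rule, assumed here purely for illustration: the median optical flow of points in the static region stands in for camera-induced motion, and a point in the predicted dynamic region is labelled dynamic only when its own flow deviates from that reference by more than a threshold. The function name, the threshold, and the median-flow rule are assumptions. The median is a crude proxy that ignores parallax.

```python
import math
from statistics import median


def classify_points(tracks, in_dynamic_region, threshold=2.0):
    """Label each tracked point as 'static' or 'dynamic'.

    tracks: list of ((x0, y0), (x1, y1)) positions in consecutive frames.
    in_dynamic_region: list of bools from the semantic segmentation mask.
    """
    static_flows = [(x1 - x0, y1 - y0)
                    for ((x0, y0), (x1, y1)), dyn in zip(tracks, in_dynamic_region)
                    if not dyn]
    if not static_flows:
        raise ValueError("need at least one point in the static region")
    ref_dx = median(f[0] for f in static_flows)
    ref_dy = median(f[1] for f in static_flows)

    labels = []
    for ((x0, y0), (x1, y1)), dyn in zip(tracks, in_dynamic_region):
        residual = math.hypot((x1 - x0) - ref_dx, (y1 - y0) - ref_dy)
        labels.append("dynamic" if dyn and residual > threshold else "static")
    return labels


# Hypothetical tracks: the last two lie inside the predicted dynamic region.
tracks = [((0, 0), (1, 0)), ((5, 5), (6, 5)), ((10, 10), (11, 10)),
          ((20, 20), (26, 20)), ((30, 30), (31, 30))]
in_dynamic_region = [False, False, False, True, True]
labels = classify_points(tracks, in_dynamic_region)
```

Under this rule, a point on a parked car or a seated person (inside the predicted dynamic region but moving with the background) is kept, so fewer usable features are thrown away.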

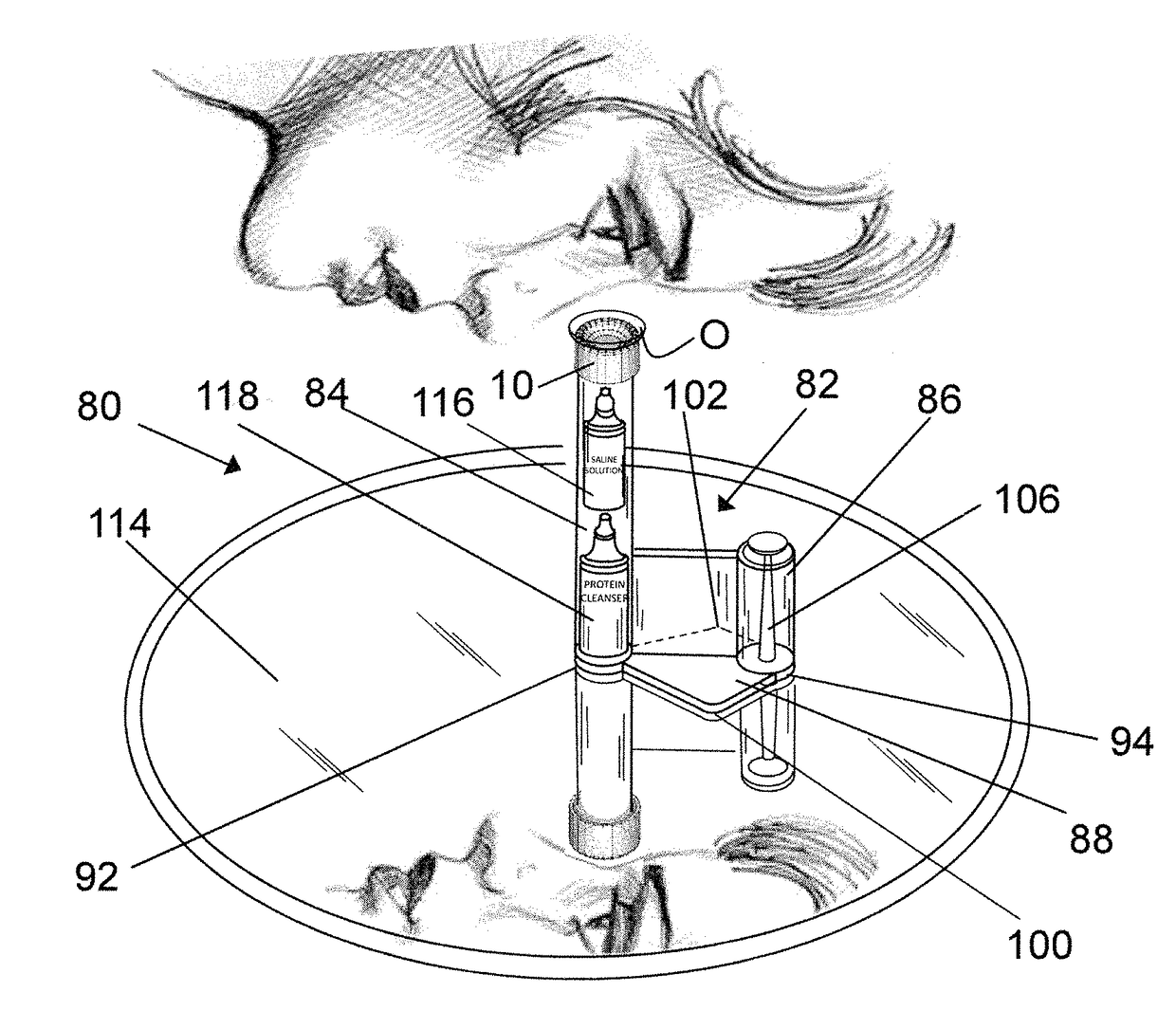

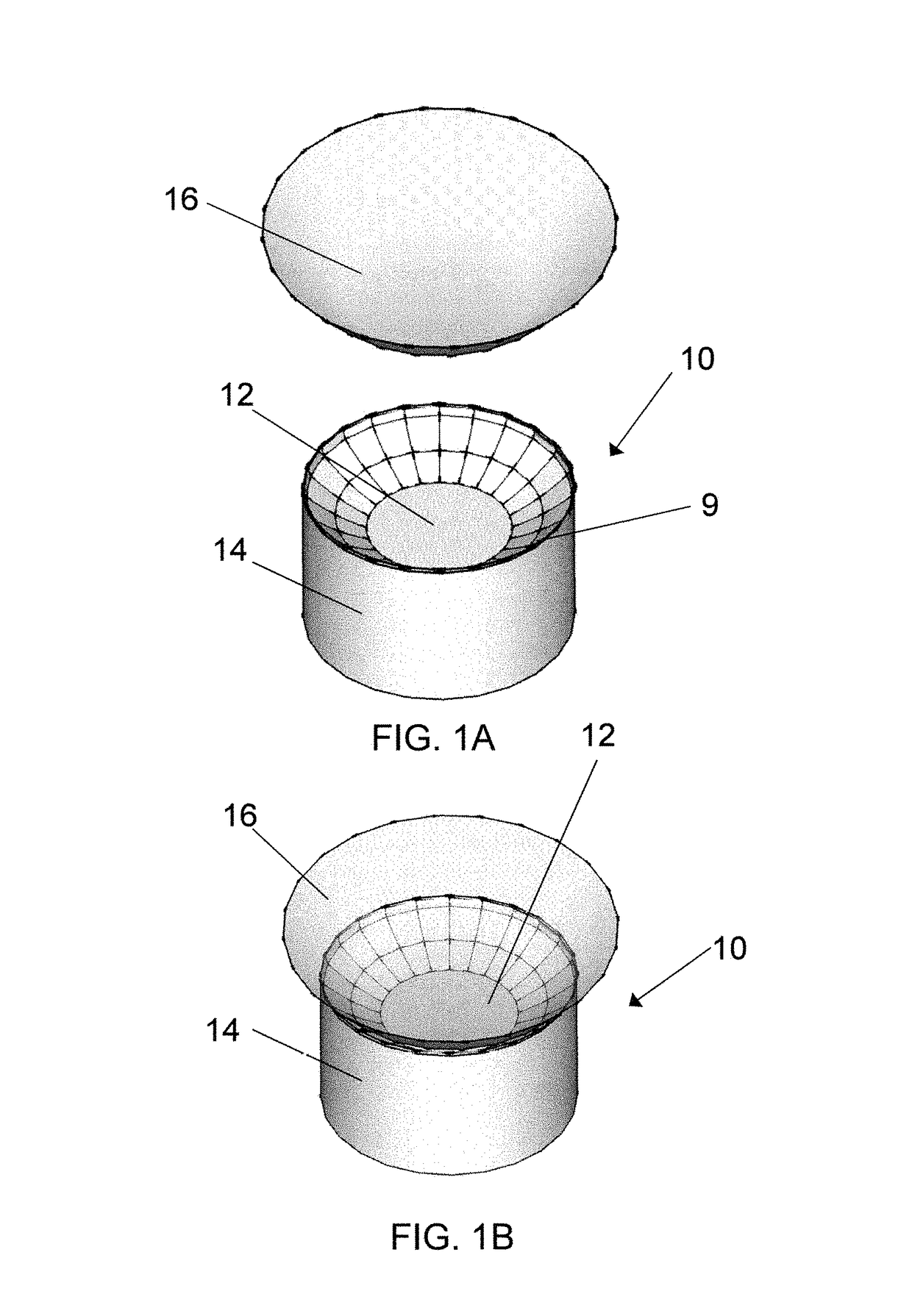

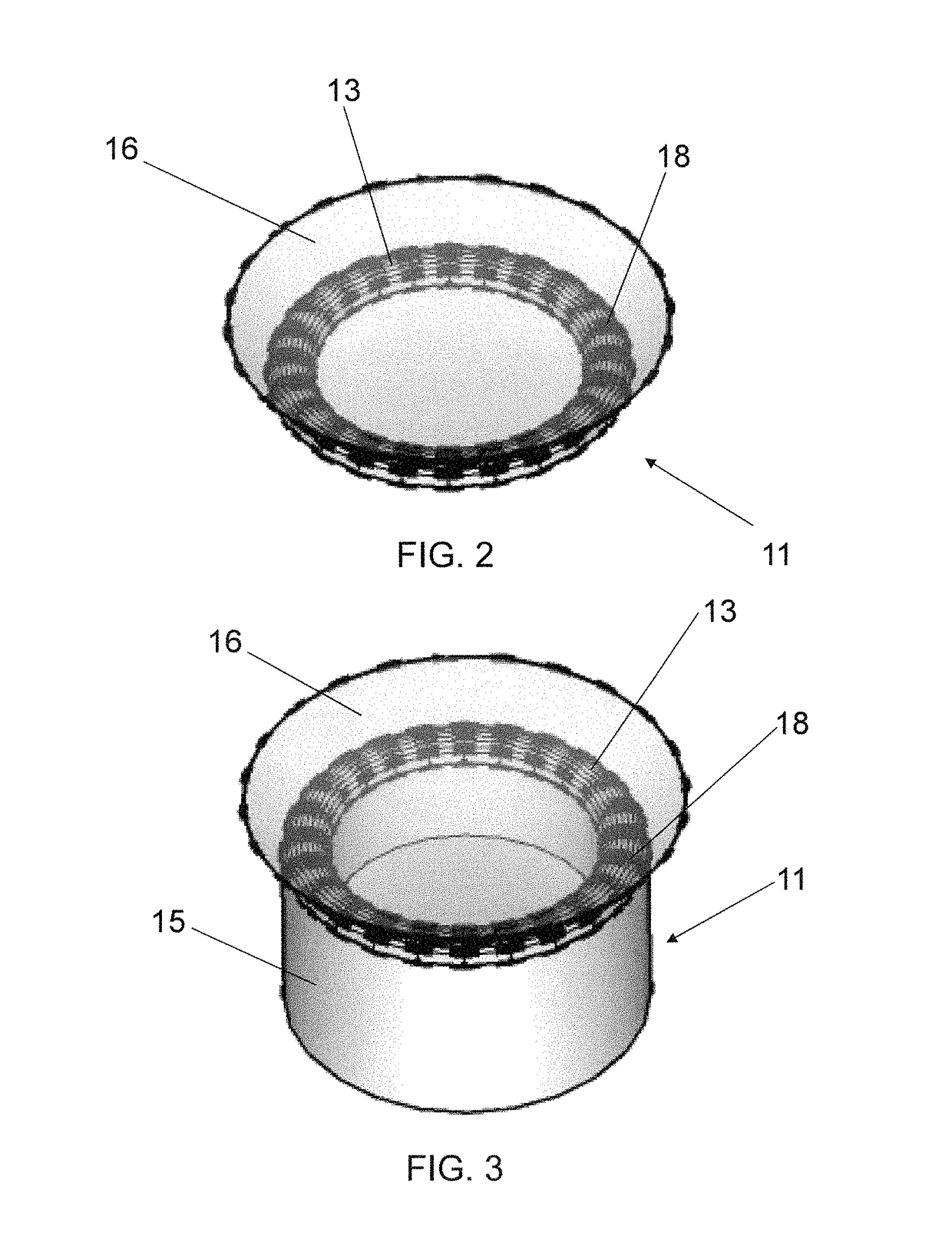

Lens aid and lens aid system and techniques for the insertion and removal of contact and scleral lenses

A lens aid, lens aid system and powered light source combined with a mirror that uses a lens cup of soft pliable material providing an improved visual method including a hands-free or one hand method for the insertion of a contact lens and more preferably a scleral lens into the eye.

Owner:HOPPER THOMAS P

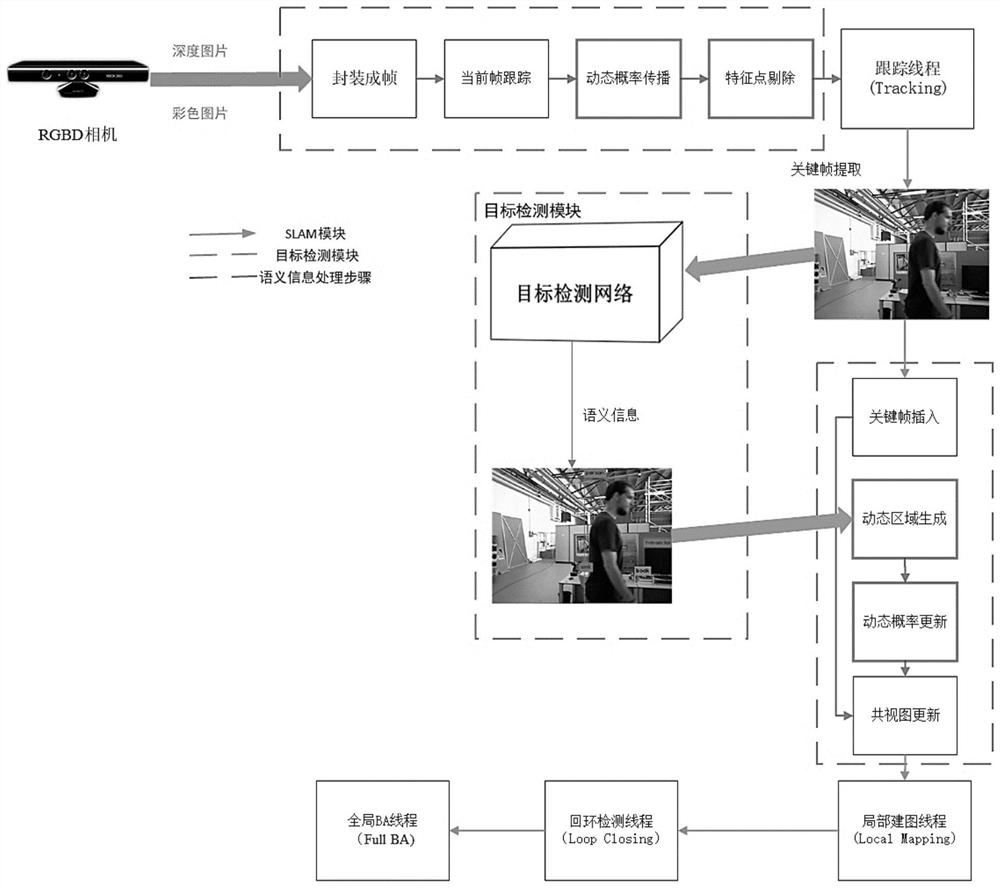

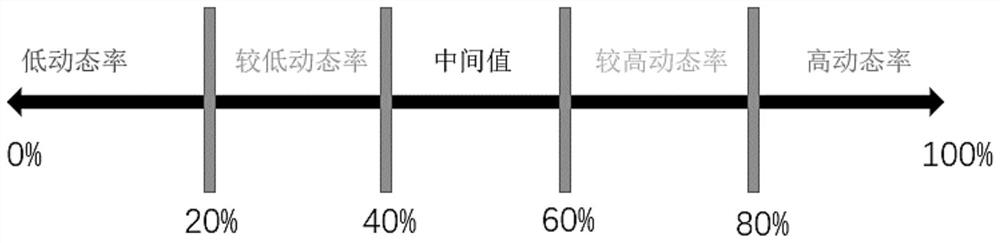

Visual SLAM method suitable for indoor dynamic environment

Pending | CN112132893A | Improve accuracy | Remove in time | Image enhancement | Image analysis | Color image | Probability propagation

The invention relates to a visual SLAM method suitable for an indoor dynamic environment. The method comprises the steps of: obtaining a color image of the environment and packaging it into a frame; calculating a dynamic probability propagation result; removing dynamic feature points according to the dynamic probability and retaining static feature points; during key frame judgment, performing target detection on the frame if the current frame meets the key frame condition; performing semantic segmentation on the picture according to the detection result and determining the areas belonging to dynamic objects; updating the dynamic probability of the map points corresponding to the feature points of the key frame; passing the key frame to the local mapping thread, updating and extracting the local covisibility graph, and performing local optimization on the poses of the key frames and the map points; and updating the essential graph to perform global optimization. During pose calculation and map construction, object category information in the environment is effectively fused, a target detection algorithm is integrated with a traditional visual SLAM system, feature points belonging to dynamic objects are removed in time, and positioning and mapping are more accurate and robust in the dynamic environment.

Owner:TONGJI ARTIFICIAL INTELLIGENCE RES INST SUZHOU CO LTD
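
The abstract above does not give the update rule for the dynamic probability of a map point. As a hedged illustration, the Python sketch below assumes a binary Bayes update: each time a key frame is segmented, the probability rises if the point's feature falls inside a dynamic-object area and falls otherwise. The function names, the value of `p_hit`, and the 0.5 removal threshold are assumptions, not details from the patent.

```python
def update_dynamic_probability(prior, in_dynamic_region, p_hit=0.8):
    """Binary Bayes update of one map point's dynamic probability.

    p_hit is the assumed chance that the segmentation labels a truly
    dynamic point as dynamic (and a truly static point as static).
    """
    like_dynamic = p_hit if in_dynamic_region else 1.0 - p_hit
    like_static = 1.0 - like_dynamic
    evidence = like_dynamic * prior + like_static * (1.0 - prior)
    return like_dynamic * prior / evidence


def keep_static(probabilities, threshold=0.5):
    """Return the IDs of map points whose dynamic probability is below threshold."""
    return [pid for pid, p in probabilities.items() if p < threshold]


# Hypothetical map points, all starting from an uninformed prior of 0.5.
probabilities = {"a": 0.5, "b": 0.5}
probabilities["a"] = update_dynamic_probability(probabilities["a"], True)
probabilities["b"] = update_dynamic_probability(probabilities["b"], False)
static_ids = keep_static(probabilities)
```

Carrying a probability between key frames, instead of a hard label, lets ordinary frames reuse the latest estimate without running detection on every image.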

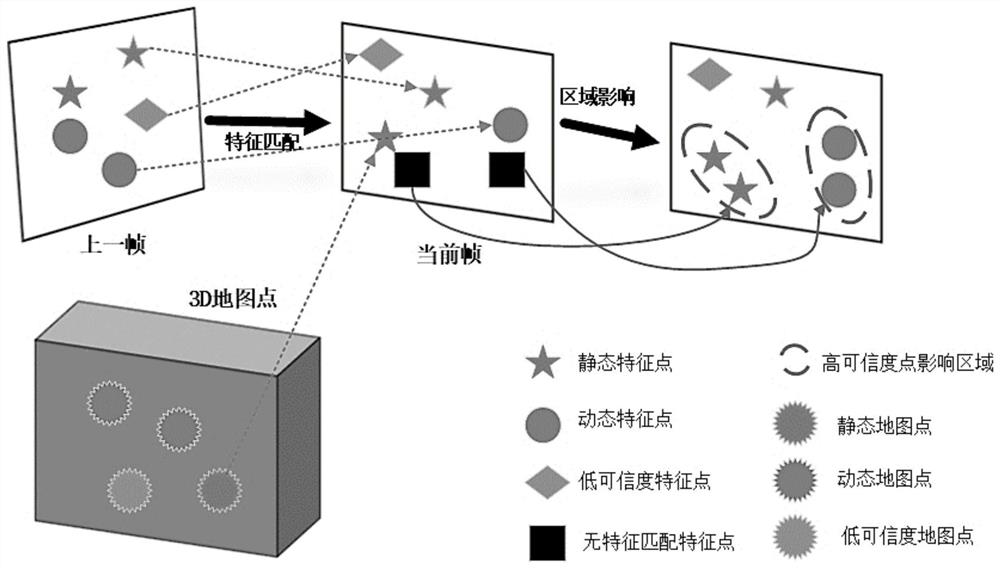

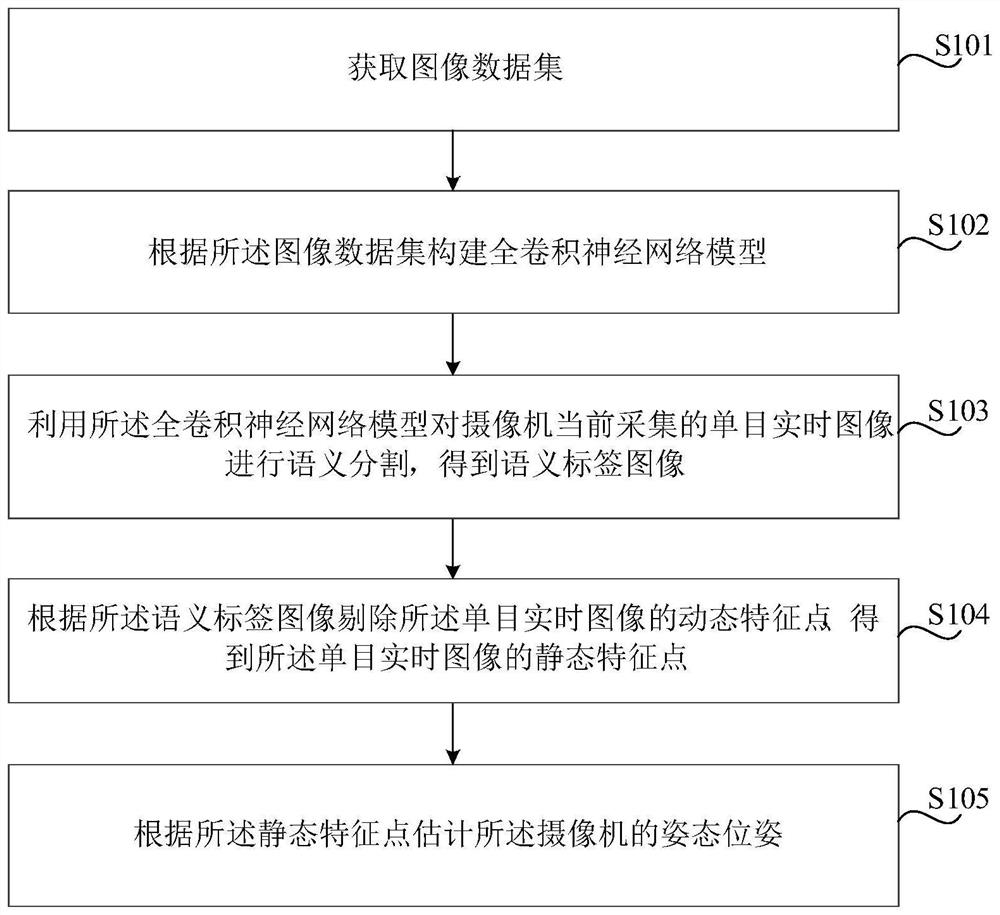

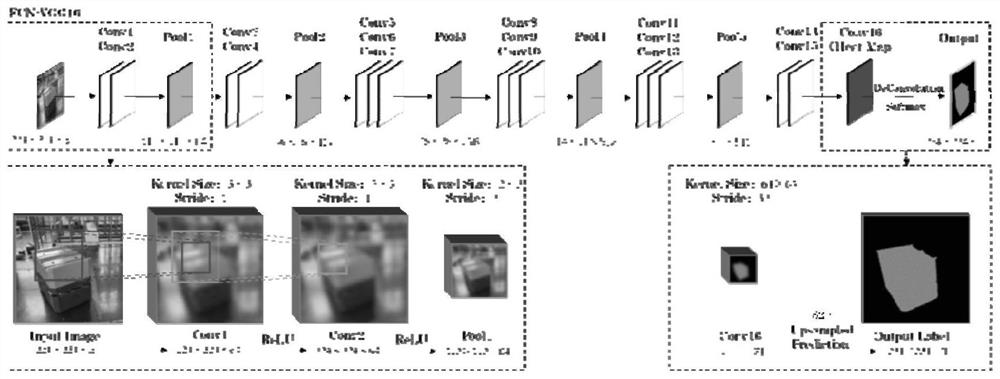

Visual SLAM method and system based on full convolutional neural network in dynamic scene

Pending | CN112802197A | Precise positioning | High precision | Image enhancement | Image analysis | Visual perception | Imaging data

The invention provides a visual SLAM method and system based on a full convolutional neural network in a dynamic scene. The method comprises the following steps: acquiring an image data set; constructing a full convolutional neural network model according to the image data set; performing semantic segmentation on a monocular real-time image currently collected by a camera by using the full convolutional neural network model to obtain a semantic tag image; removing dynamic feature points of the monocular real-time image according to the semantic tag image to obtain static feature points of the monocular real-time image; and estimating the pose of the camera according to the static feature points. Through the method provided by the invention, a dynamic target can be accurately identified and semantic segmentation can be completed, the accuracy and robustness of camera tracking are effectively improved, and the positioning and mapping precision of visual SLAM in a dynamic scene is improved.

Owner:ZHEJIANG FORESTRY UNIVERSITY
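
The step of removing dynamic feature points according to the semantic tag image can be sketched as a simple lookup. The Python example below assumes that the tag image is a 2D grid of class IDs and that a set of class IDs is treated as dynamic. The function name, the class ID 15, and the rounding of keypoint coordinates are assumptions for illustration, not details from the patent.

```python
def static_keypoints(keypoints, label_image, dynamic_labels):
    """Keep keypoints whose pixel is not labelled as a dynamic class.

    keypoints: list of (x, y) image coordinates.
    label_image: 2D list of class IDs, indexed as label_image[row][col].
    dynamic_labels: set of class IDs treated as dynamic (assumed).
    """
    height = len(label_image)
    width = len(label_image[0]) if height else 0
    kept = []
    for x, y in keypoints:
        col, row = int(round(x)), int(round(y))
        if not (0 <= row < height and 0 <= col < width):
            continue  # keypoint falls outside the label image
        if label_image[row][col] not in dynamic_labels:
            kept.append((x, y))
    return kept


# Hypothetical 2x3 tag image in which class 15 marks a dynamic object.
label_image = [[0, 0, 15],
               [0, 15, 15]]
keypoints = [(0.0, 0.0), (2.0, 0.0), (1.2, 0.9), (5.0, 5.0)]
kept = static_keypoints(keypoints, label_image, dynamic_labels={15})
```

The surviving points would then be the only ones passed on to camera pose estimation.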

