Visual SLAM method based on semantic segmentation of deep learning

A visual SLAM technique based on semantic segmentation and deep learning, applied in the field of computer vision sensing. It addresses problems such as changes in visual appearance and the resulting failure of loop closure detection, and it enhances robustness and improves accuracy.

Examples

Embodiment 1

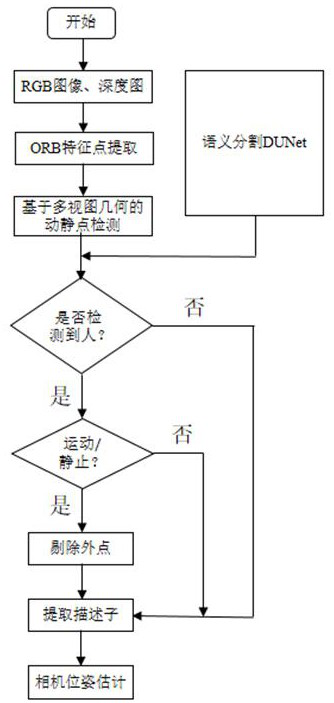

[0068] A visual SLAM method based on semantic segmentation of deep learning:

[0069] Step 1. After obtaining the image data collected by the RGB-D depth camera, extract the ORB feature points of the original RGB image, and use the DUNet semantic segmentation network to perform pixel-level semantic segmentation on the RGB image to obtain a semantic segmentation mask. The DUNet network model loaded in the visual SLAM system is trained on the PASCAL VOC dataset and can detect a total of 20 categories when used for segmentation tasks. If a real application encounters more categories than PASCAL VOC covers, the larger MS COCO dataset can also be used to train the deep learning network. The input of the DUNet network is the original RGB image of size h×w×3, and the output of the network is a matrix of size h×w×n, where h is the pixel height of the image, w is the pixel width of the image, and n is the number of dynamic objects. For each outpu...
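The shapes described in Step 1 can be illustrated with a small sketch. The text is cut off before it explains how each output channel is processed, so the following is only an assumption: it thresholds the h×w×n scores into a single h×w dynamic-object mask and discards feature points that land on masked pixels, a common pattern in dynamic-scene SLAM. The names `dynamic_mask`, `filter_keypoints`, and the `threshold` parameter are hypothetical and do not come from the patent. Plain nested lists stand in for the network output and for the ORB keypoint coordinates.

```python
import math
from typing import List, Sequence, Tuple

Scores = Sequence[Sequence[Sequence[float]]]  # h x w x n per-channel scores
Mask = List[List[bool]]                       # h x w, True = dynamic pixel


def dynamic_mask(scores: Scores, threshold: float = 0.5) -> Mask:
    """Collapse h x w x n scores into one h x w boolean mask.

    ASSUMPTION: a pixel counts as dynamic when any of its n channels
    exceeds `threshold`; the source does not state this rule.
    """
    return [[any(s > threshold for s in pixel) for pixel in row]
            for row in scores]


def filter_keypoints(keypoints: Sequence[Tuple[float, float]],
                     mask: Mask) -> List[Tuple[float, float]]:
    """Keep (x, y) keypoints that fall inside the image on non-dynamic pixels.

    ASSUMPTION: points on dynamic pixels are simply dropped.
    """
    h = len(mask)
    w = len(mask[0]) if h else 0
    kept = []
    for x, y in keypoints:
        col, row = math.floor(x), math.floor(y)
        if 0 <= row < h and 0 <= col < w and not mask[row][col]:
            kept.append((x, y))
    return kept


# Toy 2 x 3 image with n = 2 channels (values are made up for illustration).
scores = [
    [[0.1, 0.0], [0.9, 0.0], [0.2, 0.3]],
    [[0.0, 0.8], [0.1, 0.1], [0.0, 0.0]],
]
mask = dynamic_mask(scores)
points = [(0.2, 0.1), (1.4, 0.7), (0.5, 1.2), (2.9, 1.9), (3.5, 0.0)]
static_points = filter_keypoints(points, mask)
```

In a real pipeline the keypoints would come from an ORB detector and the scores from the segmentation network; the sketch only shows how an h×w×n output relates to per-pixel filtering under the stated assumptions.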
