Fingertip point extraction method based on pixel classifier and ellipse fitting

An extraction method based on ellipse fitting, applied in instruments, character and pattern recognition, computer components, and related fields. It addresses problems such as the difficulty of opening the operation window, and it achieves low cost.

Examples

Embodiment 1

[0068] Segmentation of the hand region:

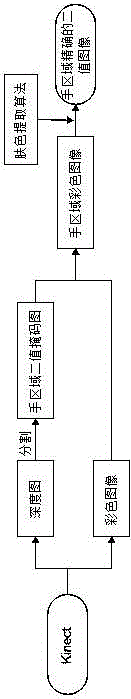

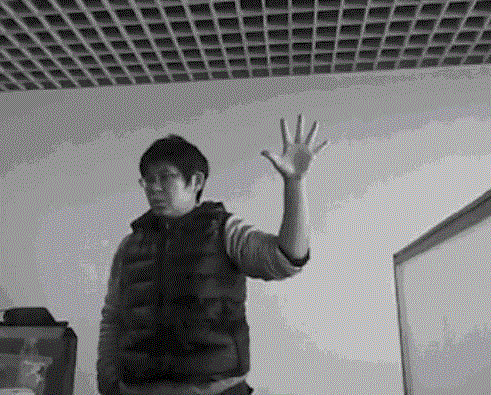

[0069] As shown in Figures 1 to 5, segmentation in the two-dimensional direction proceeds as follows: OpenNI is first used to track the position of the palm point; then, taking the palm point as the center, a rectangle containing the hand and arm area is segmented out.

[0070] The rectangle is defined by the coordinates of the palm point in the two-dimensional direction and by its side length.
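The two-dimensional step can be sketched as a simple window crop. This is a minimal illustration, not the patent's implementation: the function name `crop_square`, the list-of-rows image layout, and the clipping of the window at the image borders are all assumptions, since the source does not say how borders are handled.

```python
def crop_square(image, palm_xy, side):
    """Return the side x side window of `image` centered on the palm point.

    `image` is a list of rows. The window is clipped at the image borders
    (an assumption; the source does not describe border handling).
    """
    height, width = len(image), len(image[0])
    cx, cy = palm_xy
    half = side // 2
    x0, x1 = max(cx - half, 0), min(cx + half + 1, width)
    y0, y1 = max(cy - half, 0), min(cy + half + 1, height)
    return [row[x0:x1] for row in image[y0:y1]]


# 6x8 toy image whose pixel value encodes its own (x, y) position.
toy = [[(x, y) for x in range(8)] for y in range(6)]
window = crop_square(toy, palm_xy=(4, 3), side=3)
```

In this toy case the palm point ends up at the center of the returned window; near an image corner the window simply becomes smaller.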

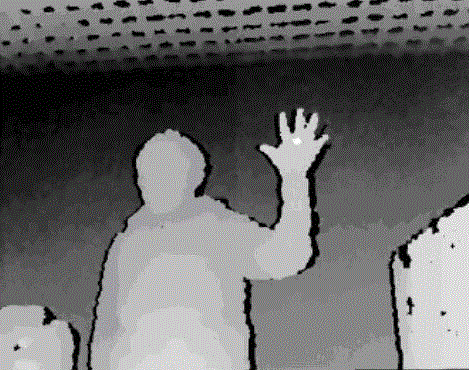

[0071] Segmentation in the depth direction: the depth value of the hand point is extracted from the depth map provided by Kinect and used to determine the hand and arm regions. A point whose depth value satisfies the depth condition relative to the hand point is taken to belong to the hand and arm region, namely:

[0072] where the result marks whether a pixel belongs to the hand and arm area, the condition compares the depth value of the tracked hand point with the depth value at that pixel, and k represents the maximum depth range of th...
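A depth test of this kind might look like the sketch below. The exact inequality is lost in the source, so the form |d - hand_depth| <= k is an assumption, as are the function name `depth_mask` and the toy depth values.

```python
def depth_mask(depth_map, hand_depth, k):
    """Mark pixels whose depth lies within k of the tracked hand point.

    Returns a list of rows of 0/1 flags. The test |d - hand_depth| <= k is an
    assumed form of the condition; the source's exact inequality is missing.
    """
    return [[1 if abs(d - hand_depth) <= k else 0 for d in row]
            for row in depth_map]


# Toy depth map: hand near 800, background near 2000 (illustrative values).
depth = [
    [2000, 2000, 810],
    [2000, 795, 805],
    [900, 790, 2000],
]
mask = depth_mask(depth, hand_depth=800, k=50)
```

Pixels flagged 1 form the candidate hand and arm region; the pixel at depth 900 is rejected because it lies more than k away from the hand point.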

Embodiment 2

[0074] Extract the skin color area:

[0075] As shown in Figures 6 to 9, the edges of the hand and arm areas are relatively jagged because of the characteristics of the Kinect data, which affects the accuracy of subsequent contour extraction. Therefore, once the hand and arm area has been obtained, the Bayesian skin color model is applied to remove non-skin-colored areas, yielding an accurate hand and arm area.
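The source does not show how its Bayesian skin color model is built, so the following is only a generic histogram-based Bayes classifier given for orientation. The function names, the use of pre-quantized color bin labels, and the training data are all hypothetical.

```python
from collections import Counter


def train_skin_model(skin_colors, other_colors):
    """Build class-conditional histograms and a prior from labelled color bins."""
    skin_hist, other_hist = Counter(skin_colors), Counter(other_colors)
    n_skin, n_other = len(skin_colors), len(other_colors)
    prior_skin = n_skin / (n_skin + n_other)
    return skin_hist, other_hist, n_skin, n_other, prior_skin


def skin_posterior(color, model):
    """P(skin | color) by Bayes' rule; 0.0 for colors never seen in training."""
    skin_hist, other_hist, n_skin, n_other, prior_skin = model
    joint_skin = skin_hist[color] / n_skin * prior_skin
    joint_other = other_hist[color] / n_other * (1.0 - prior_skin)
    total = joint_skin + joint_other
    return joint_skin / total if total > 0 else 0.0


# Hypothetical training data: colors already quantized to bin labels.
model = train_skin_model(
    skin_colors=["a", "a", "a", "b"],
    other_colors=["b", "b", "c", "c"],
)
```

A pixel would then be kept as skin when `skin_posterior` exceeds a chosen threshold; the threshold value is a tuning choice that the source excerpt does not specify.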

[0076] 1. Creation of skin color model

[0077] The YCbCr color space is used to construct the skin color model because of its discreteness, its consistency with human visual perception, its separation of luminance and chrominance, and the compactness of the region in which skin colors cluster. In the YCbCr color space, luminance is carried by the single component Y, and color is carried by the two color-difference components Cb and Cr: Cb is the blue-difference chroma and Cr is the red-difference chroma.
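For reference, one common RGB-to-YCbCr conversion is the full-range form with ITU-R BT.601 luma weights, as used in JFIF. Whether the patent uses this variant or a studio-range one is not stated in the source, so treat the choice here as an assumption.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to full-range YCbCr (BT.601 weights, JFIF offsets).

    Results are floats; rounding and clamping to 0..255 are left to the caller.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


gray = rgb_to_ycbcr(128, 128, 128)   # neutral gray: both chroma values sit at 128
reddish = rgb_to_ycbcr(255, 0, 0)    # pure red: Cr rises above 128, Cb falls below
```

Because brightness is confined to Y, a skin model can be built on the (Cb, Cr) pair alone, which is the separation of luminance and chrominance described above.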

[0078]

[0079] First, co...

Embodiment 3

[0087] As shown in Figures 10 to 21, the hand area is detected from the depth values provided by Kinect, but those depth values contain errors, so the contour of the hand area is inaccurate during motion; motion blur makes the result even worse. Fingertip detection algorithms based on templates and contours have low detection accuracy for slightly curved fingertips, while algorithms based on morphological operations are slow and frequently misdetect fingertips. Therefore, the present invention uses a pixel-classifier-based method to extract the finger and fingertip areas, and then uses ellipse fitting to obtain the position of the fingertip point.
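The source excerpt does not say which ellipse-fitting procedure is used. As a stand-in, the sketch below fits an ellipse to a pixel region from its mean and covariance (a moment-based fit, not necessarily the patent's method). The function name and the synthetic test region are assumptions.

```python
import math


def fit_ellipse_moments(points):
    """Fit an ellipse to (x, y) points from their mean and covariance.

    Returns (center, semi_major, semi_minor, angle). Each semi-axis is
    2 * sqrt(eigenvalue), which recovers the semi-axes of a uniformly
    filled ellipse.
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Eigenvalues of the 2x2 covariance matrix in closed form.
    mean = (sxx + syy) / 2
    spread = math.hypot((sxx - syy) / 2, sxy)
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)  # major-axis direction
    semi_major = 2 * math.sqrt(mean + spread)
    semi_minor = 2 * math.sqrt(max(mean - spread, 0.0))
    return (mx, my), semi_major, semi_minor, angle


# Synthetic fingertip-like region: a filled ellipse with semi-axes 10 and 3.
region = [(x, y) for x in range(-10, 11) for y in range(-3, 4)
          if (x / 10) ** 2 + (y / 3) ** 2 <= 1]
center, semi_major, semi_minor, angle = fit_ellipse_moments(region)
```

On this region the fit recovers semi-axes close to 10 and 3, with the major axis along x. One plausible way to turn such a fit into a fingertip point is to take the major-axis endpoint that lies farther from the palm, but the excerpt does not confirm that rule.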

[0088] (1) Extracting the finger area with the pixel classifier method

[0089] According to the geometric characteristics of fingertips, this paper defines a square as a pixel classifier, and classifies pixels a...
