Active content caching method based on federated learning

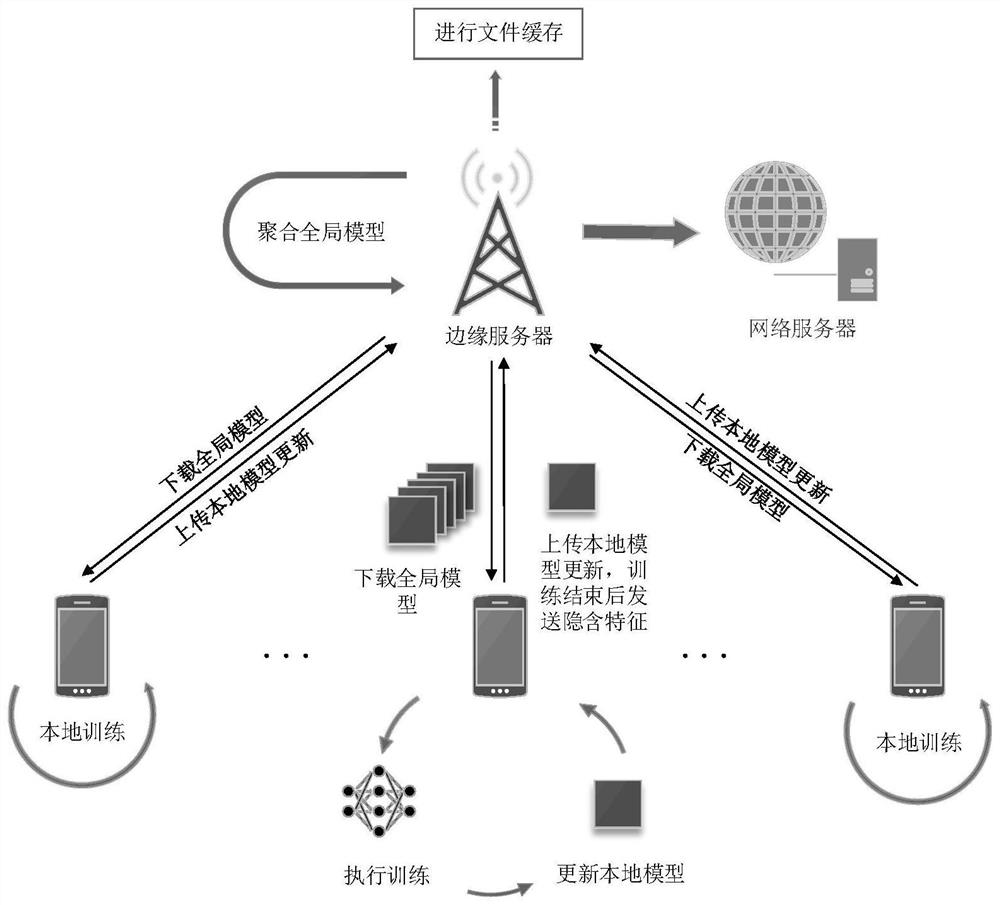

A content caching technique based on federated learning, applied in ensemble learning, instrumentation, digital data processing, and related fields. It addresses three problems: data being attacked or intercepted, the difficulty of collecting historical demand data, and the difficulty of large-scale learning.

Embodiment Construction

[0072] The present invention will be further described below in conjunction with specific examples.

[0073] Take the MovieLens dataset as an example. The MovieLens 100K dataset contains 100,000 ratings of 1,682 movies by 943 users. Each entry consists of a user ID, a movie ID, a rating, and a timestamp. The dataset also provides demographic information about each user, such as gender, age, and occupation. Because users usually rate a movie after watching it, we treat each rating as a request for the corresponding movie file, and we treat popular movie files as the files that should be cached at the edge server base station.
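The assumption in this paragraph (one rating equals one file request, and popular files are the caching candidates) can be illustrated with a minimal Python sketch. It is not the federated method of this invention: it counts requests centrally, purely to show how the dataset entries map to a popularity ranking. The sample rows are invented for illustration, the tab-separated field order follows the entry layout described above, and the names `parse_ratings`, `top_k_files`, and `cache_hit_ratio` are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, NamedTuple


class Rating(NamedTuple):
    user_id: int
    movie_id: int
    rating: int
    timestamp: int


def parse_ratings(lines: Iterable[str]) -> List[Rating]:
    """Parse tab-separated rows: user ID, movie ID, rating, timestamp."""
    ratings = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        user_id, movie_id, rating, timestamp = (int(x) for x in line.split("\t"))
        ratings.append(Rating(user_id, movie_id, rating, timestamp))
    return ratings


def top_k_files(ratings: List[Rating], k: int) -> List[int]:
    """Return the k most requested movie IDs (ties broken by lower ID)."""
    counts = Counter(r.movie_id for r in ratings)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [movie_id for movie_id, _ in ranked[:k]]


def cache_hit_ratio(ratings: List[Rating], cached: List[int]) -> float:
    """Fraction of requests that a cache holding `cached` would serve."""
    if not ratings:
        return 0.0
    cached_set = set(cached)
    hits = sum(1 for r in ratings if r.movie_id in cached_set)
    return hits / len(ratings)


# Hypothetical sample rows (not taken from the real dataset).
SAMPLE_ROWS = [
    "1\t50\t5\t881250949",
    "2\t50\t4\t881250950",
    "3\t50\t3\t881250951",
    "1\t100\t4\t881250952",
    "2\t100\t5\t881250953",
    "3\t7\t2\t881250954",
]

if __name__ == "__main__":
    sample = parse_ratings(SAMPLE_ROWS)
    cached = top_k_files(sample, k=2)
    print("cached files:", cached)
    print("hit ratio:", cache_hit_ratio(sample, cached))
```

In this sketch the cache size `k` stands in for the storage capacity of the edge server base station, and the hit ratio is measured on the same requests used for ranking, so it is only an optimistic illustration of the metric.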

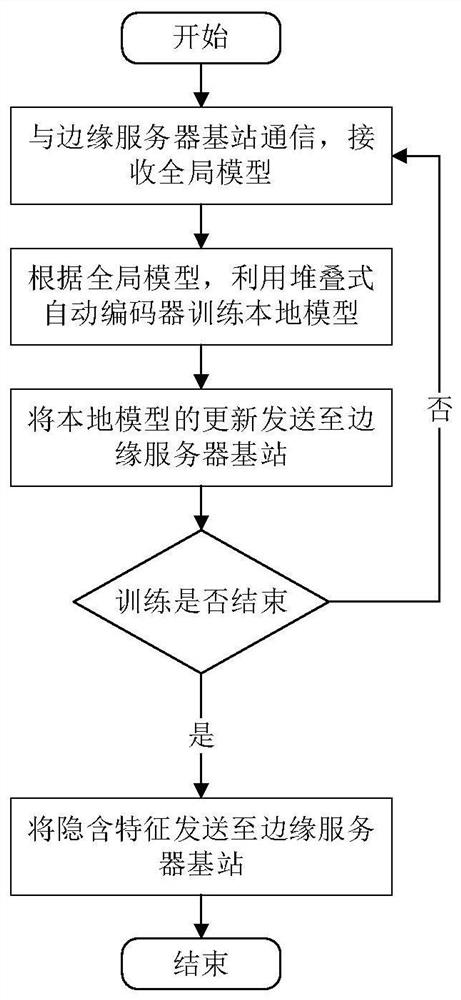

[0074] An active content caching method based on federated learning, comprising the following steps:

[0075] Step 1: Information collection and model building

[0076] Step 1.1 Collect information: The edge server base station collects two types of information:

[0077] 1) The access request ...
