Self-supervised learning fusion method for multi-band images

A self-supervised learning fusion method, applied in the field of image fusion, that addresses two problems: limited fusion results and the lack of labeled images.


Embodiment Construction

[0027] The self-supervised learning fusion method for multi-band images, based on multiple discriminators, comprises the following steps:

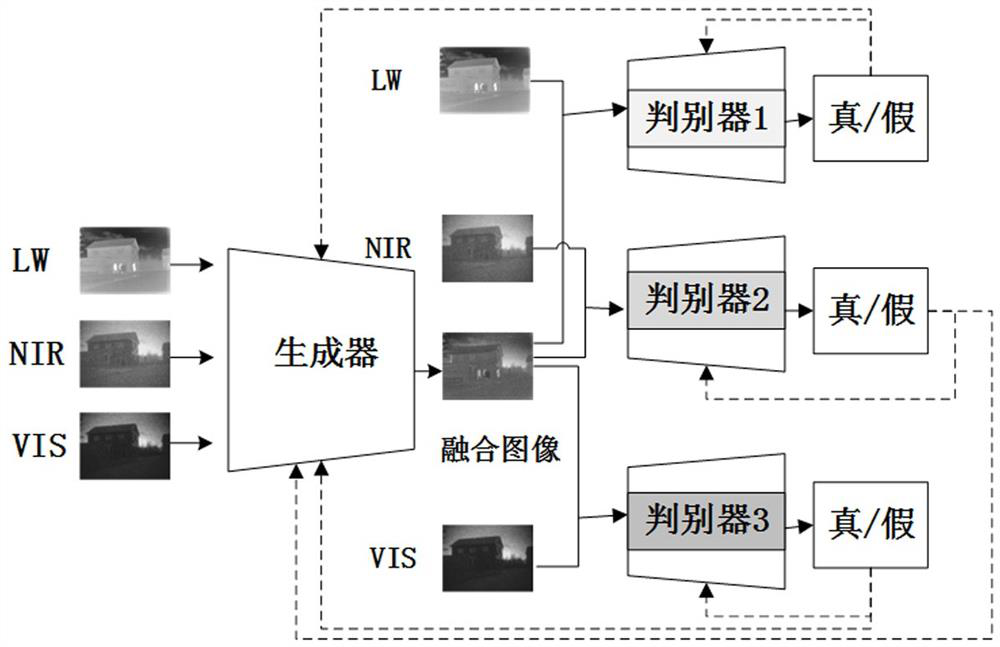

[0028] The first step is to design and build the generative adversarial network (GAN): a multi-discriminator GAN structure consisting of one generator and multiple discriminators. Taking n-band image fusion as an example, the network has one generator and n discriminators.
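The structure described in [0028] can be sketched as a container holding one generator and n discriminators. This is a minimal illustration only: the names `MultiDiscriminatorGAN` and `build_gan` are hypothetical, and the placeholder generator (pixel-wise mean) and placeholder discriminators (sigmoid of mean intensity) are assumptions standing in for the trained networks, which the text does not specify here.

```python
from dataclasses import dataclass
from typing import Callable, List

import numpy as np

Image = np.ndarray  # one single-channel image of shape (H, W)


@dataclass
class MultiDiscriminatorGAN:
    """One generator plus one discriminator per input band (structure only)."""
    generator: Callable[[List[Image]], Image]
    discriminators: List[Callable[[Image], float]]


def build_gan(n_bands: int) -> MultiDiscriminatorGAN:
    # Placeholder generator (assumption): pixel-wise mean of the bands.
    def generator(bands: List[Image]) -> Image:
        return np.mean(np.stack(bands, axis=0), axis=0)

    # Placeholder discriminator (assumption): maps mean intensity into (0, 1).
    def make_discriminator() -> Callable[[Image], float]:
        def discriminator(image: Image) -> float:
            return float(1.0 / (1.0 + np.exp(-image.mean())))
        return discriminator

    # n-band fusion: one generator, n discriminators.
    return MultiDiscriminatorGAN(
        generator, [make_discriminator() for _ in range(n_bands)]
    )


# Three constant "bands" as toy input.
bands = [np.full((4, 4), v, dtype=np.float32) for v in (0.2, 0.5, 0.8)]
gan = build_gan(n_bands=len(bands))
fused = gan.generator(bands)
scores = [d(fused) for d in gan.discriminators]
```

Each discriminator returns its own score for the same fused image, so a training loop built on this layout would have n separate adversarial signals to combine.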

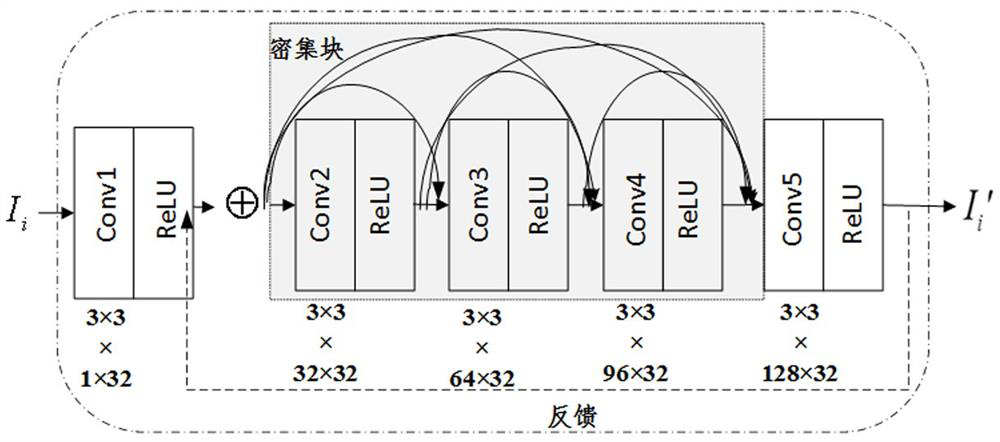

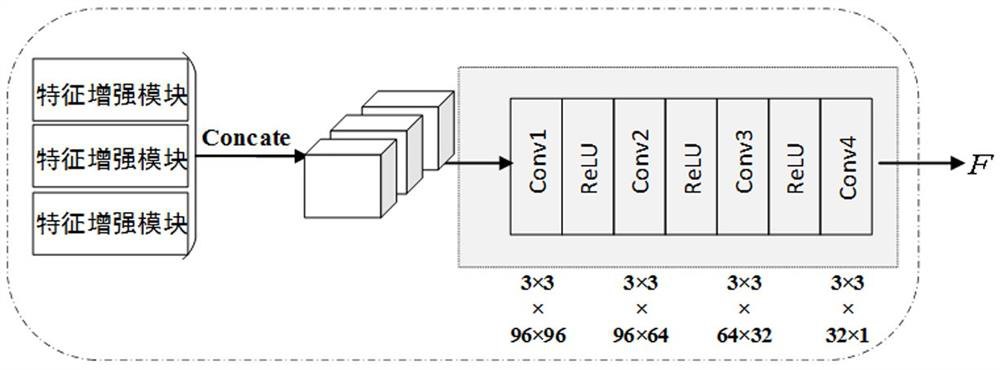

[0029] The generator network consists of two parts: a feature enhancement module and a feature fusion module. The feature enhancement module extracts features from the source images of the different bands and enhances them to obtain multi-channel feature maps for each band. The feature fusion module uses a concatenation layer to join the features along the channel dimension and reconstructs the concatenated feature map into a fused image, as follows:

[0030] The feature ...
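The two-part generator outlined in [0029] can be illustrated with a small NumPy sketch of the data flow: per-band feature extraction, concatenation along the channel dimension, and reconstruction into a single image. The function names, the 3x3 kernels, the ReLU used as "enhancement", and the 1x1 reconstruction weights are all assumptions made for illustration; the actual layer design is not given in the text above.

```python
import numpy as np


def conv3x3(image: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Same-size 3x3 filtering: (H, W) image, (C, 3, 3) kernels -> (C, H, W)."""
    h, w = image.shape
    padded = np.pad(image, 1, mode="edge")
    out = np.zeros((kernels.shape[0], h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            # Broadcast each kernel tap (C, 1, 1) over the shifted image (H, W).
            out += kernels[:, dy, dx, None, None] * padded[dy:dy + h, dx:dx + w]
    return out


def feature_enhancement(image: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Multi-channel features for one band; ReLU stands in for enhancement."""
    return np.maximum(conv3x3(image, kernels), 0.0)


def feature_fusion(feature_maps: list, weights: np.ndarray) -> np.ndarray:
    """Concatenate band features along channels, then reconstruct one image."""
    stacked = np.concatenate(feature_maps, axis=0)  # (n_bands * C, H, W)
    return np.tensordot(weights, stacked, axes=1)   # 1x1 projection -> (H, W)


# Toy run with random inputs and random (untrained) weights.
rng = np.random.default_rng(0)
n_bands, channels, h, w = 3, 4, 8, 8
bands = [rng.random((h, w)) for _ in range(n_bands)]
kernels = [rng.standard_normal((channels, 3, 3)) for _ in range(n_bands)]
weights = rng.standard_normal(n_bands * channels)

features = [feature_enhancement(b, k) for b, k in zip(bands, kernels)]
fused = feature_fusion(features, weights)
```

With 3 bands and 4 channels per band, the concatenated feature map has 12 channels, and the reconstruction step collapses them back to a single 8x8 fused image.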
