Multi-Camera/Lidar/IMU-based multi-sensor SLAM method

A multi-sensor, multi-camera technology applied in the field of multi-sensor SLAM. It addresses the problem that existing sensor configurations cannot cope with complex outdoor environments, and it achieves accurate lidar frame poses and improved robustness.

Detailed Description of Embodiments

[0057] The present invention is described in detail below with reference to the specific embodiments shown in the accompanying drawings. These embodiments do not limit the present invention; structural, methodological, or functional changes made by those of ordinary skill in the art on the basis of these embodiments all fall within the scope of protection of the present invention.

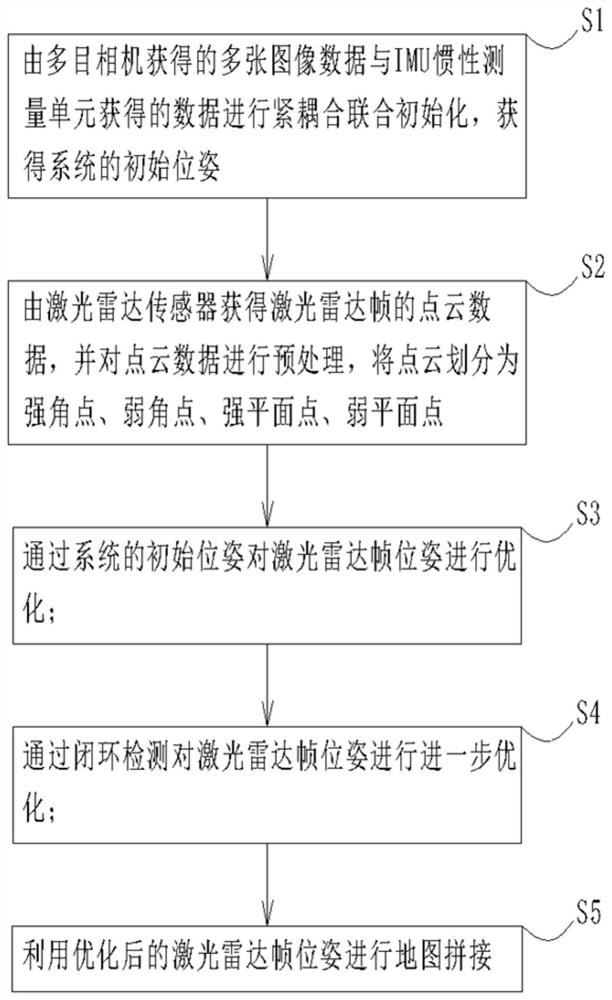

[0058] As shown in Figure 1, a Multi-Camera/Lidar/IMU-based multi-sensor SLAM method includes the following steps:

[0059] S1: The images captured by the multi-camera system and the measurements from the IMU (inertial measurement unit) are tightly coupled and jointly initialized to obtain the initial pose of the system;
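The excerpt does not say how the joint visual-inertial initialization is carried out. As one illustrative ingredient only, visual-inertial systems commonly estimate the initial roll and pitch by aligning the mean accelerometer reading from a short static window with gravity. The sketch below shows that single step under stated assumptions: the rig is stationary during the window, the accelerometer reports specific force (about +9.81 m/s² along world "up" when static), and the world z-axis points up. The function name is hypothetical, and this is not the patent's tightly coupled procedure, which would typically also refine velocity and IMU biases together with the camera poses.

```python
import numpy as np


def initial_rotation_from_gravity(accel_samples):
    """Hypothetical helper: body-to-world rotation R_wb from a static IMU window.

    accel_samples: (N, 3) accelerometer readings in the body frame, assumed
    to be taken while the rig is stationary. The returned rotation maps the
    measured "up" direction onto world +z. Yaw is unobservable from gravity
    alone; here it is fixed implicitly by choosing the minimal rotation.
    """
    a = np.mean(np.asarray(accel_samples, dtype=float), axis=0)
    norm = np.linalg.norm(a)
    if norm < 1e-9:
        raise ValueError("accelerometer mean is ~zero; cannot infer gravity")
    up_b = a / norm                      # world "up" expressed in the body frame
    up_w = np.array([0.0, 0.0, 1.0])

    v = np.cross(up_b, up_w)             # rotation axis (unnormalized)
    s = np.linalg.norm(v)                # sin of the rotation angle
    c = float(np.dot(up_b, up_w))        # cos of the rotation angle
    if s < 1e-12:
        # Already aligned, or exactly upside down (rotate 180 deg about x)
        return np.eye(3) if c > 0 else np.diag([1.0, -1.0, -1.0])

    # Rodrigues' formula for the rotation taking up_b onto up_w
    K = np.array([[0.0, -v[2], v[1]],
                  [v[2], 0.0, -v[0]],
                  [-v[1], v[0], 0.0]])
    return np.eye(3) + K + (K @ K) * ((1.0 - c) / s**2)
```

In practice the same static window is often also used to take a first gyroscope-bias estimate as the mean gyro reading; the visual measurements then constrain the remaining states during the joint initialization.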

[0060] Obtaining the initial system pose includes the following steps:

[0061] S11: Trigger the multiple cameras synchronously in hardware using a Raspberry Pi;
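The text only states that a Raspberry Pi provides hardware-synchronous triggering. A minimal sketch of such a trigger loop follows, assuming all cameras share one trigger line on a single output pin and start exposure on the rising edge; the function name, rate, and pulse width are hypothetical. The pin writer, clock, and sleep are injected so the timing logic can be tested without hardware. Deadlines are computed from the start time rather than by sleeping a fixed period each loop, so scheduling jitter does not accumulate.

```python
import time
from typing import Callable, List


def run_trigger(write_pin: Callable[[bool], None],
                n_pulses: int,
                rate_hz: float,
                pulse_width_s: float = 0.001,
                clock: Callable[[], float] = time.monotonic,
                sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Hypothetical trigger loop: emit n_pulses rising edges at rate_hz.

    Returns the clock time at which each rising edge was issued, which can
    serve as the shared capture timestamp for all cameras in that frame set.
    """
    period = 1.0 / rate_hz
    if not 0.0 < pulse_width_s < period:
        raise ValueError("pulse width must be positive and shorter than the period")

    edge_times = []
    start = clock()
    for k in range(n_pulses):
        # Wait until the k-th absolute deadline (no drift from loop overhead)
        delay = start + k * period - clock()
        if delay > 0:
            sleep(delay)
        write_pin(True)               # rising edge: every camera exposes together
        edge_times.append(clock())
        sleep(pulse_width_s)          # hold the line high for the pulse width
        write_pin(False)
    return edge_times
```

On a Raspberry Pi, `write_pin` could wrap `GPIO.output(pin, state)` from the RPi.GPIO library after `GPIO.setup(pin, GPIO.OUT)`; the pin number is an assumption. Because software timing on Linux jitters, the benefit of the hardware trigger is that all cameras expose at the same instant even if the period itself varies slightly.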

[0062] S12: Acquire the multi-camera image data and the IMU data from the multi-camera system, and align the multi-camera image...
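The description of S12 is truncated, so the exact alignment procedure is not given here. One common approach, shown as an assumption rather than as the patent's method, is to split the IMU stream at image timestamps: for each pair of consecutive frames, keep the IMU samples strictly between them and insert linearly interpolated samples at both frame times, so that IMU integration covers exactly the inter-frame interval. The sketch assumes both streams are already on one clock (which hardware triggering makes easier) and that each IMU row is `[gx, gy, gz, ax, ay, az]`; the function name is hypothetical.

```python
import numpy as np


def imu_segments(img_ts, imu_ts, imu_data):
    """Hypothetical helper: split IMU data into per-frame-interval segments.

    img_ts:   sorted (M,) image timestamps
    imu_ts:   sorted (N,) IMU timestamps on the same clock
    imu_data: (N, 6) IMU samples [gyro xyz, accel xyz]
    Returns a list of (ts, data) pairs, one per consecutive image pair, where
    ts starts and ends exactly at the two image timestamps.
    """
    img_ts = np.asarray(img_ts, dtype=float)
    imu_ts = np.asarray(imu_ts, dtype=float)
    imu_data = np.asarray(imu_data, dtype=float)
    if img_ts[0] < imu_ts[0] or img_ts[-1] > imu_ts[-1]:
        raise ValueError("IMU data must span all image timestamps")

    def interp(t):
        # Linearly interpolate every IMU channel at time t
        return np.array([np.interp(t, imu_ts, imu_data[:, j])
                         for j in range(imu_data.shape[1])])

    segments = []
    for t0, t1 in zip(img_ts[:-1], img_ts[1:]):
        inside = (imu_ts > t0) & (imu_ts < t1)   # strictly between the frames
        ts = np.concatenate(([t0], imu_ts[inside], [t1]))
        data = np.vstack([interp(t0), imu_data[inside], interp(t1)])
        segments.append((ts, data))
    return segments
```

Excluding samples that coincide with a frame time avoids duplicates, since the interpolated boundary sample already equals the measured one in that case.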
