Model training method, model training device, storage medium and electronic equipment

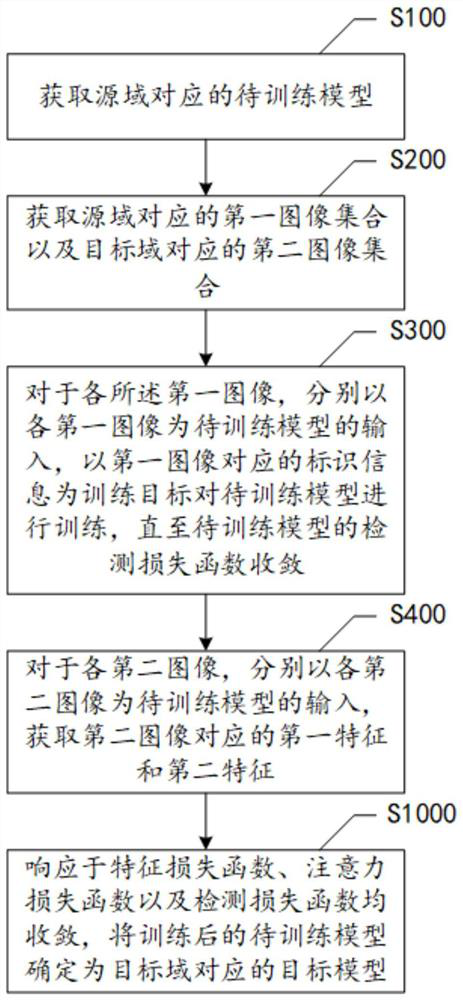

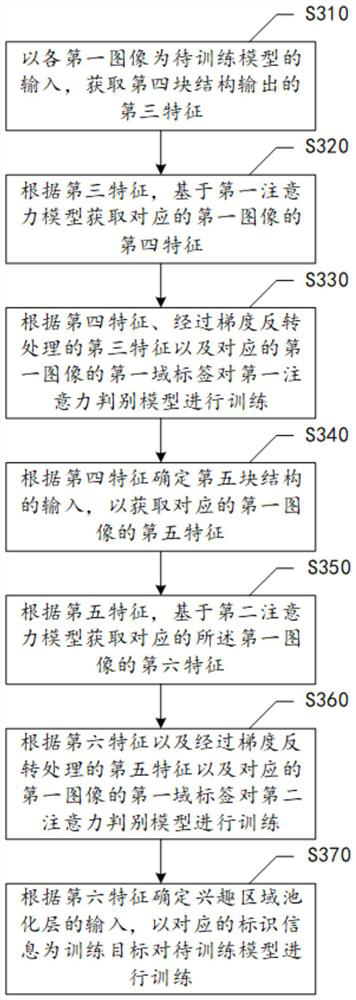

A model training technology, applied in the field of data processing, that addresses the problem of low detection accuracy with RGB pixel matrices, thereby improving object detection ability and reducing labeling cost.

Embodiment Construction

[0028] The present invention is described below based on examples, but it is not limited to these examples. In the following detailed description, some specific details are set forth. Those skilled in the art can fully understand the present invention without the description of these details. In order not to obscure the essence of the present invention, well-known methods, procedures, processes, components and circuits have not been described in detail.

[0029] Additionally, those of ordinary skill in the art will appreciate that the drawings provided herein are for illustrative purposes and are not necessarily drawn to scale.

[0030] Unless the context clearly requires otherwise, the words "including", "comprising" and similar words in the description should be interpreted as inclusive rather than exclusive or exhaustive; that is, as meaning "including but not limited to".

[0031] In the description of the present invention, it shoul...
