A short video classification method and device based on multimodal joint learning

A short video classification technology, applied in neural learning methods, video data clustering/classification, and video data retrieval, that addresses the multi-label classification problem, ensures objectivity, and improves classification accuracy.

Embodiment Construction

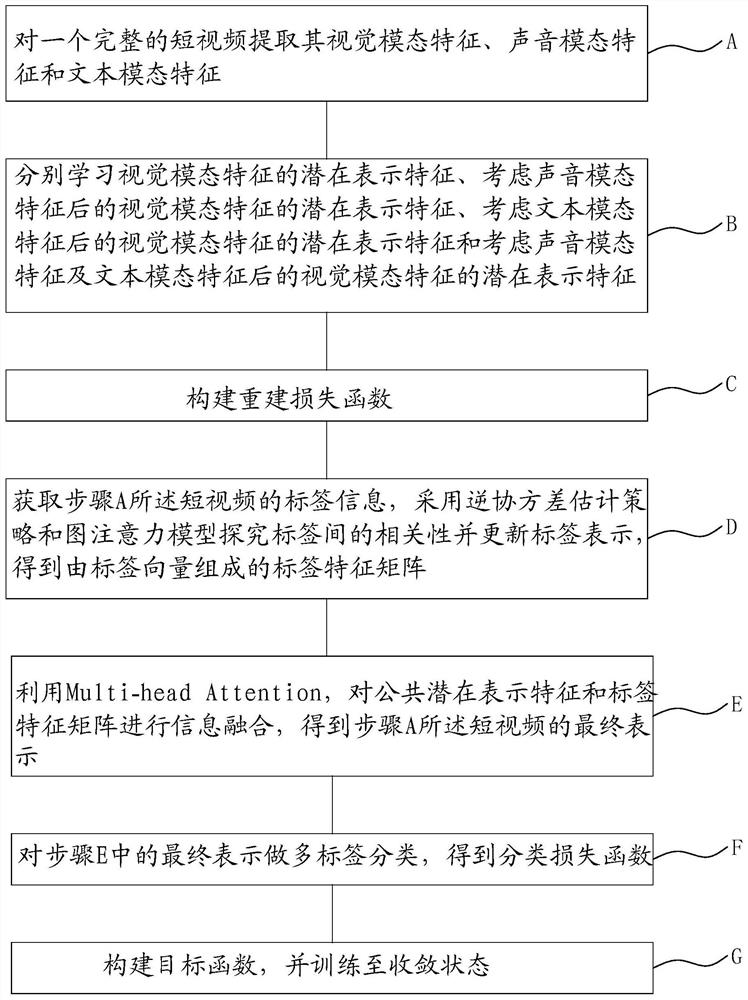

[0061] As shown in Figure 1, the short video classification method based on multimodal joint learning includes the following steps:

[0062] A. Extract the visual modality feature z_v, the audio modality feature z_a, and the text modality feature z_t from a complete short video. Specifically:

[0063] First apply a ResNet (residual network) to the key frames of the short video, then apply an average pooling operation over all frames to obtain the visual modality feature z_v:

[0064] Extract the audio modality feature z_a using a long short-term memory (LSTM) network:

[0065] Extract the text modality feature z_t using a multi-layer perceptron:

[0066] Here, X = {X_v, X_a, X_t} represents the short video, where X_v, X_a, and X_t denote the original visual information, original audio information, and original text information of the short video, respectively; β_v, β_a, and β_t respectively denote the network parameters used to extract the visual modal features, audio mo...
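The three extraction operations in step A can be illustrated with a minimal pure-Python sketch. It is not the patent's implementation: the per-frame vectors stand in for ResNet outputs, the random weights stand in for the trained parameters β_a and β_t, and every dimension, helper name (`average_pool`, `lstm_last_hidden`, `mlp`, `random_layer`), the ReLU activation, and the use of the final LSTM hidden state as z_a are assumptions made only for illustration.

```python
import math
import random

def average_pool(frame_features):
    """Mean over frames: T vectors of length D -> one vector of length D."""
    n = len(frame_features)
    return [sum(column) / n for column in zip(*frame_features)]

def linear(x, weights, bias):
    """Affine map: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))

def lstm_last_hidden(sequence, params, hidden_size):
    """Run a single-layer LSTM over `sequence`; return the final hidden state.

    Assumption: the final hidden state is used as the audio feature z_a.
    """
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        xh = x + h  # concatenate current input with previous hidden state
        i = [sigmoid(v) for v in linear(xh, *params["i"])]    # input gate
        f = [sigmoid(v) for v in linear(xh, *params["f"])]    # forget gate
        o = [sigmoid(v) for v in linear(xh, *params["o"])]    # output gate
        g = [math.tanh(v) for v in linear(xh, *params["g"])]  # candidate cell
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h

def mlp(x, layers):
    """Multi-layer perceptron: ReLU on hidden layers, linear output layer."""
    for weights, bias in layers[:-1]:
        x = [max(0.0, v) for v in linear(x, weights, bias)]
    weights, bias = layers[-1]
    return linear(x, weights, bias)

def random_layer(rng, n_in, n_out):
    """Hypothetical stand-in for trained parameters: small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)

# Visual: 5 key frames, each already encoded as an 8-d vector (ResNet stand-in).
frame_features = [[rng.random() for _ in range(8)] for _ in range(5)]
z_v = average_pool(frame_features)

# Audio: 10 time steps of 6-d acoustic descriptors, LSTM hidden size 4.
audio_frames = [[rng.random() for _ in range(6)] for _ in range(10)]
lstm_params = {gate: random_layer(rng, 6 + 4, 4) for gate in "ifog"}
z_a = lstm_last_hidden(audio_frames, lstm_params, hidden_size=4)

# Text: a 12-d text vector mapped to a 4-d feature by a two-layer MLP.
text_vector = [rng.random() for _ in range(12)]
mlp_layers = [random_layer(rng, 12, 8), random_layer(rng, 8, 4)]
z_t = mlp(text_vector, mlp_layers)

print(len(z_v), len(z_a), len(z_t))
```

In practice each encoder would be a trained network from a deep learning framework; the sketch only shows how a variable-length input in each modality is reduced to a single fixed-length feature vector.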
