Multi-modal MR image brain tumor segmentation method based on deep learning and multi-guidance

A deep-learning, multi-modal technique applied in the field of image processing. It addresses problems such as low segmentation accuracy, uneven brightness distribution, and blurred target boundaries.

Embodiment Construction

[0031] To make the purpose, features, and advantages of the present invention easier to understand, specific embodiments of the invention are described in detail below with reference to the accompanying drawings.

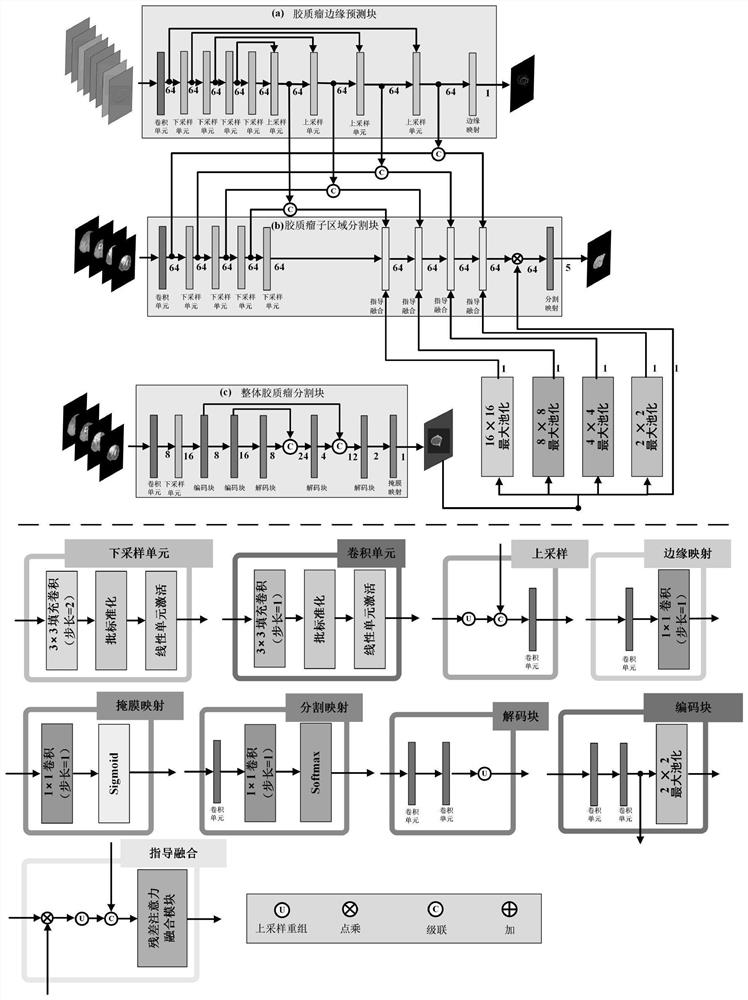

[0032] As shown in Figure 1, the multi-modal MR image brain tumor segmentation method based on deep learning and multi-guidance consists mainly of three network modules: the overall brain glioma segmentation network module, the brain glioma edge prediction network module, and the brain glioma substructure segmentation network module. The overall brain glioma segmentation network module and the brain glioma edge prediction network module generate multiple guide maps, and this guidance information is used to guide the segmentation of the brain glioma sub-regions. The segmentation result produced by the brain glioma substructure segmentation network module is the final segmentation result, and the substructures of different...
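The data flow among the three modules described above can be illustrated with a minimal sketch. It shows only the wiring: two modules produce guide maps, and the third consumes them together with the input modalities. Everything concrete here is an assumption for illustration and is not taken from the patent text: the names `threshold_net`, `edge_net`, and `segment`, the toy thresholding and neighbour rules that stand in for trained networks, and the choice to pass the guide maps to the substructure module as extra input channels.

```python
from typing import Callable, Dict, List

Image = List[List[float]]                  # one 2D slice, values in [0, 1]
Network = Callable[[List[Image]], Image]   # maps a stack of channels to one map


def threshold_net(channels: List[Image]) -> Image:
    """Hypothetical stand-in for a trained segmentation network:
    averages the channels per pixel and thresholds the mean at 0.5."""
    rows, cols = len(channels[0]), len(channels[0][0])
    n = len(channels)
    return [[1.0 if sum(ch[r][c] for ch in channels) / n >= 0.5 else 0.0
             for c in range(cols)] for r in range(rows)]


def edge_net(channels: List[Image]) -> Image:
    """Hypothetical stand-in for an edge prediction network: marks mask
    pixels that touch background (or the image border) in a 4-neighbourhood."""
    mask = threshold_net(channels)
    rows, cols = len(mask), len(mask[0])

    def is_background(r: int, c: int) -> bool:
        return not (0 <= r < rows and 0 <= c < cols) or mask[r][c] == 0.0

    neighbours = ((-1, 0), (1, 0), (0, -1), (0, 1))
    return [[1.0 if mask[r][c] == 1.0
             and any(is_background(r + dr, c + dc) for dr, dc in neighbours)
             else 0.0
             for c in range(cols)] for r in range(rows)]


def segment(modalities: List[Image], whole_net: Network,
            edge_predictor: Network, sub_net: Network) -> Dict[str, Image]:
    """Run the three modules in the order the description gives."""
    # Modules 1 and 2 each produce a guide map from the input modalities.
    whole_guide = whole_net(modalities)
    edge_guide = edge_predictor(modalities)
    # Assumption: guidance is supplied as additional input channels.
    guided_input = modalities + [whole_guide, edge_guide]
    # Module 3 produces the final segmentation result.
    final = sub_net(guided_input)
    return {"whole_guide": whole_guide, "edge_guide": edge_guide, "final": final}


if __name__ == "__main__":
    # Toy 3x3 slice with a single bright pixel, reused as two "modalities".
    slice_ = [[0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0]]
    result = segment([slice_, slice_], threshold_net, edge_net, threshold_net)
    for name, image in result.items():
        print(name, image)
```

In a real system each callable would be a trained network, and the guide maps could be combined with the input in other ways. The sketch only fixes the order of operations and the fact that the substructure module's output is the final result.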
