Bridge crane hoisting safety anti-collision system and method based on dynamic binocular vision

Binocular vision system and bridge crane technology, applied in safety devices, transportation and packaging, load-hanging components, etc. It addresses the problems of high safety risk and the difficulty of judging when to trigger an emergency stop, with the effects of reducing complexity and improving reliability and robustness.


Embodiment Construction

[0059] The present invention will be described in further detail below in conjunction with the accompanying drawings.

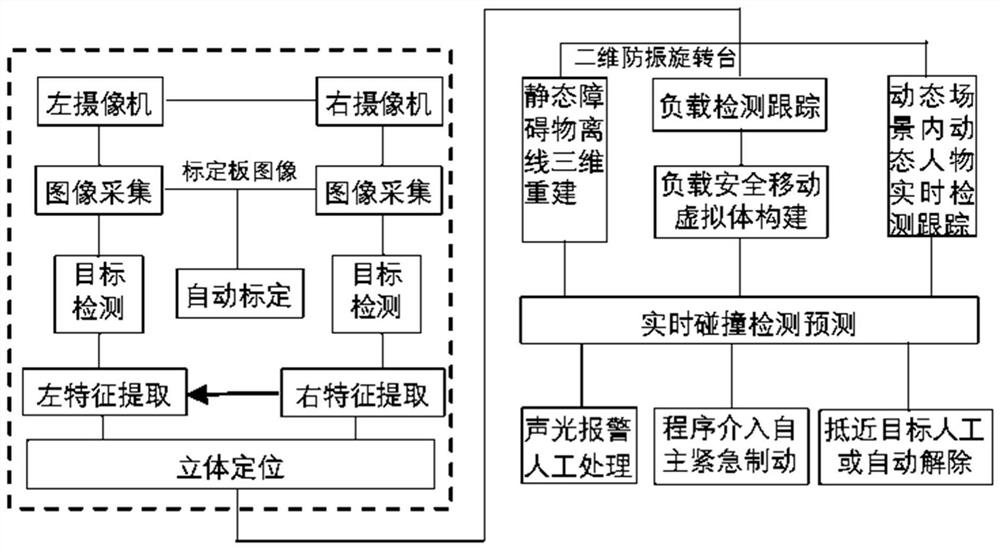

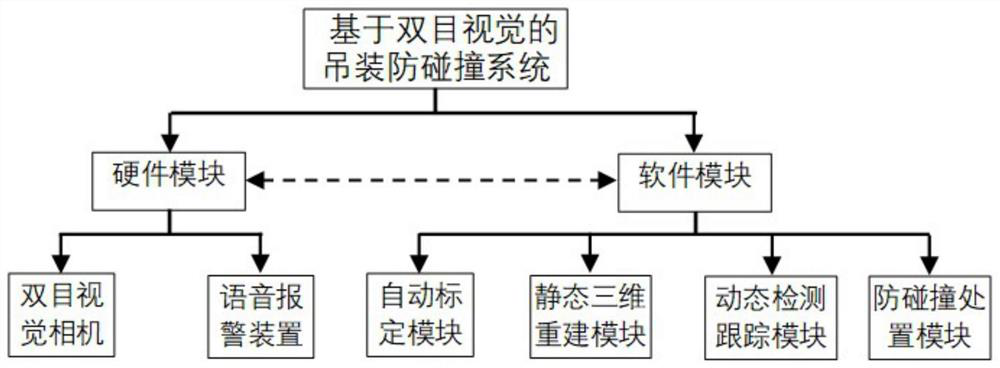

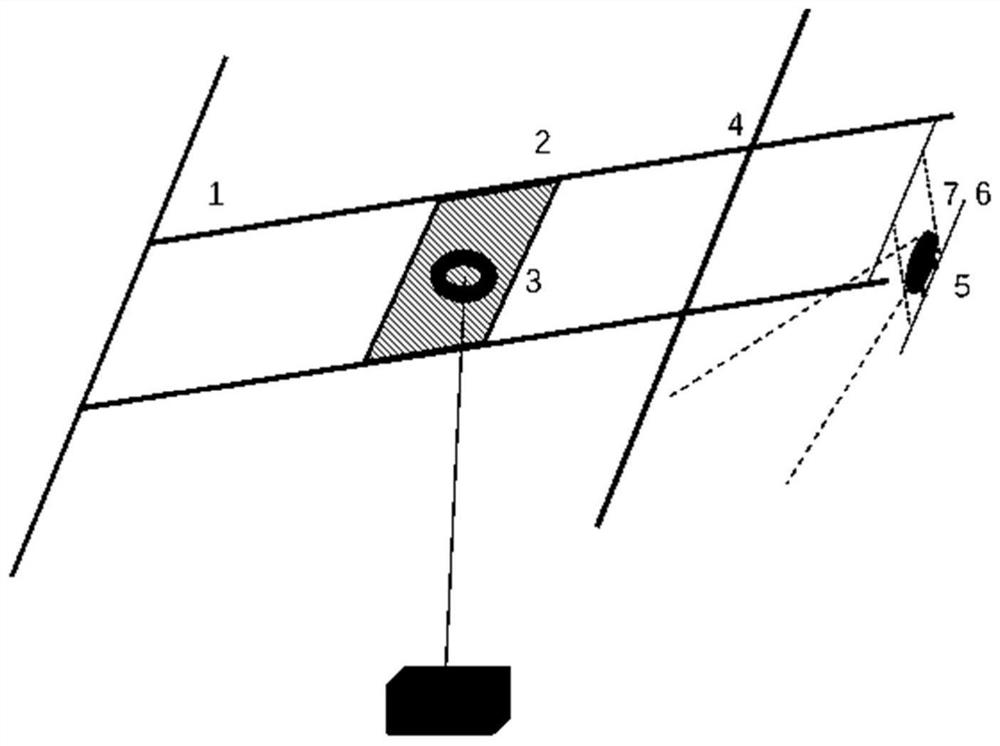

[0060] With the continuous development of computer vision technology, visual sensors have been used ever more widely in electromechanical systems. Binocular vision sensing offers high efficiency, adequate precision, a simple system structure, and low cost, and it is widely used for in-line, non-contact product inspection and quality control at manufacturing sites. Because image acquisition is completed in an instant, it is also an effective method for measuring moving objects (including animals and the human body). During operation of the bridge crane, the binocular vision system is used to detect moving objects and to reconstruct the hoisting space in three dimensions, and to calculate online the operating information, position information, and scale information of the load and obstacles to pre...
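The three-dimensional reconstruction step rests on stereo triangulation. This section does not state the formulas used, so the following is only a minimal sketch of the standard rectified-stereo model, in which depth is Z = f·B/d (focal length f in pixels, baseline B, disparity d). It assumes an already calibrated and rectified camera pair and an already matched pixel pair for each point of interest; `StereoRig`, `triangulate`, `clearance`, `too_close`, the safety margin, and all numbers are hypothetical illustrations, not the patented method.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibrated, rectified stereo rig (assumed parameters)."""
    focal_px: float    # focal length in pixels, assumed equal for both cameras
    baseline_m: float  # distance between the two optical centres, metres
    cx: float          # principal point x of the left camera, pixels
    cy: float          # principal point y of the left camera, pixels


def triangulate(rig, u_left, v_left, u_right):
    """Return (X, Y, Z) in metres in the left-camera frame.

    Assumes rectified images, so a matched point lies on the same row in
    both views and the disparity u_left - u_right is positive for points
    in front of the rig.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("non-positive disparity: bad match or point at infinity")
    z = rig.focal_px * rig.baseline_m / disparity  # Z = f * B / d
    x = (u_left - rig.cx) * z / rig.focal_px       # back-project pixel column
    y = (v_left - rig.cy) * z / rig.focal_px       # back-project pixel row
    return (x, y, z)


def clearance(p, q):
    """Euclidean distance between two reconstructed 3D points, metres."""
    return math.dist(p, q)


def too_close(load, obstacle, margin_m):
    """Hypothetical check: flag when clearance falls below a safety margin."""
    return clearance(load, obstacle) < margin_m


rig = StereoRig(focal_px=1000.0, baseline_m=0.5, cx=640.0, cy=360.0)
load = triangulate(rig, 740, 360, 640)      # disparity 100 px -> (0.5, 0.0, 5.0)
obstacle = triangulate(rig, 640, 460, 590)  # disparity 50 px -> (0.0, 1.0, 10.0)
print(round(clearance(load, obstacle), 3))  # about 5.123 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs far more depth accuracy for distant points than for near ones, which is one reason baseline and focal length matter when sizing a rig for a crane's working volume.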

