Indication expression understanding method based on multi-level expression attention-guiding network

A multi-level attention technique, applied in the field of indication expression understanding, that addresses two problems: the target region cannot be distinguished from other regions, and similar objects cannot be told apart.


AI Technical Summary

Problems solved by technology

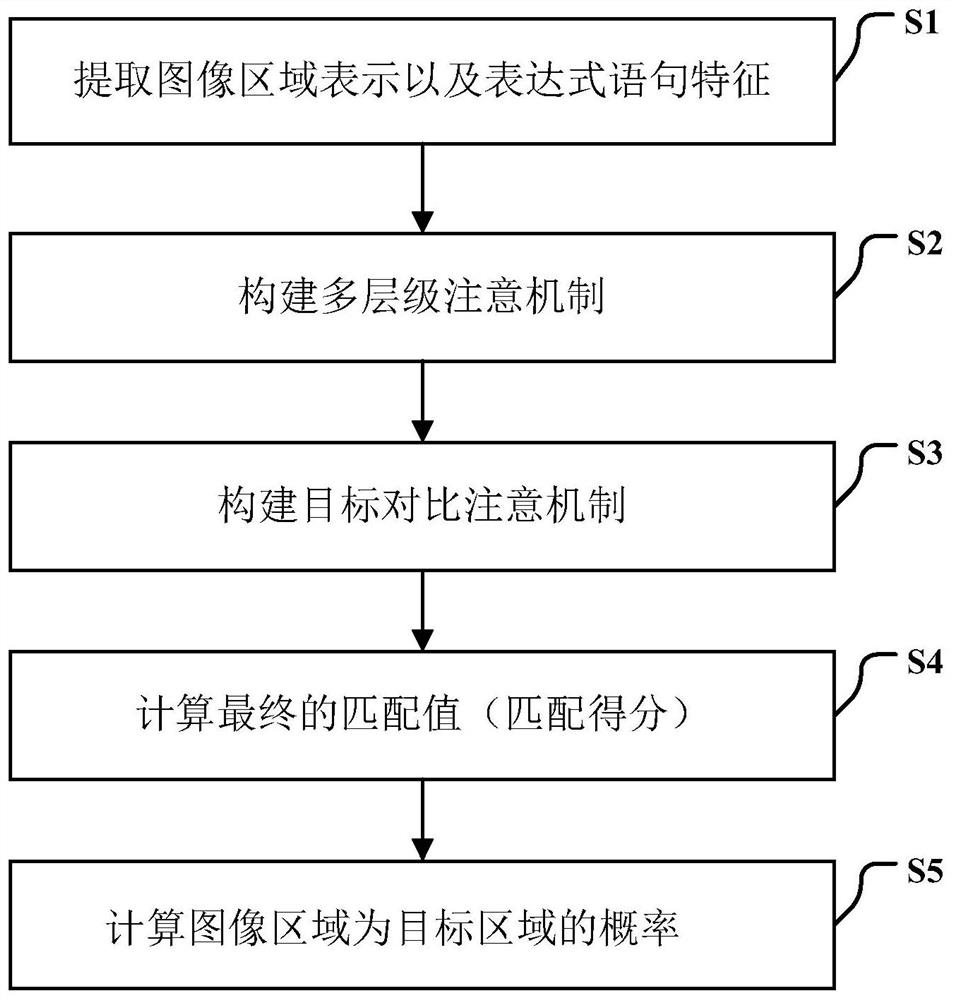

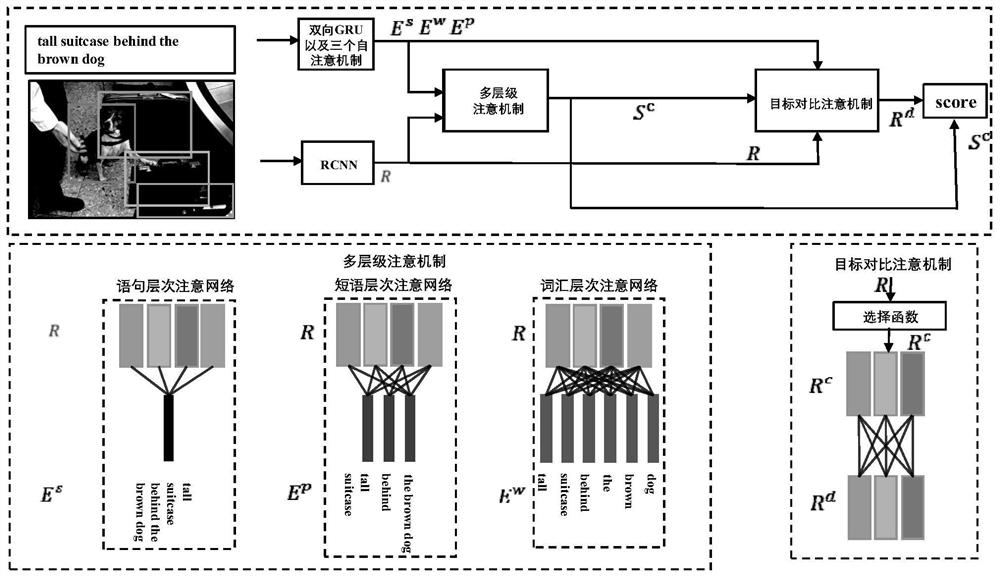


Examples


[0160] The present invention was evaluated on three large-scale benchmark datasets: RefCOCO, RefCOCO+, and RefCOCOg. The experimental results show that it outperforms the state-of-the-art methods, as shown in Table 1.

[0161]

[0162] Table 1

[0163] Table 1 shows that the present invention achieves the best performance on most of the subtasks: accuracy of 87.45% and 86.93% on the testA and testB sets of RefCOCO, respectively; 77.05% and 69.65% on the testA and testB sets of RefCOCO+, respectively; and 80.29% on the test set of RefCOCOg.
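The text above reports accuracy but does not state how it is computed. As a minimal sketch, assuming the convention commonly used on the RefCOCO family of benchmarks (a prediction counts as correct when the intersection-over-union between the predicted box and the ground-truth box exceeds 0.5), the metric could be computed as follows. The box format `(x1, y1, x2, y2)`, the function names `iou` and `accuracy`, and the threshold value are assumptions for illustration and are not taken from the patent.

```python
from typing import Sequence, Tuple

# Hypothetical box format: (x1, y1, x2, y2) corner coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = max(0.0, a[2] - a[0]) * max(0.0, a[3] - a[1])
    area_b = max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accuracy(
    predicted: Sequence[Box],
    ground_truth: Sequence[Box],
    threshold: float = 0.5,  # assumed threshold, not stated in the source
) -> float:
    """Fraction of expressions whose predicted box has IoU > threshold."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have equal length")
    if not predicted:
        return 0.0
    hits = sum(iou(p, g) > threshold for p, g in zip(predicted, ground_truth))
    return hits / len(predicted)
```

Under these assumptions, each reported figure (for example 87.45% on RefCOCO testA) would correspond to `accuracy(...) * 100` over all expressions in that split.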

