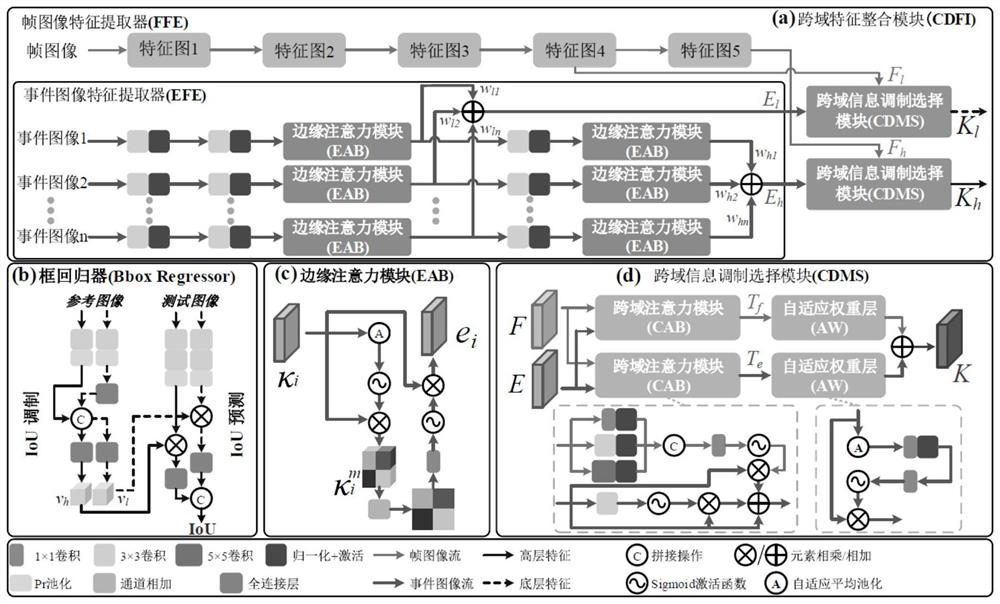

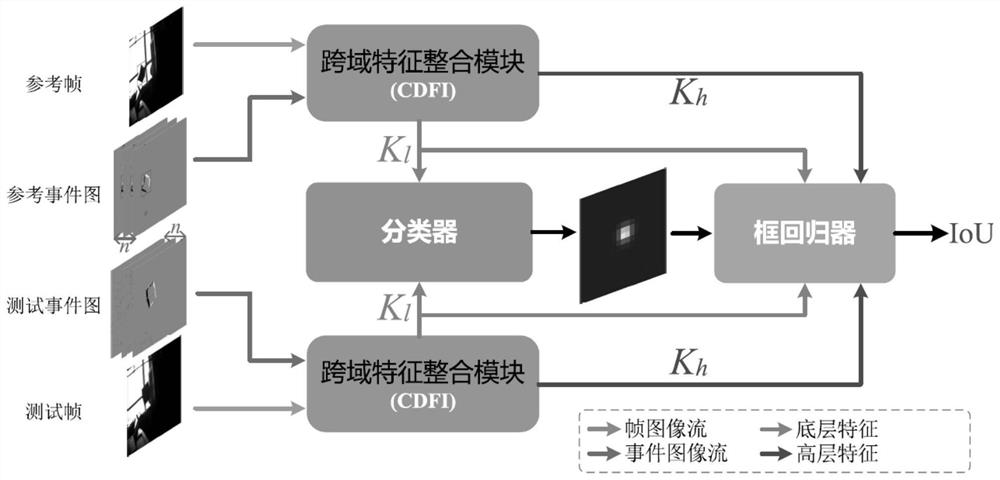

Moving target visual tracking method based on multi-source information fusion

A moving target tracking technology based on multi-source information fusion, applied in image analysis, instrumentation, computing, and related fields. It addresses problems such as the inability to represent discrete frame events.


Embodiment Construction

[0042] The present invention will be described in further detail below in conjunction with specific embodiments, but the present invention is not limited to specific embodiments.

[0043] The moving target visual tracking method fuses event-domain and frame-domain information features. It comprises producing the dataset and training and testing the network model.

[0044] (1) Production of training data set

[0045] To label the moving target in the grayscale frames and the event stream of the event camera, two steps are required: transforming between the camera coordinate systems, and transforming the coordinates of the target's positioning point.

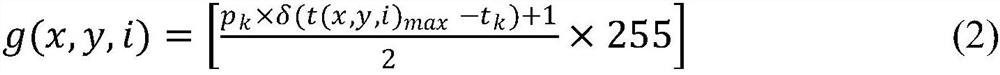

[0046] To transform between the coordinate systems of the event camera and the VICON system, we first determine the event camera matrix K and the distortion coefficients d using a calibration board. The rotation vector r and translation vector t of the DAVIS346 are then obtained by the following formula:

[0047] r,t=S(K,d...
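The source does not define S (it is plausibly a Perspective-n-Point solver, but the text is truncated) or spell out how r and t are used. The sketch below is therefore only illustrative. It assumes OpenCV-style conventions: r is a Rodrigues rotation vector mapping the VICON frame into the camera frame, t is the matching translation, K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]], and d = (k1, k2, p1, p2, k3). Under these assumptions, it projects a VICON-tracked target point into DAVIS346 pixel coordinates. The function names `rodrigues` and `project_point` are hypothetical.

```python
import math


def rodrigues(r):
    """Convert a rotation vector r (unit axis * angle in radians) to a 3x3 matrix."""
    theta = math.sqrt(sum(c * c for c in r))
    if theta < 1e-12:
        # Negligible rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in r)
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    # R = cos(theta) I + (1 - cos(theta)) k k^T + sin(theta) [k]_x
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def project_point(X_w, K, d, r, t):
    """Project a 3D point given in the VICON frame to (u, v) pixel coordinates.

    Assumes the pinhole model with OpenCV-style radial/tangential distortion,
    d = (k1, k2, p1, p2, k3).
    """
    R = rodrigues(r)
    # Rigid transform: VICON (world) frame -> camera frame.
    Xc = [sum(R[i][j] * X_w[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Normalized image coordinates.
    x, y = Xc[0] / Xc[2], Xc[1] / Xc[2]
    k1, k2, p1, p2, k3 = d
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    # Apply the camera matrix (zero skew assumed).
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    return fx * xd + cx, fy * yd + cy


if __name__ == "__main__":
    # Hypothetical intrinsics for a 346x260 sensor; not values from the source.
    K = [[300.0, 0.0, 173.0], [0.0, 300.0, 130.0], [0.0, 0.0, 1.0]]
    d = (0.0, 0.0, 0.0, 0.0, 0.0)
    print(project_point((0.1, 0.0, 1.0), K, d, (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)))
```

Projecting each VICON marker position this way would give per-frame pixel labels that can be aligned with both the grayscale frames and the event stream. Real calibration values for K, d, r, and t would come from the calibration step described above.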


© 2025 PatSnap. All rights reserved.