Unsupervised hyperspectral video target tracking method based on spatial-spectral feature fusion

A target tracking technology based on feature fusion, applied in the field of computer vision, that addresses the problem of having few training samples.


Examples

Embodiment 1

[0069] An embodiment of the present invention provides an unsupervised hyperspectral video target tracking method based on spatial-spectral feature fusion, comprising the following steps:

[0070] Step 1: video data preprocessing. This step further includes:

[0071] Step 1.1: convert the video data into a sequence of consecutive image frames X_i (RGB video frames or hyperspectral video frames).

[0072] Step 1.2: resize every unlabeled video frame X_i to 200×200 pixels to obtain the video frame Y_i.
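The preprocessing in Step 1.2 can be illustrated with a minimal sketch. It assumes frames are already decoded into nested lists of pixels (rows, then columns, then bands) and uses nearest-neighbour sampling; the source does not state the interpolation method, and the helper names `resize_nearest` and `preprocess` are hypothetical.

```python
def resize_nearest(frame, out_h=200, out_w=200):
    """Nearest-neighbour resize of a frame stored as rows of pixels.

    Each pixel may be a scalar or a per-band list, so the same code handles
    RGB (3 bands) and hyperspectral (many bands) frames.
    Nearest-neighbour sampling is an assumption, not taken from the source.
    """
    in_h, in_w = len(frame), len(frame[0])
    # Map every output row/column back to a source row/column index.
    rows = [min(in_h - 1, r * in_h // out_h) for r in range(out_h)]
    cols = [min(in_w - 1, c * in_w // out_w) for c in range(out_w)]
    return [[frame[r][c] for c in cols] for r in rows]


def preprocess(frames, size=200):
    """Step 1.2: map every unlabeled frame X_i to a size x size frame Y_i."""
    return [resize_nearest(x, size, size) for x in frames]


# Two synthetic 4-band frames of 120 x 160 pixels stand in for decoded video.
video = [[[[i, r, c, 0] for c in range(160)] for r in range(120)] for i in range(2)]
resized = preprocess(video)
print(len(resized), len(resized[0]), len(resized[0][0]), len(resized[0][0][0]))
```

In practice an image library's resize routine with bilinear or bicubic interpolation would likely replace `resize_nearest`; the sketch only shows that the spatial size changes while the number of bands is preserved.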

[0073] Step 2: randomly initialize the bounding box (BBOX). This step further includes:

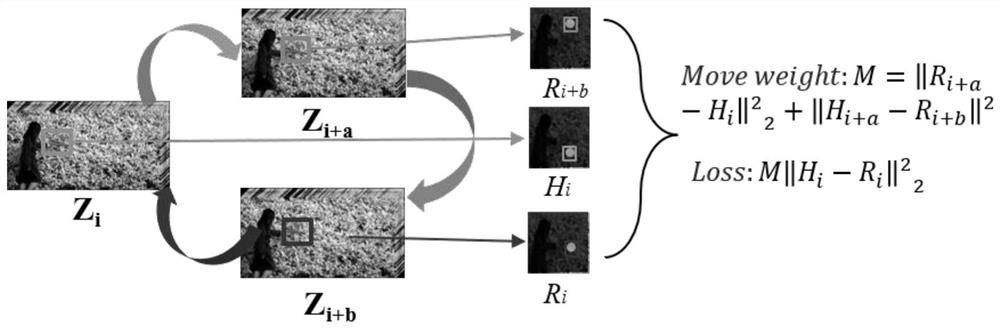

[0074] On the basis of Step 1, randomly select a 90×90 pixel region in the unlabeled video frame Y_i (the 90×90 pixel region centered on the coordinates [x, y]) as the target to be tracked; this region is the initialized BBOX. Resize the 90×90 region to 125×125 pixels to obtain Z_i. At the same time, from the 10 frames Y_{i+1} to Y_{i+10}, randomly select two frames Y_{i+a} and Y_{i+b} (1...
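The random initialization described in this step can be sketched as follows. This is an illustration under stated assumptions, not the patented implementation: the box is kept fully inside the 200×200 frame, the resize uses nearest-neighbour sampling, and the offsets are drawn as two distinct values with 1 ≤ a < b ≤ 10 (the source text is cut off before the exact constraint on a and b). All function names are hypothetical.

```python
import random

FRAME, BOX, TEMPLATE = 200, 90, 125  # sizes quoted in Step 1.2 and Step 2


def random_bbox(rng, frame=FRAME, box=BOX):
    """Pick a box centre [x, y]; keeping the box inside the frame is an assumption."""
    half = box // 2
    x = rng.randint(half, frame - half)  # randint bounds are inclusive
    y = rng.randint(half, frame - half)
    return x, y, x - half, y - half  # centre, then top-left corner (left, top)


def crop_and_resize(frame, left, top, box=BOX, out=TEMPLATE):
    """Crop the box and nearest-neighbour resize it to out x out, giving Z_i."""
    idx = [i * box // out for i in range(out)]  # source offsets inside the box
    return [[frame[top + r][left + c] for c in idx] for r in idx]


def pick_offsets(rng, window=10):
    """Two distinct offsets in 1..window; the ordering a < b is an assumption."""
    a, b = sorted(rng.sample(range(1, window + 1), 2))
    return a, b


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
y_i = [[(r, c) for c in range(FRAME)] for r in range(FRAME)]  # synthetic Y_i
x, y, left, top = random_bbox(rng)
z_i = crop_and_resize(y_i, left, top)
a, b = pick_offsets(rng)
print((x, y), len(z_i), len(z_i[0]), (a, b))
```

Each synthetic pixel stores its own (row, column) position, so inspecting `z_i[0][0]` and `z_i[-1][-1]` shows which corner pixels of Y_i ended up in the 125×125 template.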
