Behavior recognition method based on feature mapping and multilayer time interactive attention

A behavior recognition technique based on feature mapping, applied in the fields of computer vision and video processing. It addresses problems such as weak behavior recognition ability, insufficient modeling of temporal dynamic information, and neglect of the interdependence between different frames, and thereby improves recognition accuracy.

Embodiment Construction

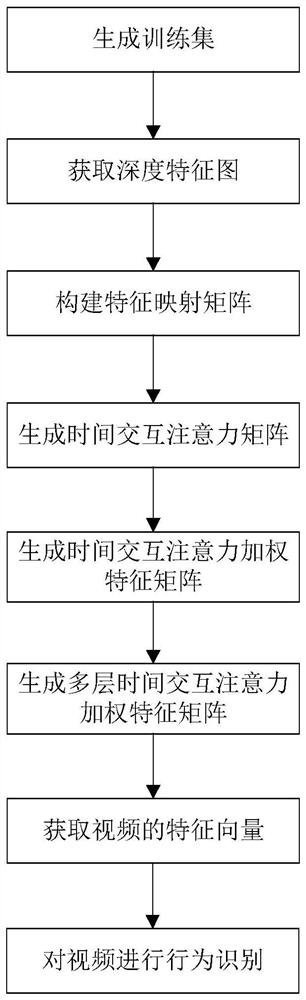

[0034] The specific steps of the present invention are described further below with reference to Figure 1.

[0035] Step 1. Generate a training set.

[0036] The sample set consists of RGB videos from a video dataset covering N behavior categories, where N > 50; each category contains at least 100 videos, and each video is labeled with a single behavior category. Each video in the sample set is preprocessed to obtain its RGB frames, and the preprocessed RGB frames of all videos form the training set. Here, preprocessing means sampling 60 RGB frames at equal intervals from each video in the sample set, scaling each frame to 256×340, and then cropping it to 224×224, so that each video yields 60 RGB frames of size 224×224.
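
The preprocessing in [0036] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes 256×340 means height×width, uses a center crop (the text does not say where the 224×224 crop is taken), and substitutes a simple nearest-neighbour resize for whatever image library the original pipeline used. All function names are hypothetical.

```python
import numpy as np

NUM_FRAMES = 60          # frames sampled per video (from [0036])
RESIZE_HW = (256, 340)   # assumed (height, width) after scaling
CROP = 224               # side length of the square crop


def sample_indices(total_frames: int, num_samples: int = NUM_FRAMES) -> np.ndarray:
    """Equal-interval frame indices spanning the whole video (indices repeat if the video is short)."""
    return np.linspace(0, total_frames - 1, num_samples).round().astype(int)


def resize_nearest(frame: np.ndarray, out_hw: tuple) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) frame; a stand-in for a real image library."""
    in_h, in_w = frame.shape[:2]
    out_h, out_w = out_hw
    rows = np.arange(out_h) * in_h // out_h   # source row for each output row
    cols = np.arange(out_w) * in_w // out_w   # source column for each output column
    return frame[rows][:, cols]


def center_crop(frame: np.ndarray, size: int = CROP) -> np.ndarray:
    """Assumed center crop of a size x size window."""
    h, w = frame.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return frame[top:top + size, left:left + size]


def preprocess(video: np.ndarray) -> np.ndarray:
    """video: (T, H, W, 3) array -> (60, 224, 224, 3) array of sampled, resized, cropped frames."""
    idx = sample_indices(video.shape[0])
    return np.stack([center_crop(resize_nearest(video[i], RESIZE_HW)) for i in idx])


video = np.zeros((300, 240, 320, 3), dtype=np.uint8)  # dummy 300-frame video
clip = preprocess(video)
print(clip.shape)  # (60, 224, 224, 3)
```

With a 256×340 frame, the assumed center crop starts at row 16 and column 58; a random crop would be an equally plausible reading of the text for training.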

[0037] Step 2. Obtain the deep feature maps.

[0038] Input each RGB frame of each video in the training set into the Inception-v2 network in turn, and output the size of each frame image in each vide...
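
Step 2 applies the backbone to the frames one at a time. The sketch below shows only that per-frame loop and the stacking of the results into one feature tensor per video. `placeholder_backbone` is a hypothetical stand-in that average-pools non-overlapping 32×32 patches; it is not Inception-v2, so its output shape (7×7×3 for a 224×224×3 frame) does not reflect the real network's feature map size.

```python
import numpy as np


def placeholder_backbone(frame: np.ndarray, stride: int = 32) -> np.ndarray:
    """Hypothetical stand-in for Inception-v2: average-pools non-overlapping
    stride x stride patches of an (H, W, C) frame. Not the real network."""
    h, w, c = frame.shape
    hh, ww = h // stride, w // stride
    patches = frame[:hh * stride, :ww * stride].reshape(hh, stride, ww, stride, c)
    return patches.mean(axis=(1, 3))


def video_feature_maps(clip: np.ndarray, backbone=placeholder_backbone) -> np.ndarray:
    """Feed each frame of a (T, H, W, C) clip through the backbone in turn and
    stack the per-frame feature maps into a (T, h, w, c) tensor."""
    return np.stack([backbone(frame) for frame in clip])


clip = np.random.rand(60, 224, 224, 3).astype(np.float32)  # one preprocessed video
feats = video_feature_maps(clip)
print(feats.shape)  # (60, 7, 7, 3)
```

In a real pipeline the placeholder would be replaced by a pretrained Inception-v2 model; the per-video (T, h, w, c) layout is what later temporal modeling would consume.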