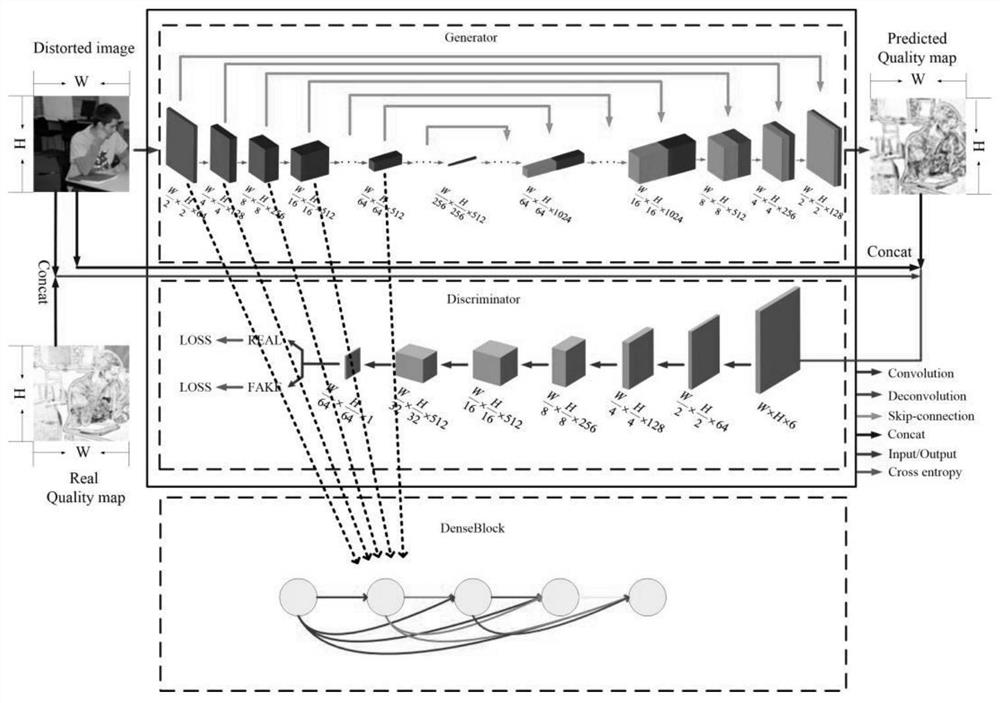

No-reference image quality evaluation method based on convolutional neural network

A no-reference image quality evaluation method based on a convolutional neural network, belonging to the application of generative adversarial networks to image quality evaluation. It addresses the poor performance of existing no-reference evaluation methods and achieves fast training and good experimental results.


Embodiment Construction

[0019] The present invention will be further described below.

[0020] The specific implementation steps of the no-reference image quality evaluation method based on a convolutional neural network are as follows:

[0021] Step 1: Preprocess the distorted image and the natural image to obtain a similarity map;

[0022] 1-1. Calculate the brightness comparison:

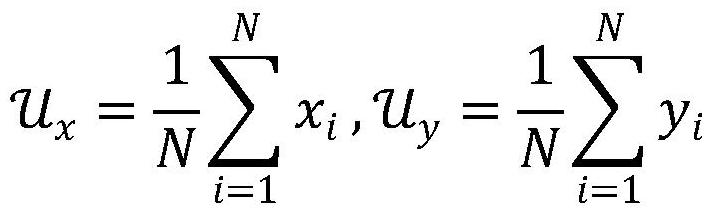

[0023] For distorted map: X, natural map: Y, use Indicates the brightness information of the distortion map X, Represents the brightness information of the natural graph Y:

[0024]

[0025] where x i ,y i is the pixel value of the image.

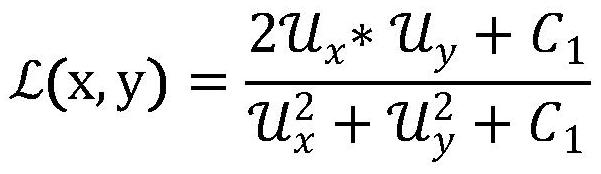

[0026] Then the brightness contrast between the distorted image X and the natural image Y can be expressed as:

[0027]

[0028] where C 1 It is an extremely small number set to prevent the denominator from being 0.
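The brightness comparison in step 1-1 can be illustrated with a minimal Python sketch. It assumes the usual SSIM-style form, (2·mean_x·mean_y + C1) / (mean_x² + mean_y² + C1), computed once over the whole image. The function name `luminance_comparison`, the flat pixel lists, and the default `c1=1e-4` are illustrative assumptions and are not taken from the patent text, which may instead compute the measure over local windows to build a map.

```python
from statistics import fmean


def luminance_comparison(x, y, c1=1e-4):
    """Global brightness comparison between two equally sized images.

    x, y: flat sequences of pixel values (distorted and natural image).
    c1:   small stabilising constant; the value 1e-4 is an assumption.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and of equal size")
    mu_x = fmean(x)  # mean brightness of the distorted image X
    mu_y = fmean(y)  # mean brightness of the natural image Y
    # c1 keeps the denominator away from zero for all-black images
    return (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)


if __name__ == "__main__":
    natural = [0.2, 0.4, 0.6, 0.8]
    darker = [0.1, 0.2, 0.3, 0.4]  # hypothetical distorted version
    print(luminance_comparison(natural, natural))
    print(luminance_comparison(darker, natural))
```

Under these assumptions the value approaches 1 when the two images have the same mean brightness and falls toward 0 as the means diverge.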

[0029] 1-2. Calculate the contrast comparison $C(x,y)$:

[0030] Let $\sigma_x$ denote the contrast information of the distorted image X; $\sigma_y$ represents the contrast inf...
