Rapid and automatic table extraction method based on deep neural network

An automatic table extraction technique based on a deep neural network, applied in the field of neural network applications. It addresses problems such as mis-extraction related to image contrast, inconsistent text spacing, and slow processing speed, and achieves good extraction quality, a high degree of automation, and high efficiency.

Embodiment Construction

[0075] Hereinafter, preferred embodiments of the present invention will be described in detail with reference to the accompanying drawings. It should be understood that the preferred embodiments are only for illustrating the present invention, but not for limiting the protection scope of the present invention.

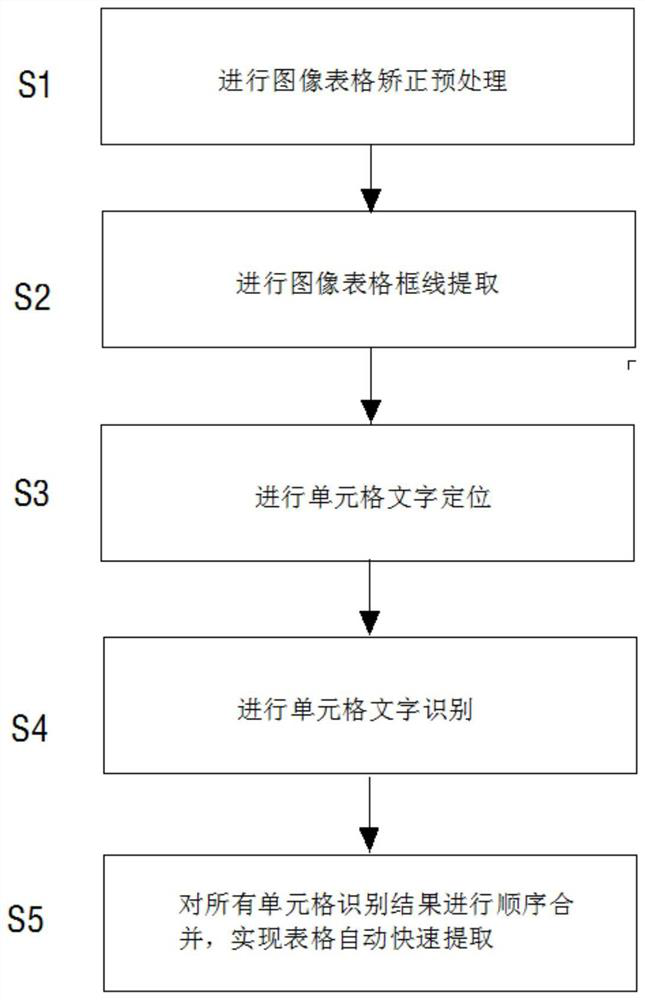

[0076] As shown in Figure 1, the automatic table extraction method based on a deep neural network according to the present invention comprises the following steps:

[0077] Step S1: perform correction preprocessing on the table image. This includes establishing an image correction preprocessing model and then automatically correcting the input table image, which comprises brightness correction and geometric correction of the table image.

[0078] (1) Brightness correction of the table image

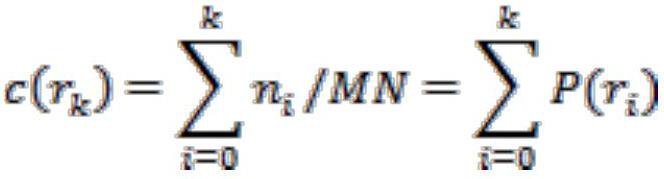

[0079] The original image is affected by the lighting conditions at capture time, the camera sensor, or other factors, so the brightness of the image will be inconsistent and the differe...
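The text available here is cut off before it states the correction model, so the following is only a minimal sketch of one common way to even out inconsistent brightness, not the patent's own method. It assumes a grayscale image stored as a list of rows of 0-255 values, estimates the local illumination with a box mean, and divides each pixel by that estimate. The names `box_mean` and `correct_brightness` and the parameters `radius` and `target` are hypothetical and chosen for illustration.

```python
def box_mean(img, radius):
    """Mean of each pixel's (2*radius+1)^2 neighbourhood, clipped at the borders."""
    h, w = len(img), len(img[0])
    # Summed-area table so that every window sum costs O(1).
    sat = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            sat[y + 1][x + 1] = sat[y][x + 1] + row_sum
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - radius), min(h, y + radius + 1)
        for x in range(w):
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            total = sat[y1][x1] - sat[y0][x1] - sat[y1][x0] + sat[y0][x0]
            out[y][x] = total / ((y1 - y0) * (x1 - x0))
    return out


def correct_brightness(img, radius=8, target=200.0):
    """Divide each pixel by its local illumination estimate, rescaled to `target`.

    Assumption: the background (paper) dominates each window, so the local
    mean approximates the illumination at that position.
    """
    illum = box_mean(img, radius)
    corrected = []
    for row, illum_row in zip(img, illum):
        corrected.append([
            min(255, max(0, round(target * p / max(i, 1e-6))))
            for p, i in zip(row, illum_row)
        ])
    return corrected


if __name__ == "__main__":
    # Synthetic page whose brightness rises from left to right.
    gradient = [[100 + 5 * x for x in range(20)] for _ in range(10)]
    fixed = correct_brightness(gradient, radius=3)
    print(max(map(max, gradient)) - min(map(min, gradient)))  # spread before
    print(max(map(max, fixed)) - min(map(min, fixed)))        # spread after
```

In a sketch like this, `radius` would normally be chosen larger than the stroke width of text and ruling lines, so that dark strokes do not dominate the illumination estimate; near the image borders the window is clipped, which leaves a small residual brightness difference.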
