Multi-modal image-text recommendation method and device based on deep learning

The technology combines deep learning with recommendation methods and is applied in the field of computer science and technology applications. It addresses problems such as insufficient retrieval accuracy and long processing time, and achieves improved quality, efficiency, and accuracy together with a fast training speed.

Embodiment Construction

[0062] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and examples.

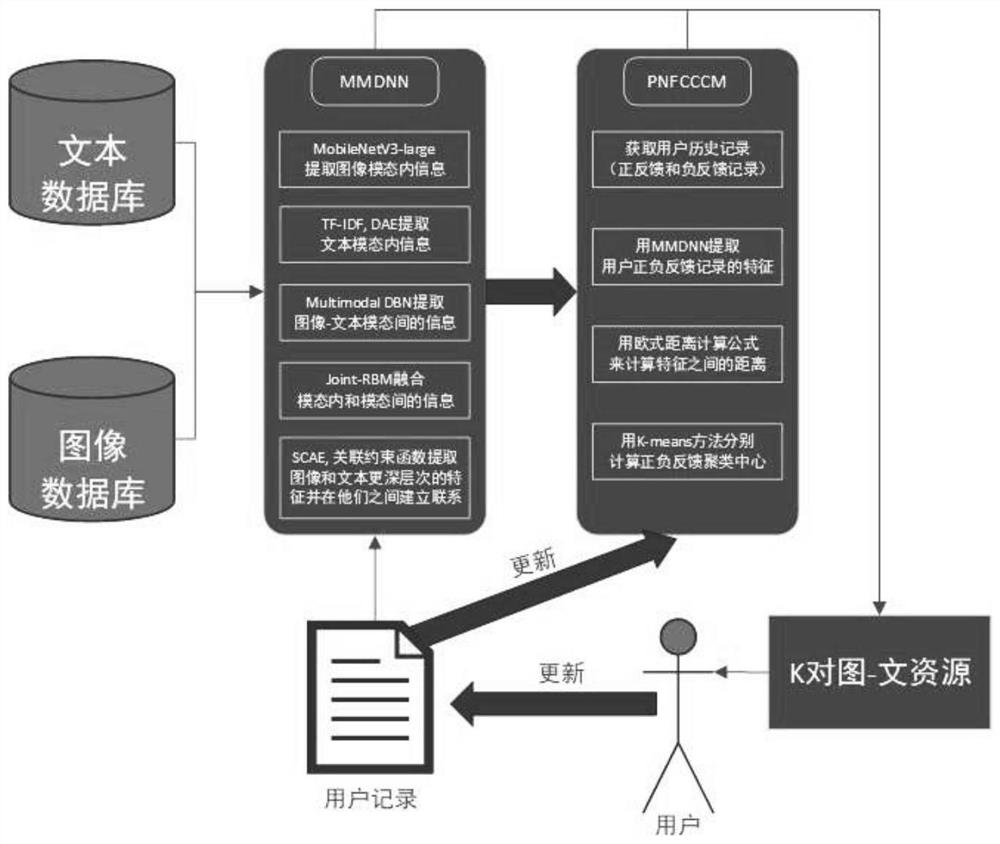

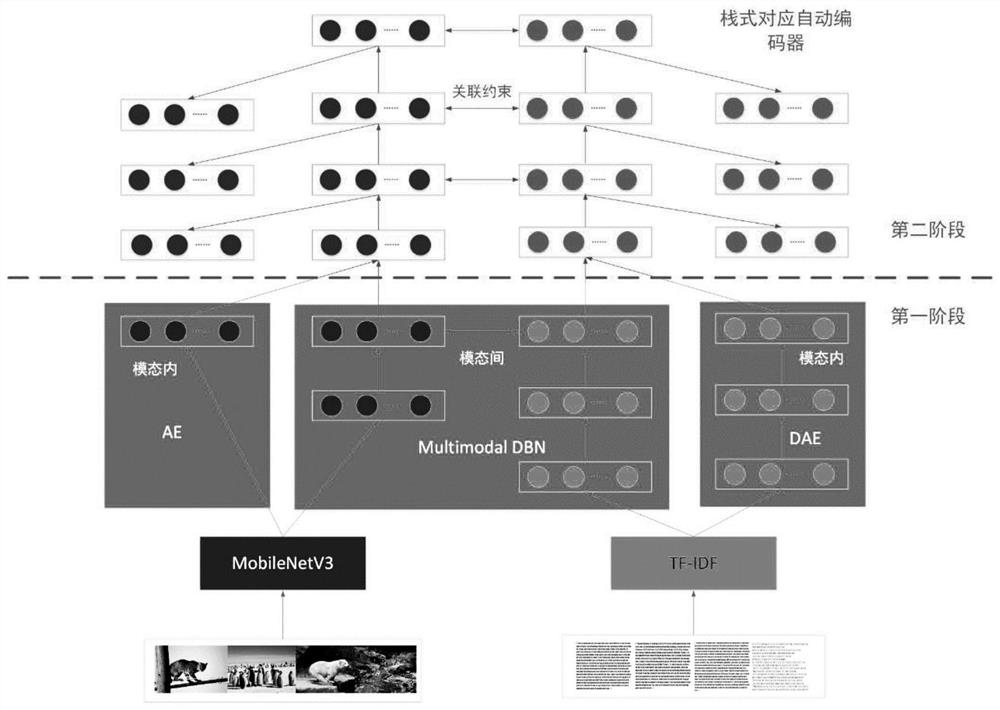

[0063] The method applies a cross-modal image-text retrieval model, MMDNN (Multimodal Deep Neural Network), to a recommendation system. The Positive and Negative Feedback Cluster Center Calculation Module (PNFCCCM) uses the user's positive and negative feedback history to calculate the user's positive and negative feedback cluster centers. By combining the data similarity score with the positive and negative feedback scores, the method retrieves from the database the data with the highest comprehensive score in the user's historical records. The MMDNN model is then used to find, in the database, the data of the other modality that corresponds to these items. Finally, the paired image-text resources are recommended to the user, and the user's history and the user's positiv...
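The flow described in [0063] can be illustrated with a minimal sketch. It is not the patented implementation: the excerpt gives no formulas, so the sketch assumes one centroid per feedback type, cosine similarity as the scoring function, a hypothetical weighting parameter `alpha` for the comprehensive score, and a nearest-neighbor lookup in a shared embedding space standing in for the MMDNN model. All function and parameter names are illustrative.

```python
import math


def mean_vector(vectors):
    """Centroid of a non-empty list of equal-length vectors.

    Assumption: one cluster center per feedback type; the source
    may use several centers per type.
    """
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def comprehensive_score(item, history, pos_center, neg_center, alpha=0.5):
    """Blend a similarity score with a feedback score.

    Assumptions: similarity is the best cosine match against the
    user's history; the feedback score rewards closeness to the
    positive center and penalizes closeness to the negative one;
    alpha is a hypothetical mixing weight.
    """
    similarity = max(cosine(item, h) for h in history)
    feedback = cosine(item, pos_center) - cosine(item, neg_center)
    return alpha * similarity + (1 - alpha) * feedback


def cross_modal_match(query, other_modality):
    """Stand-in for MMDNN: nearest item of the other modality,
    assuming both modalities share one embedding space."""
    return max(other_modality, key=lambda key: cosine(query, other_modality[key]))


def recommend(candidates, other_modality, history, positives, negatives, k=1):
    """Return the top-k (candidate id, paired other-modality id) tuples."""
    pos_center = mean_vector(positives)
    neg_center = mean_vector(negatives)
    ranked = sorted(
        candidates,
        key=lambda cid: comprehensive_score(
            candidates[cid], history, pos_center, neg_center
        ),
        reverse=True,
    )
    return [(cid, cross_modal_match(candidates[cid], other_modality)) for cid in ranked[:k]]
```

Here `candidates` and `other_modality` are dictionaries mapping item identifiers to embedding vectors, for example image embeddings and text embeddings respectively. In a real system the embeddings would come from the trained retrieval model, and the cluster centers would more likely be computed with a clustering algorithm than with a single mean.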
