Video emotion classification method and system fusing electroencephalogram and stimulation source information

An emotion classification technology that fuses EEG with stimulus source information, applied in neural learning methods, sensors, character and pattern recognition, etc. It addresses the problems that existing approaches do not pay attention to the video information of the stimulus source and produce unsatisfactory classification results. Its effects are improved efficiency, suppression of useless information, and improved generalization ability.


Embodiment

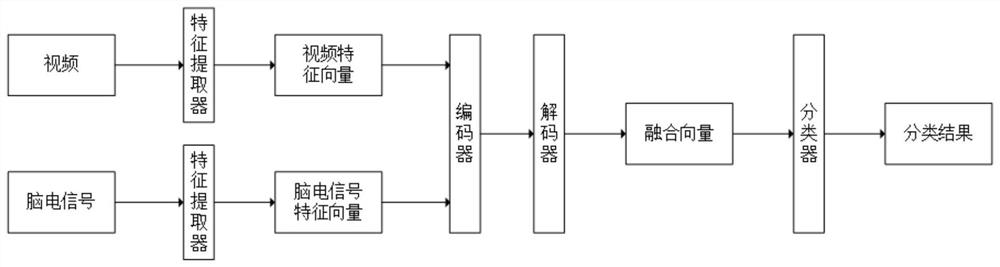

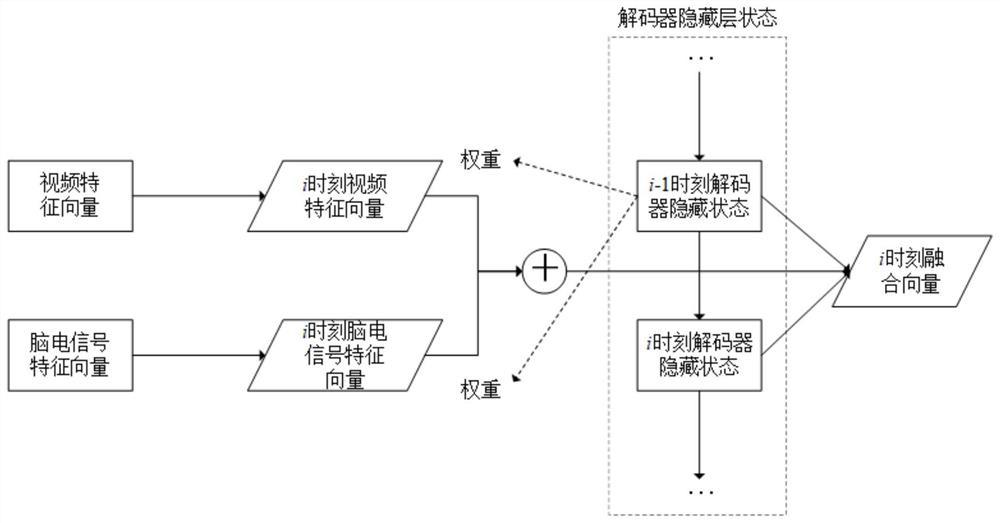

[0066] The video emotion classification process that fuses EEG and stimulus source information is illustrated below, taking the "Global Epidemic Event" video on Twitter as an example.

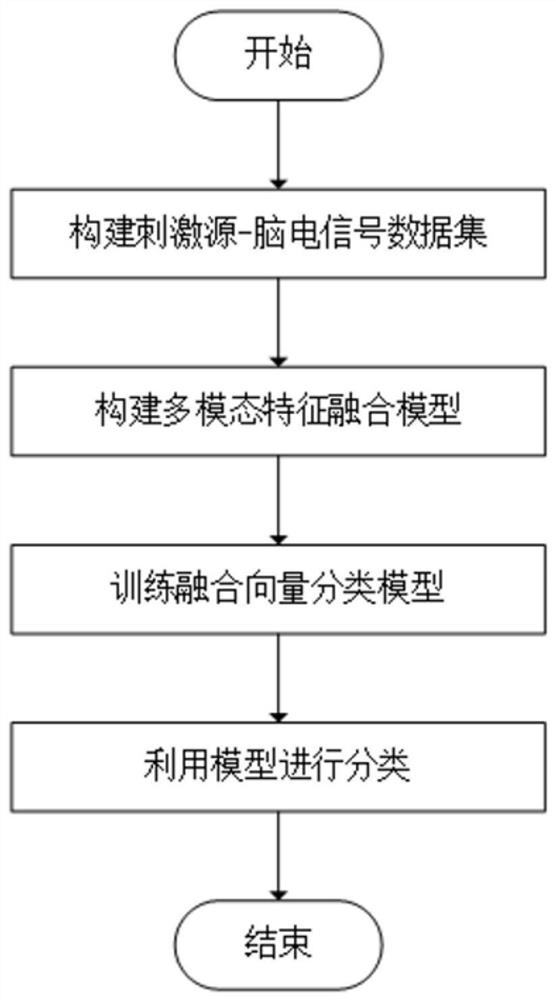

[0067] (1) Construct stimulus source-EEG signal dataset

[0068] Video clips were collected from the Internet, including clips with positive, negative, and neutral sentiment, in equal numbers. Positive clips are taken from comedy movies, negative clips from tragic movies, and neutral clips from documentaries. Each clip is 3-5 minutes long. The subject wears a 62-channel EEG scanner. Once the signal is stable, the subject watches the stimulus source videos while a staff member beside the subject plays the videos and records the EEG signal. The videos are played in random order so that the same subject can watch them continuously, with a 15 s interval between videos during which the subject rests and calms down. The collect...
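The playback protocol described in this paragraph can be sketched in Python as follows. This is only an illustrative sketch, not the patent's implementation: the `Clip` type, the `build_schedule` function, and the clip names are hypothetical. Only the three sentiment labels, the equal class counts, the 3-5 minute clip length, the random order, and the 15 s rest interval come from the text. The sketch assumes rest periods occur only between consecutive videos.

```python
import random
from collections import Counter
from dataclasses import dataclass

REST_SECONDS = 15                              # rest interval between videos (from the text)
LABELS = ("positive", "negative", "neutral")   # sentiment classes (from the text)


@dataclass(frozen=True)
class Clip:
    name: str        # hypothetical identifier for the clip
    label: str       # one of LABELS
    duration_s: int  # clip length in seconds, expected 180-300 (3-5 minutes)


def build_schedule(clips, seed=None):
    """Return a list of (start_time_s, event, clip) tuples in random play order."""
    counts = Counter(c.label for c in clips)
    # The dataset must contain all three classes in equal numbers.
    if set(counts) != set(LABELS) or len(set(counts.values())) != 1:
        raise ValueError("clips must cover all three labels in equal numbers")
    for c in clips:
        if not 180 <= c.duration_s <= 300:
            raise ValueError(f"{c.name}: duration must be 3-5 minutes")

    order = list(clips)
    random.Random(seed).shuffle(order)  # random playback order

    schedule, t = [], 0
    for i, clip in enumerate(order):
        schedule.append((t, "play", clip))
        t += clip.duration_s
        if i < len(order) - 1:          # assumed: rest only between videos
            schedule.append((t, "rest", None))
            t += REST_SECONDS
    return schedule
```

A staff member (or a script driving the player) could walk through the returned list in order, starting EEG recording at each `"play"` event and pausing during each `"rest"` event.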
