Video-paragraph retrieval method and system based on local-whole graph reasoning network

A technology involving local images and video clips, applied in the field of cross-modal retrieval. It addresses the performance degradation that occurs when long sequences are encoded directly, and it achieves a strong overall effect by comprehensively exploiting interaction information.


Detailed Description of Embodiments

[0030] The present invention will be further described below in conjunction with the accompanying drawings.

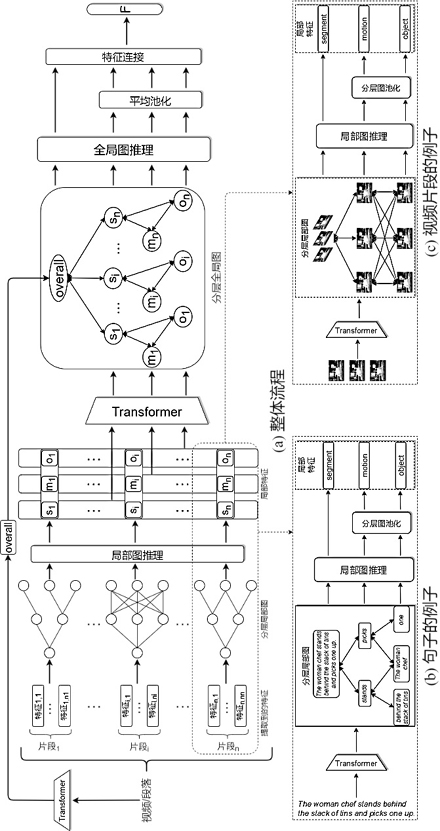

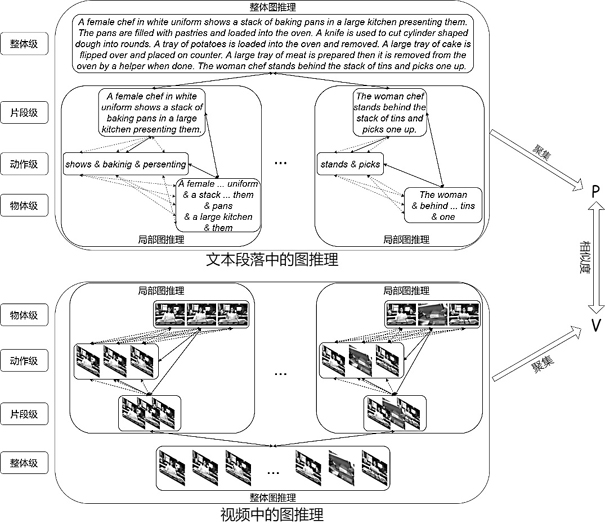

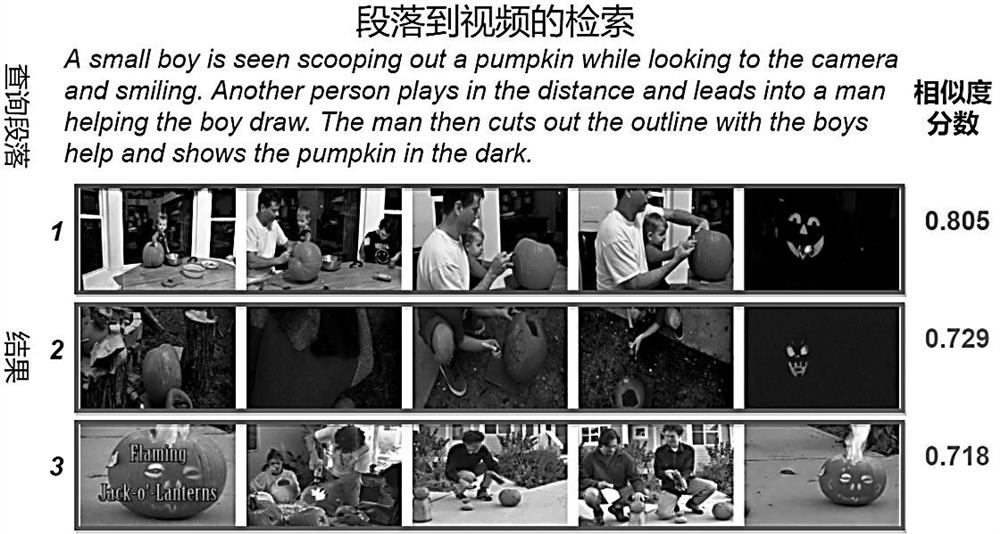

[0031] The present invention consists of four main parts. First, the video and the text (paragraph) are preprocessed. Second, the given video and text are each encoded with a local-whole graph reasoning network to obtain the final video features and text features. Third, the similarity between the video features and the text features is computed using cosine similarity. Finally, retrieval is performed according to the similarity results. Within the local-whole graph reasoning network, the present invention decomposes both video and text into a four-level semantic structure, constructs a local graph and a whole graph for each, and then applies a graph convolutional network to perform graph reasoning.
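The graph reasoning step in [0031] relies on a graph convolutional network, but the formulation used by the invention is not given here. The sketch below is therefore an assumption. It uses the common symmetric-normalized propagation rule, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), applied to a toy graph.

The names `normalize_adjacency`, `gcn_layer` and `mean_pool` are hypothetical. The mean-pool readout, which turns a local graph's node features into a single vector, is also an assumption for illustration. It is not the patent's stated method for linking the local and whole graphs.

```python
import math


def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a square adjacency matrix (list of lists)."""
    n = len(adj)
    # Add self-loops so each node keeps its own feature during propagation.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    inv_sqrt = [1.0 / math.sqrt(d) for d in deg]
    return [[inv_sqrt[i] * a_hat[i][j] * inv_sqrt[j] for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Plain-Python matrix product of x (m x k) and y (k x p)."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def gcn_layer(adj, features, weight):
    """One graph-convolution step: ReLU(A_norm @ H @ W) (assumed GCN variant)."""
    propagated = matmul(normalize_adjacency(adj), features)  # aggregate neighbours
    transformed = matmul(propagated, weight)                 # linear projection
    return [[max(0.0, v) for v in row] for row in transformed]


def mean_pool(features):
    """Hypothetical readout: average node features into one graph-level vector."""
    n = len(features)
    return [sum(row[j] for row in features) / n for j in range(len(features[0]))]


# Toy graph (hypothetical): node 0 is linked to nodes 1 and 2.
adj = [[0, 1, 1], [1, 0, 0], [1, 0, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
node_out = gcn_layer(adj, features, identity)
graph_vec = mean_pool(node_out)
```

Stacking several `gcn_layer` calls lets information travel more than one hop. In this sketch, each node's update mixes its own feature with those of its direct neighbours, weighted by the normalized degrees.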

[0032] A schematic diagram of the video-paragraph retrieval process of the present invention is shown in Figure 1. It mainly includes t...
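The last two parts of the process in [0031] compute cosine similarity between the encoded features and then retrieve by similarity. The sketch below assumes the video and text encoders have already produced fixed-length feature vectors, and it ranks the candidates by cosine similarity to a query.

The function names and the toy vectors are hypothetical. The same ranking works in either direction: a text query against video candidates, or a video query against text candidates.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # guard: a zero vector has no direction
    return dot / (norm_u * norm_v)


def retrieve(query, candidates, top_k=None):
    """Rank candidate features by cosine similarity to the query, highest first.

    Returns a list of (candidate_index, similarity) pairs.
    """
    scored = [(idx, cosine_similarity(query, cand)) for idx, cand in enumerate(candidates)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored if top_k is None else scored[:top_k]


# Hypothetical features: one paragraph query and three video candidates.
text_feature = [1.0, 0.0]
video_features = [[0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]
ranking = retrieve(text_feature, video_features)
best = retrieve(text_feature, video_features, top_k=1)
```

Cosine similarity ignores vector magnitude. As a result, the third video ranks first even though its feature is twice as long as the query's.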
