Visual relation detection method and device based on scene graph high-order semantic structure

A visual relation detection method based on scene graph technology, applied in the field of image processing. It addresses problems such as the difficulty of obtaining triplet labels of the correct type and quantity, and it achieves optimized computational complexity, simple and direct position encoding, and reduced hardware requirements.

Embodiment Construction

[0036] To give a clearer understanding of the technical features, purposes, and effects of the present invention, specific implementations of the invention are now described with reference to the accompanying drawings.

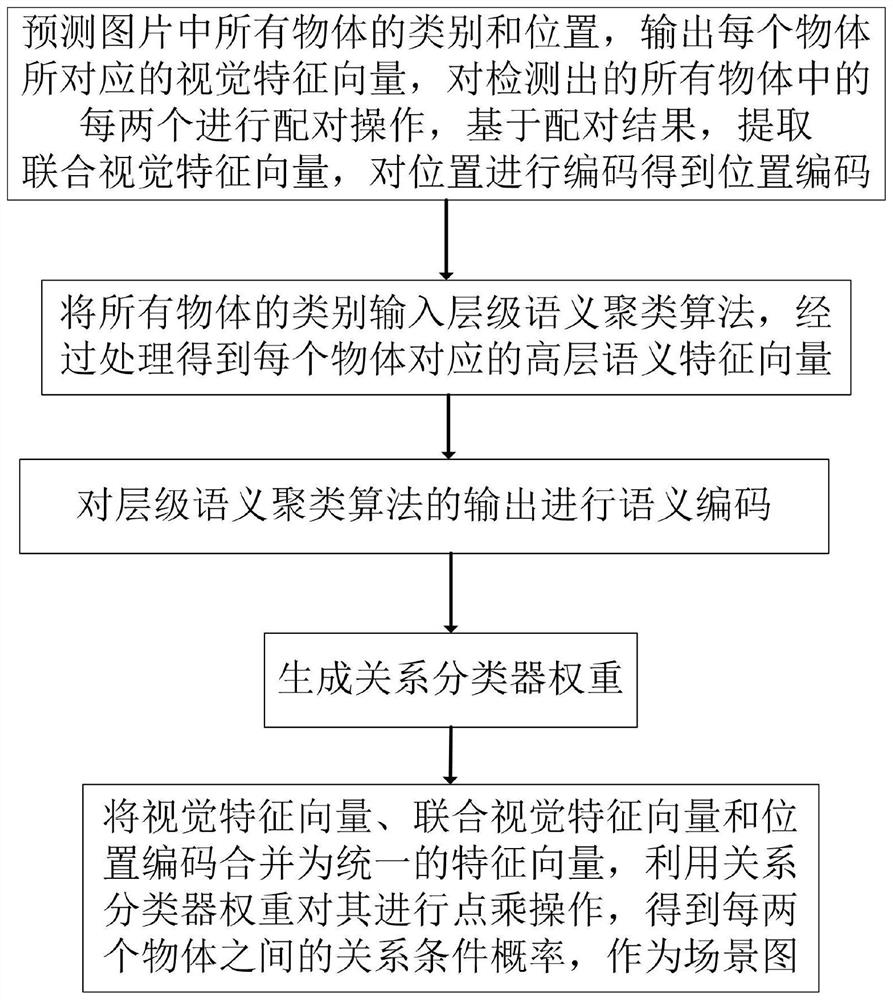

[0037] The visual relationship detection method based on the high-order semantics of the scene graph, as proposed by this embodiment of the present invention, specifically includes:

[0038] S1, visual feature extraction: the category and position of every object in the picture are predicted by a convolutional neural network (CNN) and a regional convolutional neural network (RCNN). The category of an object is a number, generally obtained by sequentially encoding the objects that may appear in the input data. The position of an object is a box determined by two points, namely the upper left corner and the lower right corner of the box, and each point includes an abscissa value and an ordinate value. At the same time, it also ...
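
The data representation described in S1 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the names `Box`, `Detection`, `build_category_index`, and `make_detection` are hypothetical, the detector itself (CNN/RCNN) is left out, and the sketch assumes image coordinates with the origin at the top left, so the upper left corner has the smaller abscissa and ordinate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Object position as a box fixed by two corner points (hypothetical type)."""
    x1: float  # abscissa of the upper left corner
    y1: float  # ordinate of the upper left corner
    x2: float  # abscissa of the lower right corner
    y2: float  # ordinate of the lower right corner

    def __post_init__(self):
        # Assumption: origin at the top left, so the lower right corner
        # never has smaller coordinates than the upper left corner.
        if self.x2 < self.x1 or self.y2 < self.y1:
            raise ValueError("lower right corner must not precede upper left corner")


@dataclass(frozen=True)
class Detection:
    """One detected object: a numeric category plus its box."""
    category: int
    box: Box


def build_category_index(names):
    """Sequentially encode category names: the first new name gets 0, the next 1, ..."""
    index = {}
    for name in names:
        if name not in index:
            index[name] = len(index)
    return index


def make_detection(name, corners, category_index):
    """Build a Detection from a category name and ((x1, y1), (x2, y2)) corners."""
    (x1, y1), (x2, y2) = corners
    return Detection(category=category_index[name], box=Box(x1, y1, x2, y2))


# Usage: encode the categories seen in the data, then describe one object.
category_index = build_category_index(["person", "horse", "person", "hat"])
horse = make_detection("horse", ((10, 20), (110, 220)), category_index)
```

Here the category numbers depend only on the order in which names first appear, which is one straightforward reading of "sequentially encoding"; a real system would fix this mapping once for the whole dataset.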
