A Federated Learning Adaptive Gradient Quantization Method

A quantization method and adaptive technique, applied in the fields of ensemble learning, transmission mode adjustment, and network traffic/resource management. It addresses the large difference in communication time between fast and slow nodes, the resulting loss of model accuracy, and the slowdown of the training process.

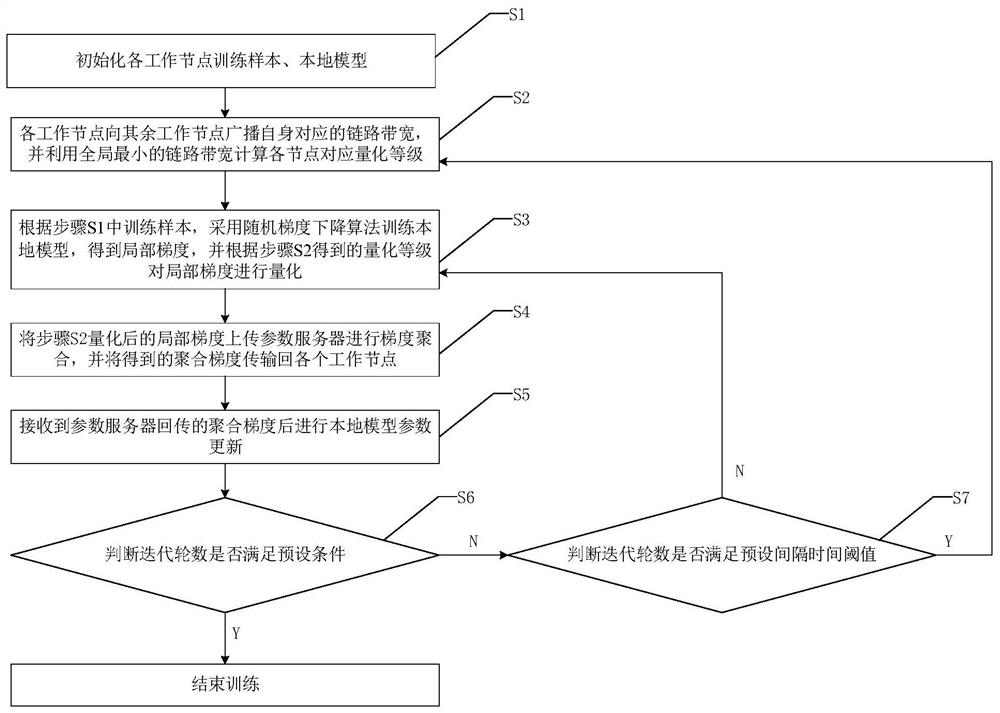

Detailed Description of the Embodiments

[0068]

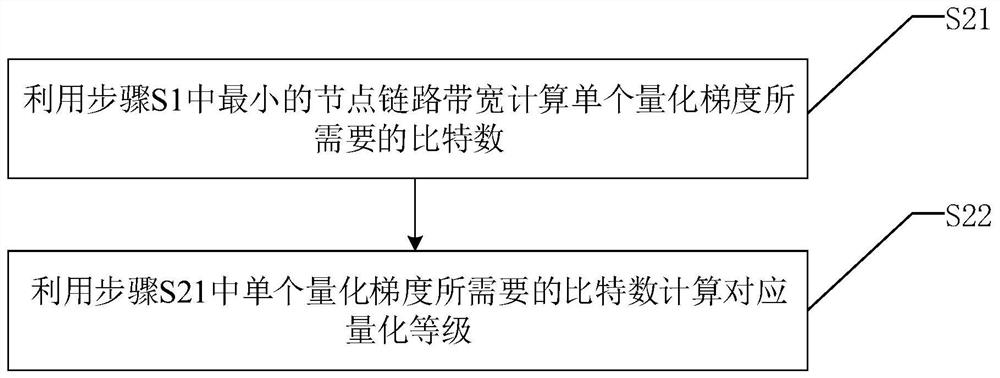

[0071]

[0072] where ⌈·⌉ denotes the round-up (ceiling) operation.

[0080]

[0083] Q

[0087]

[0090]

[0094]

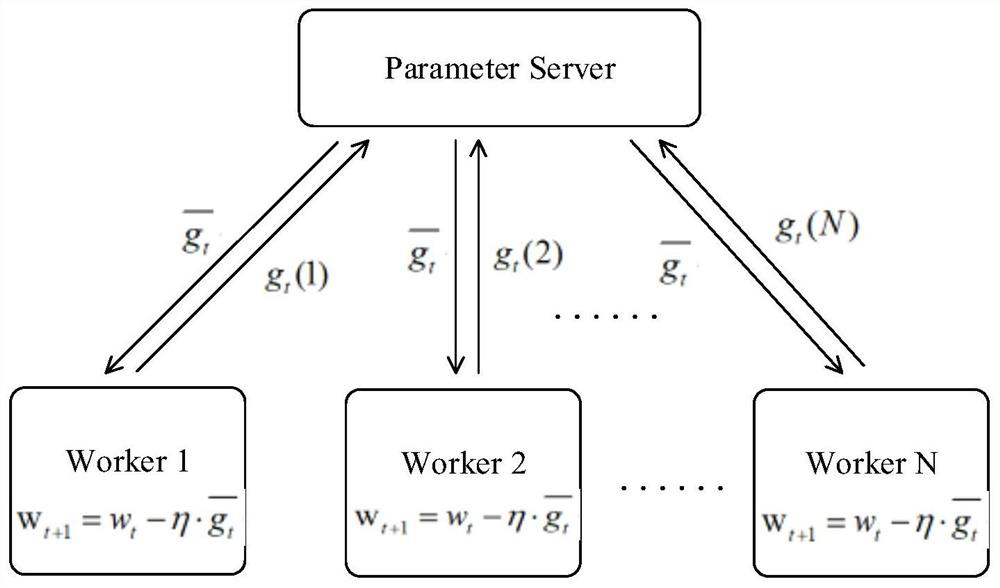

[0095] In this formula, the result is the aggregated gradient, N is the number of working nodes, k indexes the working nodes, and the remaining term is the gradient of working node k after quantization.
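
The aggregation step described in [0095] can be illustrated with a minimal sketch. It assumes the aggregated gradient is the element-wise average of the N quantized gradients, which is a reading of the symbol definitions rather than the formula itself, since the formula is not reproduced in the text. The function names `quantize_uniform` and `aggregate` are hypothetical, and the fixed-bit uniform quantizer is a generic stand-in, not the adaptive quantization scheme of the invention.

```python
from typing import List, Sequence


def quantize_uniform(grad: Sequence[float], bits: int) -> List[float]:
    """Hypothetical stand-in quantizer (not the patent's adaptive scheme).

    Scales the gradient by its largest magnitude and snaps each entry to
    the nearest of 2**bits - 1 evenly spaced levels per sign.
    """
    scale = max((abs(g) for g in grad), default=0.0)
    if scale == 0.0:
        # An all-zero (or empty) gradient needs no quantization.
        return [0.0 for _ in grad]
    levels = 2 ** bits - 1
    return [round(g / scale * levels) / levels * scale for g in grad]


def aggregate(quantized: Sequence[Sequence[float]]) -> List[float]:
    """Average the quantized gradients of N working nodes element-wise.

    Assumption: aggregation is a plain mean over the N nodes.
    """
    n = len(quantized)
    return [sum(column) / n for column in zip(*quantized)]


if __name__ == "__main__":
    # Two working nodes (N = 2), each holding a two-element gradient.
    worker_grads = [[0.30, -0.90], [0.50, 0.10]]
    quantized = [quantize_uniform(g, bits=4) for g in worker_grads]
    print(aggregate(quantized))
```

In this sketch a lower `bits` value shrinks each node's upload at the cost of a larger quantization error, which is the trade-off an adaptive scheme would tune per node.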

[0097]

[0103] The present invention is described with reference to the processes of methods, apparatus (systems), and computer program products according to embodiments of the present invention

[0107] Those of ordinary skill in the art will appreciate that the embodiments described herein are intended to assist the reader in understanding the present invention
