Adaptive gradient quantization method for federated learning

An adaptive quantization technique, applied in integrated learning, transmission mode adjustment, network traffic/resource management, etc., that addresses problems such as low-precision quantized gradients, aggravated stragglers, and decreased model accuracy.

Embodiment Construction

[0061] The specific embodiments of the present invention are described below so that those skilled in the art can understand the present invention, but it should be clear that the present invention is not limited to the scope of the specific embodiments. To those of ordinary skill in the art, various changes are obvious as long as they fall within the spirit and scope of the present invention as defined by the appended claims, and all inventions and creations that use the concept of the present invention fall within the scope of protection.

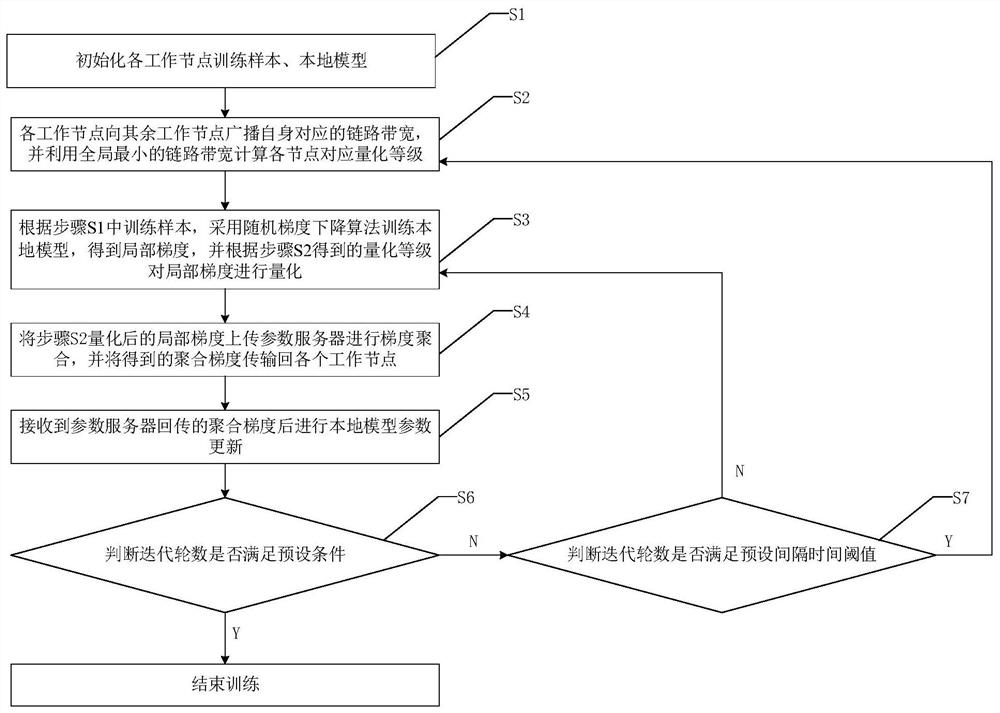

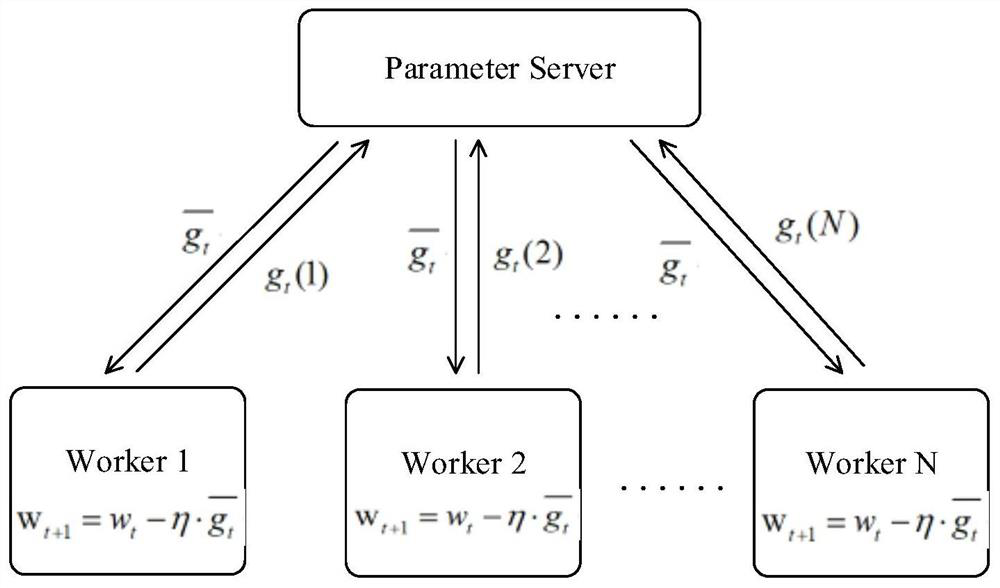

[0062] As shown in Figure 1 and Figure 2, the present invention provides an adaptive gradient quantization method comprising the following steps S1 to S7:

[0063] S1. Initialize training samples and local models of each working node;

[0064] In this embodiment, the data slices and local models acquired by each working node from the parameter server are initialized, wherein the data slices are used as training samples....
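
Step S1 can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the patented implementation: the names `WorkerNode` and `initialize_workers`, the strided slicing scheme, and the representation of a model as a plain list of weights are all hypothetical and do not come from the source text. The sketch shows a parameter server splitting a dataset into one data slice per working node and handing each node its own copy of the initial model.

```python
import copy
import random
from dataclasses import dataclass, field


@dataclass
class WorkerNode:
    """Hypothetical container for the state of one working node."""
    node_id: int
    training_samples: list = field(default_factory=list)
    local_model: list = field(default_factory=list)


def initialize_workers(dataset, global_model, num_workers, seed=0):
    """Give each worker one data slice and its own copy of the server model.

    Assumption: slices are disjoint and roughly equal in size. The source
    does not say how the parameter server partitions the data.
    """
    rng = random.Random(seed)
    shuffled = list(dataset)
    rng.shuffle(shuffled)

    workers = []
    for node_id in range(num_workers):
        # Strided slicing yields disjoint slices whose sizes differ by at most one.
        data_slice = shuffled[node_id::num_workers]
        # Deep copy so that local updates never modify the server's model.
        local_model = copy.deepcopy(global_model)
        workers.append(WorkerNode(node_id, data_slice, local_model))
    return workers


# Toy data: ten (input, label) pairs and a two-parameter model.
dataset = [(x, 2 * x) for x in range(10)]
global_model = [0.0, 0.0]
workers = initialize_workers(dataset, global_model, num_workers=3)
```

In this sketch the data slices acquired from the server serve directly as each node's training samples, matching the description in this embodiment. Everything else about the partitioning is an illustrative choice.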
